Dave Keenan & Douglas Blumeyer's guide to RTT/Alternative complexities

In this article we will investigate one of the most advanced tuning concepts that have been developed for regular temperaments: alternative complexities, that is, complexity functions other than log-product complexity.

This is article 8 of 9 in Dave Keenan & Douglas Blumeyer's guide to RTT, or "D&D's guide" for short. It assumes that you have read all of these prior articles in the series:

- 3. Tuning fundamentals: Learn about minimizing damage to target-intervals

- 5. Units analysis: To look at temperament and tuning in a new way, think about the units of the values in frequently used matrices

- 6. Tuning computation: For methods and derivations; learn how to compute tunings, and why these methods work

- 7. All-interval tuning schemes: The variety of tuning most commonly named and written about on the Xenharmonic wiki

In particular, most of these advanced tuning concepts have been developed in the context of all-interval tuning schemes, so a firm command of the ideas in that article will be very helpful for getting the most out of this article.

Introduction

So far, we've used three different functions for determining interval complexity:

- In an early article of this series, we introduced the most obvious possible function for determining the complexity of a rational number n/d such as those that represent JI intervals: product complexity, the product of the ratio's numerator and denominator.

- However, since that time, we've been doing most of our work using a variant on this function, not product complexity itself; this variant is the log-product complexity, which is the base-2 logarithm of product complexity.

- It wasn't until late in our all-interval tuning schemes article that we introduced our third complexity function, which arises in the explanation of a popular tuning scheme that we call minimax-ES; this uses the Euclideanized version of the log-product complexity.
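As a quick refresher, the three functions above can be sketched in a few lines of Python. This is only an illustration under our own assumptions: the function names and the trial-division helper are hypothetical conveniences, not part of any established library, and the Euclideanized version is taken to be the 2-norm of the log-prime-scaled prime exponents.

```python
from fractions import Fraction
from math import log2, sqrt

def prime_factorization(n):
    """Return {prime: exponent} for a positive integer n, by trial division."""
    factors = {}
    p = 2
    while p * p <= n:
        while n % p == 0:
            factors[p] = factors.get(p, 0) + 1
            n //= p
        p += 1
    if n > 1:
        factors[n] = factors.get(n, 0) + 1
    return factors

def product_complexity(ratio):
    """Numerator times denominator (Fraction keeps n/d in lowest terms)."""
    return ratio.numerator * ratio.denominator

def log_product_complexity(ratio):
    """Base-2 logarithm of the product complexity."""
    return log2(product_complexity(ratio))

def euclideanized_log_product_complexity(ratio):
    """2-norm of the prime exponents, each scaled by log2 of its prime."""
    # Since n and d share no primes, factoring n*d gives the absolute
    # values of the entries of the interval's vector.
    factors = prime_factorization(product_complexity(ratio))
    return sqrt(sum((log2(p) * e) ** 2 for p, e in factors.items()))

interval = Fraction(10, 9)
print(product_complexity(interval))                    # 90
print(log_product_complexity(interval))                # about 6.492
print(euclideanized_log_product_complexity(interval))  # about 4.055
```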

But these three functions are not the only ones that have been discussed in the context of temperament tuning theory. In this article we will be taking a brief look at several other functions which some theorists have investigated for possible use in the tuning of regular temperaments:

- Sum-of-prime-factors-with-repetition (sopfr) complexity

- Count-of-prime-factors-with-repetition (copfr) complexity

- Log-integer-limit-squared (lils) complexity

- Log-odd-limit-squared (lols) complexity

We'll also consider the Euclideanized versions of each of these four functions.
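To make the four new names concrete before we dig in, here is a minimal sketch of each one in its quotient-based n/d form, following the formulas given in the table later in this article. The function names and helpers are our own hypothetical choices for illustration; "rough(n, 3)" is implemented as the odd part of n.

```python
from fractions import Fraction
from math import log2

def prime_factors_with_repetition(n):
    """List the prime factors of a positive integer n, repeats included."""
    factors = []
    p = 2
    while p * p <= n:
        while n % p == 0:
            factors.append(p)
            n //= p
        p += 1
    if n > 1:
        factors.append(n)
    return factors

def odd_part(n):
    """Strip every factor of 2 from n, i.e. rough(n, 3)."""
    while n % 2 == 0:
        n //= 2
    return n

def sopfr_complexity(ratio):
    return sum(prime_factors_with_repetition(ratio.numerator * ratio.denominator))

def copfr_complexity(ratio):
    return len(prime_factors_with_repetition(ratio.numerator * ratio.denominator))

def lils_complexity(ratio):
    return log2(max(ratio.numerator, ratio.denominator) ** 2)

def lols_complexity(ratio):
    return log2(max(odd_part(ratio.numerator), odd_part(ratio.denominator)) ** 2)

interval = Fraction(10, 9)
print(sopfr_complexity(interval))  # 13
print(copfr_complexity(interval))  # 4
print(lils_complexity(interval))   # about 6.644
print(lols_complexity(interval))   # about 6.340
```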

This is not meant to be an exhaustive list of complexity functions that may be argued to have relevance for tuning theory. It seems like every few months someone suggests a new one. We prepared this article as a survey of these relatively well-established use cases in order to demystify what's out there already and to demonstrate some principles of this nook of the theory, in case this is an area our readers would like to explore further.

Not just for all-interval tuning schemes

These alternative complexity functions were all introduced to regular temperament theory through the use of all-interval tuning schemes, the kind that use a series of clever mathematical tricks in order to avoid the choice of a specific, finite set of intervals to be their tuning damage minimization targets.

Historically speaking, ordinary tuning schemes—those which do tune a specific finite target-interval set—have not involved complexity functions at all, not even log-product complexity. For the most part, anyway, users of ordinary tuning schemes have instead leaned on the careful choice of their target-intervals, achieving fine control of their tuning in this way, and it has been sufficient for them to define damage as the absolute error to their target-intervals. In other words, they have not felt the need to weight their chosen target-intervals' errors relative to each other according to the intervals' complexities.

The argument could be made, then, that this lack of control over the tuning via the choice of specific target-intervals is what caused all-interval tuning schemes to become such a breeding ground for interest in alternative complexity functions. With all-interval tuning schemes, this was the only other way they could achieve that sort of fine control.

And so, while all-interval tuning schemes are where these innovations in complexity functions occurred historically, we note that there's nothing restricting their use to all-interval tuning schemes. There's no reason why ordinary tuning schemes cannot take advantage of both opportunities for fine control that are available to them: target-interval set choice, and alternative complexity functions. In fact, we will later argue that some of these alternative complexity functions may actually be more appropriate to use for ordinary tuning schemes than they are for all-interval tuning schemes.

Naming

The use of alternative functions for determining complexity is what accounts for the profusion of eponymous tuning schemes you may have come across: Benedetti, Weil, Kees, and Frobenius. This is because some theorists have preferred to refer to these functions by a person's name—whether this is the discoverer of the generic math function, the first person to apply it to tuning, or just a person somehow associated with the function—perhaps because eponyms are distinctive and memorable. But if you're like us, the connection between these historical personages and these functions is not obvious, and so we can never seem to remember which one of these tuning schemes is which! We've had to rely on awkward mnemonics to keep the mapping from eponym to function straight. So if you're struggling with this as well, then we hope you'll appreciate and adopt our tuning scheme naming system which drops the eponyms in favor of the actual names of the math functions used, e.g. the minimax-sopfr-S tuning scheme minimaxes the sopfr-simplicity-weight damage to all intervals, where "sopfr" is a standard math function that you can look up, which stands for "sum of prime factors with repetitions" (isn't that better than "Benedetti Optimal"?). Because the math function names are descriptive, we encode meaning rather than encrypt it. And by naming them systematically, we isolate their differences through the structural patterns shared among each name, so we can contrast them at a glance.

This approach is also good because it can accommodate any complexity one might dream up; as long as you can name the complexity function, you can use its name in the name of the corresponding tuning scheme.

This is an extension of the tuning scheme naming system initially laid out at the end of the tuning fundamentals article, and so it also allows for easy comparison with those simpler, ordinary tuning schemes. For example, the (all-interval) minimax-sopfr-S scheme is very closely related to the TILT minimax-sopfr-S scheme, which is the same except that instead of using prime proxy targets in order to target all intervals, it specifically targets only the TILT. So basically the name breaks down into [optimization]-[complexity]-[damage weight slope]. The [complexity] part can be broken down further into [norm power if q ≠ 1]-[q = 1 complexity]. For example, in minimax-E-lils-S, minimax is our optimization, E-lils (Euclideanized log-integer-limit-squared) is our complexity function, and S (simplicity-weight) is our damage weight slope. The complexity can in turn be broken down into "E", which indicates a norm power other than the taxicab power q = 1 ("Euclideanized" means q = 2), and "lils", the log-integer-limit-squared, which is what the complexity function would be when using the taxicab norm.

Comparing overall complexity qualities

In this first section, we'll be comparing how these functions rank intervals differently. This information is important because if you don't feel that a function does an effective job at determining the relative complexity of musical intervals, then you probably shouldn't use this function as a complexity function in the service of tuning temperaments of those musical intervals! Well, you might choose to anyway, if you perceived a worthwhile tradeoff, such as computational expediency. We'll save discussion of that until later.

Complexity contours

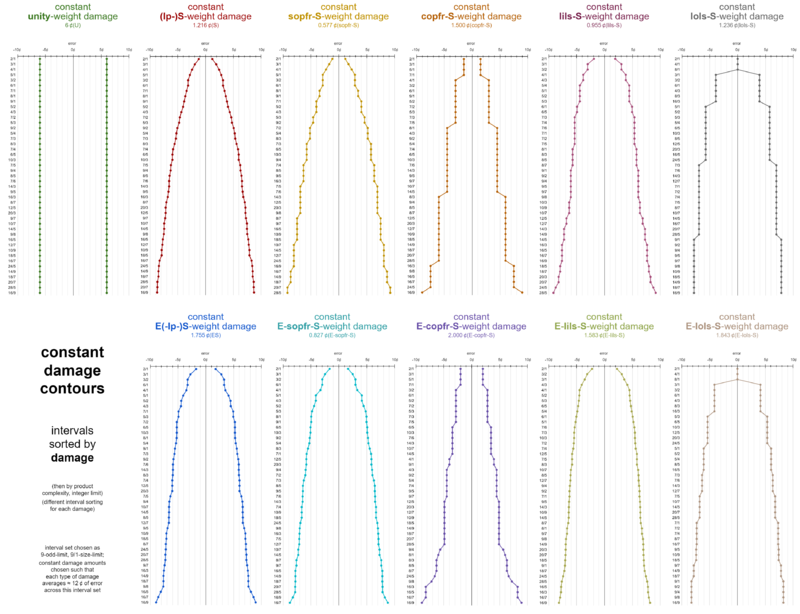

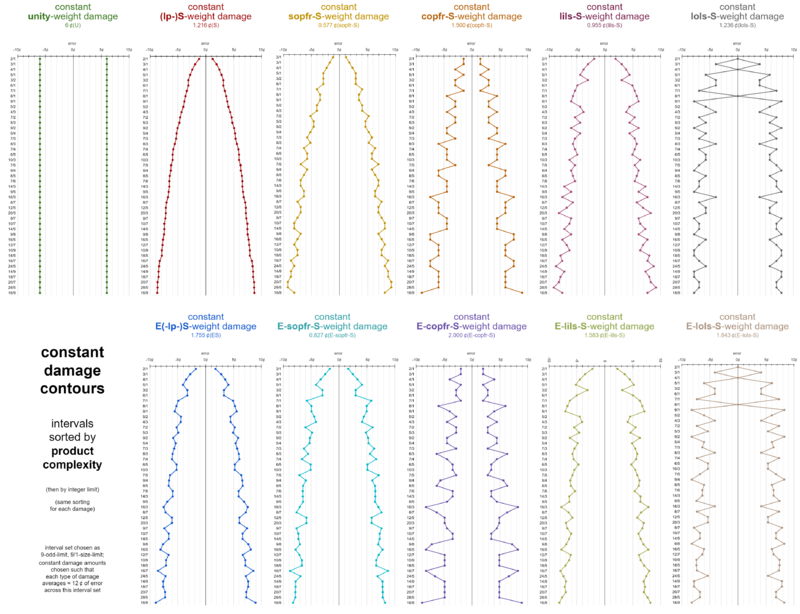

Here's a diagram comparing 10 of our 12 functions (not including "product" or its Euclideanized version; we'll explain why later).

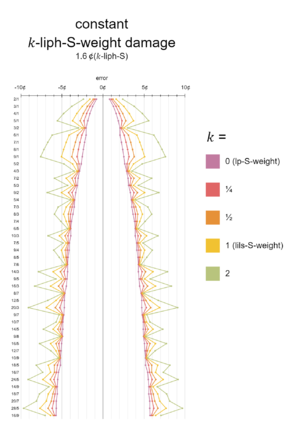

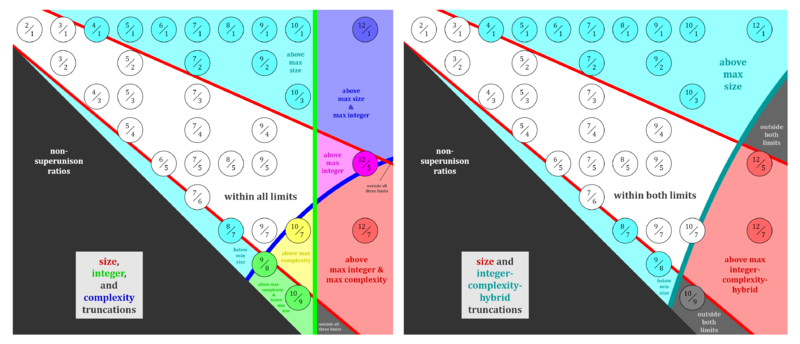

With this diagram, you can look at how each function sorts intervals and decide for yourself whether you think that's a reasonable order, i.e. whether it makes musical sense as a way you might weight errors, whether complexity-weighted or simplicity-weighted. (These happen to be shown simplicity-weighted.) And here's a diagram where they're all sorted according to product complexity. This one, then, comes at the problem from the assumption that product complexity is the "correct" way to sort them, so we can see in the textures of these curves to what extent they deviate from it. This is an assumption we mostly hold (details on where we dispute it come later). You can see how the Euclideanized versions are all worse (more jagged) than their corresponding original versions.

Any complexity function has a corresponding simplicity function which simply returns for any given interval the reciprocal of what the complexity function would have returned. Therefore, it is unnecessary to separately provide diagrams like these for simplicity functions. We can find the simplicity contours by turning these inside-out; the ranking won't change other than the order will exactly reverse, with big bars becoming small, and small bars becoming big. We simply reciprocate each individual value.
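The reciprocal relationship is easy to check numerically. The following sketch uses log-product complexity and a handful of intervals we picked arbitrarily for illustration; the function names are hypothetical. Note that the unison 1/1 has a log-product complexity of 0, so its simplicity is undefined and it is left out here.

```python
from math import log2

def log_product_complexity(n, d):
    return log2(n * d)

def simplicity(complexity_value):
    """A simplicity is just the reciprocal of the corresponding complexity."""
    return 1 / complexity_value

# An arbitrary sample of intervals, each given as (numerator, denominator).
intervals = [(10, 9), (3, 2), (9, 8), (5, 4)]

by_complexity = sorted(intervals, key=lambda nd: log_product_complexity(*nd))
by_simplicity = sorted(intervals, key=lambda nd: simplicity(log_product_complexity(*nd)))

print(by_complexity)  # simplest interval first
print(by_simplicity)  # the same ranking, exactly reversed
```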

Explaining the impact of Euclideanization

To review, Euclideanization is when we take a function which can be expressed in the form of a 1-norm (or equivalent summation form) and—preserving its Dual-norm prescalers, if any—change its norm power from 1 to 2.

The 2-norm is how we calculate distance in the physical reality you and I walk around in every day: 3-dimensional Euclidean space. So it may seem to be a natural choice for measuring distances between notes arranged on a JI lattice such as we might build out of rods and hubs like one might find in a chemistry modeling kit. (Perhaps some readers have actually done this as part of their learning process, or for experimenting with new scales.)

However, it is unfortunately not the case that calculating distances in this sort of space in this way gives a realistic notion of harmonic distance.

Let's imagine, for example, a three-dimensional JI lattice with prime 2 on one axis, prime 3 on another axis, and prime 5 on the remaining axis; so we choose a 3D example that's as similar to our physical space as can be. Now let's imagine the vectors for 9/8 and 10/9: [-3 2 0⟩ and [1 -2 1⟩, respectively, each one drawn out from the origin at unison 1/1 [0 0 0⟩ diagonally through the lattice. The norm for 9/8 is [math]\displaystyle{ \sqrt{|{-3}|^2 + |2|^2 + |0|^2} = \sqrt{13} ≈ 3.606 }[/math], while the norm for 10/9 is [math]\displaystyle{ \sqrt{|1|^2 + |{-2}|^2 + |1|^2} = \sqrt{6} ≈ 2.449 }[/math]. This suggests that 9/8 is a more complex interval than 10/9, a suggestion that most musicians would disagree with, given that 9/8 has a lower prime limit than 10/9 (3-limit vs. 5-limit) and also contains smaller numbers than 10/9.
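The two norms in this example can be reproduced with a small general-purpose helper. This is just a sketch; the `norm` function and variable names are our own, introduced for illustration.

```python
def norm(vector, power):
    """The p-norm of a vector for a finite power p >= 1."""
    return sum(abs(x) ** power for x in vector) ** (1 / power)

nine_eighths = [-3, 2, 0]  # 9/8 as a 5-limit vector
ten_ninths = [1, -2, 1]    # 10/9 as a 5-limit vector

print(norm(nine_eighths, 2))  # about 3.606, i.e. sqrt(13)
print(norm(ten_ninths, 2))    # about 2.449, i.e. sqrt(6)
```

So by this unscaled 2-norm, 9/8 does indeed come out as the more complex of the two.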

To gain some intuition for why the 2-norm gets this comparison wrong, begin by thinking about the space these vectors are traveling through diagonally. What is this space? Imagine stopping at some point along it, floating between nodes of the lattice—what would you say is the pitch at that point? Don't worry about these two questions too much; you probably won't be able to come up with good answers to them. But that's just the point. They don't really make sense here.

We can say that JI lattices like this are convenient tricks for helping us to visualize, understand, and analyze JI scales and chords. But there's no meaning to diagonal travel through this space. We can add a factor of 5 and remove a couple factors of 2 from 9/8 to find 10/9, but really those are three separate, discrete actions. When we travel from 9/8 to 10/9, we can only really think of doing it along the lattice edges, not between and through them. So while there's nothing stopping us from thinking about the space between nodes as if it were like the physical space we live in, and measuring it accordingly, in an important sense none of that space really exists, and the only distances in this harmonic world that are "real" are the distances straight along the edges of the lattice connecting each node to its neighbor nodes. In other words, diagonal travel between JI nodes is a lie. But we choose to accept this lie whenever we use a Euclideanized complexity.

Astute readers may have realized that even if we compare these same two intervals by a 1-norm, i.e. where we measure their distance from the unison at the origin by a series of separate straight segments along the edges between pitches, we still find that the norm for 9/8 is [math]\displaystyle{ \sqrt[1]{|{-3}|^1 + |2|^1 + |0|^1} = 5 }[/math], while the norm for 10/9 is [math]\displaystyle{ \sqrt[1]{|1|^1 + |{-2}|^1 + |1|^1} = 4 }[/math], that is, we still find that 9/8 is ranked more complex than 10/9. The remaining discrepancy is that we haven't scaled the lattice so that edge lengths are proportional to the sizes of the primes in pitch, which is to say, scaled each axis according to the logarithm of the prime that axis is meant to represent. If every occurrence of a prime 2 is the standard complexity point of 1, but prime 3 counts for [math]\displaystyle{ \log_2{3} \approx 1.585 }[/math] points, and prime 5 counts for [math]\displaystyle{ \log_2{5} \approx 2.322 }[/math] points, then we see 9/8 come out to [math]\displaystyle{ |{-3}\times1| + |2\times1.585| + |0\times2.322| = 6.170 }[/math] and 10/9 come out to [math]\displaystyle{ |1\times1| + |{-2}\times1.585| + |1\times2.322| = 6.492 }[/math], which finally gives us a reasonable comparison between these two intervals. This logarithmically-scaled 1-norm may be recognized as log-product complexity. As for the unscaled 1-norm, that's actually the count of prime factors with repetition, a different one of the functions we'll be looking at in more detail soon. You can find 9/8 and 10/9 in the previous section's diagrams and see how these are all ranked differently by the different complexities to confirm the points made right here.
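Here is a short sketch of that comparison, computing both the unscaled 1-norm and the log-prime-scaled 1-norm for the same two vectors. The names are hypothetical, and the prime list is fixed at the 5-limit purely to match the example.

```python
from math import log2

PRIMES = [2, 3, 5]  # the 5-limit lattice axes used in this example

def unscaled_one_norm(vector):
    """Count of prime factors with repetition: every lattice step costs 1."""
    return sum(abs(x) for x in vector)

def log_scaled_one_norm(vector):
    """Log-product complexity: a step along prime p costs log2(p)."""
    return sum(abs(log2(p) * x) for p, x in zip(PRIMES, vector))

nine_eighths = [-3, 2, 0]
ten_ninths = [1, -2, 1]

print(unscaled_one_norm(nine_eighths), unscaled_one_norm(ten_ninths))  # 5 4
print(log_scaled_one_norm(nine_eighths))  # about 6.170
print(log_scaled_one_norm(ten_ninths))    # about 6.492
```

Only the log-scaled version ranks 9/8 as simpler than 10/9.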

Why do we (or some theorists anyway) accept the lie of Euclideanized harmonic space sometimes? The answer is: computational expediency. It's not easier to compute individual 2-norms than it is to compute individual 1-norms, of course. However, it turns out that it is easier to compute the entire optimized tuning of a temperament when you pretend that Euclidean distance is a reasonable way to measure the complexity of an interval, than it is when you insist on measuring it using the truer along-the-edges distance. We can therefore think of Euclideanized distance as a decent approximation of the true harmonic distances, which some theorists decide is acceptable.

Our opinion? There are very few situations where the difference in computation speed for a tuning optimized with a 1-norm and a 2-norm will make a difference. Perhaps an automated script that runs upon loading a web page, where web page load times are famously important to get as lightning fast as possible. But in general, if you know how to compute the answer with a 1-norm, the computer is going to calculate the answer just about as fast as it would with a 2-norm approximation, so just use the 1-norm. The listeners of your music for eternities to come will thank you for giving your computer a few extra seconds to get the tunings as nice as you knew how to ask it for them.

Why we don't "maxize"

You may wonder why we have three norm powers of special interest—1, 2, and [math]\displaystyle{ \infty }[/math]—yet the only norms we're looking at are prescaled (or not prescaled) 1-norms (original) or 2-norms (Euclideanized). Why do we not consider any [math]\displaystyle{ \infty }[/math]-norms?

The short answer is that no theorist has yet demonstrated significant interest in them. And the reason for that is that they're even less harmonically realistic than Euclideanized norms, but without a computational expediency boost to counteract this. Here's a diagram that shows what happens to interval rankings with an [math]\displaystyle{ \infty }[/math]-norm, showing how they're even more scrambled than before:

And we can also think of this in terms of what measuring this distance would be like. Please review the way of conceptualizing maxization given here, and then realize that if the shortcoming of measuring harmonic distance with Euclideanized measurement is how it takes advantage of the not-truly-existent diagonal space between lattice nodes, then maxized measurement is the absolute extreme of that shortcoming, or should we say short-cutting, in that it allows you to go as diagonally as possible at all times for no cost.
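A tiny numerical illustration of that "free diagonal travel", using an unscaled lattice for simplicity (so this is a sketch of the idea only, not of any tuning scheme anyone actually uses; the names are our own):

```python
def maxized_norm(vector):
    """The infinity-norm: only the largest single-axis displacement counts."""
    return max(abs(x) for x in vector)

one_ninth = [0, -2, 0]   # 1/9: two steps along the prime 3 axis
ten_ninths = [1, -2, 1]  # 10/9: the same, plus a step each along 2 and 5

print(maxized_norm(one_ninth))   # 2
print(maxized_norm(ten_ninths))  # 2
```

The extra steps along the prime 2 and prime 5 axes cost nothing at all, because they fit "inside" the diagonal already paid for by the two steps along the prime 3 axis.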

Why we don't use any other norm power

Too complicated? Who cares? But seriously, no theorist has yet demonstrated interest in any norm power for complexities other than 1 or 2, whether [math]\displaystyle{ \infty }[/math] or otherwise.

Prescaling vs. pretransforming

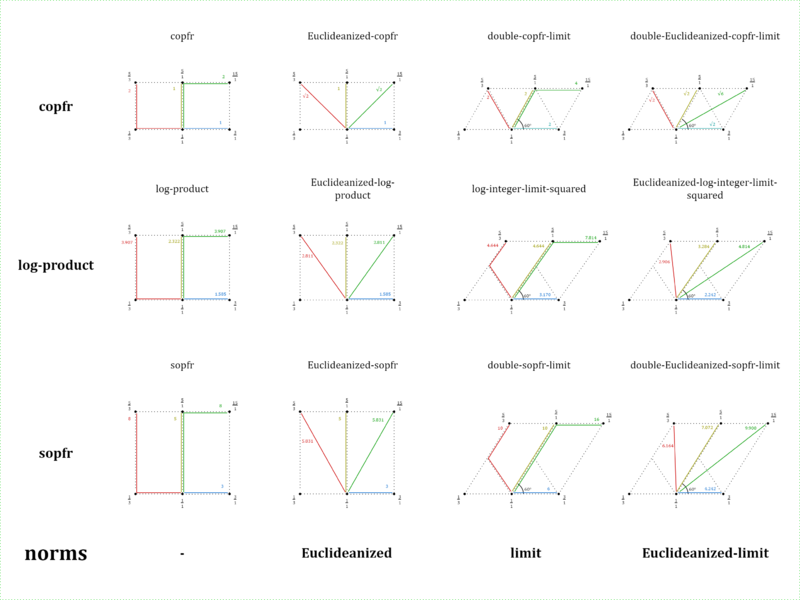

Some of the alternative complexities discussed in this article cannot be said to merely prescale the vectors they take the norm of. For example, the integer-limit type complexities have a shearing effect. So, we may tend to prefer the more general term pretransform in this article, and accordingly the complexity pretransformer X and inverse pretransformer [math]\displaystyle{ X^{-1} }[/math].
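To see why "prescaling" is too narrow a word, compare the log-product pretransform with the log-integer-limit-squared one. The sketch below assumes the forms given for these two complexities in the table in the next section: L scales each entry by the log of its prime, and ZL additionally appends the sum of those scaled entries. The function names are hypothetical, and the primes are fixed at the 5-limit for the example.

```python
from math import log2

PRIMES = [2, 3, 5]

def log_prime_prescale(vector):
    """Apply L: each entry is scaled by log2 of its own prime only."""
    return [log2(p) * x for p, x in zip(PRIMES, vector)]

def lils_pretransform(vector):
    """Apply ZL: prescale, then append the sum of the scaled entries."""
    scaled = log_prime_prescale(vector)
    # The appended entry depends on every input entry at once, so this
    # step cannot be written as a diagonal (pure scaling) matrix.
    return scaled + [sum(scaled)]

def one_norm(vector):
    return sum(abs(x) for x in vector)

ten_ninths = [1, -2, 1]
print(one_norm(log_prime_prescale(ten_ninths)))  # about 6.492 (lp-C)
print(one_norm(lils_pretransform(ten_ninths)))   # about 6.644 (lils-C)
```

The second result agrees with the quotient-based form of the same complexity for 10/9, the base-2 logarithm of 10 squared.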

The complexities

Here's a monster table, listing all the complexities we'll look at in this article.

| For ordinary tuning schemes and all-interval tuning schemes | For all-interval tuning schemes only | ||||||

|---|---|---|---|---|---|---|---|

| Interval complexity norm or function | Interval complexity function in quotient-based n/d form | Interval complexity norm in vector-based [math]\displaystyle{ \small \textbf{i} }[/math] form | Retuning magnitude norm (to be minimized) dual to the interval complexity norm; only has (co)vector-based 𝒓 form | All-interval tuning scheme | |||

| Name (bold = systematic) (italic = general math) (plain = RTT historical) | Formula | Example: [math]\displaystyle{ \small \frac{10}{9} }[/math] | Formula | Example: [1 -2 1⟩ | Formula | Example: ⟨1.617 -2.959 3.202] | Name (bold = systematic) (plain = RTT historical) |

| [math]\displaystyle{ \small \begin{align} &\text{copfr-C}\left(\dfrac{n}{d}\right) \\ &= \text{copfr}(nd) \\ \small &= \text{copfr}(n) + \text{copfr}(d) \end{align} }[/math] | [math]\displaystyle{ \small = \text{copfr}(10·9) \\ \small = \text{copfr}(2·5·3·3) \\ \small = 2^0 + 5^0 + 3^0 + 3^0 \\ \small = 4 }[/math] | [math]\displaystyle{ \small \begin{align} &\text{copfr-C}(\textbf{i}) \\ &= \left\|\textbf{i}\right\|_1 \\ \small &= \sqrt[1]{\strut \sum\limits_{n=1}^d \left|\mathrm{i}_n\right|^1} \\ \small &= \sqrt[1]{\strut \left|\mathrm{i}_1\right|^1 + \left|\mathrm{i}_2\right|^1 + \ldots + \left|\mathrm{i}_d\right|^1} \\ \small &= \left|\mathrm{i}_1\right| + \left|\mathrm{i}_2\right| + \ldots + \left|\mathrm{i}_d\right| \end{align} }[/math] | [math]\displaystyle{ \small = |1| + |{-2}| + |1| \\ \small = 4 }[/math] | [math]\displaystyle{ \small \begin{align} &\text{copfr-C}^{*}(𝒓) \\ &= \left\|𝒓\right\|_\infty \\ \small &= \sqrt[\infty]{\strut \sum\limits_{n=1}^d |r_n|^\infty} \\ \small &= \sqrt[\infty]{\strut \left|r_1\right|^\infty + \left|r_2\right|^\infty + \ldots + \left|r_d\right|^\infty} \\ \small &= \max\left(\left|r_1\right|, \left|r_2\right|, \ldots, \left|r_d\right|\right) \end{align} }[/math] | [math]\displaystyle{ \small = \max\left(|1.617|, |{-2.959}|, |3.202|\right) \\ \small = 3.202 }[/math] | ||

| [math]\displaystyle{ \small \begin{align} &\text{E-copfr-C}(\textbf{i}) \\ &= \left\|\textbf{i}\right\|_2 \\ \small &= \sqrt[2]{\strut \sum\limits_{n=1}^d \left|\mathrm{i}_n\right|^2} \\ \small &= \sqrt[2]{\strut \left|\mathrm{i}_1\right|^2 + \left|\mathrm{i}_2\right|^2 + \ldots + \left|\mathrm{i}_d\right|^2} \\ \small &= \sqrt{\strut \mathrm{i}_1^2 + \mathrm{i}_2^2 + \ldots + \mathrm{i}_d^2} \end{align} }[/math] | [math]\displaystyle{ \small = \sqrt{\strut 1^2 + ({-2})^2 + 1^2} \\ \small = \sqrt{\strut 1 + 4 + 1} \\ \small = \sqrt6 \\ \small \approx 2.449 }[/math] | [math]\displaystyle{ \small \begin{align} &\text{E-copfr-C}^{*}(𝒓) \\ &= \text{E-copfr-C}(𝒓) \\ \small &= \left\|𝒓\right\|_2 \\ \small &= \sqrt[2]{\strut \sum\limits_{n=1}^d \left|r_n\right|^2} \\ \small &= \sqrt{\strut r_1^2 + r_2^2 + \ldots + r_d^2} \end{align} }[/math] | [math]\displaystyle{ \small = \sqrt{\strut 1.617^2 + ({-2.959})^2 + 3.202^2} \\ \small \approx \sqrt{\strut 2.615 + 8.756 + 10.253} \\ \small = \sqrt{\strut 21.624} \\ \small \approx 4.650 }[/math] | ||||

| [math]\displaystyle{ \small \begin{align} &\text{lp-C}\left(\dfrac{n}{d}\right) \\ &= \log_2(nd) \\ \small &= \log_2(n) + \log_2(d) \end{align} }[/math] | [math]\displaystyle{ \small = \log_2(10·9) \\ \small = \log_2(90) \\ \small \approx 6.492 }[/math] | [math]\displaystyle{ \small \begin{align} &\text{lp-C}(\textbf{i}) \\ &= \left\|L\textbf{i}\right\|_1 \\ \small &= \sqrt[1]{\strut \sum\limits_{n=1}^d \left|\log_2{p_n} · \mathrm{i}_n\right|^1} \\ \small &= \sqrt[1]{\strut \left|\log_2{p_1} · \mathrm{i}_1\right|^1 + \left|\log_2{p_2} · \mathrm{i}_2\right|^1 + \ldots + \left|\log_2{p_d} · \mathrm{i}_d\right|^1} \\ \small &= \left|\log_2{p_1} · \mathrm{i}_1\right| + \left|\log_2{p_2} · \mathrm{i}_2\right| + \ldots + \left|\log_2{p_d} · \mathrm{i}_d\right| \end{align} }[/math] | [math]\displaystyle{ \small = \left|\log_2{2} · 1\right| + \left|\log_2{3} · {-2}\right| + \left|\log_2{5} · 1\right| \\ \small \approx 1 + 3.170 + 2.322 \\ \small = 6.492 }[/math] | [math]\displaystyle{ \small \begin{align} &\text{lp-C}^{*}(𝒓) \\ &= \left\|𝒓L^{-1}\right\|_\infty \\ \small &= \sqrt[\infty]{\strut \sum\limits_{n=1}^d \left|\frac{r_n}{\log_2{p_n}}\right|^\infty} \\ \small &= \sqrt[\infty]{\strut \left|\frac{r_1}{\log_2{p_1}}\right|^\infty + \left|\frac{r_2}{\log_2{p_2}}\right|^\infty + \ldots + \left|\frac{r_d}{\log_2{p_d}}\right|^\infty} \\ \small &= \max\left(\dfrac{\left|r_1\right|}{\log_2{p_1}}, \dfrac{\left|r_2\right|}{\log_2{p_2}}, \ldots, \dfrac{\left|r_d\right|}{\log_2{p_d}}\right) \end{align} }[/math] | [math]\displaystyle{ \small = \max\left(\dfrac{|1.617|}{\log_2{2}}, \dfrac{|{-2.959}|}{\log_2{3}}, \dfrac{|3.202|}{\log_2{5}}\right) \\ \small = \max\left(1.617, 1.867, 1.379\right) \\ \small = 1.867 }[/math] | ||

| [math]\displaystyle{ \small \begin{align} &\text{E-lp-C}(\textbf{i}) \\ &= \left\|L\textbf{i}\right\|_2 \\ \small &= \sqrt[2]{\strut \sum\limits_{n=1}^d \left|\log_2{p_n} · \mathrm{i}_n\right|^2} \\ \small &= \sqrt[2]{\strut \left|\log_2{p_1} · \mathrm{i}_1\right|^2 + \left|\log_2{p_2} · \mathrm{i}_2\right|^2 + \ldots + \left|\log_2{p_d} · \mathrm{i}_d\right|^2} \\ \small &= \sqrt{\strut (\log_2{p_1} · \mathrm{i}_1)^2 + (\log_2{p_2} · \mathrm{i}_2)^2 + \ldots + (\log_2{p_d} · \mathrm{i}_d)^2} \end{align} }[/math] | [math]\displaystyle{ \small = \sqrt{\strut \left(\log_2{2} · 1\right)^2 + \left(\log_2{3} · {-2}\right)^2 + \left(\log_2{5} · 1\right)^2} \\ \small \approx \sqrt{\strut 1 + 10.048 + 5.391} \\ \small = \sqrt{\strut 16.439} \\ \small \approx 4.055 }[/math] | [math]\displaystyle{ \small \begin{align} &\text{E-lp-C}^{*}(𝒓) \\ &= \left\|𝒓L^{-1}\right\|_2 \\ \small &= \sqrt[2]{\strut \sum\limits_{n=1}^d \left|\frac{r_n}{\log_2{p_n}}\right|^2} \\ \small &= \sqrt[2]{\strut \left|\frac{r_1}{\log_2{p_1}}\right|^2 + \left|\frac{r_2}{\log_2{p_2}}\right|^2 + \ldots + \left|\frac{r_d}{\log_2{p_d}}\right|^2} \\ \small &= \sqrt{\strut (\frac{r_1}{\log_2{p_1}})^2 + (\frac{r_2}{\log_2{p_2}})^2 + \ldots + (\frac{r_d}{\log_2{p_d}})^2} \end{align} }[/math] | [math]\displaystyle{ \small = \sqrt{\strut \left(\frac{1.617}{\log_2{2}}\right)^2 + \left(\frac{{-2.959}}{\log_2{3}}\right)^2 + \left(\frac{3.202}{\log_2{5}}\right)^2} \\ \small \approx \sqrt{\strut 2.615 + 3.485 + 1.902} \\ \small = \sqrt{\strut 8.002} \\ \small \approx 2.829 }[/math] | ||||

| [math]\displaystyle{ \small \text{lils-C}\left(\dfrac{n}{d}\right) \\ = \log_2\left(\max(n, d)^2\right) }[/math] | [math]\displaystyle{ \small = \log_2\left(\max(10, 9)^2\right) \\ \small = \log_2(10·10) \\ \small = \log_2(100) \\ \small \approx 6.644 }[/math] | ||||||

| [math]\displaystyle{ \small \text{lils-C}\left(\dfrac{n}{d}\right) \\ = \log_2{nd} + \left|\log_2{\frac{n}{d}}\right| }[/math] | [math]\displaystyle{ \small = \log_2(10·9) + \left|\log_2(\frac{10}{9})\right| \\ \small \approx 6.492 + |0.152| \\ \small = 6.644 }[/math] | [math]\displaystyle{ \small \begin{align} &\text{lils-C}(\textbf{i}) \\ &= \left\|ZL\textbf{i}\right\|_1 \\ \small &= \left\| \; \left[ \; L\textbf{i} \; \vert \; \smallLLzigzag L\textbf{i} \smallRRzigzag_1 \; \right] \; \right\|_1 \\ \small &= \sum\limits_{n=1}^d\left|\log_2{p_n} · \mathrm{i}_n\right| + \left|\sum\limits_{n=1}^d\left(\log_2{p_n} · \mathrm{i}_n\right)\right| \\ \small &= \left|\log_2{p_1} · \mathrm{i}_1\right| + \left|\log_2{p_2} · \mathrm{i}_2\right| + \ldots + \left|\log_2{p_d} · \mathrm{i}_d\right| \; + \\ \small & \quad\quad\quad \left|\left(\log_2{p_1} · \mathrm{i}_1\right) + \left(\log_2{p_2} · \mathrm{i}_2\right) + \ldots + \left(\log_2{p_d} · \mathrm{i}_d\right)\right| \end{align} }[/math] | [math]\displaystyle{ \small \begin{align} &= \left|\log_2{2} · 1\right| + \left|\log_2{3} · {-2}\right| + \left|\log_2{5} · 1\right| \; + \\ \small & \quad\quad\quad \left|\left(\log_2{2} · 1\right) + \left(\log_2{3} · {-2}\right) + \left(\log_2{5} · 1\right)\right| \\[5pt] \small &= |1| + |{-3.170}| + |2.322| + \left|1 + {-3.170} + 2.322\right| \\ \small &= 1 + 3.170 + 2.322 + 0.152 \\ \small &\approx 6.644 \end{align} }[/math] | [math]\displaystyle{ \small \begin{align} &\text{lils-C}^{*}(\textbf{𝒓}) \\ &= \max\left( \frac{r_1}{\log_2{p_1}}, \frac{r_2}{\log_2{p_2}}, \ldots, \frac{r_d}{\log_2{p_d}}, 0\right) \; - \\ \small & \quad\quad\quad \min\left(\frac{r_1}{\log_2{p_1}}, \frac{r_2}{\log_2{p_2}}, \ldots, \frac{r_d}{\log_2{p_d}}, 0\right) \end{align} }[/math] | [math]\displaystyle{ \small = \max\left(\frac{1.617}{\log_2{2}}, \frac{{-2.959}}{\log_2{3}}, \frac{3.202}{\log_2{5}}, 0\right) \; - \\ \small \quad \min\left(\frac{1.617}{\log_2{2}}, \frac{{-2.959}}{\log_2{3}}, \frac{3.202}{\log_2{5}}, 0\right) \\ \small \approx \max\left(1.617, {-1.867}, 1.379, 0\right) \; - \\ \small \quad \min\left(1.617, {-1.867}, 1.379, 0\right) \\ \small = 1.617 - ({-1.867}) \\ \small = 3.484 }[/math] | ||

| [math]\displaystyle{ \small \begin{align} &\text{E-lils-C}(\textbf{i}) \\ &= \left\| ZL\textbf{i} \right\|_2 \\ \small &= \left\| \; \left[ \; L\textbf{i} \; \vert \; \smallLLzigzag L\textbf{i} \smallRRzigzag_1 \; \right] \; \right\|_2 \\ \small &= \sqrt{ \sum\limits_{n=1}^d\left|\log_2{p_n} · \mathrm{i}_n\right|^2 + \left|\sum\limits_{n=1}^d\left(\log_2{p_n} · \mathrm{i}_n\right)\right|^2 } \\ \small &= \sqrt{\strut \left|\log_2{p_1} · \mathrm{i}_1\right|^2 + \left|\log_2{p_2} · \mathrm{i}_2\right|^2 + \ldots + \left|\log_2{p_d} · \mathrm{i}_d\right|^2 \; +} \hspace{1mu} \overline{\rule[11pt]{0pt}{0pt} } \hspace{1mu} \overline{\rule[11pt]{0pt}{0pt} } \hspace{1mu} \overline{\rule[11pt]{0pt}{0pt} } \\ \small & \quad\quad\quad \overline{\rule[12pt]{0pt}{0pt} } \hspace{1mu} \overline{\rule[12pt]{0pt}{0pt} } \hspace{1mu} \overline{\rule[12pt]{0pt}{0pt} } \hspace{1mu} \overline{\left|\left(\log_2{p_1} · \mathrm{i}_1\right) + \left(\log_2{p_2} · \mathrm{i}_2\right) + \ldots + \left(\log_2{p_d} · \mathrm{i}_d\right)\right|^2} \end{align} }[/math] | [math]\displaystyle{ \small \begin{align} &= \sqrt{\strut \left|\log_2{2} · 1\right|^2 + \left|\log_2{3} · {-2}\right|^2 + \left|\log_2{5} · 1\right|^2 \; +} \hspace{1mu} \overline{\rule[11pt]{0pt}{0pt} } \hspace{1mu} \overline{\rule[11pt]{0pt}{0pt} } \hspace{1mu} \overline{\rule[11pt]{0pt}{0pt} } \\ \small & \quad\quad\quad \overline{\rule[12pt]{0pt}{0pt} } \hspace{1mu} \overline{\rule[12pt]{0pt}{0pt} } \hspace{1mu} \overline{\rule[12pt]{0pt}{0pt} } \hspace{1mu} \overline{\left|\left(\log_2{2} · 1\right) + \left(\log_2{3} · {-2}\right) + \left(\log_2{5} · 1\right)\right|^2} \\[5pt] \small &\approx \sqrt{\strut 1^2 + ({-3.170})^2 + 2.322^2 + \left|1 + {-3.170} + 2.322\right|^2} \\ \small &= \sqrt{\strut 1 + 10.048 + 5.391 + 0.023} \\ \small &= \sqrt{\strut 16.462} \\ \small &\approx 4.057 \end{align} }[/math] | (unsure; handled via matrix augmentations) | |||||

| [math]\displaystyle{ \begin{align} \small &\text{lols-C}\left(\dfrac{n}{d}\right) \\ &= \log_2\left(\max\left(\right.\right. &&\text{rough}(n, 3), \\ &&&\left.\left.\text{rough}(d, 3)\right)^2\right) \end{align} }[/math] | [math]\displaystyle{ \small = \log_2\left(\max(5, 9)^2\right) \\ \small = \log_2{9·9} \\ \small = \log_2{81} \\ \small \approx 6.340 }[/math] |

| |||||

| [math]\displaystyle{ \small \text{lols-C}\left(\dfrac{n}{d}\right) \\ = \log_2{\text{rough}(nd, 3)} + \left|\log_2{\frac{\text{rough}(n,3)}{\text{rough}(d,3)}}\right| }[/math] | [math]\displaystyle{ \small = \log_2(5 · 9) + \left|\log_2\left(\frac{5}{9}\right)\right| \\ \small \approx 5.492 + \left|{-0.848}\right| \\ \small = 6.340 }[/math] | [math]\displaystyle{ \small \begin{align} &\text{lols-C}(\textbf{i}) \\ &= \sum\limits_{n=2}^d\left|\log_2{p_n} · \mathrm{i}_n\right| + \left|\sum\limits_{n=2}^d\left(\log_2{p_n} · \mathrm{i}_n\right)\right| \\ \small &= |\log_2{p_2} · \mathrm{i}_2| + \ldots + |\log_2{p_d} · \mathrm{i}_d| \; + \\ \small & \quad\quad\quad \left|\left(\log_2{p_2} · \mathrm{i}_2\right) + \ldots + \left(\log_2{p_d} · \mathrm{i}_d\right)\right| \end{align} }[/math] | [math]\displaystyle{ \small \begin{align} &= \left|\log_2{3} · {-2}\right| + \left|\log_2{5} · 1\right| \; + \\ \small & \quad\quad\quad \left|\left(\log_2{3} · {-2}\right) + \left(\log_2{5} · 1\right)\right| \\ \small &\approx 3.170 + 2.322 + \left|{-3.170} + 2.322\right| \\ \small &= 6.340 \end{align} }[/math] | (unsure; handled via matrix augmentations) | |||

| [math]\displaystyle{ \small \begin{align} &\text{E-lols-C}(\textbf{i}) \\ &= \sqrt{ \sum\limits_{n=2}^d\left|\log_2{p_n} · \mathrm{i}_n\right|^2 + \left|\sum\limits_{n=2}^d\left(\log_2{p_n} · \mathrm{i}_n\right)\right|^2 } \\ \small &= \sqrt{\strut \left|\log_2{p_2} · \mathrm{i}_2\right|^2 + \ldots + \left|\log_2{p_d} · \mathrm{i}_d\right|^2 \; +} \hspace{1mu} \overline{\rule[11pt]{0pt}{0pt} } \hspace{1mu} \overline{\rule[11pt]{0pt}{0pt} } \hspace{1mu} \overline{\rule[11pt]{0pt}{0pt} } \\ \small & \quad\quad\quad \overline{\rule[12pt]{0pt}{0pt} } \hspace{1mu} \overline{\rule[12pt]{0pt}{0pt} } \hspace{1mu} \overline{\rule[12pt]{0pt}{0pt} } \hspace{1mu} \overline{\left|\left(\log_2{p_2} · \mathrm{i}_2\right) + \ldots + \left(\log_2{p_d} · \mathrm{i}_d\right)\right|^2} \end{align} }[/math] | [math]\displaystyle{ \small \begin{align} &= \sqrt{\strut \left|\log_2{3} · {-2}\right|^2 + \left|\log_2{5} · 1\right|^2 \; +} \hspace{1mu} \overline{\rule[11pt]{0pt}{0pt} } \hspace{1mu} \overline{\rule[11pt]{0pt}{0pt} } \hspace{1mu} \overline{\rule[11pt]{0pt}{0pt} } \\ \small & \quad\quad\quad \overline{\rule[12pt]{0pt}{0pt} } \hspace{1mu} \overline{\rule[12pt]{0pt}{0pt} } \hspace{1mu} \overline{\rule[12pt]{0pt}{0pt} } \hspace{1mu} \overline{\left|\left(\log_2{3} · {-2}\right) + \left(\log_2{5} · 1\right)\right|^2} \\[5pt] \small &\approx\sqrt{\strut ({-3.170})^2 + 2.322^2 + \left|{-3.170} + 2.322\right|^2} \\ \small &= \sqrt{\strut 10.048 + 5.391 + 0.719} \\ \small &= \sqrt{\strut 16.158} \\ \small &\approx 4.020 \end{align} }[/math] | (unsure; handled via matrix augmentations) |

| ||||

| [math]\displaystyle{ \small \text{prod-C}\left(\dfrac{n}{d}\right) \\ = nd }[/math] | [math]\displaystyle{ \small = 10·9 \\ \small = 90 }[/math] | [math]\displaystyle{ \small \begin{align} &\text{prod-C}(\textbf{i}) \\ &= \prod\limits_{n=1}^d{p_n^{\left|\mathrm{i}_n\right|}} \\ \small &= p_1^{|\mathrm{i}_1|} \times p_2^{\left|\mathrm{i}_2\right|} \times \ldots \times p_d^{\left|\mathrm{i}_d\right|} \end{align} }[/math] | [math]\displaystyle{ \small = 2^{|1|} \times 3^{|{-2}|} \times 5^{|1|} \\ \small = 2 \times 9 \times 5 \\ \small = 90 }[/math] | ||||

| [math]\displaystyle{ \small \begin{align} &\text{sopfr-C}\left(\dfrac{n}{d}\right) \\ &= \text{sopfr}(nd) \\ \small &= \text{sopfr}(n) + \text{sopfr}(d) \end{align} }[/math] | [math]\displaystyle{ \small = \text{sopfr}(10·9) \\ \small = \text{sopfr}(2·5·3·3) \\ \small = 2^1+ 5^1 + 3^1 + 3^1 \\ \small = 13 }[/math] | [math]\displaystyle{ \small \begin{align} &\text{sopfr-C}(\textbf{i}) \\ &= \left\|\text{diag}(𝒑)\textbf{i}\right\|_1 \\ \small &= \sqrt[1]{\strut \sum\limits_{n=1}^d\left|p_n · \mathrm{i}_n\right|^1 } \\ \small &= \sqrt[1]{\strut \left|p_1 · \mathrm{i}_1\right|^1 + \left|p_2 · \mathrm{i}_2\right|^1 + \ldots + \left|p_d · \mathrm{i}_d\right|^1} \\ \small &= \left|p_1\mathrm{i}_1\right| + \left|p_2\mathrm{i}_2\right| + \ldots + \left|p_d\mathrm{i}_d\right| \end{align} }[/math] | [math]\displaystyle{ \small = |2 · 1| + |3 · {-2}| + |5 · 1| \\ \small = 2 + 6 + 5 \\ \small = 13 }[/math] | [math]\displaystyle{ \small \begin{align} &\text{sopfr-C}^{*}(\textbf{𝒓}) \\ &= \left\|𝒓\,\text{diag}(𝒑)^{-1}\right\|_\infty \\ \small &= \sqrt[\infty]{\strut \sum\limits_{n=1}^d \left|\frac{r_n}{p_n}\right|^\infty} \\ \small &= \sqrt[\infty]{\strut \left|\frac{r_1}{p_1}\right|^\infty + \left|\frac{r_2}{p_2}\right|^\infty + \ldots + \left|\frac{r_d}{p_d}\right|^\infty} \\ \small &= \max\left(\frac{\left|r_1\right|}{p_1}, \frac{\left|r_2\right|}{p_2}, \ldots, \frac{\left|r_d\right|}{p_d}\right) \end{align} }[/math] | [math]\displaystyle{ \small = \max\left(\frac{|1.617|}{2}, \frac{|{-2.959}|}{3}, \frac{|3.202|}{5}\right) \\ \small \approx \max(0.809, 0.986, 0.640) \\ \small = 0.986 }[/math] | ||

| [math]\displaystyle{ \small \begin{align} &\text{E-sopfr-C}(\textbf{i}) \\ &= \left\|\text{diag}(𝒑)\textbf{i}\right\|_2 \\ \small &= \sqrt[2]{\strut \sum\limits_{n=1}^d \left|p_n · \mathrm{i}_n\right|^2} \\ \small &= \sqrt[2]{\strut \left|p_1 · \mathrm{i}_1\right|^2 + \left|p_2 · \mathrm{i}_2\right|^2 + \ldots + \left|p_d · \mathrm{i}_d\right|^2} \\ \small &= \sqrt{\strut \left(p_1\mathrm{i}_1\right)^2 + \left(p_2\mathrm{i}_2\right)^2 + \ldots + \left(p_d\mathrm{i}_d\right)^2} \end{align} }[/math] | [math]\displaystyle{ \small = \sqrt{\strut |2 · 1|^2 + |3 · {-2}|^2 + |5 · 1|^2} \\ \small = \sqrt{\strut 2^2 + 6^2 + 5^2} \\ \small = \sqrt{\strut 4 + 36 + 25} \\ \small = \sqrt{\strut 65} \\ \small \approx 8.062 }[/math] | [math]\displaystyle{ \small \begin{align} &\text{E-sopfr-C}^{*}(𝒓) \\ &= \left\|𝒓\,\text{diag}(𝒑)^{-1}\right\|_2 \\ \small &= \sqrt[2]{\strut \sum\limits_{n=1}^d \left|\frac{r_n}{p_n}\right|^2} \\ \small &= \sqrt[2]{\strut \left|\frac{r_1}{p_1}\right|^2 + \left|\frac{r_2}{p_2}\right|^2 + \ldots + \left|\frac{r_d}{p_d}\right|^2} \\ \small &= \sqrt{\strut \left(\frac{r_1}{p_1}\right)^2 + \left(\frac{r_2}{p_2}\right)^2 + \ldots + \left(\frac{r_d}{p_d}\right)^2} \end{align} }[/math] | [math]\displaystyle{ \small = \sqrt{\strut \left(\frac{1.617}{2}\right)^2 + \left(\frac{{-2.959}}{3}\right)^2 + \left(\frac{3.202}{5}\right)^2} \\ \small \approx \sqrt{\strut 0.654 + 0.973 + 0.410} \\ \small = \sqrt{\strut 2.037} \\ \small \approx 1.427 }[/math] | ||||

In the next section of this article, we will be taking a look at each of these complexities individually. Formulas, examples, and associated tuning schemes can be found in the reference table above; below we'll go through each complexity in detail, disambiguating any variable names in the table, suggesting helpful ways to think about the relationships between their formulas, and explaining why some theorists may favor them for regular temperament tuning. Before we look at the complexity functions which we haven't discussed before, though, we'll first review, in this same level of detail, the few that we have already used. This will help us set the stage properly for the newer ones. We'll actually start with the log-product complexity, looking at it before the product complexity, even though the product complexity is conceptually simpler and technically came up first in our article series. We're doing this for much the same reason that we adopted the log-product complexity as our default complexity in all our theory thus far: it's easier to work with given our linear algebra (LA) setup for RTT.

This section is structured so that we follow each function's discussion with a discussion of its Euclideanized version, so we end up with an alternating pattern of original and Euclideanized function discussions.

We've adopted the notational convention of marking any function that is being applied to compute complexity with the suffix [math]\displaystyle{ \text{-C} }[/math]. For example, [math]\displaystyle{ \text{sopfr}() }[/math] is simply the mathematical function "sum of prime factors with repetition", while [math]\displaystyle{ \text{sopfr-C}() }[/math] is that function applied to compute complexity. Any such complexity function has a corresponding simplicity function which returns its reciprocal values, and which is notated instead with the [math]\displaystyle{ \text{-S} }[/math] suffix.

Previously we've referred to a norm form of a complexity as having a dual norm as well as an inverse prescaler. For the remainder of this article, we will be switching nomenclature to inverse pre-transformer for the latter, on account of the fact that some of the alternative complexity functions described here have norm forms which use a non-rectangular matrix in that position, and so it would be somewhat of a misnomer to consider them merely pre-scalers in general.

As a final note before we begin: for purposes of this discussion, a "vector-based form" of a complexity's formula refers specifically to the prime-count vectors that we use for JI ratios; the notion of a "vector-based form" of a mathematical function defined for quotients, i.e. in terms of a numerator and a denominator, has no meaning unless the nature of that vector is specified, and in our case we specify it as a vectorization of the quotients' prime factorization. So for purposes of this article, when we see the quotient n/d, this refers to the same interval as the vector [math]\displaystyle{ \textbf{i} }[/math].

Log-product

Log-product complexity [math]\displaystyle{ \text{lp-C}() }[/math] was famously used by Paul Erlich in the simplicity-weight damage of the "Tenney OPtimal" tuning scheme he introduced in his Middle Path paper, though he referred to it there as "harmonic distance", that being James Tenney's term for it, and Tenney being the first person to apply it to tuning theory (indeed this function is still known to some theorists as "Tenney height"). We still consider this tuning scheme to be the gold standard among the all-interval tuning schemes, and this reflects in how our tuning scheme naming system gives it the simplest name it is capable of giving: "minimax-S". The use of [math]\displaystyle{ \text{lp-C}() }[/math] for the minimax-S tuning scheme is discussed at length in the previous article of this series on all-interval tuning schemes, but it can be used to weight error in ordinary tuning schemes too.

Default status

Log-product complexity was chosen by us (Douglas and Dave) to be our default complexity function. By this it is meant that if one says "miniaverage-C" tuning, the assumption is that the complexity-weight damage is log-product complexity; there is no need to spell out "miniaverage-lp-C". It was chosen as the default for its excellent balance of easy-to-understand and easy-to-compute qualities, while doing a good job capturing the reality of harmonic complexity. For a more in-depth defense of this choice and exploration of other possibilities, see the later section A defense of our choice of log product as our default complexity.

Formulas

The quotient form of the log-product complexity function is the base-2 logarithm of the product of a quotient's numerator and denominator:

[math]\displaystyle{ \text{lp-C}\left(\frac{n}{d}\right) = \log_2(n·d) }[/math]

Or, equivalently, the sum of the base-2 logarithm of each separately:

[math]\displaystyle{ \text{lp-C}\left(\frac{n}{d}\right) = \log_2(n) + \log_2(d) }[/math]

The equivalent vector-based form of the log-product complexity function, to be called on the equivalent vector [math]\displaystyle{ \textbf{i} }[/math], is given as a norm by:

[math]\displaystyle{ \text{lp-C}(\textbf{i}) = \left\|L\textbf{i}\right\|_1 }[/math]

where L is the log-prime matrix, a diagonalized list of the base-2 logs of each prime.

The vector-based form may also be understood as a summation:

[math]\displaystyle{

\begin{align}

\text{lp-C}(\textbf{i}) &= \sqrt[1]{\strut \sum\limits_{n=1}^d \log_2{p_n}\left|\mathrm{i}_n\right|^1} \\

&= \sum\limits_{n=1}^d \log_2{p_n}\left|\mathrm{i}_n\right|

\end{align}

}[/math]

where p_n is the nth prime (assuming this is a temperament of a standard domain, i.e. its basis is a prime limit; in general, p_n is the nth entry of whichever prime list 𝒑 your temperament is working with, or in the most general case the nth entry of a basis element list). We also note that n is not the numerator and d is not the denominator here; their reappearance together is a coincidence. What n is here is a generic summation index that increments each step of the sum (matching the nth prime up with the nth entry of [math]\displaystyle{ \textbf{i} }[/math]), and d is the dimensionality of [math]\displaystyle{ \textbf{i} }[/math] (and the temperament in general).

Either way of writing this vector-based form—norm, or summation—may be expanded to:

[math]\displaystyle{

\text{lp-C}(\textbf{i}) = \left|\log_2{p_1} · \mathrm{i}_1\right| + \left|\log_2{p_2} · \mathrm{i}_2\right| + \ldots + \left|\log_2{p_d} · \mathrm{i}_d\right|

}[/math]

In order to understand how the vector-based form is equivalent to the quotient form, please refer to our explanation using the logarithmic identities [math]\displaystyle{ \log(a·b) = \log(a) + \log(b) }[/math] and [math]\displaystyle{ \log\left(a^c\right) = c·\log(a) }[/math] in the all-interval tuning schemes article.
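As a quick numerical check of that equivalence, here is a minimal Python sketch. It assumes a 5-limit prime list and reuses the reference table's example interval 10/9, i.e. the vector [1 -2 1⟩; the function names are our own, chosen only for illustration.

```python
import math

PRIMES = (2, 3, 5)  # assumed 5-limit prime list p


def lp_c_quotient(n, d):
    """Quotient form: the base-2 log of numerator times denominator."""
    return math.log2(n * d)


def lp_c_vector(i, primes=PRIMES):
    """Vector-based form: the 1-norm of L i, i.e. the sum of log2(p_n) * |i_n|."""
    return sum(math.log2(p) * abs(count) for p, count in zip(primes, i))


# 10/9 = 2^1 * 3^-2 * 5^1, so its prime-count vector is [1, -2, 1].
print(lp_c_quotient(10, 9))
print(lp_c_vector([1, -2, 1]))
```

Both calls should print the same value (up to floating-point rounding), since the absolute values of the prime counts simply collect the numerator's and denominator's primes into one product.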

Proportionality to size

Because the log-prime matrix L can also be understood to figure into how we compute the sizes of intervals in cents, we see an interesting effect for tunings that use complexities such as [math]\displaystyle{ \text{lp-C}() }[/math] which use it as (or as part of) their complexity pretransformer X. A complexity pretransformer's inverse pretransformer [math]\displaystyle{ X^{-1} }[/math] appears in the minimization target according to RTT's take on the dual norm inequality: [math]\displaystyle{ \left\|𝒓X^{-1}\right\|_{\text{dual}(q)} }[/math]. So given the following:

- The retuning map is the tuning map minus the just tuning map: [math]\displaystyle{ 𝒓 = 𝒕 - 𝒋 }[/math]

- The just tuning map can be understood to be the log-prime matrix left-multiplied by a summation map [math]\displaystyle{ \slant{\mathbf{1}} = \val{1 & 1 & 1 & …} }[/math] and then scaled to cents with an octave-to-cents conversion factor of 1200, that is: [math]\displaystyle{ 𝒋 = 1200\times\slant{\mathbf{1}}L }[/math]

- In [math]\displaystyle{ \text{lp-C}() }[/math] it is the case that [math]\displaystyle{ X = L }[/math].

Then we find:

[math]\displaystyle{

\left\|𝒓X^{-1}\right\|_{\text{dual}(q)}

}[/math]

Substituting in for [math]\displaystyle{ 𝒓 = 𝒕 - 𝒋 }[/math] and [math]\displaystyle{ X^{-1} = L^{-1} }[/math]:

[math]\displaystyle{

\left\|(𝒕 - 𝒋)(L^{-1})\right\|_{\text{dual}(q)}

}[/math]

Distributing:

[math]\displaystyle{

\left\|𝒕L^{-1} - 𝒋L^{-1}\right\|_{\text{dual}(q)}

}[/math]

Substituting in for [math]\displaystyle{ 𝒋 = 1200\times\slant{\mathbf{1}}L }[/math]:

[math]\displaystyle{

\left\|𝒕L^{-1} - (1200\times\slant{\mathbf{1}}L)L^{-1}\right\|_{\text{dual}(q)}

}[/math]

And now canceling out the [math]\displaystyle{ LL^{-1} }[/math] on the second term:

[math]\displaystyle{

\left\|𝒕L^{-1} - 1200\times\slant{\mathbf{1}}\cancel{L}\cancel{L^{-1}}\right\|_{\text{dual}(q)}

}[/math]

Note that [math]\displaystyle{ 𝒕 = 1200\times\slant{\mathbf{1}}LGM }[/math], and so the left term could also be expressed as [math]\displaystyle{ 1200\times\slant{\mathbf{1}}LGML^{-1} }[/math], but L and [math]\displaystyle{ L^{-1} }[/math] do not cancel here, because they are not immediate neighbors. We meaningfully transform into [math]\displaystyle{ L^{-1} }[/math] space, deal with M and G there, and then transform back out with L here. So we find that the retuning magnitude is:

[math]\displaystyle{

\left\|𝒕L^{-1} - 1200\times\slant{\mathbf{1}}\right\|_{\text{dual}(q)}

}[/math]

And so this is the minimization target for tuning schemes whose complexity pretransformer equals L. What's cool about this is how we're tuning each prime proportionally to its own size. The second term here is a map consisting of a string of 1200's, one for each prime. And the first term is the tuning map, but with each entry divided by the base-2 log of the corresponding prime, such that if the tempered version of the prime were to be pure, it would equal 1200 here. If we took the 1200 out of the picture, we could perhaps see even more clearly that we were weighting each prime proportionally to its own size. In other words, since we'd be minimizing the difference between each prime's tuning and 1, this would mean that a perfect tuning equals 1, narrow tunings are values like 0.99, and wide tunings are values like 1.01. This is in contrast to the non-pretransformed case, where every cent off from any prime makes the same impact to its difference with its just size.

We could think of it this way: while 𝒓 simply gives us the straight amount of error to each prime interval, [math]\displaystyle{ 𝒓L^{-1} }[/math] gives us that error relative to the size of each of those intervals, i.e. in terms of a proportion such as could be expressed with a percentage. In other words, since the interval 7/1 is much bigger than the interval 2/1, if they both had the same amount of error, then that'd actually be quite a bit less error per octave for prime 7 (specifically, [math]\displaystyle{ 1/\log_2{7} }[/math] as much). So, if we're using an optimizer to minimize a norm of a vector containing this error value and others, by reducing it in this way, we are telling the optimizer that, for each next prime bigger than the last, it should worry about its error proportionally less than the prime before. This is the rationale Paul used when basing his tuning scheme on a Tenney lattice, and we think that if you're going to use an all-interval tuning, then this is a pretty darn appealing reason to use [math]\displaystyle{ \text{lp-C}() }[/math] as the interval complexity function in the simplicity-weighting of your damage. As with non-all-interval tuning schemes, we consider [math]\displaystyle{ \text{lp-C}() }[/math] to be the clear default interval complexity function; this is just a new perspective on that same old intuition.
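The cancellation can also be checked numerically. The following Python sketch assumes a 5-limit prime list and borrows the retuning map ⟨1.617 -2.959 3.202] from the reference table's examples; the variable names are ours. It builds 𝒕 from 𝒓 and 𝒋, then confirms that subtracting a string of 1200's from the rescaled tuning map gives the same result as rescaling the retuning map directly.

```python
import math

primes = (2, 3, 5)                      # assumed 5-limit prime list
r = (1.617, -2.959, 3.202)              # retuning map in cents (table example values)

log_primes = [math.log2(p) for p in primes]        # the diagonal entries of L
j = [1200 * lp for lp in log_primes]               # just tuning map, 1200 x 1L
t = [r_n + j_n for r_n, j_n in zip(r, j)]          # tuning map, since r = t - j

# The minimization target's contents: t L^-1 minus a string of 1200's ...
target = [t_n / lp - 1200 for t_n, lp in zip(t, log_primes)]
# ... which should match the retuning map rescaled per prime, r L^-1.
relative_error = [r_n / lp for r_n, lp in zip(r, log_primes)]

for p, a, b in zip(primes, target, relative_error):
    print(p, round(a, 6), round(b, 6))  # error in cents per octave of each prime
```

Each printed pair agrees, and the entries for primes 3 and 5 are smaller in magnitude than the raw errors in 𝒓, reflecting the division by each prime's size in octaves.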

We might also call attention to the fact that cents and octaves are both logarithmic pitch units. Which is to say that we could simplify the units of such quantities down to nothing if we wanted. That is, we know the conversion factor between these two units, so multiplying by 1/1200 octaves/cent would cancel out the units, leaving us with a dimensionless quantity. So why do we keep these values in the more complicated units of cents per octave? The main reason is convenient size. When tuning temperaments, we tend to deal with smallish damage values: usually less than 10, almost always less than 100. If that gets divided by 1200 (~1000) then we'd typically be comparing amounts in the thousandths. Who wants to compare damages of 0.001 and 0.002, i.e. essentially proportions of octaves? As Graham puts it in his paper Prime Based Error and Complexity Measures, "Because the dimensionless error is quite a small number, using cents per octave instead is a good practical idea."[note 1]

This L-canceling proportionality effect occurs for all-interval tunings that use any complexity with L as its pretransformer (or a component thereof), such as minimax-ES, minimax-lils-S, and minimax-E-lils-S described later in this article.

This effect does not occur for ordinary tunings with simplicity weight damage, i.e. where the simplicity matrix is [math]\displaystyle{ S = \dfrac1L = L^{-1} }[/math]. At least, it doesn't whenever they use anything other than the primes as target-intervals, i.e. [math]\displaystyle{ \mathrm{T} ≠ I }[/math], because having [math]\displaystyle{ \mathrm{T} }[/math] sandwiched between the L and the [math]\displaystyle{ L^{-1} }[/math] prevents them from canceling:

[math]\displaystyle{

\llzigzag \textbf{d} \rrzigzag _p = \llzigzag 1200\times\slant{\mathbf{1}}LGM\mathrm{T}L^{-1} - 1200\times\slant{\mathbf{1}}L\mathrm{T}L^{-1} \, \rrzigzag _p

}[/math]

We'll briefly look at a units analysis of this situation. Interestingly, even though this [math]\displaystyle{ LL^{-1} }[/math] cancels out mathematically, the units do not. That's because these really aren't the same L, conceptually speaking anyway. They have the same shape and entries, but different purposes. The first one is there to convert prime counts into octave amounts, i.e. from frequency space where we multiply primes to pitch space where we add their logs; this L logically has units of oct/p. The second one is there to scale prime factors according to a particular idea of interval complexity, which happens to be (well, we'd say, was engineered to be) in direct correlation with their octave amounts; this pretransformer L has no actual units but carries a unit annotation (C) that it applies to whatever it pretransforms (and [math]\displaystyle{ L^{-1} }[/math] thereby has the annotation (S), for simplicity, being the inverse of L).[note 2] And so when we consider the whole expression [math]\displaystyle{ 1200\times\slant{\mathbf{1}}LL^{-1} }[/math], we find that 1200 has units of ¢/oct, [math]\displaystyle{ \slant{\mathbf{1}} }[/math] has units of oct/oct, L has units of oct/p, and [math]\displaystyle{ L^{-1} }[/math] has units of (S), and when everything's done canceling out, you end up with ¢/p(S). Note that the annotation is not "in the denominator"; it applies equally to the entire unit. And the most logical way to read this would be as if the annotation was on the numerator, in fact, as "simplicity-weighted cents per prime". You can check that this is the same units you'd get for the other term [math]\displaystyle{ 𝒕L^{-1} }[/math], and thus for their difference, [math]\displaystyle{ 𝒓L^{-1} }[/math]. Since the noteworthy thing here was that the matched L's had no effect on the units analysis, we further note that this analysis is valid for any inverse pretransformed retuning map. 
But we should also say that it's not entirely wrong to think of [math]\displaystyle{ L^{-1} }[/math] as having units of p/oct, i.e. as being the inverse of the L that's used for sizing interval vectors, in which case we end up with ¢/oct units instead; Keenan Pepper (in his tiptop.py script) calls the units of damage in minimax-S tuning "cents per octave", and Graham Breed considers them cents per octave as well.[note 1]

Euclideanized log-product

To Euclideanize log-product complexity, we simply take the powers and roots of 1 from the vector-based forms (norm, summation, and expanded) and swap them out for 2's. This also changes its name from "(taxicab) log-product complexity" to "Euclideanized" same. Note that the quotient form does not have these powers and thus we have no quotient form of [math]\displaystyle{ \text{E-lp-C}() }[/math].

[math]\displaystyle{

\begin{array} {rcl}

{\color{red}\text{(t-)}}\text{lp-C} & \text{name} & {\color{red}\text{E-}}\text{lp-C} \\

\log_2(n·d) & \text{quotient} & \text{—} \\

\left\|L\textbf{i}\right\|_{\color{red}1} & \text{norm} & \left\|L\textbf{i}\right\|_{\color{red}2} \\

\sqrt[{\color{red}1}]{\strut \sum\limits_{n=1}^d \left|\log_2{p_n} · \mathrm{i}_n\right|^{\color{red}1}} & \text{summation} & \sqrt[{\color{red}2}]{\strut \sum\limits_{n=1}^d \left|\log_2{p_n} · \mathrm{i}_n\right|^{\color{red}2}} \\

\sqrt[{\color{red}1}]{\strut \left|\log_2{p_1} · \mathrm{i}_1\right|^{\color{red}1} + \left|\log_2{p_2} · \mathrm{i}_2\right|^{\color{red}1} + \ldots + \left|\log_2{p_d} · \mathrm{i}_d\right|^{\color{red}1}} & \text{expanded} & \sqrt[{\color{red}2}]{\strut \left|\log_2{p_1} · \mathrm{i}_1\right|^{\color{red}2} + \left|\log_2{p_2} · \mathrm{i}_2\right|^{\color{red}2} + \ldots + \left|\log_2{p_d} · \mathrm{i}_d\right|^{\color{red}2}}

\end{array}

}[/math]
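To see the swap of 1's for 2's in action, here is a small Python sketch that computes both the taxicab and Euclideanized versions from a single function. The function name and its `power` parameter are our own illustrative choices, and we again assume the 5-limit vector [1 -2 1⟩ for 10/9. Note that the log of each prime is raised to the power along with the count, as in the expanded form.

```python
import math


def log_prime_norm(i, primes=(2, 3, 5), power=1):
    """q-norm of L i: the q-th root of the sum of |log2(p_n) * i_n| ** q."""
    total = sum(abs(math.log2(p) * count) ** power for p, count in zip(primes, i))
    return total ** (1 / power)


t_lp_c = log_prime_norm([1, -2, 1], power=1)  # (taxicab) log-product complexity
e_lp_c = log_prime_norm([1, -2, 1], power=2)  # Euclideanized log-product complexity

print(t_lp_c)  # matches log2(10 * 9)
print(e_lp_c)  # no quotient form to compare against
```

The Euclideanized value comes out smaller than the taxicab one, as a 2-norm never exceeds the 1-norm of the same vector.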

Tunings used in

This complexity was introduced to tuning theory by Graham Breed, for his Tenney-Euclidean tuning scheme (our "minimax-ES").

Minimax-ES is also sometimes referred to as the "T2" tuning scheme, in recognition of its falling along a continuum of tunings that include minimax-S ("TOP"), or "T1" tuning. The '1' and '2' refer to the norm power, so in "T1" the '1' refers to the ordinary case of taxicab norm, and in "T2" the '2' refers to the Euclideanization (and, theoretically, "T3" would refer to an analogous tuning that used a 3-norm instead, etc.). Unfortunately, because the meaning of the 'T' is "Tenney", a reference to his harmonic lattice which uses a harmonic distance measure that is a pretransformed 1-norm, this is not the cleanest terminology, since any value other than '1' here is overriding that aspect of the meaning of the 'T'. We prefer our systematic naming, which uses an optional "t-" (for taxicab, not Tenney) or an "E-" (for Euclidean) in place of special norm power values of '1' and '2', respectively, and otherwise just uses the number, for example "T3" would be "minimax-3S".

Product

Product complexity is found as the product of a quotient's numerator and denominator:

[math]\displaystyle{ \small \text{prod-C}\left(\dfrac{n}{d}\right) = nd }[/math]

It doesn't get any simpler than that.

Vectorifiable, but not normifiable

We are able to express product complexity in vector-based form. However, unlike every other one of the complexities we look at in this article, it does not work out as a summation; rather, it works out as a product, using the analogous but less-familiar Big-Pi notation instead of Big-Sigma notation ('Π' for 'P' for "product"; 'Σ' for 'S' for "summation").

[math]\displaystyle{ \small \text{prod-C}(\textbf{i}) = \prod\limits_{n=1}^d{p_n^{\left|\mathrm{i}_n\right|}} }[/math]

Which expands like this:

[math]\displaystyle{ p_1^{\left|\mathrm{i}_1\right|} \times p_2^{\left|\mathrm{i}_2\right|} \times \ldots \times p_d^{\left|\mathrm{i}_d\right|} }[/math]

For this same reason (being a product, not a summation) product complexity has no way to be expressed as a norm, and so cannot be used directly for all-interval tuning schemes. This is one of the key reasons why it hasn't seen as much use in tuning theory as its logarithmic cousin, [math]\displaystyle{ \text{lp-C}() }[/math]. The logarithmic version exhibits the L-canceling effect described above (which also underpins Paul's trick for computing minimax-S tunings of nullity-1 temperaments), and this, we believe, also accounts for it simply "feeling about right" to the typical tuning theorists we've discussed it with. Log-product complexity feels like it was designed expressly for measuring complexity of relative pitch, i.e. pitch deltas, whereas product complexity feels better suited for measuring complexity of relative frequency, i.e. frequency qoppas.[note 3] For more information on this problem, please see the later section Systematizing norms.
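For concreteness, the quotient and vector-based forms of product complexity can be sketched in Python as follows. This is an illustration under our usual assumptions (a 5-limit prime list, the interval 10/9 as [1 -2 1⟩), with function names of our own invention.

```python
import math


def prod_c_quotient(n, d):
    """Quotient form: numerator times denominator."""
    return n * d


def prod_c_vector(i, primes=(2, 3, 5)):
    """Vector-based form: the product of p_n ** |i_n| (a Big-Pi, not a Big-Sigma)."""
    result = 1
    for p, count in zip(primes, i):
        result *= p ** abs(count)
    return result


print(prod_c_quotient(10, 9))          # 90
print(prod_c_vector([1, -2, 1]))       # 2 * 9 * 5 = 90
print(math.log2(prod_c_vector([1, -2, 1])))  # the log-product complexity of 10/9
```

Taking the base-2 logarithm of the result turns the product into the sum that defines log-product complexity, which is what makes the logarithmic version expressible as a norm.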

Tunings used in

Curiously, even though [math]\displaystyle{ \text{prod-C}() }[/math] cannot be normified, and if it can't be a norm then there can't be a dual norm for it, which means we cannot directly create an all-interval tuning "minimax-prod-S" which minimaxes the product-simplicity-weight damage across all intervals, we can nevertheless achieve this (if one feels like experimenting with a sort of conceptual mismatch between frequency and pitch, in terms of complexity measurement), albeit with a further step of indirection. This is because of a special relationship between product complexity [math]\displaystyle{ \text{prod-C}() }[/math] and sum-of-prime-factors-with-repetition complexity [math]\displaystyle{ \text{sopfr-C}() }[/math]: when sopfr-simplicity-weight damage is minimaxed, as is done in the minimax-sopfr-S tuning, it turns out that simultaneously the prod-simplicity-weight damage is also minimized. This is the reason why the historical name for minimax-sopfr-S has been "BOP", short for "Benedetti OPtimal", where "Benedetti height" is an alternative name for product complexity, even though minimax-sopfr-S is computed not with the product complexity but with sum-of-prime-factors-with-repetition complexity. We'll explain this in the later section on sopfr.

Euclideanized product

This is a trick section, here only for parallelism. There is no Euclideanized product complexity [math]\displaystyle{ \text{“E-prod-C”}() }[/math]. Despite product complexity boasting a vector form, that vector-based form involves a product, not a summation, and therefore cannot be expressed as a norm, and Euclideanization is something that can only be done to a norm (or equivalent summation). Compare:

[math]\displaystyle{

\begin{array} {rcl}

\sqrt[{\color{red}1}]{\strut \sum\limits_{n=1}^d \left|\log_2{p_n} · \mathrm{i}_n\right|^{\color{red}1}} & {\color{red}\text{(t-)}}\text{lp-C} \rightarrow {\color{red}\text{E-}}\text{lp-C} & \sqrt[{\color{red}2}]{\strut \sum\limits_{n=1}^d \left|\log_2{p_n} · \mathrm{i}_n\right|^{\color{red}2}} \\

\prod\limits_{n=1}^d p_n^{\left|\mathrm{i}_n\right|} & {\color{red}\text{(t-)}}\text{prod-C} \rightarrow \text{“}{\color{red}\text{E-}}\text{prod-C”} & {\Large\quad\quad\text{?}} \\

\end{array}

}[/math]

We simply don't have the matching power and root of a norm to work with here. Since this complexity function does not exist, of course, there are no tuning schemes which use it.

Sum-of-prime-factors-with-repetition

The "sum of prime factors with repetition" function, due to its ungainly six-word-long name, is usually abbreviated to [math]\displaystyle{ \text{sopfr}() }[/math], in all lowercase, and curiously missing the 'w', though perhaps that's for easier comparison with its close cousin function, the "sum of prime factors" (without repetition), which is notated as [math]\displaystyle{ \text{sopf}() }[/math]. And "sopfr" is a damn sight more pronounceable than "sopfwr" would be. By "repetition" here it is meant that if a prime factor appears more than once, it is counted for each appearance. It may seem obvious to count all the occurrences of each prime, but there are valuable applications to the without-repetition version of this function (though it's unlikely that it will see much use in tuning theory).

The quotient form of sum-of-prime-factors-with-repetition-complexity [math]\displaystyle{ \text{sopfr-C}\left(\frac{n}{d}\right) }[/math] is simply the sum-of-prime-factors-with-repetition of the numerator times the denominator, [math]\displaystyle{ \text{sopfr}(nd) }[/math], or, equivalently, the [math]\displaystyle{ \text{sopfr}() }[/math] for each of the numerator and denominator separately then added together [math]\displaystyle{ \text{sopfr}(n) + \text{sopfr}(d) }[/math].[note 4]

The vector-based form of [math]\displaystyle{ \text{sopfr-C} }[/math] looks like so:

[math]\displaystyle{

\text{sopfr-C}(\textbf{i}) = \sum\limits_{n=1}^d(p_{n}·\left|\mathrm{i}_{n}\right|)

}[/math]

Where, as we've been doing so far, p_n is the nth entry of 𝒑, the list of primes that match with the counts in each entry [math]\displaystyle{ \mathrm{i}_n }[/math] of the interval vectors [math]\displaystyle{ \textbf{i} }[/math].
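The quotient and vector-based forms can be compared with a short Python sketch. The trial-division `sopfr` helper and the other function names are our own, written only for illustration, and the 5-limit prime list is an assumption matching the reference table's 10/9 example.

```python
def sopfr(n):
    """Sum of prime factors of a positive integer, counted with repetition."""
    total = 0
    p = 2
    while p * p <= n:
        while n % p == 0:   # count this prime once per appearance
            total += p
            n //= p
        p += 1
    if n > 1:               # whatever remains is itself a prime factor
        total += n
    return total


def sopfr_c_quotient(n, d):
    """Quotient form: sopfr(n) + sopfr(d), equivalently sopfr(n * d)."""
    return sopfr(n) + sopfr(d)


def sopfr_c_vector(i, primes=(2, 3, 5)):
    """Vector-based form: the sum of p_n * |i_n|."""
    return sum(p * abs(count) for p, count in zip(primes, i))


print(sopfr_c_quotient(10, 9))     # (2 + 5) + (3 + 3) = 13
print(sopfr(10 * 9))               # 13
print(sopfr_c_vector([1, -2, 1]))  # 2*1 + 3*2 + 5*1 = 13
```

All three agree on 13 for 10/9, matching the worked example in the reference table.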

Variations

The tuning theorist Carl Lumma has advocated[note 5] for a variation on this tuning where the primes are all squared before prescaling. This is supported in the RTT Library in Wolfram Language. In fact, it also supports pretransforming by [math]\displaystyle{ p^a·\log_2{p}^b }[/math], for any power a or b.

Tunings used in

[math]\displaystyle{ \text{sopfr-C}() }[/math] is used in the minimax-sopfr-S tuning scheme, historically known as "BOP". As explained above, this tuning is named "Benedetti OPtimal" because "Benedetti" is associated with product complexity, not the sopfr complexity we use as our damage weight when computing it. Apparently more theorists care about minimizing damage weighted by product complexity than about minimizing damage weighted by sopfr, which is fine. We have to minimize with [math]\displaystyle{ \text{sopfr-C}() }[/math] weight instead of [math]\displaystyle{ \text{prod-C}() }[/math] weight because [math]\displaystyle{ \text{prod-C}() }[/math] is not normifiable, being a product, not a summation. And minimizing with [math]\displaystyle{ \text{sopfr-C}() }[/math] works because we have a proof that if sopfr-S-weight damage is minimized, then so too is prod-S-weight damage. What follows is an adaptation of the proof found in the BOP tuning article, under "Proof of Benedetti optimality on all rationals".

By the dual norm inequality, we have:

[math]\displaystyle{

\dfrac{\left|𝒓\textbf{i}\right|}{\left\|\text{diag}(𝒑)\textbf{i}\right\|_1} \leq \left\|𝒓\text{diag}(𝒑)^{-1}\right\|_\infty

}[/math]

This numerator on the left is the absolute error of an arbitrary interval [math]\displaystyle{ \textbf{i} }[/math] according to some temperament's retuning map 𝒓. And the denominator on the left is equivalent to the sopfr complexity. Dividing by complexity is the same as multiplying by simplicity. And so the entire left-hand side is the sopfr-S-weight damage to an arbitrary interval. We can therefore minimize the damage to all such intervals by minimizing the right-hand side, since that's the direction the inequality points. And minimizing the right-hand side is easier. This much should all be review if you've gone through the all-interval tuning schemes article; we're just taking the same concept that we taught with the log-product complexity, but here doing it with sopfr-complexity.

We said the denominator on the left half is [math]\displaystyle{ \text{sopfr-C}(\textbf{i}) }[/math]. Let's actually substitute that in, for improved clarity in upcoming steps.

[math]\displaystyle{

\dfrac{\left|𝒓\textbf{i}\right|}{\text{sopfr-C}(\textbf{i})} \leq \left\|𝒓\text{diag}(𝒑)^{-1}\right\|_\infty

}[/math]

Our goal is to show that prod-S-weight damage across all intervals is also minimized by minimizing the right-hand side of this inequality:

[math]\displaystyle{

\dfrac{\left|𝒓\textbf{i}\right|}{\text{prod-C}(\textbf{i})} \leq \left\|𝒓\text{diag}(𝒑)^{-1}\right\|_\infty

}[/math]

Now that's not so easy. But what we can do instead is show that this damage is less than or equal to the left-hand side of the original inequality. Another level of minimization down:

[math]\displaystyle{

\dfrac{\left|𝒓\textbf{i}\right|}{\text{prod-C}(\textbf{i})} \leq \dfrac{\left|𝒓\textbf{i}\right|}{\text{sopfr-C}(\textbf{i})} \leq \left\|𝒓\text{diag}(𝒑)^{-1}\right\|_\infty

}[/math]

We can just take the first chunk of that and eliminate the redundant numerators:

[math]\displaystyle{

\dfrac{\cancel{\left|𝒓\textbf{i}\right|}}{\text{prod-C}(\textbf{i})} \leq \dfrac{\cancel{\left|𝒓\textbf{i}\right|}}{\text{sopfr-C}(\textbf{i})}

}[/math]

And then reciprocate both sides (which flips the direction of the inequality symbol):

[math]\displaystyle{

\text{prod-C}(\textbf{i}) \geq \text{sopfr-C}(\textbf{i})

}[/math]

So we now just have to prove that the product complexity of any given interval will always be greater than or equal to its sopfr complexity. And if we do this, we will have proven that minimax-sopfr-S tuning is the same as minimax-prod-S tuning. So let's consider an exhaustive set of two cases: either [math]\displaystyle{ \textbf{i} }[/math] is prime, or it is not prime.

- If [math]\displaystyle{ \textbf{i} }[/math] is prime, then [math]\displaystyle{ \text{prod-C}(\textbf{i}) = \text{sopfr-C}(\textbf{i}) }[/math]. This is because with only a single prime factor, the trivial product of it with nothing else, and the trivial sum of it with nothing else, will give the same result.

- If [math]\displaystyle{ \textbf{i} }[/math] is not prime, then we have a single case where [math]\displaystyle{ \text{prod-C}(\textbf{i}) = \text{sopfr-C}(\textbf{i}) }[/math], which is 4/1 because 2×2 = 2+2, but in every other case[note 6] we find [math]\displaystyle{ \text{prod-C}(\textbf{i}) \gt \text{sopfr-C}(\textbf{i}) }[/math].

And so since

[math]\displaystyle{

\text{prod-C}(\textbf{i}) \geq \text{sopfr-C}(\textbf{i})

}[/math]

implies

[math]\displaystyle{

\dfrac{\left|𝒓\textbf{i}\right|}{\text{prod-C}(\textbf{i})} \leq \dfrac{\left|𝒓\textbf{i}\right|}{\text{sopfr-C}(\textbf{i})}

}[/math]

and

[math]\displaystyle{

\dfrac{\left|𝒓\textbf{i}\right|}{\text{sopfr-C}(\textbf{i})} \leq \left\|𝒓\text{diag}(𝒑)^{-1}\right\|_\infty

}[/math]

therefore

[math]\displaystyle{

\dfrac{\left|𝒓\textbf{i}\right|}{\text{prod-C}(\textbf{i})} \leq \left\|𝒓\text{diag}(𝒑)^{-1}\right\|_\infty

}[/math]

Done.
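As a quick numerical sanity check of the key inequality, here is a minimal Python sketch. It relies on the fact that for a ratio n/d, prod-C is n×d and sopfr-C is the sopfr of n×d, so it is enough to test plain integers. The helper name `sopfr` and the search bound of 1000 are our own choices for illustration, not anything taken from an existing library.

```python
def sopfr(n):
    """Sum of prime factors of n with repetition, e.g. sopfr(12) = 2 + 2 + 3 = 7."""
    total, p = 0, 2
    while p * p <= n:
        while n % p == 0:
            total += p
            n //= p
        p += 1
    if n > 1:  # whatever remains is a single prime factor
        total += n
    return total

# Each integer k stands in for the product n*d of some interval n/d.
violations = [k for k in range(1, 1000) if k < sopfr(k)]
equal_cases = [k for k in range(1, 1000) if k == sopfr(k)]

print(violations)        # empty if prod-C >= sopfr-C everywhere in range
print(equal_cases[:6])   # the primes, plus the lone composite 4
```

An empty `violations` list over a finite range is of course not a proof, only a check that the two-case argument above behaves as described.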

Euclideanized sum-of-prime-factors-with-repetition

Not much to see here; only Euclideanized [math]\displaystyle{ \text{sopfr-C}() }[/math], which is to say:

[math]\displaystyle{ \begin{align} \text{sopfr-C} &= \left\|\text{diag}(𝒑)\textbf{i}\right\|_{\color{red}1} \\ {\color{red}\text{E-}}\text{sopfr-C} &= \left\|\text{diag}(𝒑)\textbf{i}\right\|_{\color{red}2} \\ \end{align} }[/math]
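To make the difference between the two norm powers concrete, here is a small Python sketch that computes both complexities for one interval vector. The 7-limit prime basis and the function names `sopfr_c` and `e_sopfr_c` are assumptions made for this illustration only.

```python
import math

PRIMES = [2, 3, 5, 7]  # assumed prime basis (7-limit), for illustration

def sopfr_c(vector):
    """1-norm of diag(p) times the vector: sum of p * |exponent|."""
    return sum(p * abs(x) for p, x in zip(PRIMES, vector))

def e_sopfr_c(vector):
    """2-norm of diag(p) times the vector: root of the sum of (p * exponent)^2."""
    return math.sqrt(sum((p * x) ** 2 for p, x in zip(PRIMES, vector)))

minor_third = [1, 1, -1, 0]  # 6/5 = 2^1 * 3^1 * 5^-1

print(sopfr_c(minor_third))    # 2 + 3 + 5
print(e_sopfr_c(minor_third))  # sqrt(4 + 9 + 25)
```

The only change between the two functions is the power used inside the sum and the matching root outside it, which mirrors the red subscripts in the formulas above.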

Tunings used in

As one should expect, [math]\displaystyle{ \text{E-sopfr-C}() }[/math] is used in the minimax-E-sopfr-S tuning scheme, historically known as "BE". The 'B' is for "Benedetti", as in the Benedetti height, which is the product complexity (and which has that special relationship with the sum-of-prime-factors-with-repetition complexity). The 'E' is for "Euclidean".

Count-of-prime-factors-with-repetition

The count of prime factors with repetition function is closely related to the sum of prime factors with repetition function, as one might expect considering how their names are off by just this one word. The only difference is that where in [math]\displaystyle{ \text{sopfr}() }[/math] we sum each prime factor, we can think of [math]\displaystyle{ \text{copfr}() }[/math] as doing the same thing except replacing each prime factor—regardless of its size—with the number 1.
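The relationship between the two functions can be sketched in a few lines of Python. The helper names here (`prime_factors`, `sopfr`, `copfr`) are our own, chosen for illustration; they operate on a plain integer, and for a ratio n/d one would add the results for n and d.

```python
def prime_factors(n):
    """List the prime factors of n with repetition, e.g. 60 -> [2, 2, 3, 5]."""
    factors, p = [], 2
    while p * p <= n:
        while n % p == 0:
            factors.append(p)
            n //= p
        p += 1
    if n > 1:
        factors.append(n)
    return factors

def sopfr(n):
    # sum each prime factor as-is
    return sum(prime_factors(n))

def copfr(n):
    # same sum, but every prime factor is replaced with 1
    return sum(1 for _ in prime_factors(n))

print(prime_factors(60), sopfr(60), copfr(60))
```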

Tunings used in

To our knowledge, the count of prime factors with repetition has not actually been used in a tuning scheme before. We've included it here partly in case it ever is, but mostly for parallelism with the other pairs of taxicab and Euclideanized versions of the same underlying complexity function, since the Euclideanized version of [math]\displaystyle{ \text{copfr-C}() }[/math] has seen use.

If this minimax-copfr-S tuning were to be used, a noteworthy property it has is that whenever a temperament has only one comma (i.e. is nullity-1) every prime receives an equal amount of absolute error in cents. Compare this with the effect we see for minimax-S tuning as observed by Paul Erlich in his Middle Path paper.

Euclideanized count-of-prime-factors-with-repetition

In terms of its formulas, [math]\displaystyle{ \text{E-copfr-C}() }[/math] is fairly straightforward: it's just the [math]\displaystyle{ \text{copfr-C}() }[/math] but with the powers and roots changed from 1 to 2, so said another way, it's the (un-pretransformed) 2-norm:

[math]\displaystyle{ \small \begin{align} \text{E-copfr-C}(\textbf{i}) &= \left\|\textbf{i}\right\|_2 \\ \small

&= \sqrt[2]{\strut \sum\limits_{n=1}^d \left|\mathrm{i}_n\right|^2} \\ \small

&= \sqrt[2]{\strut \left|\mathrm{i}_1\right|^2 + \left|\mathrm{i}_2\right|^2 + \ldots + \left|\mathrm{i}_d\right|^2} \\ \small

&= \sqrt{\strut \mathrm{i}_1^2 + \mathrm{i}_2^2 + \ldots + \mathrm{i}_d^2} \\

\end{align} }[/math]

Thinking about how this actually ranks intervals, it should become clear soon enough that this is complete garbage as a complexity function. It treats all prime numbers as having equal complexity (the lowest complexity possible), and it treats powers of 2 as the most complex numbers in their vicinity. For example, it considers 8 to be more complex than 9, 10, 11, 12, 13, 14 and 15. So it's a far cry from monotonicity over the integers, i.e. the property that any higher integer is more complex than the previous one.
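The ranking described above can be reproduced with a short Python sketch. The function names `prime_exponents` and `e_copfr_c` are hypothetical helpers written for this illustration; the complexity is computed as the plain 2-norm of the prime exponents, as in the formula above.

```python
import math

def prime_exponents(n):
    """Map each prime factor of n to its exponent, e.g. 12 -> {2: 2, 3: 1}."""
    exps, p = {}, 2
    while p * p <= n:
        while n % p == 0:
            exps[p] = exps.get(p, 0) + 1
            n //= p
        p += 1
    if n > 1:
        exps[n] = exps.get(n, 0) + 1
    return exps

def e_copfr_c(n):
    """Un-pretransformed 2-norm of the integer's prime-exponent vector."""
    return math.sqrt(sum(e * e for e in prime_exponents(n).values()))

# Rank the integers 8 through 15 from most to least complex.
ranking = sorted(range(8, 16), key=e_copfr_c, reverse=True)
for k in ranking:
    print(k, round(e_copfr_c(k), 3))
```

In this range 8 comes out on top with a complexity of 3, while the primes 11 and 13 sit at the bottom with a complexity of 1.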

Tunings used in

How, you might ask, did such a ridiculously-named[note 7] complexity function come to be used as an error weight in tuning schemes? After all, it completely flattens the influence of the different prime factors, and it suffers the shortcoming of any Euclideanized tuning scheme: measuring harmonic distance through the non-real diagonal space of the lattice. The answer is: because of the pseudoinverse. It happens to be the case that when the pseudoinverse of the temperament mapping is taken to be the generator embedding, this is equivalent to having optimized for minimizing the [math]\displaystyle{ \text{E-copfr-S}() }[/math]-weight (note the 'S' for simplicity) damage across all intervals: no target list, no prescaling, no nothing. Why exactly this works out like this is a topic for the later section where we work through the computations of this tuning. For now we will simply note that the historical name of this tuning scheme is "Frobenius", on account of the fact that it also minimizes the Frobenius norm (a matrix norm that generalizes the 2-norm we use for vectors) of the projection matrix, while in our systematic name this is simply the minimax-E-copfr-S tuning scheme.

The pseudoinverse method is basically the easiest possible method for "optimizing" a tuning, and it was fascinating upon its initial discovery. However, it comes with the tradeoff of probably not being worth doing in the first place (the ultimate example of the streetlight effect in RTT), especially in a modern environment where many tools are available for automatically computing better tunings just as quickly and easily, from the average musician's perspective.

Also of note, this all-interval tuning scheme minimax-E-copfr-S is equivalent to an ordinary tuning that would be called, in our system, "primes miniRMS-U", even though these have different target interval sets ("all" versus "primes"), optimization powers ([math]\displaystyle{ \infty }[/math] versus 2), and damage weights (E-copfr-S versus unity). The reason is that with minimax-E-copfr-S, we're just minimizing [math]\displaystyle{ \left\|𝒓X^{-1}\right\|_2 }[/math] where [math]\displaystyle{ X^{-1} = I }[/math] and [math]\displaystyle{ 𝒓 = 𝒈M - 𝒋 }[/math], and with primes miniRMS-U we're minimizing [math]\displaystyle{ \llangle|𝒈M - 𝒋|\mathrm{T}W\rrangle_2 }[/math] where both [math]\displaystyle{ \mathrm{T} = I }[/math] on account of "primes" and [math]\displaystyle{ W = I }[/math] on account of the unity-weight. And it doesn't matter whether we compute the minimum of a power norm [math]\displaystyle{ \left\| · \right\|_q }[/math] or a power mean [math]\displaystyle{ \llangle · \rrangle_p }[/math]; we'll find the same tuning either way. Put another way, with either scheme, we find the optimal tuning as the pseudoinverse of M.
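To see the pseudoinverse route in action, here is a minimal pure-Python sketch. It assumes the 5-limit meantone mapping as its example temperament and uses the standard formula for the pseudoinverse of a full-row-rank matrix, Mᵀ(MMᵀ)⁻¹; the variable names are ours, and this is an illustration of the idea rather than the computation method worked through later in this guide.

```python
import math

M = [[1, 1, 0],
     [0, 1, 4]]  # meantone mapping (assumed example)
j = [1200 * math.log2(p) for p in (2, 3, 5)]  # just tuning map, in cents

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

# Gram matrix M·Mᵀ (2×2, symmetric) and its inverse
a, b = dot(M[0], M[0]), dot(M[0], M[1])
c, d = b, dot(M[1], M[1])
det = a * d - b * c
gram_inv = [[d / det, -b / det], [-c / det, a / det]]

# g = j·Mᵀ·(M·Mᵀ)⁻¹, i.e. j times the pseudoinverse of M
jMt = [dot(j, M[0]), dot(j, M[1])]
g = [jMt[0] * gram_inv[0][k] + jMt[1] * gram_inv[1][k] for k in range(2)]

# retuning map r = g·M − j: the error on each prime, in cents
r = [g[0] * M[0][k] + g[1] * M[1][k] - j[k] for k in range(3)]

print([round(x, 3) for x in g])
print([round(x, 3) for x in r])
```

One way to confirm that `g` really minimizes the 2-norm of `r` is to check that `r` is orthogonal to every row of `M`, which is the usual least-squares optimality condition.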

A similar equivalence is found between the minimax-copfr-S tuning scheme and the primes minimax-U tuning scheme; the only difference here is that the optimization power of the latter is [math]\displaystyle{ \infty }[/math], which matches the dual norm power of copfr. And a similar equivalence would also be found between the minimax-M-copfr-S tuning scheme (the "maxized" variant) and the primes minisum-U tuning scheme, if anyone used maxized variants of complexities.

Log-integer-limit-squared

Before looking at the log-integer-limit-squared complexity, let's first cover the plain integer-limit-squared complexity. Actually, let's just look at the integer limit function. Called on an integer, it would return the integer itself. Called on a rational, well, now we have two integers to look at, so it's going to give us whichever one of the two is greater.

[math]\displaystyle{

\text{il}\left(\frac{n}{d}\right) = \max(n, d)

}[/math]

And in the context of RTT tuning, where tuning a subunison interval is equivalent to tuning its superunison equivalent, we typically normalize to all superunisons, i.e. intervals where the numerator is greater than the denominator, so the integer limit typically means simply to return the numerator and throw away the denominator:

assuming [math]\displaystyle{ \; n \gt d, \quad \text{il}\left(\frac{n}{d}\right) = n }[/math]

The integer-limit-squared, then, is typically just the numerator squared. It's as if instead of dropping the denominator, we replaced it with a copy of the numerator and then took the product complexity.

[math]\displaystyle{

\text{assuming} \; n \gt d, \quad \text{ils}\left(\frac{n}{d}\right) = n\times n = n^2

}[/math]

And the log-integer-limit-squared, then, is just the logarithm of the above:

[math]\displaystyle{

{\color{red}\text{l}}\text{ils-C}\left(\frac{n}{d}\right) = {\color{red}\log_2}\left(\max(n, d)^2\right)

}[/math]

Owing to the base-2 logarithmic nature of octave-equivalence in human pitch perception, we tend to go with 2 as our logarithm base, as we've done here.
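The definitions above translate directly into a few lines of Python. This is a sketch using the standard library's `Fraction` type; the function names `integer_limit` and `lils_c` are our own, chosen to mirror the notation in the formulas.

```python
import math
from fractions import Fraction

def integer_limit(q):
    """Greater of the numerator and denominator of a positive ratio."""
    return max(q.numerator, q.denominator)

def lils_c(q):
    """Log-integer-limit-squared complexity: log2 of max(n, d) squared."""
    return math.log2(integer_limit(q) ** 2)

print(lils_c(Fraction(3, 2)))  # log2(9)
print(lils_c(Fraction(2, 3)))  # same value: the subunison matches its superunison
print(lils_c(Fraction(4, 1)))  # log2(16)
```

Because the function takes the maximum of the two integers, it gives the same result for an interval and its reciprocal, which is why normalizing to superunisons loses nothing.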

Why squared?

Why use log-integer-limit-squared rather than simply log-integer-limit? The main reason is: for important use cases of tuning schemes based on this complexity, it simplifies formulas. In short, squaring it here actually eliminates annoying factors of 1/2 elsewhere.

There's also an argument that this makes it more nearly equivalent to log-product complexity in concept, as they now are both the log of the product of two 'ators; in the log-product case, it's a numerator and denominator, while in the log-integer-limit-squared case, it's two numerators.

Note that the log of anything squared is the same as two times the log of the original thing. So [math]\displaystyle{ \log\left(\max(n,d)^2\right) = 2\times\log\left(\max(n,d)\right) }[/math].

Also note that "log-integer-limit-squared" tuning is parsed as "log-" of "integer-limit-squared", not as "log-integer-limit" then "squared."

Normifying: The cancelling-out machine

As we've seen many times by now, in order to use this complexity in an all-interval tuning, we need to get it into the form of a norm, so that we can minimize its dual norm. So, how to normify this formula that involves the taking of a maximum value? At first, this may not seem possible. However, theoreticians have developed a clever trick for this. Now this is only the first step to normifying this function; as we will see in a moment, it is indeed still quotient-based. However, we'll also find that this quotient-based formula—since it has gotten rid of the [math]\displaystyle{ \max() }[/math] function which we didn't know how to normify—will eventually be normifiable. Check it out:

[math]\displaystyle{ \text{lils-C}\left(\dfrac{n}{d}\right) = \log_2{nd} + \left|\log_2{\frac{n}{d}}\right| }[/math]

It turns out that the log of the square of the max of the numerator and denominator (equivalently, twice the log of their max) is always equivalent to the log of their product plus the absolute value of the log of their quotient. On first appearances, this transformation may be completely opaque. To understand how this trick works, let's take a look at the simplest possible version of it, and then adapt that back into our particular use case.
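Before unpacking why it works, the claimed equivalence can be spot-checked numerically. The following Python sketch compares the max-based definition with the product-plus-quotient form on a handful of arbitrarily chosen ratios; the function names and the sample list are assumptions made for illustration.

```python
import math

def lils_via_max(n, d):
    """Definition: log2 of the square of the greater of n and d."""
    return math.log2(max(n, d) ** 2)

def lils_via_abs(n, d):
    """Max-free form: log2 of the product plus |log2 of the quotient|."""
    return math.log2(n * d) + abs(math.log2(n / d))

pairs = [(3, 2), (2, 3), (5, 4), (7, 1), (1, 1), (81, 80)]
agree = all(
    math.isclose(lils_via_max(n, d), lils_via_abs(n, d), abs_tol=1e-12)
    for n, d in pairs
)
print(agree)
```

The comparison uses a small tolerance rather than exact equality because the two forms round differently in floating point.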

So here's the purest distillation of the idea: [math]\displaystyle{ 2\times\max(a, b) = a + b + |a - b| }[/math]. The key thing is to notice what happens with the absolute value bars. Think about it this way: to extract the maximum value between a and b, we need some way to throw away the smaller of the two values completely, while retaining the greater of the two values exactly as it came in. So, we cleverly leverage the absolute value bars here as a sort of canceling-out machine.

We can prove that this trick works by exhaustively checking all three of the possible cases:

- [math]\displaystyle{ a \gt b }[/math]

- [math]\displaystyle{ a \lt b }[/math]

- [math]\displaystyle{ a = b }[/math]

First, let's check [math]\displaystyle{ a \gt b }[/math]. In this case, [math]\displaystyle{ |a - b| = a - b }[/math], which is to say that we can simply drop the absolute value bars, because we know that the value inside of them is positive. That gives us:

[math]\displaystyle{

\begin{align}

\text{if} \; a \gt b, 2\times\max(a, b) &= a + b + |a - b| \\

&= a + b + {\color{red}(a - b)} \\

&= a \cancel{+ b} + a \cancel{- b} \\

&= a + a \\

&= 2a

\end{align}

}[/math]

And when [math]\displaystyle{ a \lt b }[/math], then [math]\displaystyle{ |a - b| = b - a }[/math]. It's always going to be the big one minus the small one. So that gives us:

[math]\displaystyle{

\begin{align}

\text{if} \; a \lt b, 2\times\max(a, b) &= a + b + |a - b| \\

&= a + b + {\color{red}(b - a)} \\

&= \cancel{a} + b + b \cancel{- a} \\

&= b + b \\

&= 2b

\end{align}

}[/math]

Voilà. We've achieved our canceling-out machine. We can also notice that as a side-effect of the canceling-out machine we not only preserve the greater of a and b, but create an extra copy of it. So that's the reason for the factor of 2: to deal with that side-effect. (Alternatively, we could have looked at the [math]\displaystyle{ \max(a, b) }[/math] and kept a factor of 1/2 on the right side of the equality.)

But just to be careful, let's also check the edge case of [math]\displaystyle{ a = b }[/math]. Here, the stuff inside the absolute value bars goes to zero, and we can substitute a for b (or vice versa), so we get:

[math]\displaystyle{

\begin{align}

\text{if} \; a = b, 2\times\max(a, b) &= a + b + |a - b| \\

&= a + {\color{red}a} + {\color{red}(0)} \\

&= a + a \\

&= 2a

\end{align}

}[/math]
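The three cases above can also be exercised in code. This Python sketch wraps the canceling-out machine in a hypothetical helper, `max_via_abs`, and compares it with the built-in `max` over a small grid of values that covers a > b, a < b, and a = b.

```python
def max_via_abs(a, b):
    """Maximum of a and b without max(): half of a + b + |a - b|."""
    return (a + b + abs(a - b)) / 2

# Sample values chosen to be exactly representable, so == is safe here.
values = [-3, -1.5, 0, 0.5, 2, 7]
holds = all(
    2 * max(a, b) == a + b + abs(a - b)
    for a in values
    for b in values
)
print(holds)
print(max_via_abs(3, 5), max_via_abs(5, 3), max_via_abs(4, 4))
```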

Okay, now that we've proved that [math]\displaystyle{ 2\times\max(a, b) = a + b + |a - b| }[/math] we substitute [math]\displaystyle{ \log_2{n} }[/math] for a and [math]\displaystyle{ \log_2{d} }[/math] for b to obtain: