Generator embedding optimization

When optimizing tunings of regular temperaments, it is fairly quick and easy to find approximate solutions, using (for example) the general method, which is discussed in D&D's guide to RTT and available in D&D's RTT library in Wolfram Language. This RTT library also includes four other methods which quickly and easily find exact solutions. These four methods also differ from the general method in that they are not general: each one works only for certain optimization problems. It is these four specialized exact-solution methods which are the subject of this article.

Two of these four specialized methods were briefly discussed in D&D's guide, along with the general method, because these specialized methods are actually even quicker and easier than the general method. These two are the only held-intervals method and the pseudoinverse method. But there's still plenty more insight to be had into how and why exactly these methods work, particularly the pseudoinverse method, so we'll take a much deeper dive into it here than was done in D&D's guide.

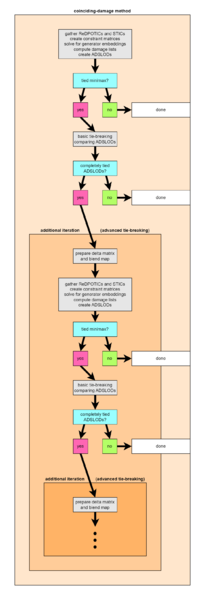

The other two of these four specialized methods, the zero-damage method and the coinciding-damage method, are significantly more challenging to understand than the general method. Most students of RTT would not gain enough musical insight from familiarizing themselves with these two methods to justify the investment, which is why they were not discussed in D&D's guide. However, if you feel compelled to understand their nuts and bolts anyway, then those sections of this article may well appeal to you.

This article is titled "Generator embedding optimization" because of a key feature these four specialized methods share: they can all give their solutions as generator embeddings, i.e. lists of prime-count vectors, one for each generator, where typically these prime-count vectors have non-integer entries (and are thus not JI). This is different from the general method, which can only give generator tuning maps, i.e. sizes in cents for each generator. As we'll see, a tuning optimization method's ability to give solutions as generator embeddings is equivalent to its ability to give solutions that are exact.

Intro

A summary of the methods

The three biggest sections of this article are dedicated to three specialized tuning methods, one for each of the three special optimization powers: the pseudoinverse method is used for [math]\displaystyle{ p = 2 }[/math] (miniRMS tuning schemes), the zero-damage method is used for [math]\displaystyle{ p = 1 }[/math] (miniaverage tuning schemes), and the coinciding-damage method is used for [math]\displaystyle{ p = ∞ }[/math] (minimax tuning schemes).

These three methods also work for all-interval tuning schemes. By definition, all-interval tuning schemes are all minimax tuning schemes (optimization power [math]\displaystyle{ ∞ }[/math]); what distinguishes them from one another is instead the power of the norm used for the interval complexity by which they simplicity-weight damage. But it's not this interval complexity norm power [math]\displaystyle{ q }[/math] which directly determines the method used, but rather its dual power, [math]\displaystyle{ \text{dual}(q) }[/math]: the power of the dual norm minimized on the retuning magnitude. So the pseudoinverse method is used for [math]\displaystyle{ \text{dual}(q) = 2 }[/math], the zero-damage method is used for [math]\displaystyle{ \text{dual}(q) = 1 }[/math], and the coinciding-damage method is used for [math]\displaystyle{ \text{dual}(q) = ∞ }[/math].

If for some reason you've decided that you want to use an optimization power other than those three, then no exact solution in the form of a generator embedding is available, and you'll need to fall back to the general tuning computation method mentioned above.

The general method also works for those special powers [math]\displaystyle{ 1 }[/math], [math]\displaystyle{ 2 }[/math], and [math]\displaystyle{ ∞ }[/math], however, so if you're in a hurry, you should skip this article and lean on that method instead (though you should be aware that the general method offers less insight about each of those tuning schemes than their specialized methods do).

Exact vs. approximate solutions

Tuning computation methods can be classified by whether they give an approximate or exact solution.

The general method is of the approximate type; it finds the generator tuning map [math]\displaystyle{ 𝒈 }[/math] directly, using trial-and-error methods such as gradient descent or differential evolution, whose details we won't go into. The accuracy of approximate methods depends on how long you are willing to wait.

In contrast, methods of the exact type work by solving for a matrix [math]\displaystyle{ G }[/math], the generator embedding.

We can calculate [math]\displaystyle{ 𝒈 }[/math] from this [math]\displaystyle{ G }[/math] via [math]\displaystyle{ 𝒋G }[/math], that is, the generator tuning map is obtained as the product of the just tuning map and the generator embedding.

Because [math]\displaystyle{ 𝒈 = 𝒋G }[/math], if [math]\displaystyle{ 𝒈 }[/math] is the primary target rather than [math]\displaystyle{ G }[/math], and a formula for [math]\displaystyle{ G }[/math] is known, then it is possible to substitute that formula into [math]\displaystyle{ 𝒈 = 𝒋G }[/math] and thereby bypass explicitly solving for [math]\displaystyle{ G }[/math]. For example, this is essentially what was done in the only held-intervals method and pseudoinverse method sections of D&D's guide: Tuning computation.

Note that with any exact method that solves for [math]\displaystyle{ G }[/math], since it is possible to have an exact [math]\displaystyle{ 𝒋 }[/math], it is also possible to find an exact [math]\displaystyle{ 𝒈 }[/math]. For example, the approximate value of the 5-limit [math]\displaystyle{ 𝒋 }[/math] we're quite familiar with is ⟨1200.000 1901.955 2786.314], but its exact value is ⟨[math]\displaystyle{ 1200×\log_2(2) }[/math] [math]\displaystyle{ 1200×\log_2(3) }[/math] [math]\displaystyle{ 1200×\log_2(5) }[/math]]. So if the exact tuning of quarter-comma meantone is [math]\displaystyle{ G }[/math] = {[1 0 0⟩ [0 0 ¼⟩], then this can be expressed as an exact generator tuning map [math]\displaystyle{ 𝒈 }[/math] = ⟨[math]\displaystyle{ (1200×\log_2(2))(1) + (1200×\log_2(3))(0) + (1200×\log_2(5))(0) }[/math] [math]\displaystyle{ (1200×\log_2(2))(0) + (1200×\log_2(3))(0) + (1200×\log_2(5))(\frac14) }[/math]] = ⟨[math]\displaystyle{ 1200 }[/math] [math]\displaystyle{ \dfrac{1200×\log_2(5)}{4} }[/math]].
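As a small numerical sketch of the [math]\displaystyle{ 𝒈 = 𝒋G }[/math] arithmetic above, here is some standard-library Python. The function name `generator_tuning_map` is our own, not part of D&D's RTT library. Floating-point logarithms only approximate the exact values [math]\displaystyle{ 1200×\log_2(p) }[/math]; the point is that [math]\displaystyle{ G }[/math] itself is stored exactly, here using `fractions.Fraction`.

```python
import math
from fractions import Fraction

# 5-limit just tuning map j, in cents: 1200·log2(p) for the primes 2, 3, 5
j = [1200 * math.log2(p) for p in (2, 3, 5)]

# Quarter-comma meantone generator embedding G, stored exactly.
# Columns are generators: [1 0 0⟩ (= 2/1) and [0 0 1/4⟩ (= 5^(1/4)).
G = [
    [Fraction(1), Fraction(0)],
    [Fraction(0), Fraction(0)],
    [Fraction(0), Fraction(1, 4)],
]


def generator_tuning_map(j, G):
    """Return g = jG: for each column of G, the j-weighted sum of its prime counts."""
    rank = len(G[0])
    return [sum(j_p * float(row[i]) for j_p, row in zip(j, G)) for i in range(rank)]


g = generator_tuning_map(j, G)
print([round(x, 3) for x in g])  # [1200.0, 696.578]
```

The second entry is the floating-point approximation of the exact value [math]\displaystyle{ \frac{1200×\log_2(5)}{4} }[/math].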

Also note that any method which solves for [math]\displaystyle{ G }[/math] can also produce [math]\displaystyle{ 𝒈 }[/math] via this [math]\displaystyle{ 𝒋G }[/math] formula. But methods which solve directly for [math]\displaystyle{ 𝒈 }[/math] cannot provide a [math]\displaystyle{ G }[/math], even if a [math]\displaystyle{ G }[/math] could have been computed for the given type of optimization problem (such as minimax problems, which notably make up the majority of tuning optimizations used on the wiki). In a way, tuning maps are like a lossily compressed form of the information in embeddings.

Here's a breakdown of which computation methods solve directly for [math]\displaystyle{ 𝒈 }[/math], and which can solve for [math]\displaystyle{ G }[/math] instead:

| Optimization power | Method | Solution type | Solves for |
|---|---|---|---|
| [math]\displaystyle{ 2 }[/math] | Pseudoinverse | Exact | [math]\displaystyle{ G }[/math] |
| [math]\displaystyle{ 1 }[/math] | Zero-damage | Exact | [math]\displaystyle{ G }[/math] |
| [math]\displaystyle{ ∞ }[/math] | Coinciding-damage | Exact | [math]\displaystyle{ G }[/math] |
| General | Power / power limit | Approximate | [math]\displaystyle{ 𝒈 }[/math] |
| N/A | Only held-intervals | Exact | [math]\displaystyle{ G }[/math] |

The generator embedding

Roughly speaking, if [math]\displaystyle{ M }[/math] is the matrix which isolates the temperament information, and [math]\displaystyle{ 𝒋 }[/math] is the matrix which isolates the sizing information, then [math]\displaystyle{ G }[/math] is the matrix that isolates the tuning information. This is a matrix whose columns are prime-count vectors representing the generators of the temperament. For example, a Pythagorean tuning of meantone temperament would look like this:

[math]\displaystyle{

G =

\left[ \begin{array} {rrr}

1 & {-1} \\

0 & 1 \\

0 & 0 \\

\end{array} \right]

}[/math]

The first column is the vector [1 0 0⟩ representing [math]\displaystyle{ \frac21 }[/math], and the second column is the vector [-1 1 0⟩ representing [math]\displaystyle{ \frac32 }[/math]. So generator embeddings will always have the shape [math]\displaystyle{ (d, r) }[/math]: one row for each prime harmonic in the domain basis (the dimensionality), one column for each generator (the rank).

Pythagorean tuning is not a common tuning of meantone, however; it is an extreme enough tuning of that temperament that it should be considered unreasonable. We gave it as our first example anyway, to introduce the concept of generator embeddings more gently, because its prime-count vector columns are simple and familiar. In reality, most generator embeddings consist of prime-count vectors which do not have integer entries. Such prime-count vectors do not represent JI intervals, and are unlike any prime-count vectors we've worked with so far. For another example of a meantone tuning, then, one which is more common and reasonable, let's consider the quarter-comma tuning of meantone. Its generator embedding looks like this:

[math]\displaystyle{

G =

\left[ \begin{array} {rrr}

1 & 0 \\

0 & 0 \\

0 & \frac14 \\

\end{array} \right]

}[/math]
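To make the [math]\displaystyle{ (d, r) }[/math] shape concrete, the following standard-library Python sketch checks both example embeddings against the meantone mapping [⟨1 1 0] ⟨0 1 4]], which is the same mapping used later in this article. As an extra sanity check of our own, not a step from the derivation here, we'd expect [math]\displaystyle{ MG }[/math] to be the [math]\displaystyle{ (r, r) }[/math] identity, since each generator, once mapped, should come out as exactly one of itself. Multiplying by [math]\displaystyle{ 𝒋 }[/math] then gives each tuning's generator sizes.

```python
import math
from fractions import Fraction

j = [1200 * math.log2(p) for p in (2, 3, 5)]  # 5-limit just tuning map, in cents
M = [[1, 1, 0], [0, 1, 4]]                    # meantone mapping, shape (r, d) = (2, 3)

embeddings = {
    "Pythagorean": [[1, -1], [0, 1], [0, 0]],                # columns: 2/1 and 3/2
    "quarter-comma": [[1, 0], [0, 0], [0, Fraction(1, 4)]],  # columns: 2/1 and 5^(1/4)
}


def matmul(A, B):
    """Plain matrix product of nested lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


sizes = {}
for name, G in embeddings.items():
    assert (len(G), len(G[0])) == (3, 2)        # (d, r): one row per prime, one column per generator
    assert matmul(M, G) == [[1, 0], [0, 1]]     # each generator maps to one of itself
    sizes[name] = [round(x, 3) for x in matmul([j], G)[0]]  # g = jG, in cents

print(sizes)  # {'Pythagorean': [1200.0, 701.955], 'quarter-comma': [1200.0, 696.578]}
```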

Algebraic setup

The basic algebraic setup of tuning optimization looks like this:

[math]\displaystyle{

\textbf{d} = |\,𝒈M\mathrm{T}W - 𝒋\mathrm{T}W\,|

}[/math]

When we break [math]\displaystyle{ 𝒈 }[/math] down into [math]\displaystyle{ 𝒋 }[/math] and a [math]\displaystyle{ G }[/math] we're solving for, the algebraic setup of tuning optimization comes out like this:

[math]\displaystyle{

\textbf{d} = |\,𝒋GM\mathrm{T}W - 𝒋G_{\text{j}}M_{\text{j}}\mathrm{T}W\,|

}[/math]

We can factor things in both directions this time (and we'll take [math]\displaystyle{ 𝒋 }[/math] outside the absolute value bars since it's guaranteed to have no negative entries):

[math]\displaystyle{

\textbf{d} = 𝒋\,|\,(GM - G_{\text{j}}M_{\text{j}})\mathrm{T}W\,|

}[/math]

But wait—there are actually two more matrices we haven't recognized yet, on the just side of things. These are [math]\displaystyle{ G_{\text{j}} }[/math] and [math]\displaystyle{ M_{\text{j}} }[/math]. Unsurprisingly, these two are closely related to [math]\displaystyle{ G }[/math] and [math]\displaystyle{ M }[/math], respectively. The subscript [math]\displaystyle{ \text{j} }[/math] stands for "just intonation", so this is intended to indicate that these are the generators and mapping for JI.

Each of these two matrices is in fact simply [math]\displaystyle{ I }[/math], an identity matrix. So we can replace both of them with [math]\displaystyle{ I }[/math], on the way to eliminating them from our expression:

[math]\displaystyle{

\textbf{d} = 𝒋\,|\,(GM - II)\mathrm{T}W\,|

}[/math]

Which reduces to:

[math]\displaystyle{

\textbf{d} = 𝒋\,|\,(P - I)\mathrm{T}W\,|

}[/math]

Where [math]\displaystyle{ P }[/math] is the projection matrix found as [math]\displaystyle{ P = GM }[/math].

So why do we have [math]\displaystyle{ G_{\text{j}} }[/math] and [math]\displaystyle{ M_{\text{j}} }[/math] there at all? For maximal parallelism between the tempered side and the just side. In part this is a pragmatic decision, because as we work with these sorts of expressions moving forward, we'll prefer something rather than nothing in this position anyway. But there's also a pedagogical goal here, which is to convey how in JI, the mapping matrix and the generator embedding really are identity matrices, and it can be helpful to stay mindful of it.

You can imagine reading a [math]\displaystyle{ (3, 3) }[/math]-shaped identity matrix like a mapping matrix: how many generators does it take to approximate prime 2? One of the first generator, and nothing else. How many to approximate prime 3? One of the second generator, and nothing else. How many to approximate prime 5? One of the third generator, and nothing else. So this mapping is not much of a mapping at all. It shows us only that in this temperament, the first generator may as well be a perfect approximation of prime 2, the second generator may as well be a perfect approximation of prime 3, and the third generator may as well be a perfect approximation of prime 5. Any temperament which has as many generators as it has primes may as well be JI like this.

And then the fact that the generator embedding on the just side is also an identity matrix finishes the point. The vector for the first generator is [1 0 0⟩, a representation of the interval [math]\displaystyle{ \frac21 }[/math]; the vector for the second generator is [0 1 0⟩, a representation of the interval [math]\displaystyle{ \frac31 }[/math]; and the vector for the third generator is [0 0 1⟩, a representation of the interval [math]\displaystyle{ \frac51 }[/math].

We can even understand this in terms of a units analysis, where if [math]\displaystyle{ M_{\text{j}} }[/math] is taken to have units of g/p, and [math]\displaystyle{ G_{\text{j}} }[/math] is taken to have units of p/g, then together we find their units to be ... nothing. And an identity matrix that isn't even understood to have units is certainly useless, and can safely be eliminated. Though it's actually not as simple as the [math]\displaystyle{ \small \sf p }[/math]'s and [math]\displaystyle{ \small \sf g }[/math]'s canceling out; for more details, see here.

So when the interval vectors constituting the target-interval list [math]\displaystyle{ \mathrm{T} }[/math] are multiplied by [math]\displaystyle{ G_{\text{j}}M_{\text{j}} }[/math] they are unchanged, which means that multiplying the result by [math]\displaystyle{ 𝒋 }[/math] simply computes their just sizes.
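Here is a minimal standard-library Python sketch of the projection matrix [math]\displaystyle{ P = GM }[/math] for quarter-comma meantone, with [math]\displaystyle{ G }[/math] as given earlier and [math]\displaystyle{ M }[/math] = [⟨1 1 0] ⟨0 1 4]]. It checks that [math]\displaystyle{ P }[/math] is idempotent ([math]\displaystyle{ PP = P }[/math]), which is what makes it a projection, and computes the signed retuning of each prime, [math]\displaystyle{ 𝒋(P - I) = 𝒋GM - 𝒋 }[/math]. For simplicity, target-intervals are taken to be the primes, weights are left out, and no absolute value is applied.

```python
import math
from fractions import Fraction

j = [1200 * math.log2(p) for p in (2, 3, 5)]   # 5-limit just tuning map, in cents
M = [[1, 1, 0], [0, 1, 4]]                     # meantone mapping
G = [[1, 0], [0, 0], [0, Fraction(1, 4)]]      # quarter-comma meantone embedding
I = [[int(r == c) for c in range(3)] for r in range(3)]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


P = matmul(G, M)                  # (d, d)-shaped projection matrix P = GM
assert matmul(P, P) == P          # idempotent: projecting twice changes nothing

# Signed retuning of each prime: j(P - I), equivalently jGM - j
P_minus_I = [[p - i for p, i in zip(P_row, I_row)] for P_row, I_row in zip(P, I)]
retuning = [round(x, 3) for x in matmul([j], P_minus_I)[0]]
print(retuning)  # [0.0, -5.377, 0.0]: only prime 3 is tempered (flat)
```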

Deduplication

Between target-interval set and held-interval basis

Generally speaking, any held-interval that also appears in the target-interval set should be removed from the target-interval set. If these intervals are not removed, the correct tuning can still be computed; however, effort will be wasted during optimization on minimizing damage to these intervals, because their damage would have been held to 0 by other means anyway.

Of course, the deduplication itself has some cost, but in general it should be more computationally efficient to remove these intervals from the target-interval set in advance, rather than submit them to the optimization procedures as-is.

Duplication of intervals between these two sets will most likely occur when using a target-interval set scheme (such as a TILT or OLD) that automatically chooses the target-interval set.

Constant damage target-intervals

There is also a possibility, when holding intervals, that some target-intervals' damages will be constant everywhere within the tuning damage space to be searched, and thus these target-intervals will have no effect on the tuning. Their preservation in the target-interval set will only serve to slow down computation.

For example, in pajara temperament, with mapping [⟨2 3 5 6] ⟨0 1 -2 -2]}, if the octave is held unchanged, then there is no sense keeping [math]\displaystyle{ \frac75 }[/math] in the target-interval set. The octave [1 0 0 0⟩ maps to [2 0} in this temperament, and [math]\displaystyle{ \frac75 }[/math] [0 0 -1 1⟩ maps to [1 0}. So if the first generator is fixed in order to hold the octave unchanged, then ~[math]\displaystyle{ \frac75 }[/math]'s tuning will also be fixed.
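This claim can be checked mechanically by mapping each prime-count vector through the pajara mapping and looking at its generator counts. A small standard-library Python sketch, where the helper name `map_interval` is hypothetical:

```python
M_pajara = [[2, 3, 5, 6], [0, 1, -2, -2]]  # pajara mapping, 7-limit


def map_interval(M, v):
    """Map a prime-count vector v to its generator-count vector Mv."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


octave = [1, 0, 0, 0]        # 2/1
seven_five = [0, 0, -1, 1]   # 7/5

print(map_interval(M_pajara, octave))      # [2, 0]
print(map_interval(M_pajara, seven_five))  # [1, 0]
# Neither uses the second generator, so once the first generator is fixed
# (by holding the octave), ~7/5 is fixed too: it is exactly half an octave.
```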

Within target-interval set

We also note a potential for duplication within the target-interval set, irrespective of held-intervals: depending on the temperament, some target-intervals may map to the same tempered interval. For another pajara example, using the TILT as a target-interval set scheme, the target-interval set will contain [math]\displaystyle{ \frac{10}{7} }[/math] and [math]\displaystyle{ \frac75 }[/math], but pajara maps both of those intervals to [1 0}, and thus the damage to these two intervals will always be the same.

However, critically, this is only truly redundant information in the case of a minimax tuning scheme, where the optimization power [math]\displaystyle{ p = ∞ }[/math]. In this case, if the damage to [math]\displaystyle{ \frac75 }[/math] is the max, then it's irrelevant whether the damage to [math]\displaystyle{ \frac{10}{7} }[/math] is also the max. But with any other optimization power, the presence of both [math]\displaystyle{ \frac75 }[/math] and [math]\displaystyle{ \frac{10}{7} }[/math] in the target-interval set will have some effect. For example, with [math]\displaystyle{ p = 1 }[/math] (miniaverage tuning schemes), whatever the shared damage to this one mapped target-interval [1 0} may be, two of our target-intervals map to it, so we care about its damage twice as much, and it essentially gets counted twice in our average damage computation.

Should redundant mapped target-intervals be removed when computing minimax tuning schemes? It's a reasonable consideration, but the RTT Library in Wolfram Language does not do this. In general, it may add more complexity to the code than the benefit is worth: it requires minding the difference between the requested target-interval set count [math]\displaystyle{ k }[/math] and the count of deduped mapped target-intervals, which calls for a new variable.
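A sketch of how such duplicate mapped target-intervals might be detected: group target-intervals by their mapped generator-count vector. The four intervals below are a hand-picked sample for illustration, not the actual TILT for pajara, and `group_by_mapping` is a hypothetical helper name, not a function from D&D's RTT library. The last line shows the distinction between the requested count [math]\displaystyle{ k }[/math] and the deduped count.

```python
from collections import defaultdict

M_pajara = [[2, 3, 5, 6], [0, 1, -2, -2]]

# A hand-picked sample of 7-limit target-intervals, as prime-count vectors
targets = {
    "3/2": [-1, 1, 0, 0],
    "4/3": [2, -1, 0, 0],
    "7/5": [0, 0, -1, 1],
    "10/7": [1, 0, 1, -1],
}


def group_by_mapping(M, targets):
    """Group interval names by their mapped interval (generator-count vector)."""
    groups = defaultdict(list)
    for name, v in targets.items():
        mapped = tuple(sum(m * x for m, x in zip(row, v)) for row in M)
        groups[mapped].append(name)
    return dict(groups)


groups = group_by_mapping(M_pajara, targets)
duplicates = {mapped: names for mapped, names in groups.items() if len(names) > 1}
print(duplicates)                 # {(1, 0): ['7/5', '10/7']}
print(len(targets), len(groups))  # 4 3  (requested k vs. deduped count)
```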

Only held-intervals method

The only held-intervals method was mostly covered here: Dave Keenan & Douglas Blumeyer's guide to RTT/Tuning computation#Only held-intervals method. But there are a couple of adjustments we'll make to how we talk about it here.

Unchanged-interval basis

In D&D's guide, this method was discussed in terms of held-intervals, which are a trait of a tuning scheme, or in other words, a request that a person makes of a tuning optimization procedure which that procedure will then satisfy. But something interesting happens once we request enough intervals to be held unchanged: when our held-interval count [math]\displaystyle{ h }[/math] reaches our generator count, also known as the rank [math]\displaystyle{ r }[/math], we have no room left for optimization. At this point, the tuning is entirely determined by the held-intervals. And thus we get another, perhaps better, way to look at this interval basis: no longer as a request on a tuning scheme, but as a characteristic of a specific tuning itself. Under this conceptualization, what we have is not a held-interval basis [math]\displaystyle{ \mathrm{H} }[/math], but an unchanged-interval basis [math]\displaystyle{ \mathrm{U} }[/math].

Because in the majority of cases within this article it will be more appropriate to conceive of this basis as a characteristic of a fully-determined tuning, as opposed to a request of a tuning scheme, we will henceforth deal with this method in terms of [math]\displaystyle{ \mathrm{U} }[/math], not [math]\displaystyle{ \mathrm{H} }[/math].

Generator embedding

So, substituting [math]\displaystyle{ \mathrm{U} }[/math] in for [math]\displaystyle{ \mathrm{H} }[/math] in the formula we learned from the D&D's guide article:

[math]\displaystyle{

𝒈 = 𝒋\mathrm{U}(M\mathrm{U})^{-1}

}[/math]

This tells us that if we know the unchanged-interval basis for a tuning, i.e. every unchanged-interval in the form of a prime-count vector, then we can get our generators. But the next difference we want to look at here is this: the formula has bypassed the computation of [math]\displaystyle{ G }[/math]! We can expand [math]\displaystyle{ 𝒈 }[/math] to [math]\displaystyle{ 𝒋G }[/math]:

[math]\displaystyle{

𝒋G = 𝒋\mathrm{U}(M\mathrm{U})^{-1}

}[/math]

And cancel out:

[math]\displaystyle{

\cancel{𝒋}G = \cancel{𝒋}\mathrm{U}(M\mathrm{U})^{-1}

}[/math]

To find:

[math]\displaystyle{

G = \mathrm{U}(M\mathrm{U})^{-1}

}[/math]
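As a worked sketch of [math]\displaystyle{ G = \mathrm{U}(M\mathrm{U})^{-1} }[/math], take meantone with the unchanged-interval basis [math]\displaystyle{ \mathrm{U} }[/math] = {[1 0 0⟩ [-2 0 1⟩], i.e. [math]\displaystyle{ \frac21 }[/math] and [math]\displaystyle{ \frac54 }[/math]. The standard-library Python below uses exact `Fraction` arithmetic and a closed-form 2×2 inverse of our own, so it assumes a rank-2 temperament. It recovers exactly the quarter-comma generator embedding shown earlier, consistent with quarter-comma meantone's pure octaves and pure major thirds.

```python
from fractions import Fraction


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def inv2(A):
    """Closed-form inverse of a 2×2 matrix (rank-2 temperaments only)."""
    (a, b), (c, d) = A
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


M = [[1, 1, 0], [0, 1, 4]]   # meantone mapping
U = [[1, -2],                # unchanged-interval basis, as columns:
     [0, 0],                 #   [1 0 0⟩ = 2/1 and [-2 0 1⟩ = 5/4
     [0, 1]]

G = matmul(U, inv2(matmul(M, U)))   # G = U(MU)^-1
assert G == [[1, 0], [0, 0], [0, Fraction(1, 4)]]  # quarter-comma meantone
```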

Pseudoinverse method

Similarly, we can take the pseudoinverse formula as presented in Dave Keenan & Douglas Blumeyer's guide to RTT/Tuning computation#Pseudoinverse method, substitute [math]\displaystyle{ 𝒋G }[/math] for [math]\displaystyle{ 𝒈 }[/math], and cancel out:

[math]\displaystyle{

\begin{align}

𝒈 &= 𝒋\mathrm{T}W(M\mathrm{T}W)^{+} \\

𝒋G &= 𝒋\mathrm{T}W(M\mathrm{T}W)^{+} \\

\cancel{𝒋}G &= \cancel{𝒋}\mathrm{T}W(M\mathrm{T}W)^{+} \\

G &= \mathrm{T}W(M\mathrm{T}W)^{+} \\

\end{align}

}[/math]
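Here is a small standard-library Python sketch of [math]\displaystyle{ G = \mathrm{T}W(M\mathrm{T}W)^{+} }[/math]. It computes the pseudoinverse of the full-row-rank matrix [math]\displaystyle{ A = M\mathrm{T}W }[/math] as [math]\displaystyle{ A^\mathsf{T}(AA^\mathsf{T})^{-1} }[/math], the formula used in the next section. To stay short it assumes rank 2 (closed-form 2×2 inverse), and the function names are our own. It uses the simplest case [math]\displaystyle{ \mathrm{T} = W = I }[/math] for meantone, so [math]\displaystyle{ G = M^{+} }[/math]; the resulting [math]\displaystyle{ 𝒈 = 𝒋G }[/math] matches the ⟨1202.607 696.741] tuning quoted below.

```python
import math
from fractions import Fraction


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def inv2(A):
    """Closed-form inverse of a 2×2 matrix (assumes rank 2)."""
    (a, b), (c, d) = A
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


def pinv_full_row_rank(A):
    """Pseudoinverse A+ = A^T (A A^T)^-1, valid when A has full row rank."""
    At = transpose(A)
    return matmul(At, inv2(matmul(A, At)))


def generator_embedding(M, T, W):
    """G = T W (M T W)^+."""
    TW = matmul(T, W)
    return matmul(TW, pinv_full_row_rank(matmul(M, TW)))


M = [[1, 1, 0], [0, 1, 4]]                          # meantone
I3 = [[int(r == c) for c in range(3)] for r in range(3)]
G = generator_embedding(M, T=I3, W=I3)              # target-intervals = primes, unity weights
j = [1200 * math.log2(p) for p in (2, 3, 5)]
g = [round(x, 3) for x in matmul([j], G)[0]]        # g = jG
print(g)  # [1202.607, 696.741]
```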

Connection with the only held-intervals method

Note the similarity between the pseudoinverse formula [math]\displaystyle{ A^{+} = A^\mathsf{T}(AA^\mathsf{T})^{-1} }[/math] and the only held-intervals formula [math]\displaystyle{ G = \mathrm{U}(M\mathrm{U})^{-1} }[/math]; in fact, it's the same formula, if we simply substitute in [math]\displaystyle{ M^\mathsf{T} }[/math] for [math]\displaystyle{ \mathrm{U} }[/math], giving [math]\displaystyle{ G = M^\mathsf{T}(MM^\mathsf{T})^{-1} = M^{+} }[/math].

What this tells us is that for any tuning of a temperament where [math]\displaystyle{ G = M^{+} }[/math], the held-intervals are given by the transpose of the mapping, [math]\displaystyle{ M^\mathsf{T} }[/math]. (Historically this tuning scheme has been called "Frobenius", but we would call it "minimax-E-copfr-S".)

For example, in the [math]\displaystyle{ G = M^{+} }[/math] tuning of meantone temperament ⟨1202.607 696.741], with mapping [math]\displaystyle{ M }[/math] equal to:

[math]\displaystyle{

\left[ \begin{array} {rrr}

1 & 1 & 0 \\

0 & 1 & 4 \\

\end{array} \right]

}[/math]

The held-intervals are [math]\displaystyle{ M^\mathsf{T} }[/math]:

[math]\displaystyle{

\left[ \begin{array} {rr}

1 & 0 \\

1 & 1 \\

0 & 4 \\

\end{array} \right]

}[/math]

or in other words, the two held-intervals are [1 1 0⟩ and [0 1 4⟩, which as ratios are [math]\displaystyle{ \frac61 }[/math] and [math]\displaystyle{ \frac{1875}{1} }[/math], respectively. Those may seem like some pretty strange intervals to be unchanged, for sure, but there is a way to think about it that makes it seem less strange. This tells us that whatever the error is on [math]\displaystyle{ \frac21 }[/math], it is the negation of the error on [math]\displaystyle{ \frac31 }[/math], because when those intervals are combined, we get a pure [math]\displaystyle{ \frac61 }[/math]. This also tells us that whatever the error is on [math]\displaystyle{ \frac31 }[/math], it in turn is the negation of the error on [math]\displaystyle{ \frac{625}{1} = \frac{5^4}{1} }[/math].[note 1] Also, remember that these intervals form a basis for the held-intervals; any interval that is a linear combination of them is also unchanged.
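We can confirm this with exact arithmetic: with [math]\displaystyle{ G = M^{+} }[/math] and [math]\displaystyle{ P = GM }[/math], applying [math]\displaystyle{ P }[/math] to each column of [math]\displaystyle{ M^\mathsf{T} }[/math] should give it back unchanged, while [math]\displaystyle{ \frac31 }[/math] on its own should come back altered. A standard-library Python sketch, again with our own helpers and assuming a rank-2 mapping:

```python
from fractions import Fraction


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def inv2(A):
    (a, b), (c, d) = A
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


M = [[1, 1, 0], [0, 1, 4]]             # meantone
Mt = transpose(M)
G = matmul(Mt, inv2(matmul(M, Mt)))    # G = M+ = M^T (M M^T)^-1
P = matmul(G, M)                       # projection matrix


def project(v):
    """Apply P to a prime-count vector v (given as a flat list)."""
    return [sum(p * x for p, x in zip(row, v)) for row in P]


for held in ([1, 1, 0], [0, 1, 4]):    # 6/1 and 1875/1
    assert project(held) == held       # unchanged by this tuning

tempered_3 = project([0, 1, 0])        # 3/1 alone: [16/33, 17/33, 4/33], i.e. altered
assert tempered_3 != [0, 1, 0]
```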

As another example, the unchanged-interval of the primes miniRMS-U tuning of 12-ET would be [12 19 28⟩. Don't mistake that for the 12-ET map ⟨12 19 28]; that's the prime-count vector you get from transposing it! That interval, while rational and thus theoretically JI, could not be heard directly by humans, considering that [math]\displaystyle{ 2^{12}3^{19}5^{28} }[/math] is over 107 octaves above unison and would typically call for scientific notation to express; it's 128553.929 ¢, which is exactly 1289 ([math]\displaystyle{ = 12^2+19^2+28^2 }[/math]) iterations of the 99.732 ¢ generator for this tuning.
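The figures in that paragraph can be reproduced in a few lines. For this rank-1 case, with the unchanged interval taken as [math]\displaystyle{ M^\mathsf{T} }[/math], the single generator is [math]\displaystyle{ 𝒋M^\mathsf{T} }[/math] divided by the scalar [math]\displaystyle{ MM^\mathsf{T} }[/math]. Standard-library Python:

```python
import math

j = [1200 * math.log2(p) for p in (2, 3, 5)]   # 5-limit just tuning map, in cents
m = [12, 19, 28]                               # 12-ET map ⟨12 19 28]

unchanged_cents = sum(j_p * e for j_p, e in zip(j, m))   # size of [12 19 28⟩ in cents
MMt = sum(e * e for e in m)                              # 12² + 19² + 28²
generator = unchanged_cents / MMt                        # g = jM^T (MM^T)^-1

print(round(unchanged_cents, 3), MMt, round(generator, 3))  # 128553.929 1289 99.732
print(round(unchanged_cents / 1200, 2))                      # 107.13 (octaves)
```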

Example

Let's refer back to the example given in Dave Keenan & Douglas Blumeyer's guide to RTT/Tuning computation#Plugging back in, picking up from this point:

[math]\displaystyle{

\scriptsize

𝒈 =

\begin{array} {ccc}

𝒋 \\

\left[ \begin{array} {rrr}

1200.000 & 1901.955 & 2786.314 \\

\end{array} \right]

\end{array}

\begin{array} {ccc}

\mathrm{T}C \\

\left[ \begin{array} {r|r|r|r|r|r|r|r}

\;\;1.000 & \;\;\;0.000 & {-2.585} & 7.170 & {-3.322} & 0.000 & {-8.644} & 4.907 \\

0.000 & 1.585 & 2.585 & {-3.585} & 0.000 & {-3.907} & 0.000 & 4.907 \\

0.000 & 0.000 & 0.000 & 0.000 & 3.322 & 3.907 & 4.322 & {-4.907} \\

\end{array} \right]

\end{array}

\begin{array} {ccc}

(M\mathrm{T}C)^\mathsf{T} \\

\left[ \begin{array} {rrr}

1.000 & 0.000 \\ \hline

3.170 & {-4.755} \\ \hline

2.585 & {-7.755} \\ \hline

0.000 & 10.755 \\ \hline

6.644 & {-16.610} \\ \hline

3.907 & {-7.814} \\ \hline

4.322 & {-21.610} \\ \hline

0.000 & 9.814 \\

\end{array} \right]

\end{array}

\begin{array} {ccc}

(M\mathrm{T}C(M\mathrm{T}C)^\mathsf{T})^{-1} \\

\left[ \begin{array} {rrr}

0.0336 & 0.00824 \\

0.00824 & 0.00293 \\

\end{array} \right]

\end{array}

}[/math]

In the original article, we simply multiplied through the entire right half of this expression. But what if we stopped before multiplying in the [math]\displaystyle{ 𝒋 }[/math] part, instead?

[math]\displaystyle{

𝒈 =

\begin{array} {ccc}

𝒋 \\

\left[ \begin{array} {rrr}

1200.000 & 1901.955 & 2786.314 \\

\end{array} \right]

\end{array}

\begin{array} {ccc}

\mathrm{T}C(M\mathrm{T}C)^\mathsf{T}(M\mathrm{T}C(M\mathrm{T}C)^\mathsf{T})^{-1} \\

\left[ \begin{array} {rrr}

1.003 & 0.599 \\

{-0.016} & 0.007 \\

0.010 & {-0.204} \\

\end{array} \right]

\end{array}

}[/math]

The matrices with shapes [math]\displaystyle{ (3, 8)(8, 2)(2, 2) }[/math] led us to a [math]\displaystyle{ (3, \cancel{8})(\cancel{8}, \cancel{2})(\cancel{2}, 2) = (3, 2) }[/math]-shaped matrix, and that's just what we want in a [math]\displaystyle{ G }[/math] here. Specifically, we want a [math]\displaystyle{ (d, r) }[/math]-shaped matrix, one that will convert [math]\displaystyle{ (r, 1) }[/math]-shaped generator-count vectors—those that are results of mapping [math]\displaystyle{ (d, 1) }[/math]-shaped prime-count vectors by the temperament mapping matrix—back into [math]\displaystyle{ (d, 1) }[/math]-shaped prime-count vectors, but now representing the intervals as they sound under this tuning of this temperament.

And so we've found what we were looking for, [math]\displaystyle{ G = \mathrm{T}C(M\mathrm{T}C)^\mathsf{T}(M\mathrm{T}C(M\mathrm{T}C)^\mathsf{T})^{-1} }[/math].

At first glance, this might seem surprising or crazy: we find ourselves looking at musical intervals described by raising prime harmonics to non-integer powers. But they do, in fact, work out to reasonable interval sizes. Let's check by actually working these generators out through their decimal powers.

This generator embedding [math]\displaystyle{ G }[/math] is telling us that the tuning of our first generator may be represented by the prime-count vector [1.003 -0.016 0.010⟩, or in other words, it's the interval [math]\displaystyle{ 2^{1.003}3^{-0.016}5^{0.010} }[/math], which is equal to [math]\displaystyle{ 2.00018 }[/math], or 1200.159 ¢. As for the second generator, we find that [math]\displaystyle{ 2^{0.599}3^{0.007}5^{-0.204} = 1.0985 }[/math], or 162.664 ¢. (The entries of [math]\displaystyle{ G }[/math] shown here are rounded to three decimal places, so plugging in these rounded values only approximately reproduces these sizes; the sizes given come from the unrounded entries.) By checking the porcupine article we can see that these are both reasonable generator sizes.

What we've just worked out with this sanity check is our generator tuning map, [math]\displaystyle{ 𝒈 }[/math]. In general we can find these by left-multiplying the generators [math]\displaystyle{ G }[/math] by [math]\displaystyle{ 𝒋 }[/math]:

[math]\displaystyle{

\begin{array} {ccc}

\begin{array} {ccc}

𝒋 \\

\left[ \begin{array} {rrr}

1200.000 & 1901.955 & 2786.314 \\

\end{array} \right]

\end{array}

\begin{array} {ccc}

G \\

\left[ \begin{array} {rrr}

1.003 & 0.599 \\

{-0.016} & 0.007 \\

0.010 & {-0.204} \\

\end{array} \right]

\end{array}

=

\begin{array} {ccc}

𝒈 \\

\left[ \begin{array} {rrr}

1200.159 & 162.664 \\

\end{array} \right]

\end{array}

\end{array}

}[/math]
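For readers who want to reproduce this porcupine example numerically, here is a floating-point sketch in standard-library Python. Two inputs are our own reconstruction rather than stated in this section: the eight target-intervals 2/1, 3/1, 3/2, 4/3, 5/2, 5/3, 5/4, 6/5 and the complexity weights [math]\displaystyle{ \log_2(n·d) }[/math] are read off the columns of [math]\displaystyle{ \mathrm{T}C }[/math] shown above, and porcupine's mapping is taken to be [⟨1 2 3] ⟨0 -3 -5]], which reproduces the [math]\displaystyle{ (M\mathrm{T}C)^\mathsf{T} }[/math] shown above. Because it works from unrounded values, its output should agree with [math]\displaystyle{ 𝒈 }[/math] above to within display rounding.

```python
import math


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def inv2(A):
    """Closed-form inverse of a 2×2 matrix (assumes rank 2)."""
    (a, b), (c, d) = A
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


j = [[1200 * math.log2(p) for p in (2, 3, 5)]]   # (1, d) just tuning map
M = [[1, 2, 3], [0, -3, -5]]                     # porcupine mapping (assumed)

# Target-intervals as (numerator, denominator, prime-count vector)
targets = [(2, 1, [1, 0, 0]), (3, 1, [0, 1, 0]), (3, 2, [-1, 1, 0]), (4, 3, [2, -1, 0]),
           (5, 2, [-1, 0, 1]), (5, 3, [0, -1, 1]), (5, 4, [-2, 0, 1]), (6, 5, [1, 1, -1])]
k = len(targets)

T = transpose([v for _, _, v in targets])        # (d, k) target-interval list
C = [[math.log2(num * den) if r == c else 0.0    # (k, k) diagonal complexity matrix
      for c, (num, den, _) in enumerate(targets)] for r in range(k)]

TC = matmul(T, C)
A = matmul(M, TC)                                # MTC, shape (r, k)
G = matmul(matmul(TC, transpose(A)), inv2(matmul(A, transpose(A))))  # (d, r)
g = matmul(j, G)[0]                              # g = jG
print([round(x, 3) for x in g])
```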

Pseudoinverse: The "how"

Here we will investigate how, mechanically speaking, the pseudoinverse almost magically takes us straight to that answer we want.

Like an inverse

As you might suppose, given a name like pseudoinverse, this thing is like a normal matrix inverse, but not exactly. True inverses are only defined for square matrices, so the pseudoinverse is essentially a way to make something similar available for non-square (i.e. rectangular) matrices. This is useful for RTT because the [math]\displaystyle{ M\mathrm{T}W }[/math] matrices we use it on are usually rectangular; they are always [math]\displaystyle{ (r, k) }[/math]-shaped matrices.

But why would we want to take the inverse of [math]\displaystyle{ M\mathrm{T}W }[/math] in the first place? To understand this, it will help to first simplify the problem.

- Our first simplification will be to use unity-weight damage, meaning that the weight on each of the target-intervals is the same, and may as well be 1. This makes our weight matrix [math]\displaystyle{ W }[/math] a matrix of all zeros with 1's running down the main diagonal, or in other words, it makes [math]\displaystyle{ W = I }[/math]. So we can eliminate it.

- Our second simplification is to consider the case where the target-interval set [math]\displaystyle{ \mathrm{T} }[/math] is the primes. This makes [math]\displaystyle{ \mathrm{T} }[/math] also equal to [math]\displaystyle{ I }[/math], so we can eliminate it as well.

At this point we're left with simply [math]\displaystyle{ M }[/math]. And this is still a rectangular matrix; it's [math]\displaystyle{ (r, d) }[/math]-shaped. So if we want to invert it, we'll only be able to pseudoinvert it. But we're still in the dark about why we would ever want to invert it.

To finally get to understanding why, let's look at an expression from the basic algebraic setup:

[math]\displaystyle{

GM \approx G_{\text{j}}M_{\text{j}}

}[/math]

This expression captures the idea that a tuning based on [math]\displaystyle{ G }[/math] of a temperament [math]\displaystyle{ M }[/math] (the left side of this) is intended to approximate just intonation, where both [math]\displaystyle{ G_{\text{j}} = I }[/math] and [math]\displaystyle{ M_{\text{j}} = I }[/math] (the right side of this).

So given some mapping [math]\displaystyle{ M }[/math], which [math]\displaystyle{ G }[/math] makes that happen? Well, based on the above, it should be the inverse of [math]\displaystyle{ M }[/math]! That's because anything times its own inverse equals an identity, i.e. [math]\displaystyle{ M^{-1}M = I }[/math].

Definition of inverse

Multiplying by something to give an identity is, in fact, the very definition of "inverse". To illustrate, here's an example of a true inverse, in the case of [math]\displaystyle{ (2, 2) }[/math]-shaped matrices:

[math]\displaystyle{

\begin{array} {c}

A^{-1} \\

\left[ \begin{array} {rrr}

1 & \frac23 \\

0 & {-\frac13} \\

\end{array} \right]

\end{array}

\begin{array} {c}

A \\

\left[ \begin{array} {rrr}

1 & 2 \\

0 & {-3} \\

\end{array} \right]

\end{array}

\begin{array} {c} \\ = \end{array}

\begin{array} {c}

I \\

\left[ \begin{array} {rrr}

1 & 0 \\

0 & 1 \\

\end{array} \right]

\end{array}

}[/math]

So the point is, if we could plug [math]\displaystyle{ M^{-1} }[/math] in for [math]\displaystyle{ G }[/math] here, we'd get a reasonable approximation of just intonation, i.e. an identity matrix [math]\displaystyle{ I }[/math].

But the problem is, as we know already, that [math]\displaystyle{ M^{-1} }[/math] doesn't exist, because [math]\displaystyle{ M }[/math] is a rectangular matrix. That's why we use its pseudoinverse [math]\displaystyle{ M^{+} }[/math] instead. Or to be absolutely clear, we choose our generator embedding [math]\displaystyle{ G }[/math] to be [math]\displaystyle{ M^{+} }[/math].
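The 2×2 inverse example above can be checked with exact fractions. This standard-library Python sketch also confirms the point, made in the next section, that for a square matrix the multiplication order doesn't matter. The closed-form `inv2` helper is our own, not a library function.

```python
from fractions import Fraction


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def inv2(A):
    """Closed-form 2×2 inverse: swap the diagonal, negate the off-diagonal, divide by det."""
    (a, b), (c, d) = A
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


A = [[1, 2], [0, -3]]
A_inv = inv2(A)
assert A_inv == [[1, Fraction(2, 3)], [0, Fraction(-1, 3)]]
assert matmul(A_inv, A) == [[1, 0], [0, 1]]   # A^-1 A = I
assert matmul(A, A_inv) == [[1, 0], [0, 1]]   # A A^-1 = I too: order doesn't matter
```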

Sometimes an inverse

Now to be completely accurate, when we multiply a rectangular matrix by its pseudoinverse, we can also get an identity matrix, but only if we do it a certain way. (And this fact that we can get an identity matrix at all is a critical example of the way how the pseudoinverse provides inverse-like powers for rectangular matrices.) But there are still a few key differences between this situation and the situation of a square matrix and its true inverse:

- The first big difference is that in the case of square matrices, as we saw a moment ago, all the matrices have the same shape. However, for a non-square (rectangular) matrix with shape [math]\displaystyle{ (m, n) }[/math], it will have a pseudoinverse with shape [math]\displaystyle{ (n, m) }[/math]. This difference perhaps could have gone without saying.

- The second big difference is that in the case of square matrices, the multiplication order is irrelevant: you can either left-multiply the original matrix by its inverse or right-multiply it, and either way, you'll get the same identity matrix. But there's no way you could get the same identity matrix in the case of a rectangular matrix and its pseudoinverse; an [math]\displaystyle{ (m, n) }[/math]-shaped matrix times an [math]\displaystyle{ (n, m) }[/math]-shaped matrix gives an [math]\displaystyle{ (m, m) }[/math]-shaped matrix, while an [math]\displaystyle{ (n, m) }[/math]-shaped matrix times an [math]\displaystyle{ (m, n) }[/math]-shaped matrix gives an [math]\displaystyle{ (n, n) }[/math]-shaped matrix (the width of the left matrix must always match the height of the right matrix, and the result always takes its height from the left matrix and its width from the right matrix). So: either way we will get a square matrix, but one way we get an [math]\displaystyle{ (m, m) }[/math] shape, and the other way we get an [math]\displaystyle{ (n, n) }[/math] shape.

- The third big difference (and this is probably the most important one, but we had to build up to it by looking at the other two big differences first) is that only one of those two possible results of multiplying a rectangular matrix by its pseudoinverse will actually give an identity matrix! It will be the one that gives the smaller square matrix (assuming, as is the case for our mappings, that the rectangular matrix has full rank).

Example of when the pseudoinverse behaves like a true inverse

Here's an example with meantone temperament as [math]\displaystyle{ M }[/math]. Its pseudoinverse [math]\displaystyle{ M^{+} = M^\mathsf{T}(MM^\mathsf{T})^{-1} }[/math] is {[17 16 -4⟩ [16 17 4⟩]/33. First, we'll look at the multiplication order that gives an identity matrix, where the [math]\displaystyle{ (2, 3) }[/math]-shaped rectangular matrix, right-multiplied by its [math]\displaystyle{ (3, 2) }[/math]-shaped rectangular pseudoinverse, gives a [math]\displaystyle{ (2, 2) }[/math]-shaped square identity matrix:

[math]\displaystyle{

\begin{array} {c}

M \\

\left[ \begin{array} {r}

1 & 0 & {-4} \\

0 & 1 & 4 \\

\end{array} \right]

\end{array}

\begin{array} {c}

M^{+} \\

\left[ \begin{array} {c}

\frac{17}{33} & \frac{16}{33} \\

\frac{16}{33} & \frac{17}{33} \\

{-\frac{4}{33}} & \frac{4}{33} \\

\end{array} \right]

\end{array}

\begin{array} {c} \\ = \end{array}

\begin{array} {c}

I \\

\left[ \begin{array} {rrr}

1 & 0 \\

0 & 1 \\

\end{array} \right]

\end{array}

}[/math]
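The product above can be reproduced exactly with Python's standard `fractions` module. This sketch takes the meantone mapping and the pseudoinverse entries stated above as given and only checks the multiplication; `matmul` is a small helper of our own.

```python
from fractions import Fraction as F

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[r][k] * Y[k][c] for k in range(len(Y)))
             for c in range(len(Y[0]))]
            for r in range(len(X))]

# Meantone mapping M, shape (2, 3).
M = [[1, 0, -4],
     [0, 1, 4]]

# Its pseudoinverse M+, shape (3, 2), as stated in the text: {[17 16 -4> [16 17 4>]/33.
M_plus = [[F(17, 33), F(16, 33)],
          [F(16, 33), F(17, 33)],
          [F(-4, 33), F(4, 33)]]

product = matmul(M, M_plus)          # (2, 3) times (3, 2) gives (2, 2)
print(product == [[1, 0], [0, 1]])   # True
```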

Let's give an RTT way to interpret this first result. Basically it tells us that [math]\displaystyle{ M^{+} }[/math] might be a reasonable generator embedding [math]\displaystyle{ G }[/math] for this temperament. First of all, let's note that [math]\displaystyle{ M }[/math] was not specifically designed to handle non-JI intervals like those represented by the prime-count vector columns of [math]\displaystyle{ M^{+} }[/math], like we are making it do here. But we can get away with it anyway. And in this case, [math]\displaystyle{ M }[/math] maps the first column of [math]\displaystyle{ M^{+} }[/math] to the generator-count vector [1 0}, and its second column to the generator-count vector [0 1}; we can find these two vectors as the columns of the identity matrix [math]\displaystyle{ I }[/math].

Now, one fact we can take from this is that the first column of [math]\displaystyle{ M^{+} }[/math], the non-JI vector [[math]\displaystyle{ \frac{17}{33} }[/math] [math]\displaystyle{ \frac{16}{33} }[/math] [math]\displaystyle{ \frac{-4}{33} }[/math]⟩, shares at least one thing in common with JI intervals such as [math]\displaystyle{ \frac21 }[/math] [1 0 0⟩, [math]\displaystyle{ \frac{81}{40} }[/math] [-3 4 -1⟩, and [math]\displaystyle{ \frac{160}{81} }[/math] [5 -4 1⟩: they all get mapped to [1 0} by this meantone mapping matrix [math]\displaystyle{ M }[/math]. Note that this is no guarantee that [[math]\displaystyle{ \frac{17}{33} }[/math] [math]\displaystyle{ \frac{16}{33} }[/math] [math]\displaystyle{ \frac{-4}{33} }[/math]⟩ is close to these intervals in size (in theory, we can add or subtract an indefinite number of temperament commas from an interval without altering what it maps to!), but it at least suggests that it's reasonably close to them, i.e. that it's about an octave in size.

And a similar statement can be made about the second column vector of [math]\displaystyle{ M^{+} }[/math], [[math]\displaystyle{ \frac{16}{33} }[/math] [math]\displaystyle{ \frac{17}{33} }[/math] [math]\displaystyle{ \frac{4}{33} }[/math]⟩, with respect to [math]\displaystyle{ \frac31 }[/math] [0 1 0⟩ and [math]\displaystyle{ \frac{80}{27} }[/math] [4 -3 1⟩, etc.: they all map to [0 1}, and so [[math]\displaystyle{ \frac{16}{33} }[/math] [math]\displaystyle{ \frac{17}{33} }[/math] [math]\displaystyle{ \frac{4}{33} }[/math]⟩ is probably about a perfect twelfth in size like the rest of them.

(In this case, both likelihoods are indeed true: our two tuned generators are 1202.607 ¢ and 1899.348 ¢ in size. Subtracting the first from the second gives the tempered fifth, 696.741 ¢.)
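To check the generator sizes, each column of [math]\displaystyle{ M^{+} }[/math] can be treated as a (non-JI) prime-count vector and tuned with the just tuning map [math]\displaystyle{ 𝒋 }[/math], i.e. we compute [math]\displaystyle{ 𝒋M^{+} }[/math]. A minimal sketch, assuming [math]\displaystyle{ 𝒋 }[/math] is built from [math]\displaystyle{ 1200\log_2 p }[/math] for the primes 2, 3, and 5:

```python
import math

# Just tuning map j: size in cents of each prime 2, 3, 5.
j = [1200 * math.log2(p) for p in (2, 3, 5)]

# Columns of the meantone pseudoinverse M+, as prime-count vectors (in 33rds).
columns = [[17, 16, -4],   # the generator that maps to [1 0}
           [16, 17, 4]]    # the generator that maps to [0 1}

# Tune each column: dot product with j, then divide by 33.
generators = [sum(jp * c for jp, c in zip(j, col)) / 33 for col in columns]
print([round(g, 3) for g in generators])  # [1202.607, 1899.348]

# The second generator is about a twelfth; minus the octave-ish one, a fifth.
print(round(generators[1] - generators[0], 3))  # 696.741
```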

Example of when the pseudoinverse does not behave like a true inverse

Before we get to why [math]\displaystyle{ M^{+} }[/math] is a good choice, we should finish what we've got going here, and show for contrast what happens when we flip-flop [math]\displaystyle{ M }[/math] and [math]\displaystyle{ M^{+} }[/math], so that the [math]\displaystyle{ (3, 2) }[/math]-shaped rectangular pseudoinverse times the original [math]\displaystyle{ (2, 3) }[/math]-shaped rectangular matrix leads to a [math]\displaystyle{ (3, 3) }[/math]-shaped matrix which is not an identity matrix:

[math]\displaystyle{

\begin{array} {c}

M^{+} \\

\left[ \begin{array} {c}

\frac{17}{33} & \frac{16}{33} \\

\frac{16}{33} & \frac{17}{33} \\

-{\frac{4}{33}} & \frac{4}{33} \\

\end{array} \right]

\end{array}

\begin{array} {c}

M \\

\left[ \begin{array} {r}

1 & 0 & {-4} \\

0 & 1 & 4 \\

\end{array} \right]

\end{array}

\begin{array} {c} \\ = \end{array}

\begin{array} {c}

M^{+}M \\

\left[ \begin{array} {c}

\frac{17}{33} & \frac{16}{33} & {-\frac{4}{33}} \\

\frac{16}{33} & \frac{17}{33} & \frac{4}{33} \\

{-\frac{4}{33}} & \frac{4}{33} & \frac{32}{33} \\

\end{array} \right]

\end{array}

}[/math]

This matrix [math]\displaystyle{ M^{+}M }[/math] clearly isn't an identity matrix: it's not all zeros except for ones running along its main diagonal, and judging only by the numbers we can read off its entries, it doesn't look much like an identity matrix at all. Behavior-wise, though, it turns out that this matrix is as "close" to an identity matrix as we can get, at least in a certain sense. And since our goal with tuning this temperament was to approximate JI as closely as possible, from this mathematical perspective, this is the matrix that accomplishes that. But again, we'll get to why exactly this matrix is the one that accomplishes that in a little bit.
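One sense in which [math]\displaystyle{ M^{+}M }[/math] behaves like an identity can be checked with exact arithmetic: it is not the identity, but mapping its output with [math]\displaystyle{ M }[/math] gives the same result as mapping the input directly, because [math]\displaystyle{ M(M^{+}M) = (MM^{+})M = M }[/math]. Applying it twice also changes nothing further. This sketch reuses the entries stated above; `matmul` is our own helper, and it illustrates these two properties only, not the full optimality argument.

```python
from fractions import Fraction as F

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[r][k] * Y[k][c] for k in range(len(Y)))
             for c in range(len(Y[0]))]
            for r in range(len(X))]

M = [[1, 0, -4],
     [0, 1, 4]]
M_plus = [[F(17, 33), F(16, 33)],
          [F(16, 33), F(17, 33)],
          [F(-4, 33), F(4, 33)]]

P = matmul(M_plus, M)                      # shape (3, 3)
identity3 = [[1 if r == c else 0 for c in range(3)] for r in range(3)]

print(P == identity3)       # False: not an identity matrix
print(matmul(M, P) == M)    # True: M cannot tell P apart from I
print(matmul(P, P) == P)    # True: applying P twice equals applying it once
```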

Un-simplifying

First, to show how we can un-simplify things. The insight leading to this choice of [math]\displaystyle{ G = M^{+} }[/math] was made under the simplifying circumstances of [math]\displaystyle{ W = I }[/math] (unity-weight damage) and [math]\displaystyle{ \mathrm{T} = \mathrm{T}_{\text{p}} = I }[/math] (primes as target-intervals). But nothing about those choices of [math]\displaystyle{ W }[/math] or [math]\displaystyle{ \mathrm{T} }[/math] affects how this method works; setting them to [math]\displaystyle{ I }[/math] was only to help us humans see the way forward. There's nothing stopping us now from using any other weights and target-intervals for [math]\displaystyle{ W }[/math] and [math]\displaystyle{ \mathrm{T} }[/math]; the concept behind this method holds. Choosing [math]\displaystyle{ G = \mathrm{T}W(M\mathrm{T}W)^{+} }[/math], that is, still finds for us the [math]\displaystyle{ p = 2 }[/math] optimization for the problem.
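The generalized formula can be sketched in plain Python. This is a minimal illustration, not the RTT library's implementation: the helper names (`inverse`, `pinv`, `generator_embedding`, `diag`) are our own, the pseudoinverse is computed as [math]\displaystyle{ A^\mathsf{T}(AA^\mathsf{T})^{-1} }[/math] (which assumes [math]\displaystyle{ M\mathrm{T}W }[/math] has full row rank), and the otonal-triad target-intervals with weights [math]\displaystyle{ \log_2(n·d) }[/math] are chosen here only for illustration. Whatever [math]\displaystyle{ \mathrm{T} }[/math] and [math]\displaystyle{ W }[/math] are, [math]\displaystyle{ MG }[/math] comes out as an identity matrix, since [math]\displaystyle{ M\mathrm{T}W(M\mathrm{T}W)^{+} = I }[/math].

```python
import math

def transpose(X):
    return [list(row) for row in zip(*X)]

def matmul(X, Y):
    return [[sum(X[r][k] * Y[k][c] for k in range(len(Y)))
             for c in range(len(Y[0]))]
            for r in range(len(X))]

def inverse(X):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(X)
    aug = [[float(v) for v in row] + [1.0 if r == k else 0.0 for k in range(n)]
           for r, row in enumerate(X)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def pinv(A):
    """A^T (A A^T)^-1: the pseudoinverse of a matrix with full row rank."""
    At = transpose(A)
    return matmul(At, inverse(matmul(A, At)))

def generator_embedding(M, T, W):
    """G = T W (M T W)^+ for mapping M, target-interval list T, weight matrix W."""
    TW = matmul(T, W)
    return matmul(TW, pinv(matmul(M, TW)))

def diag(values):
    return [[v if r == c else 0.0 for c in range(len(values))]
            for r, v in enumerate(values)]

M = [[1, 0, -4], [0, 1, 4]]                              # meantone
T = [[-2, 1, -1], [0, 1, 1], [1, -1, 0]]                 # columns: 5/4, 6/5, 3/2
W = diag([math.log2(20), math.log2(30), math.log2(6)])   # illustrative weights
just = [[1200 * math.log2(p) for p in (2, 3, 5)]]        # just tuning map

G = generator_embedding(M, T, W)
print(matmul(M, G))     # approximately the (2, 2) identity
print(matmul(just, G))  # generator tuning map g = jG, in cents
```

With [math]\displaystyle{ \mathrm{T} = W = I }[/math], the same function reduces to plain [math]\displaystyle{ M^{+} }[/math].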

Demystifying the formula

One way to think about what's happening in the formula of the pseudoinverse uses a technique we might call the "transform-act-antitransform technique": we want to take some action, but we can't do it in the current state, so we transform into a state where we can, then we take the action, and we finish off by performing the opposite of the initial transformation so that we get back to more of a similar state to the one we began with, yet having accomplished the action we intended.

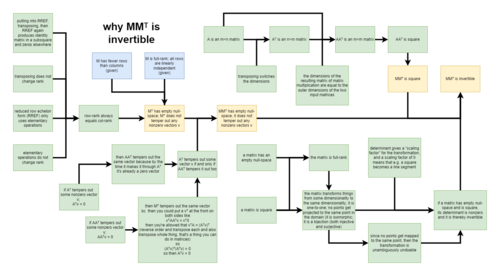

In the case of the pseudoinverse, the action we want to take is inverting a matrix. But we can't exactly invert it, because [math]\displaystyle{ A }[/math] is rectangular (to understand why, you can review the inversion process in the article on matrix inversion by hand). We happen to know that a matrix times its transpose is invertible, though, at least when the matrix has full row rank, as our mappings do (more on that in a moment), so:

- Multiplying by the matrix's transpose, finding [math]\displaystyle{ AA^\mathsf{T} }[/math], becomes our "transform" step.

- Then we invert like we wanted to do originally, so that's the "act" step: [math]\displaystyle{ (AA^\mathsf{T})^{-1} }[/math].

- Finally, we might think that we should multiply by the inverse of the matrix's transpose in order to undo our initial transformation step; however, we actually simply repeat the same thing, that is, we multiply by the transpose again! This is because we've put the matrix into an inverted state, so actually multiplying by the original's transpose here is essentially the opposite transformation. So that's the whole formula, then: [math]\displaystyle{ A^\mathsf{T}(AA^\mathsf{T})^{-1} }[/math].
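The three steps can be traced with exact fractions for a matrix with two rows, where the "act" step can use the closed-form [math]\displaystyle{ (2, 2) }[/math] inverse. This is a sketch with our own helper names; it reproduces the meantone pseudoinverse from earlier, and a matrix whose rows are dependent shows why the formula needs full row rank.

```python
from fractions import Fraction as F

def transpose(X):
    return [list(row) for row in zip(*X)]

def matmul(X, Y):
    return [[sum(X[r][k] * Y[k][c] for k in range(len(Y)))
             for c in range(len(Y[0]))]
            for r in range(len(X))]

def inverse_2x2(X):
    """Exact inverse of a (2, 2) matrix via the adjugate formula."""
    (a, b), (c, d) = X
    det = F(a * d - b * c)
    if det == 0:
        raise ZeroDivisionError("determinant is 0, so A A^T has no inverse")
    return [[d / det, -b / det], [-c / det, a / det]]

def pseudoinverse_two_rows(A):
    """A^T (A A^T)^-1 for a matrix A with two rows."""
    At = transpose(A)
    transformed = matmul(A, At)        # transform: A A^T is square, shape (2, 2)
    acted = inverse_2x2(transformed)   # act: invert the square matrix
    return matmul(At, acted)           # antitransform: multiply by A^T again

M = [[1, 0, -4], [0, 1, 4]]            # meantone
print(pseudoinverse_two_rows(M))
# [[Fraction(17, 33), Fraction(16, 33)], [Fraction(16, 33), Fraction(17, 33)], [Fraction(-4, 33), Fraction(4, 33)]]

# If the rows are dependent (here the second is twice the first),
# A A^T is singular and this formula breaks down.
dependent = [[1, 2, 3], [2, 4, 6]]
print(matmul(dependent, transpose(dependent)))  # [[14, 28], [28, 56]]
try:
    pseudoinverse_two_rows(dependent)
except ZeroDivisionError as err:
    print("no pseudoinverse from this formula:", err)
```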

Now, as for why we know a matrix times its own transpose is invertible (given full row rank): there's a ton of little linear algebra facts that all converge to guarantee that this is so. Please consider the following diagram, which lays out all these facts at once.

Pseudoinverse: The "why"

In the previous section we took a look at how, mechanically, the pseudoinverse gives the solution for optimization power [math]\displaystyle{ p = 2 }[/math]. As for why, conceptually speaking, the pseudoinverse gives us the minimum point for the RMS graph in tuning damage space, it's sort of just one of those seemingly miraculously useful mathematical results. But we can try to give a basic explanation here.

Derivative, for slope

First, let's briefly go over some math facts. For some readers, these will be review:

- The slope of a graph means its rate of change. When slope is positive, the graph is going up, and when negative, it's going down.

- Wherever a smooth graph has a local minimum or maximum, the slope is 0. That's because that's the point where it changes direction, between going up and going down.

- We can find the slope at every point of a graph by taking its derivative.

So, considering that we want to find the minimum of a graph, one approach is to find the derivative of this graph, then find the point(s) where its value is 0, which is where the slope is 0. Those are the only possible points where we have a local minimum, and therefore the only candidates for the global minimum, which is what we're after.

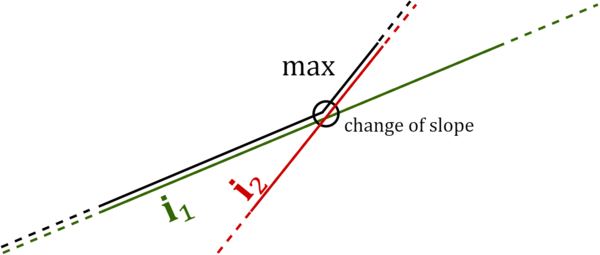

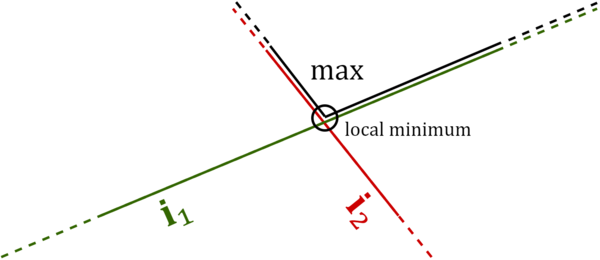

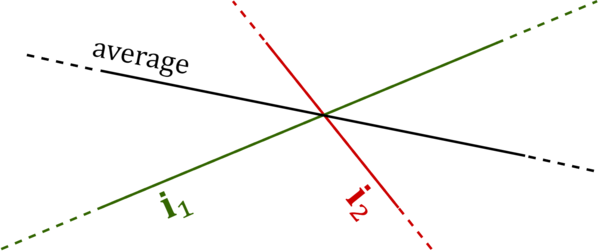

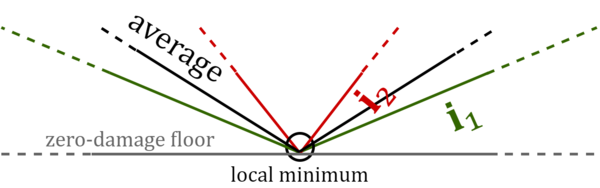

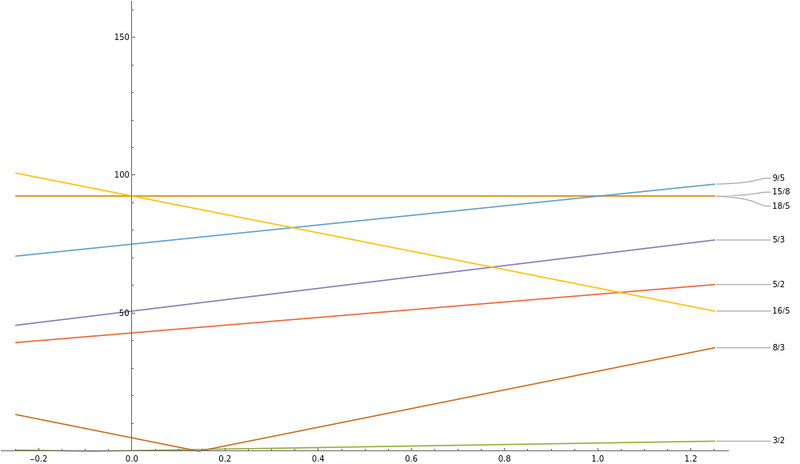

A unique minimum

As discussed in the tuning fundamentals article (in the section Non-unique tunings – power continuum), the graphs of mean damage and max damage (which are equivalent to the power means with powers [math]\displaystyle{ p = 1 }[/math] and [math]\displaystyle{ p = ∞ }[/math], respectively) consist of straight line segments connected by sharp corners, while all other optimization powers between [math]\displaystyle{ 1 }[/math] and [math]\displaystyle{ ∞ }[/math] form smooth curves. This is important because it is only for graphs with smooth curves that we can use the derivative to find the minimum point; the sharp corners of the other type of graph are points where the slope is undefined, because it jumps abruptly from one value to another there. The simple mathematical methods we use to find slope for smooth graphs get confused and crash or give wrong results if we try to use them on these types of graphs.

So we can use the derivative slope technique for other powers [math]\displaystyle{ 1 \lt p \lt ∞ }[/math], but the pseudoinverse will only match the solution when [math]\displaystyle{ p = 2 }[/math].

And, spoiler alert: another key thing that's true about the [math]\displaystyle{ 2 }[/math]-mean graph whose minimum point we seek is that it has only one point where the slope is equal to 0, and it's our global minimum. This is true of any of our curved [math]\displaystyle{ p }[/math]-mean graphs, but we only really care about it in the case of [math]\displaystyle{ p = 2 }[/math].

A toy example using the derivative

To get our feet on solid ground, let's just work through the math for an equal temperament example, i.e. one with only a single generator.

Kicking off with the setup discussed here, we have:

[math]\displaystyle{

\textbf{d} = 𝒋\,|\,(GM - G_{\text{j}}M_{\text{j}})\mathrm{T}W\,|

}[/math]

Let's rewrite this a tad, using the fact that [math]\displaystyle{ 𝒋G }[/math] is our generator tuning map [math]\displaystyle{ 𝒈 }[/math] and [math]\displaystyle{ 𝒋G_{\text{j}}M_{\text{j}} }[/math] is equivalent to simply [math]\displaystyle{ 𝒋 }[/math]:

[math]\displaystyle{

\textbf{d} = |\,(𝒈M - 𝒋)\mathrm{T}W\,|

}[/math]

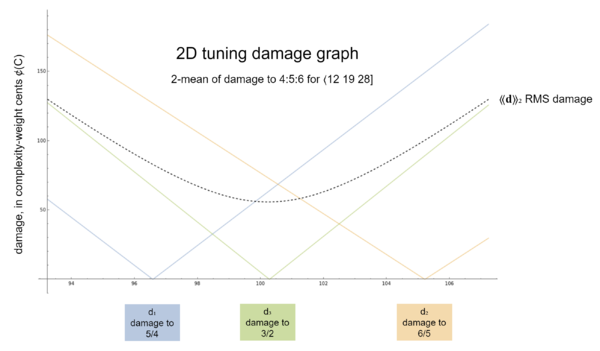

Let's say our rank-1 temperament is 12-ET, so our mapping [math]\displaystyle{ M }[/math] is ⟨12 19 28]. And our target-interval set is the otonal triad, so [math]\displaystyle{ \{ \frac54, \frac65, \frac32 \} }[/math]. And let's say we're complexity weighting, so [math]\displaystyle{ 𝒘 = \left[ \begin{array}{rrr} 4.322 & 4.907 & 2.585 \end{array} \right] }[/math] (the values of [math]\displaystyle{ \log_2(n·d) }[/math] for each target-interval [math]\displaystyle{ \frac{n}{d} }[/math]), and [math]\displaystyle{ W }[/math] therefore is the diagonalized version of that (or, equivalently, [math]\displaystyle{ C }[/math] is the diagonalized version of [math]\displaystyle{ 𝒄 }[/math]). As for [math]\displaystyle{ 𝒈 }[/math], it's a [math]\displaystyle{ (1, r) }[/math]-shaped matrix, so since this is a rank-1 temperament, it's actually a [math]\displaystyle{ (1, 1) }[/math]-shaped matrix, and since we don't know what it is yet, its single entry is the variable [math]\displaystyle{ g_1 }[/math]. This can be understood to represent the size of our ET generator in cents.

[math]\displaystyle{

\textbf{d} =

\Huge |

\normalsize

\begin{array} {ccc}

𝒈 \\

\left[ \begin{array} {rrr}

g_1 \\

\end{array} \right]

\end{array}

\begin{array} {ccc}

M \\

\left[ \begin{array} {rrr}

12 & 19 & 28 \\

\end{array} \right]

\end{array}

-

\begin{array} {ccc}

𝒋 \\

\left[ \begin{array} {rrr}

1200.000 & 1901.955 & 2786.314 \\

\end{array} \right]

\end{array}

\begin{array} {ccc}

\mathrm{T} \\

\left[ \begin{array} {r|r|r}

{-2} & 1 & {-1} \\

0 & 1 & 1 \\

1 & {-1} & 0 \\

\end{array} \right]

\end{array}

\begin{array} {ccc}

C \\

\left[ \begin{array} {rrr}

4.322 & 0 & 0 \\

0 & 4.907 & 0 \\

0 & 0 & 2.585 \\

\end{array} \right]

\end{array}

\Huge |

\normalsize

}[/math]

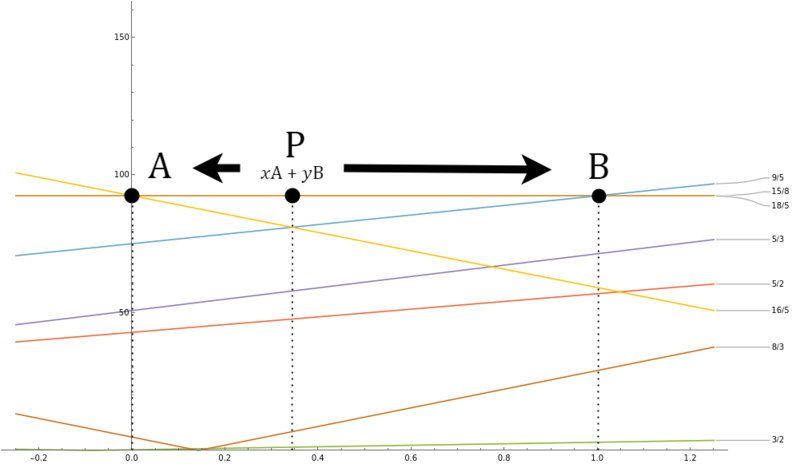

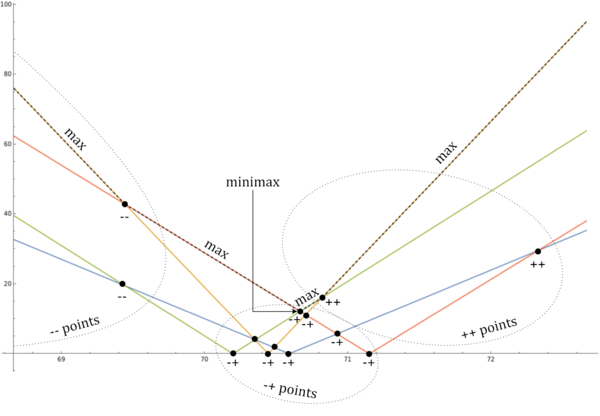

Here's what that looks like graphed:

As alluded to earlier, for rank-1 cases, it's pretty easy to read the value straight off the chart. Clearly we're expecting a generator size that's just a smidge bigger than 100 ¢. The point here is to understand the computation process.

So, let's simplify:

[math]\displaystyle{

\textbf{d} =

\Huge |

\normalsize

\begin{array} {ccc}

𝒈M = 𝒕 \\

\left[ \begin{array} {rrr}

12g_1 & 19g_1 & 28g_1 \\

\end{array} \right]

\end{array}

-

\begin{array} {ccc}

𝒋 \\

\left[ \begin{array} {rrr}

1200.000 & 1901.955 & 2786.314 \\

\end{array} \right]

\end{array}

\begin{array} {ccc}

\mathrm{T}C \\

\left[ \begin{array} {r|r|r}

{-8.644} & 4.907 & {-2.585} \\

0 & 4.907 & 2.585 \\

4.322 & {-4.907} & 0 \\

\end{array} \right]

\end{array}

\Huge |

\normalsize

}[/math]

Another pass:

[math]\displaystyle{ \textbf{d} = \Huge | \normalsize \begin{array} {ccc} 𝒕 - 𝒋 \\ \left[ \begin{array} {rrr} 12g_1 - 1200 & 19g_1 - 1901.955 & 28g_1 - 2786.314 \\ \end{array} \right] \end{array} \begin{array} {ccc} \mathrm{T}C \\ \left[ \begin{array} {r|r|r} {-8.644} & 4.907 & {-2.585} \\ 0 & 4.907 & 2.585 \\ 4.322 & {-4.907} & 0 \\ \end{array} \right] \end{array} \Huge | \normalsize }[/math]

And once more:

[math]\displaystyle{

\textbf{d} =

\Huge |

\normalsize

\begin{array} {ccc}

(𝒕 - 𝒋)\mathrm{T}C = 𝒓\mathrm{T}C = \textbf{e}C \\

\left[ \begin{array} {rrr}

17.288g_1 - 1669.605 &

14.721g_1 - 1548.835 &

18.095g_1 - 1814.526 \\

\end{array} \right]

\end{array}

\Huge |

\normalsize

}[/math]
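The entries of [math]\displaystyle{ \textbf{e}C }[/math] can be rebuilt from first principles. This sketch assumes, as the text does, the 12-ET mapping, the otonal-triad target-intervals, and weights [math]\displaystyle{ \log_2(n·d) }[/math], but keeps every value unrounded; the slopes match the three-decimal values above, and the constants agree with the rounded ones above to within a few hundredths.

```python
import math

M = [12, 19, 28]                                   # 12-ET mapping <12 19 28]
j = [1200 * math.log2(p) for p in (2, 3, 5)]       # just tuning map, in cents

# Otonal-triad target-intervals: (numerator, denominator, prime-count vector).
targets = [(5, 4, [-2, 0, 1]),
           (6, 5, [1, 1, -1]),
           (3, 2, [-1, 1, 0])]

slopes, offsets = [], []
for n, d, vec in targets:
    weight = math.log2(n * d)                       # complexity weight
    steps = sum(m * v for m, v in zip(M, vec))      # steps of 12-ET
    cents = sum(c * v for c, v in zip(j, vec))      # just size in cents
    slopes.append(steps * weight)    # coefficient of g1 in this entry of eC
    offsets.append(cents * weight)   # amount subtracted in this entry
    print(f"{n}/{d}: {steps * weight:.3f}*g1 - {cents * weight:.3f}")
```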

And remember these bars are actually entry-wise absolute values, so we can put those on each entry. Though it actually won't matter much in a minute, since squaring any real number gives a non-negative result.

[math]\displaystyle{

\textbf{d} =

\begin{array} {ccc}

|\textbf{e}|C \\

\left[ \begin{array} {rrr}

|17.288g_1 - 1669.605| &

|14.721g_1 - 1548.835| &

|18.095g_1 - 1814.526| \\

\end{array} \right]

\end{array}

}[/math]

[math]\displaystyle{

% \slant{} command approximates italics to allow slanted bold characters, including digits, in MathJax.

\def\slant#1{\style{display:inline-block;margin:-.05em;transform:skew(-14deg)translateX(.03em)}{#1}}

% Latex equivalents of the wiki templates llzigzag and rrzigzag for double zigzag brackets.

\def\llzigzag{\hspace{-1.6mu}\style{display:inline-block;transform:scale(.62,1.24)translateY(.07em);font-family:sans-serif}{ꗨ\hspace{-3mu}ꗨ}\hspace{-1.6mu}}

\def\rrzigzag{\hspace{-1.6mu}\style{display:inline-block;transform:scale(-.62,1.24)translateY(.07em);font-family:sans-serif}{ꗨ\hspace{-3mu}ꗨ}\hspace{-1.6mu}}

}[/math]

Because what we're going to do now is change this to the formula for the SOS of damage, that is, [math]\displaystyle{ \llzigzag \textbf{d} \rrzigzag _2 }[/math]:

[math]\displaystyle{

\llzigzag \textbf{d} \rrzigzag _2 =

|17.288g_1 - 1669.605|^2 +

|14.721g_1 - 1548.835|^2 +

|18.095g_1 - 1814.526|^2

}[/math]

So we can get rid of those absolute value signs:

[math]\displaystyle{

\llzigzag \textbf{d} \rrzigzag _2 =

(17.288g_1 - 1669.605)^2 +

(14.721g_1 - 1548.835)^2 +

(18.095g_1 - 1814.526)^2

}[/math]

Then we're just going to work these out:

[math]\displaystyle{

\llzigzag \textbf{d} \rrzigzag _2 =

\small

(17.288g_1 - 1669.605)(17.288g_1 - 1669.605) +

(14.721g_1 - 1548.835)(14.721g_1 - 1548.835) +

(18.095g_1 - 1814.526)(18.095g_1 - 1814.526)

}[/math]

Distribute:

[math]\displaystyle{

\llzigzag \textbf{d} \rrzigzag _2 =

\small

(298.875g_1^2 - 57728.262g_1 + 2787580.856) +

(216.708g_1^2 - 45600.800g_1 + 2398889.857) +

(327.429g_1^2 - 65667.696g_1 + 3292504.605)

}[/math]

Combine like terms:

[math]\displaystyle{

\llzigzag \textbf{d} \rrzigzag _2 =

843.012g_1^2 - 168996.758g_1 + 8478975.318

}[/math]
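The distribute-and-combine steps can be replayed with the rounded coefficients shown above. Each entry has the form [math]\displaystyle{ ag_1 - b }[/math], so its square is [math]\displaystyle{ a^2g_1^2 - 2abg_1 + b^2 }[/math]; note that the [math]\displaystyle{ b^2 }[/math] terms always come out positive. A minimal Python sketch (the variable names are our own):

```python
# Rounded coefficients of each damage entry a*g1 - b, as in the text.
a = [17.288, 14.721, 18.095]
b = [1669.605, 1548.835, 1814.526]

# (a*g1 - b)^2 = a^2*g1^2 - 2ab*g1 + b^2, summed over the three entries.
quadratic = sum(x * x for x in a)
linear = -2 * sum(x * y for x, y in zip(a, b))
constant = sum(y * y for y in b)
print(round(quadratic, 3), round(linear, 3), round(constant, 3))
# 843.012 -168996.758 8478975.318
```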

At this point, we take the derivative. Basically, each exponent decreases by 1 and its old value multiplies into the coefficient; we won't be doing a full review of this here, but good tutorials on that should be easy to find online.

[math]\displaystyle{

\dfrac{\partial}{\partial{g_1}}

\llzigzag \textbf{d} \rrzigzag _2 =

2×843.012g_1 - 168996.758

}[/math]

This is the formula for the slope of the graph, and we want to know where it's equal to zero.

[math]\displaystyle{

0 = 2×843.012g_1 - 168996.758

}[/math]

So we can now solve for [math]\displaystyle{ g_1 }[/math]:

[math]\displaystyle{

\begin {align}

0 &= 1686.024g_1 - 168996.758 \\[4pt]

168996.758 &= 1686.024g_1 \\[6pt]

\dfrac{168996.758}{1686.024} &= g_1 \\[6pt]

100.234 &= g_1 \\

\end {align}

}[/math]

Ta-da! There's our generator size: 100.234 ¢.[note 2]
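The derivative step can be sketched in a few lines of Python, again using the rounded coefficients of the combined sum of squares. The constant term is left out because it shifts the whole graph up or down without moving its minimum; the names `sos` and `slope` are our own.

```python
# Coefficients of q*g1^2 + l*g1 (plus a constant that does not move the minimum).
q, l = 843.012, -168996.758

def sos(g1):
    return q * g1**2 + l * g1

def slope(g1):
    # Power rule: d/dg1 (q*g1^2 + l*g1) = 2*q*g1 + l
    return 2 * q * g1 + l

g_best = -l / (2 * q)      # solve slope(g1) = 0
print(round(g_best, 3))    # 100.234

# q > 0, so the parabola opens upward and this single zero is its minimum.
print(all(sos(g_best) < sos(g_best + step) for step in (-1, -0.01, 0.01, 1)))  # True
```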

Verifying the toy example with the pseudoinverse

Okay... but what the heck does this have to do with a pseudoinverse? Well, for a sanity check, let's double-check against our pseudoinverse method.

[math]\displaystyle{

G = \mathrm{T}C(M\mathrm{T}C)^{+} = \mathrm{T}C(M\mathrm{T}C)^\mathsf{T}(M\mathrm{T}C(M\mathrm{T}C)^\mathsf{T})^{-1}

}[/math]

We already know [math]\displaystyle{ \mathrm{T}C }[/math] from an earlier step above. And so [math]\displaystyle{ M\mathrm{T}C }[/math] is:

[math]\displaystyle{ \begin{array} {ccc} M \\ \left[ \begin{array} {rrr} 12 & 19 & 28 \\ \end{array} \right] \end{array} \begin{array} {ccc} \mathrm{T}C \\ \left[ \begin{array} {r|r|r} {-8.644} & 4.907 & {-2.585} \\ 0 & 4.907 & 2.585 \\ 4.322 & {-4.907} & 0 \\ \end{array} \right] \end{array} = \begin{array} {ccc} M\mathrm{T}C \\ \left[ \begin{array} {r|r|r} 17.288 & 14.721 & 18.095 \\ \end{array} \right] \end{array} }[/math]

So plugging these in we get:

[math]\displaystyle{

G =

\begin{array} {ccc}

\mathrm{T}C \\

\left[ \begin{array} {r|r|r}

{-8.644} & 4.907 & {-2.585} \\

0 & 4.907 & 2.585 \\

4.322 & {-4.907} & 0 \\

\end{array} \right]

\end{array}

\begin{array} {ccc}

(M\mathrm{T}C)^\mathsf{T} \\

\left[ \begin{array} {rrr}

17.288 \\ \hline

14.721 \\ \hline

18.095 \\

\end{array} \right]

\end{array}

(

\begin{array} {ccc}

M\mathrm{T}C \\

\left[ \begin{array} {r|r|r}

17.288 & 14.721 & 18.095 \\

\end{array} \right]

\end{array}

\begin{array} {ccc}

(M\mathrm{T}C)^\mathsf{T} \\

\left[ \begin{array} {rrr}

17.288 \\ \hline

14.721 \\ \hline

18.095 \\

\end{array} \right]

\end{array}

)^{-1}

}[/math]

Which works out to:

[math]\displaystyle{

G =

\begin{array} {ccc}

\mathrm{T}C(M\mathrm{T}C)^\mathsf{T} \\

\left[ \begin{array} {rrr}

{-123.974} \\

119.007 \\

2.484 \\

\end{array} \right]

\end{array}

(

\begin{array} {ccc}

M\mathrm{T}C(M\mathrm{T}C)^\mathsf{T} \\

\left[ \begin{array} {rrr}

842.983

\end{array} \right]

\end{array}

)^{-1}

}[/math]

Then take the inverse (interestingly, since this is a [math]\displaystyle{ (1, 1) }[/math]-shaped matrix, this is equivalent to the reciprocal, that is, we're just finding [math]\displaystyle{ \frac{1}{842.983} = 0.00119 }[/math]):

[math]\displaystyle{

G =

\begin{array} {ccc}

\mathrm{T}C(M\mathrm{T}C)^\mathsf{T} \\

\left[ \begin{array} {rrr}

{-123.974} \\

119.007 \\

2.484 \\

\end{array} \right]

\end{array}

\begin{array} {ccc}

(M\mathrm{T}C(M\mathrm{T}C)^\mathsf{T})^{-1} \\

\left[ \begin{array} {rrr}

0.00119

\end{array} \right]

\end{array}

}[/math]

And finally multiply:

[math]\displaystyle{

G =

\begin{array} {ccc}

\mathrm{T}C(M\mathrm{T}C)^\mathsf{T}(M\mathrm{T}C(M\mathrm{T}C)^\mathsf{T})^{-1} \\

\left[ \begin{array} {rrr}

{-0.147066} \\

0.141174 \\

0.002946 \\

\end{array} \right]

\end{array}

}[/math]

To compare with our 100.234 ¢ value, we'll have to convert this [math]\displaystyle{ G }[/math] to a [math]\displaystyle{ 𝒈 }[/math], but that's easy enough. As we demonstrated earlier, simply multiply by [math]\displaystyle{ 𝒋 }[/math]:

[math]\displaystyle{

𝒈 =

\begin{array} {ccc}

𝒋 \\

\left[ \begin{array} {rrr}

1200.000 & 1901.955 & 2786.314 \\

\end{array} \right]

\end{array}

\begin{array} {ccc}

G \\

\left[ \begin{array} {rrr}

{-0.147066} \\

0.141174 \\

0.002946 \\

\end{array} \right]

\end{array}

}[/math]

When we work through that, we get 100.236 ¢. Close enough (shrugging off rounding errors). So we've sanity-checked at least.
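The same pseudoinverse computation can be run with unrounded weights, which avoids most of the rounding noise. This sketch assumes the same 12-ET mapping, otonal-triad target-intervals, and [math]\displaystyle{ \log_2(n·d) }[/math] weights as above; because [math]\displaystyle{ M\mathrm{T}C }[/math] is a single row, inverting [math]\displaystyle{ M\mathrm{T}C(M\mathrm{T}C)^\mathsf{T} }[/math] is just taking a reciprocal.

```python
import math

j = [1200 * math.log2(p) for p in (2, 3, 5)]       # just tuning map, cents
M = [12, 19, 28]                                     # 12-ET mapping
T = [[-2, 1, -1],                                    # columns: 5/4, 6/5, 3/2
     [0, 1, 1],
     [1, -1, 0]]
w = [math.log2(20), math.log2(30), math.log2(6)]     # complexity weights

# TC: each column of T scaled by its interval's weight.
TC = [[T[r][k] * w[k] for k in range(3)] for r in range(3)]
# MTC is a single row, shape (1, 3).
MTC = [sum(M[r] * TC[r][k] for r in range(3)) for k in range(3)]
# MTC (MTC)^T is (1, 1), so its inverse is a reciprocal.
scale = 1 / sum(x * x for x in MTC)
# G = TC (MTC)^T (MTC (MTC)^T)^-1, a single column of shape (3, 1).
G = [sum(TC[r][k] * MTC[k] for k in range(3)) * scale for r in range(3)]
g1 = sum(jp * gp for jp, gp in zip(j, G))           # g = jG, in cents
print([round(x, 6) for x in G], round(g1, 3))
```

The printed embedding and generator size should agree with the hand-worked values above up to rounding.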

But if we really want to see the connection between the pseudoinverse and finding the zero of the derivative (how they both find the point where the slope of the RMS graph is zero and therefore the graph is at its minimum), we're going to have to upgrade from an equal temperament (rank-1 temperament) to a rank-2 temperament. In other words, we need to address tunings with more than one generator, which can't be represented by a simple scalar anymore, but instead need to be represented with a vector.

A demonstration using matrix calculus

Technically speaking, even with two generators, meaning two variables, we could take the derivative with respect to one, and then take the derivative with respect to the other. And with three generators we could take three derivatives. But this gets out of hand. And there's a cleverer way we can think about the problem, which involves treating the vector containing all the generators as a single variable. We can do that! But it involves matrix calculus. And in this section we'll work through how.

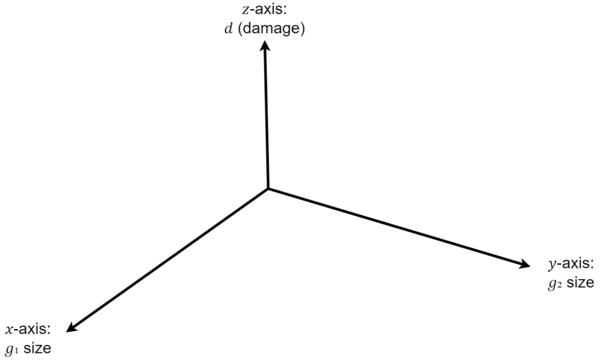

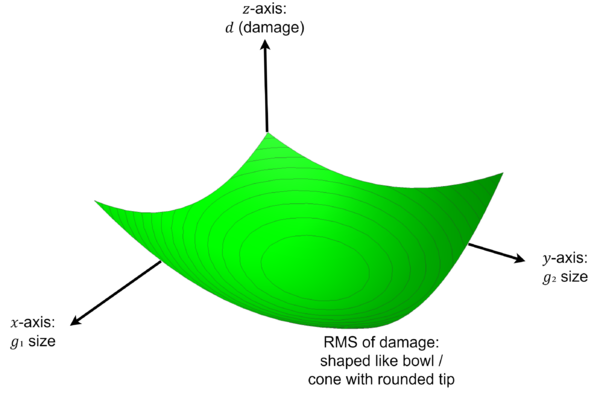

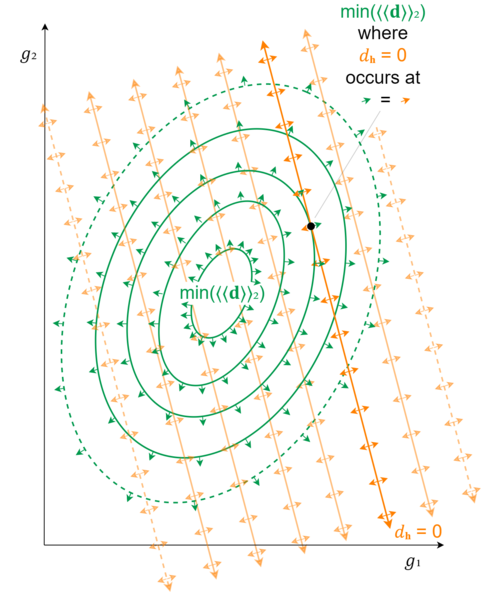

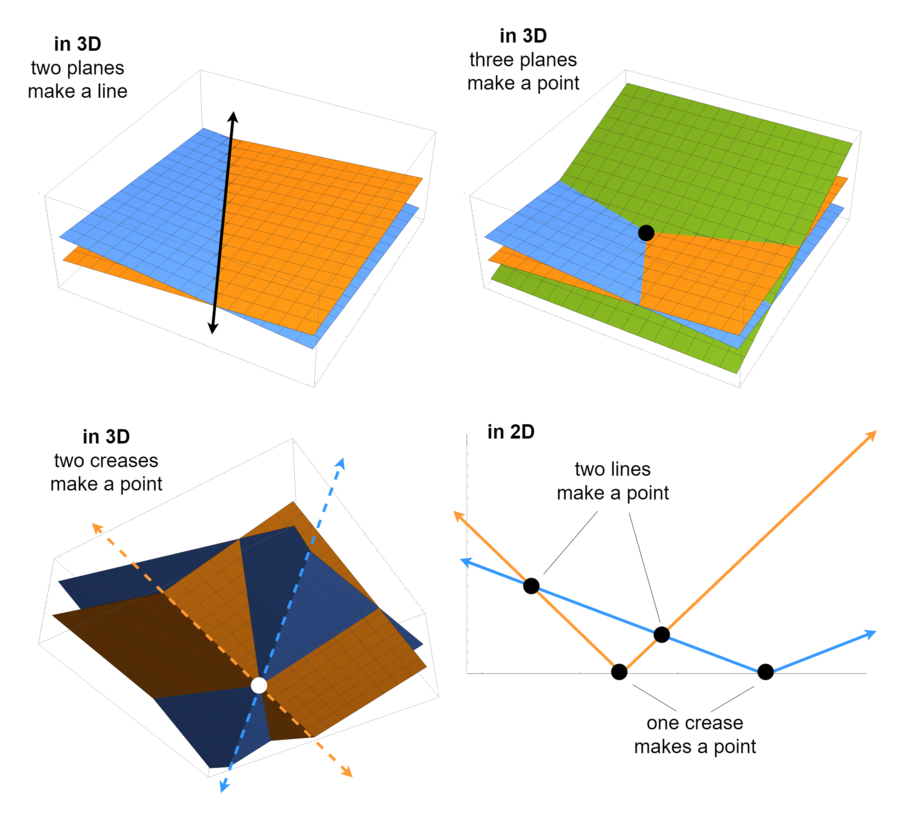

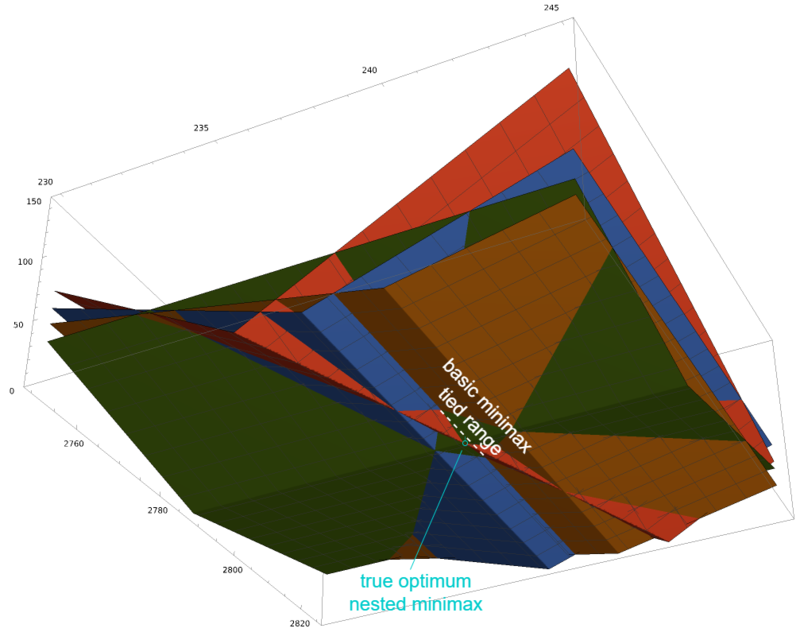

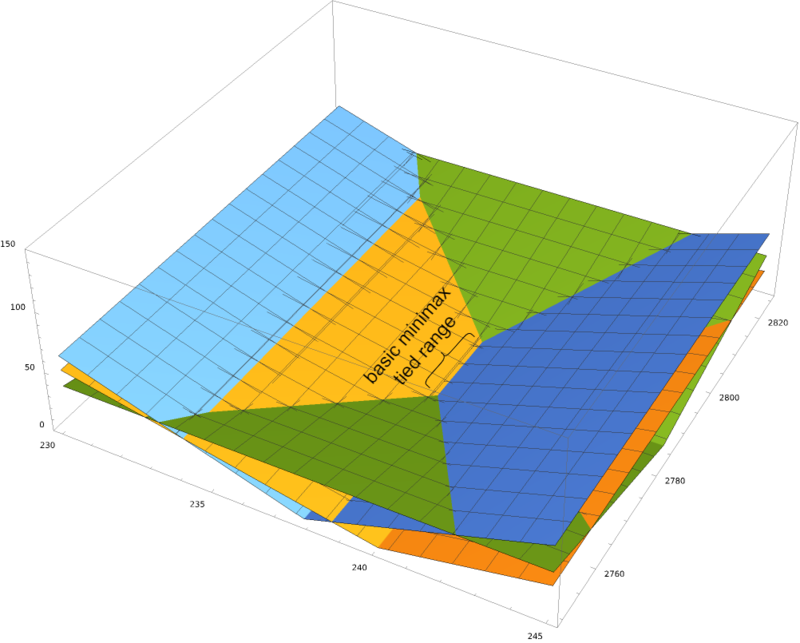

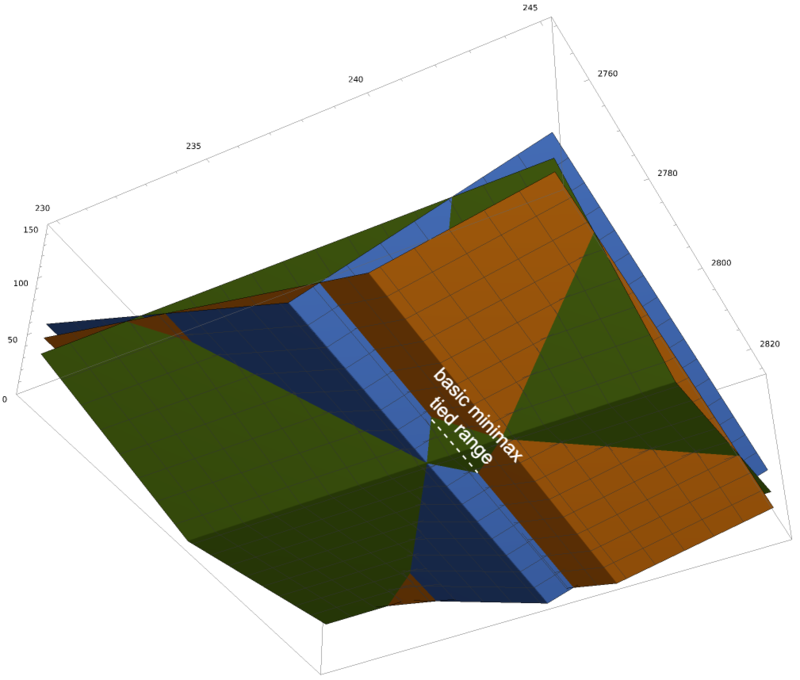

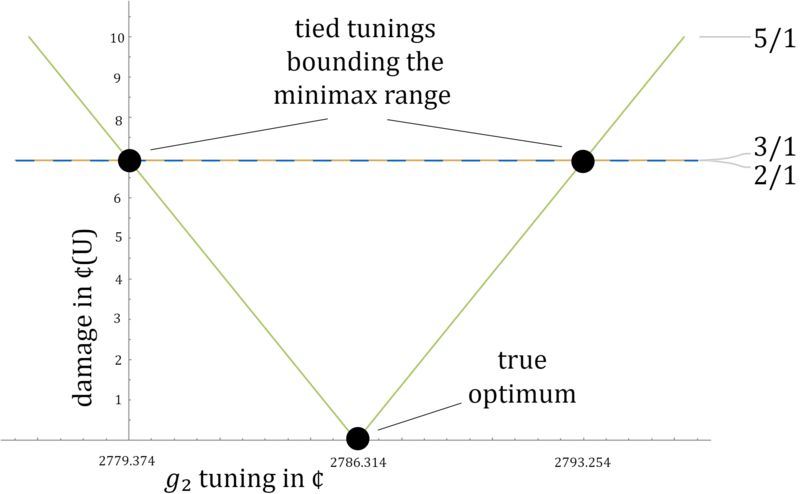

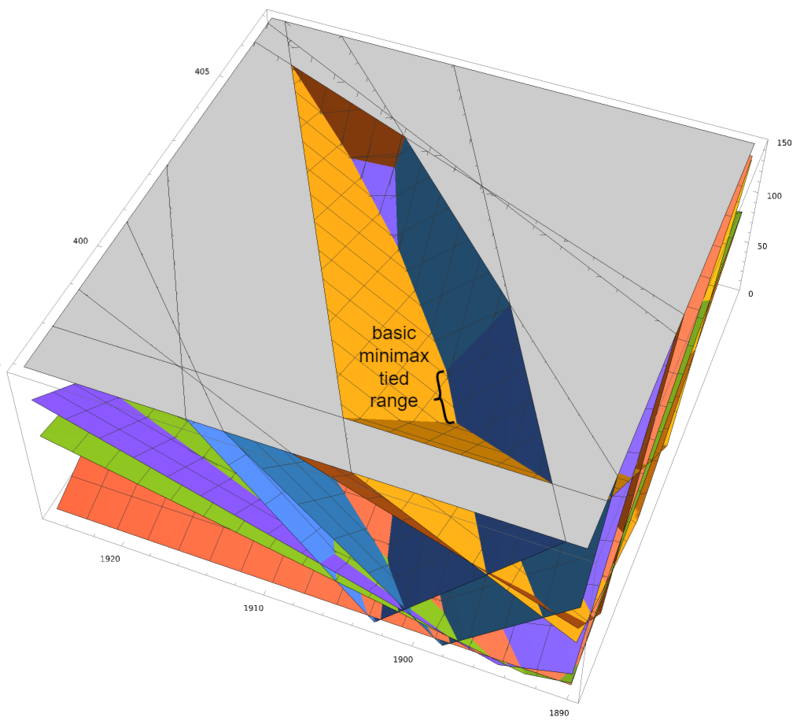

Graphing damage for a rank-2 temperament, as we've seen previously, means we'll be looking at 3D tuning damage space, with the [math]\displaystyle{ x }[/math] and [math]\displaystyle{ y }[/math] axes in perpendicular directions across the floor, and the [math]\displaystyle{ z }[/math]-axis coming up out of the floor, where the [math]\displaystyle{ x }[/math]-axis gives the tuning of one generator, the [math]\displaystyle{ y }[/math]-axis gives the tuning of the other generator, and the [math]\displaystyle{ z }[/math]-axis gives the temperament's damage as a function of those two generator tunings.

And while in 2D tuning damage space the RMS graph made something like a V-shape but with the tip rounded off, here it makes a cone, again with its tip rounded off.

Remember that although we like to think of it, and visualize it, as minimizing the [math]\displaystyle{ 2 }[/math]-mean of damage, it's equivalent, and computationally simpler, to minimize the [math]\displaystyle{ 2 }[/math]-sum. So here's our function:

[math]\displaystyle{ f(x, y) = \llzigzag \textbf{d} \rrzigzag _2 }[/math]

Which is the same as:

[math]\displaystyle{ f(x, y) = \textbf{d}\textbf{d}^\mathsf{T} }[/math]

Because:

[math]\displaystyle{

\textbf{d}\textbf{d}^\mathsf{T} = \\

\textbf{d}·\textbf{d} = \\

\mathrm{d}_1·\mathrm{d}_1 + \mathrm{d}_2·\mathrm{d}_2 + \mathrm{d}_3·\mathrm{d}_3 = \\

\mathrm{d}_1^2 + \mathrm{d}_2^2 + \mathrm{d}_3^2

}[/math]

Which is the same thing as the [math]\displaystyle{ 2 }[/math]-sum: it's the sum of entries to the 2nd power.

Alright, but you may be concerned: [math]\displaystyle{ x }[/math] and [math]\displaystyle{ y }[/math] do not even appear in the body of the formula! Well, we can fix that.

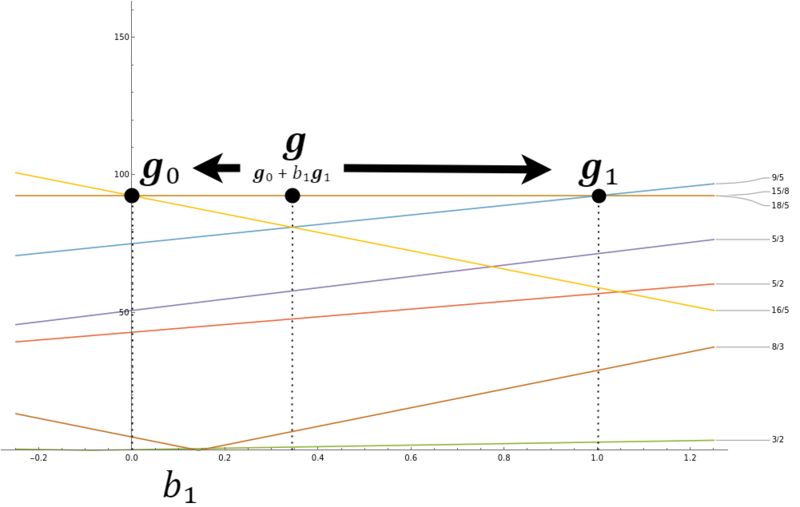

As a first step toward resolving this problem, let's choose some better variable names. We had only chosen [math]\displaystyle{ x }[/math] and [math]\displaystyle{ y }[/math] because those are the most generic variable names available. They're very typically used when graphing things in Euclidean space like this. But we can definitely do better than those names, if we bring in some information more specific to our problem.

One thing we know is that these [math]\displaystyle{ x }[/math] and [math]\displaystyle{ y }[/math] variables are supposed to represent the tunings of our two generators. So let's call them [math]\displaystyle{ g_1 }[/math] and [math]\displaystyle{ g_2 }[/math] instead:

[math]\displaystyle{ f(g_1, g_2) = \textbf{d}\textbf{d}^\mathsf{T} }[/math]

But we can do even better than this. We're in a world of vectors, so why not express [math]\displaystyle{ g_1 }[/math] and [math]\displaystyle{ g_2 }[/math] together as a vector, [math]\displaystyle{ 𝒈 }[/math]. In other words, they're just a generator tuning map.

[math]\displaystyle{ f(𝒈) = \textbf{d}\textbf{d}^\mathsf{T} }[/math]

You may not be comfortable with the idea of a function of a vector (Douglas: I certainly wasn't when I first saw this!) but after working through this example and meditating on it for a while, you may be surprised to find it ceasing to seem so weird after all.

So we're still trying to connect the left and right sides of this equation by showing explicitly how this is a function of [math]\displaystyle{ 𝒈 }[/math], i.e. how [math]\displaystyle{ \textbf{d} }[/math] can be expressed in terms of [math]\displaystyle{ 𝒈 }[/math]. And we promise, we will get there soon enough.

Next, let's substitute in [math]\displaystyle{ (𝒕 - 𝒋)\mathrm{T}W }[/math] for [math]\displaystyle{ \textbf{d} }[/math]. In other words, the target-interval damage list is the difference between how the tempered-prime tuning map and the just-prime tuning map tune our target-intervals, absolute valued, and weighted by each interval's weight (we can leave the absolute value out here, since each damage gets squared anyway). But the number of symbols necessary to represent this equation is going to get out of hand if we do exactly this, so we're actually going to distribute first, finding [math]\displaystyle{ 𝒕\mathrm{T}W - 𝒋\mathrm{T}W }[/math], and then we're going to start following a pattern of using Fraktur-style letters to represent matrices that are multiplied by [math]\displaystyle{ \mathrm{T}W }[/math], so that in our case [math]\displaystyle{ 𝖙 = 𝒕\mathrm{T}W }[/math] and [math]\displaystyle{ 𝖏 = 𝒋\mathrm{T}W }[/math]:

[math]\displaystyle{ f(𝒈) = (𝖙 - 𝖏)(𝖙^\mathsf{T} - 𝖏^\mathsf{T}) }[/math]

Now let's distribute these two binomials (you know, the old [math]\displaystyle{ (a + b)(c + d) = ac + ad + bc + bd }[/math] trick, AKA "FOIL" = first, outer, inner, last).

[math]\displaystyle{ f(𝒈) = 𝖙𝖙^\mathsf{T} - 𝖙𝖏^\mathsf{T} - 𝖏𝖙^\mathsf{T} + 𝖏𝖏^\mathsf{T} }[/math]

Because both [math]\displaystyle{ 𝖙𝖏^\mathsf{T} }[/math] and [math]\displaystyle{ 𝖏𝖙^\mathsf{T} }[/math] correspond to the dot product of [math]\displaystyle{ 𝖙 }[/math] and [math]\displaystyle{ 𝖏 }[/math], we can consolidate the two inner terms. Let's change [math]\displaystyle{ 𝖙𝖏^\mathsf{T} }[/math] into [math]\displaystyle{ 𝖏𝖙^\mathsf{T} }[/math], so that we will end up with [math]\displaystyle{ 2𝖏𝖙^\mathsf{T} }[/math] in the middle:

[math]\displaystyle{ f(𝒈) = 𝖙𝖙^\mathsf{T} - 2𝖏𝖙^\mathsf{T} + 𝖏𝖏^\mathsf{T} }[/math]

Alright! We're finally ready to surface [math]\displaystyle{ 𝒈 }[/math]. It's been hiding in [math]\displaystyle{ 𝒕 }[/math] all along; the tuning map is equal to the generator tuning map times the mapping, i.e. [math]\displaystyle{ 𝒕 = 𝒈M }[/math]. So we can just substitute that in everywhere. Exactly what we'll do is [math]\displaystyle{ 𝖙 = 𝒕\mathrm{T}W = (𝒈M)\mathrm{T}W = 𝒈(M\mathrm{T}W) = 𝒈𝔐 }[/math], that last step introducing a new Fraktur-style symbol.[note 3]

[math]\displaystyle{ f(𝒈) = (𝒈𝔐)(𝒈𝔐)^\mathsf{T} - 2𝖏(𝒈𝔐)^\mathsf{T} + 𝖏𝖏^\mathsf{T} }[/math]

And that gets sort of clunky, so let's execute some of those transposes. Note that when we transpose, the order of things reverses, so [math]\displaystyle{ (𝒈𝔐)^\mathsf{T} = 𝔐^\mathsf{T}𝒈^\mathsf{T} }[/math]:

[math]\displaystyle{ f(𝒈) = 𝒈𝔐𝔐^\mathsf{T}𝒈^\mathsf{T} - 2𝖏𝔐^\mathsf{T}𝒈^\mathsf{T} + 𝖏𝖏^\mathsf{T} }[/math]
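The expansion can be spot-checked numerically: for any trial generator tuning map, the expanded form should equal [math]\displaystyle{ \textbf{d}\textbf{d}^\mathsf{T} }[/math] computed directly. This sketch assumes meantone, the otonal-triad target-intervals, and [math]\displaystyle{ \log_2(n·d) }[/math] weights purely for illustration; `frak_M` and `frak_j` stand for [math]\displaystyle{ 𝔐 = M\mathrm{T}W }[/math] and [math]\displaystyle{ 𝖏 = 𝒋\mathrm{T}W }[/math].

```python
import math

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[r][k] * Y[k][c] for k in range(len(Y)))
             for c in range(len(Y[0]))]
            for r in range(len(X))]

def transpose(X):
    return [list(row) for row in zip(*X)]

M = [[1, 0, -4], [0, 1, 4]]                          # meantone (illustrative)
T = [[-2, 1, -1], [0, 1, 1], [1, -1, 0]]             # columns: 5/4, 6/5, 3/2
W = [[math.log2(20), 0, 0], [0, math.log2(30), 0], [0, 0, math.log2(6)]]
j = [[1200 * math.log2(p) for p in (2, 3, 5)]]       # just tuning map, (1, 3)

frak_M = matmul(M, matmul(T, W))   # Fraktur M = M T W, shape (2, 3)
frak_j = matmul(j, matmul(T, W))   # Fraktur j = j T W, shape (1, 3)

def f_direct(g):
    """d d^T with d = g frak_M - frak_j (signs don't matter once squared)."""
    d = [[x - y for x, y in zip(matmul(g, frak_M)[0], frak_j[0])]]
    return matmul(d, transpose(d))[0][0]

def f_expanded(g):
    """g frak_M frak_M^T g^T - 2 frak_j frak_M^T g^T + frak_j frak_j^T."""
    gT, frak_Mt = transpose(g), transpose(frak_M)
    quadratic = matmul(matmul(matmul(g, frak_M), frak_Mt), gT)[0][0]
    linear = matmul(matmul(frak_j, frak_Mt), gT)[0][0]
    constant = matmul(frak_j, transpose(frak_j))[0][0]
    return quadratic - 2 * linear + constant

for g in ([[1200.0, 1900.0]], [[1195.0, 1905.5]]):
    print(f_direct(g), f_expanded(g))  # equal up to floating-point rounding
```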

And now, we're finally ready to take the derivative!

[math]\displaystyle{ \dfrac{\partial}{\partial𝒈}f(𝒈) = \dfrac{\partial}{\partial𝒈}(𝒈𝔐𝔐^\mathsf{T}𝒈^\mathsf{T} - 2𝖏𝔐^\mathsf{T}𝒈^\mathsf{T} + 𝖏𝖏^\mathsf{T}) }[/math]

And remember, we want to find the place where this derivative is equal to zero. So let's drop the [math]\displaystyle{ \dfrac{\partial}{\partial𝒈}f(𝒈) }[/math] part on the left, and show the [math]\displaystyle{ = \textbf{0} }[/math] part on the right instead (note the boldness of the [math]\displaystyle{ \textbf{0} }[/math]; this indicates that this is not simply a single zero, but a vector of all zeros, one for each generator).

[math]\displaystyle{ \dfrac{\partial}{\partial𝒈}(𝒈𝔐𝔐^\mathsf{T}𝒈^\mathsf{T} - 2𝖏𝔐^\mathsf{T}𝒈^\mathsf{T} + 𝖏𝖏^\mathsf{T}) = \textbf{0} }[/math]

Well, now we've come to it. We've run out of things we can do without confronting the question: how in the world do we take derivatives with respect to vectors? This next part is going to require some of that matrix calculus we warned about. Fortunately, if you're already familiar with normal algebraic differentiation rules, these will not seem too wild:

- The last term, [math]\displaystyle{ 𝖏𝖏^\mathsf{T} }[/math], is going to vanish, because with respect to [math]\displaystyle{ 𝒈 }[/math], it's a constant; there's no factor of [math]\displaystyle{ 𝒈 }[/math] in it.

- The middle term, [math]\displaystyle{ -2𝖏𝔐^\mathsf{T}𝒈^\mathsf{T} }[/math], has a single factor of [math]\displaystyle{ 𝒈 }[/math], so it will remain but with that factor gone. (Technically it's a factor of [math]\displaystyle{ 𝒈^\mathsf{T} }[/math], but for reasons that would probably require a deeper understanding of the subtleties of matrix calculus than the present author commands, it works out this way anyway. Perhaps we should have differentiated instead with respect to [math]\displaystyle{ 𝒈^\mathsf{T} }[/math], rather than [math]\displaystyle{ 𝒈 }[/math]?)[note 4]

- The first term, [math]\displaystyle{ 𝒈𝔐𝔐^\mathsf{T}𝒈^\mathsf{T} }[/math], can in a way be seen to have a [math]\displaystyle{ 𝒈^2 }[/math], because it contains both a [math]\displaystyle{ 𝒈 }[/math] and a [math]\displaystyle{ 𝒈^\mathsf{T} }[/math] (and we demonstrated earlier how, for a vector [math]\displaystyle{ \textbf{v} }[/math], there is a relationship between the vector squared and the vector times its transpose). So, just as an [math]\displaystyle{ x^2 }[/math] differentiates to a [math]\displaystyle{ 2x }[/math] (the power is reduced by 1 and multiplies into any existing coefficient), this term becomes [math]\displaystyle{ 2𝒈𝔐𝔐^\mathsf{T} }[/math]. It works out this neatly because [math]\displaystyle{ 𝔐𝔐^\mathsf{T} }[/math] is symmetric, i.e. equal to its own transpose.
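The differentiation rules in this list can be checked numerically by comparing the claimed slope [math]\displaystyle{ 2𝒈𝔐𝔐^\mathsf{T} - 2𝖏𝔐^\mathsf{T} }[/math] against central finite differences. This is a minimal Python sketch. It assumes a hypothetical example (5-limit meantone [⟨1 1 0] ⟨0 1 4]}, target-intervals 2/1, 3/2 and 5/4, unity weights), and the helper names are our own.

```python
import math


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


# Hypothetical example: 5-limit meantone, targets 2/1, 3/2, 5/4, unity weights.
M = [[1, 1, 0], [0, 1, 4]]
T = [[1, -1, -2], [0, 1, 0], [0, 0, 1]]
W = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
j = [[1200 * math.log2(p) for p in (2, 3, 5)]]
frak_M = matmul(matmul(M, T), W)  # 𝔐 = MTW
frak_j = matmul(matmul(j, T), W)  # 𝖏 = 𝒋TW


def f(g):
    """Sum of squared weighted errors for generator tuning map g (a 1×r matrix)."""
    e = [t - jt for t, jt in zip(matmul(g, frak_M)[0], frak_j[0])]
    return sum(x * x for x in e)


def gradient_formula(g):
    """2𝒈𝔐𝔐ᵀ − 2𝖏𝔐ᵀ, one entry per generator."""
    MT = transpose(frak_M)
    first = matmul(matmul(g, frak_M), MT)[0]
    second = matmul(frak_j, MT)[0]
    return [2 * x - 2 * y for x, y in zip(first, second)]


def gradient_numeric(g, h=1e-4):
    """Central differences: nudge one generator at a time and watch f change."""
    grad = []
    for k in range(len(g[0])):
        up = [list(g[0])]
        down = [list(g[0])]
        up[0][k] += h
        down[0][k] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad


g = [[1201.0, 697.0]]
print(gradient_formula(g))
print(gradient_numeric(g))
```

Because [math]\displaystyle{ f }[/math] is quadratic, central differences reproduce its slope up to rounding, so the two printed lists should agree closely.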

And so we find:

[math]\displaystyle{ 2𝒈𝔐𝔐^\mathsf{T} - 2𝖏𝔐^\mathsf{T} = \textbf{0} }[/math]

That's much nicer to look at, huh. Well, what next? Our goal is to solve for [math]\displaystyle{ 𝒈 }[/math], right? Then let's isolate the solitary remaining term with [math]\displaystyle{ 𝒈 }[/math] as a factor on one side of the equation:

[math]\displaystyle{ 2𝒈𝔐𝔐^\mathsf{T} = 2𝖏𝔐^\mathsf{T} }[/math]

Certainly we can cancel out the 2's on both sides; that's easy:

[math]\displaystyle{ 𝒈𝔐𝔐^\mathsf{T} = 𝖏𝔐^\mathsf{T} }[/math]

And, as we proved in the earlier section "Demystifying the formula", a matrix of the form [math]\displaystyle{ AA^\mathsf{T} }[/math] is invertible, so we can cancel [math]\displaystyle{ 𝔐𝔐^\mathsf{T} }[/math] out of the left-hand side by multiplying both sides of the equation on the right by [math]\displaystyle{ (𝔐𝔐^\mathsf{T})^{-1} }[/math]:

[math]\displaystyle{

𝒈𝔐𝔐^\mathsf{T}(𝔐𝔐^\mathsf{T})^{-1} = 𝖏𝔐^\mathsf{T}(𝔐𝔐^\mathsf{T})^{-1} \\

𝒈\cancel{𝔐𝔐^\mathsf{T}}\cancel{(𝔐𝔐^\mathsf{T})^{-1}} = 𝖏𝔐^\mathsf{T}(𝔐𝔐^\mathsf{T})^{-1} \\

𝒈 = 𝖏𝔐^\mathsf{T}(𝔐𝔐^\mathsf{T})^{-1}

}[/math]
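This closed form can be evaluated directly. The following minimal Python sketch computes [math]\displaystyle{ 𝒈 = 𝖏𝔐^\mathsf{T}(𝔐𝔐^\mathsf{T})^{-1} }[/math] for a hypothetical example (5-limit meantone [⟨1 1 0] ⟨0 1 4]}, target-intervals 2/1, 3/2 and 5/4, unity weights). It then confirms that the slope vanishes there and that nudging [math]\displaystyle{ 𝒈 }[/math] in a few directions increases the damage. The hand-written 2×2 inverse means it only covers rank-2 temperaments, and the helper names are our own.

```python
import math


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def inverse_2x2(A):
    """Inverse of a 2×2 matrix (enough for a rank-2 temperament)."""
    (a, b), (c, d) = A
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


# Hypothetical example: 5-limit meantone, targets 2/1, 3/2, 5/4, unity weights.
M = [[1, 1, 0], [0, 1, 4]]
T = [[1, -1, -2], [0, 1, 0], [0, 0, 1]]
W = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
j = [[1200 * math.log2(p) for p in (2, 3, 5)]]
frak_M = matmul(matmul(M, T), W)  # 𝔐 = MTW
frak_j = matmul(matmul(j, T), W)  # 𝖏 = 𝒋TW

MT = transpose(frak_M)
g = matmul(matmul(frak_j, MT), inverse_2x2(matmul(frak_M, MT)))  # 𝒈 = 𝖏𝔐ᵀ(𝔐𝔐ᵀ)⁻¹


def f(g):
    """Sum of squared weighted errors for generator tuning map g (a 1×r matrix)."""
    e = [t - jt for t, jt in zip(matmul(g, frak_M)[0], frak_j[0])]
    return sum(x * x for x in e)


# The slope 2𝒈𝔐𝔐ᵀ − 2𝖏𝔐ᵀ should vanish at this 𝒈.
slope = [2 * x - 2 * y
         for x, y in zip(matmul(matmul(g, frak_M), MT)[0], matmul(frak_j, MT)[0])]
print(g, slope)
```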

Finally, remember that [math]\displaystyle{ 𝒈 = 𝒋G }[/math] and [math]\displaystyle{ 𝒋 = 𝒋G_{\text{j}}M_{\text{j}} }[/math], so we can replace those and cancel out some more stuff (also remember that [math]\displaystyle{ 𝖏 = 𝒋\mathrm{T}W }[/math]):

[math]\displaystyle{

(𝒋G) = (𝒋G_{\text{j}}M_{\text{j}})\mathrm{T}W𝔐^\mathsf{T}(𝔐𝔐^\mathsf{T})^{-1} \\

\cancel{𝒋}G = \cancel{𝒋}\cancel{I}\cancel{I}\mathrm{T}W𝔐^\mathsf{T}(𝔐𝔐^\mathsf{T})^{-1}

}[/math]

And that part on the right looks pretty familiar...

[math]\displaystyle{

G = \mathrm{T}W𝔐^\mathsf{T}(𝔐𝔐^\mathsf{T})^{-1} \\

G = \mathrm{T}W𝔐^{+} \\

G = \mathrm{T}W(M\mathrm{T}W)^{+}

}[/math]

Voilà! We've found our pseudoinverse-based [math]\displaystyle{ G }[/math] formula, finding it to be the [math]\displaystyle{ G }[/math] that gives the point of zero slope, i.e. the minimum point of the RMS damage graph.
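To see this formula produce an actual generator embedding, here is a minimal Python sketch that uses exact rational arithmetic (Python's standard `fractions.Fraction`). It assumes a hypothetical example: 5-limit meantone [⟨1 1 0] ⟨0 1 4]}, target-intervals 2/1, 3/2 and 5/4, and unity weights. The pseudoinverse is computed as [math]\displaystyle{ 𝔐^\mathsf{T}(𝔐𝔐^\mathsf{T})^{-1} }[/math], which coincides with the Moore–Penrose pseudoinverse here because [math]\displaystyle{ 𝔐 }[/math] has full row rank. The helper names are our own.

```python
import math
from fractions import Fraction


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def inverse_2x2(A):
    (a, b), (c, d) = A
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def right_pseudoinverse(A):
    """A⁺ = Aᵀ(AAᵀ)⁻¹, assuming A has two rows and full row rank."""
    AT = transpose(A)
    return matmul(AT, inverse_2x2(matmul(A, AT)))


# Hypothetical example, in exact rationals so the embedding comes out exact.
M = [[Fraction(x) for x in row] for row in [[1, 1, 0], [0, 1, 4]]]
T = [[Fraction(x) for x in row] for row in [[1, -1, -2], [0, 1, 0], [0, 0, 1]]]
W = [[Fraction(int(r == c)) for c in range(3)] for r in range(3)]

TW = matmul(T, W)
G = matmul(TW, right_pseudoinverse(matmul(M, TW)))  # G = TW(MTW)⁺: 3 primes × 2 generators

j = [[1200 * math.log2(p) for p in (2, 3, 5)]]
g = matmul(j, G)  # 𝒈 = 𝒋G, generator sizes in cents

for prime, row in zip((2, 3, 5), G):
    print(prime, [str(x) for x in row])
print(g)
```

Worked by hand, this example gives the columns of [math]\displaystyle{ G }[/math] as the prime-count vectors [13/21 8/21 -2/21⟩ and [-5/21 5/21 4/21⟩, with non-integer entries as expected, and [math]\displaystyle{ 𝒋G }[/math] comes to roughly 1202.05 and 697.86 cents.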

If you're hungry for more information on these concepts, or even just another take on it, please see User:Sintel/Generator optimization#Least squares method.

With held-intervals

The pseudoinverse method can be adapted to handle tuning schemes which have held-intervals. The basic idea here is that we can no longer simply grab the tuning found as the point at the bottom of the tuning damage graph bowl hovering above the floor, because that tuning probably doesn't also happen to be one that leaves the requested interval unchanged. We can imagine an additional feature in our tuning damage space: the line across this bowl which connects every point where the generator tunings work out such that our interval is indeed unchanged. Again, this line probably doesn't pass straight through the bottommost point of our RMS-damage graph. But that's okay. That just means we could still decrease the overall damage further if we didn't hold the interval unchanged. But assuming we're serious about holding this interval unchanged, we've simply modified the problem a bit. Now we're looking for the point along this new held-interval line which is closest to the floor. Simple enough to understand, in concept! The rest of this section is dedicated to explaining how, mathematically speaking, we're able to identify that point. It still involves matrix calculus (derivatives of vectors, and such), but now we also pull in some additional ideas. We hope you dig it.[note 5]

We'll be talking through this problem assuming a three-dimensional tuning damage graph, which is to say, we're dealing with a rank-2 temperament (the two generator dimensions across the floor, and the damage dimension up from the floor). If we asked for more than one interval to be held unchanged, then we'd flip over to the "only held-intervals" method discussed later, because at that point there's only a single possible tuning. And if we asked for no intervals to be held unchanged, then we'd be back to the ordinary pseudoinverse method which you've already learned. So for this extended example we'll be assuming one held-interval. But the principles discussed here generalize to higher-rank temperaments and more held-intervals, as long as the dimensionality supports them. These higher-dimensional examples are, of course, more difficult to visualize, so we've chosen the simplest possibility that sufficiently demonstrates the ideas we need to learn.
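Before developing the machinery below, it may help to see that the lowest point on the held-interval line can be found by brute geometry. This minimal Python sketch swaps in a different, elementary technique that is not the method this section goes on to derive. It parametrizes the held-interval line as [math]\displaystyle{ 𝒈_0 + s\,\textbf{d} }[/math] and minimizes the resulting one-variable parabola in [math]\displaystyle{ s }[/math]. The example is hypothetical: 5-limit meantone [⟨1 1 0] ⟨0 1 4]}, target-intervals 2/1, 3/2 and 5/4, unity weights, and 5/4 as the single held-interval. The helper names are our own.

```python
import math


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


# Hypothetical example: meantone, targets 2/1, 3/2, 5/4, unity weights, held 5/4.
M = [[1, 1, 0], [0, 1, 4]]
T = [[1, -1, -2], [0, 1, 0], [0, 0, 1]]
W = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
H = [[-2], [0], [1]]                            # held-interval 5/4 as a column
j = [[1200 * math.log2(p) for p in (2, 3, 5)]]

frak_M = matmul(matmul(M, T), W)  # 𝔐 = MTW
frak_j = matmul(matmul(j, T), W)  # 𝖏 = 𝒋TW


def f(g):
    """Sum of squared weighted target errors for generator tuning map g (plain list)."""
    e = [t - jt for t, jt in zip(matmul([g], frak_M)[0], frak_j[0])]
    return sum(x * x for x in e)


# Held-interval line: every g with g·(MH) = 𝒋H.
a = [row[0] for row in matmul(M, H)]  # MH as a plain 2-vector
c = matmul(j, H)[0][0]                 # 𝒋H, the just size of the held-interval
g0 = [c * x / dot(a, a) for x in a]    # one point on the line
d = [-a[1], a[0]]                      # a direction along the line (perpendicular to a)

# Along g(s) = g0 + s·d the errors are u + s·v, so f(s) is a parabola in s.
u = [t - jt for t, jt in zip(matmul([g0], frak_M)[0], frak_j[0])]
v = matmul([d], frak_M)[0]
s_best = -dot(u, v) / dot(v, v)        # vertex of that parabola
g_best = [x + s_best * y for x, y in zip(g0, d)]
print(g_best, f(g_best))
```

This shortcut relies on the held-interval set being a line that is easy to parametrize, which is why it suits a rank-2 example with one held-interval.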

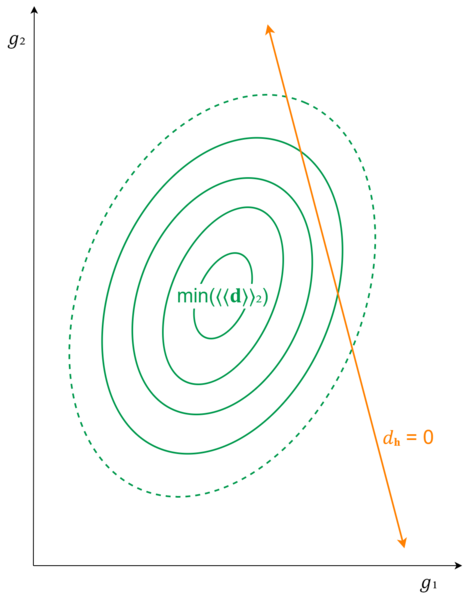

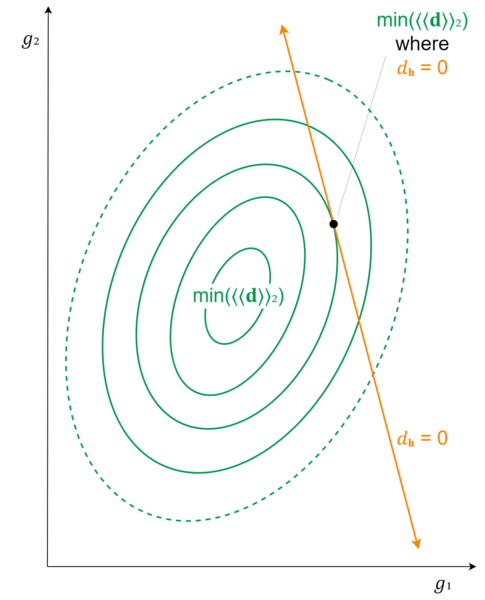

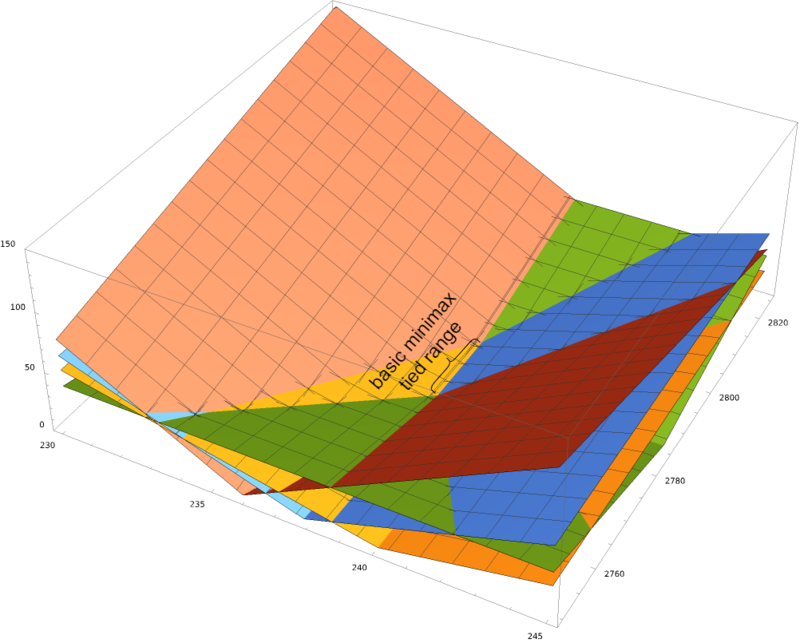

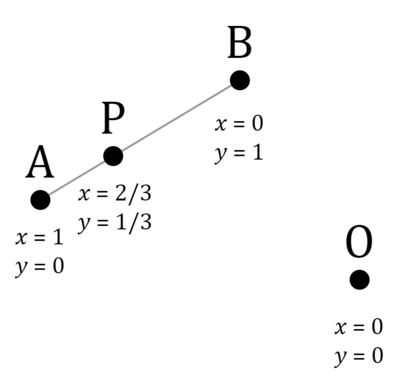

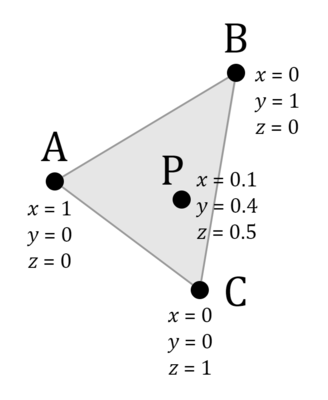

Topographic view

[math]\displaystyle{
% Latex equivalents of the wiki templates llzigzag and rrzigzag for double zigzag brackets.
% Annoyingly, we need slightly different Latex versions for the different Latex sizes.
\def\smallLLzigzag{\hspace{-1.4mu}\style{display:inline-block;transform:scale(.62,1.24)translateY(.05em);font-family:sans-serif}{ꗨ\hspace{-2.6mu}ꗨ}\hspace{-1.4mu}}
\def\smallRRzigzag{\hspace{-1.4mu}\style{display:inline-block;transform:scale(-.62,1.24)translateY(.05em);font-family:sans-serif}{ꗨ\hspace{-2.6mu}ꗨ}\hspace{-1.4mu}}
\def\llzigzag{\hspace{-1.6mu}\style{display:inline-block;transform:scale(.62,1.24)translateY(.07em);font-family:sans-serif}{ꗨ\hspace{-3mu}ꗨ}\hspace{-1.6mu}}
\def\rrzigzag{\hspace{-1.6mu}\style{display:inline-block;transform:scale(-.62,1.24)translateY(.07em);font-family:sans-serif}{ꗨ\hspace{-3mu}ꗨ}\hspace{-1.6mu}}
\def\largeLLzigzag{\hspace{-1.8mu}\style{display:inline-block;transform:scale(.62,1.24)translateY(.09em);font-family:sans-serif}{ꗨ\hspace{-3.5mu}ꗨ}\hspace{-1.8mu}}
\def\largeRRzigzag{\hspace{-1.8mu}\style{display:inline-block;transform:scale(-.62,1.24)translateY(.09em);font-family:sans-serif}{ꗨ\hspace{-3.5mu}ꗨ}\hspace{-1.8mu}}
\def\LargeLLzigzag{\hspace{-2.5mu}\style{display:inline-block;transform:scale(.62,1.24)translateY(.1em);font-family:sans-serif}{ꗨ\hspace{-4.5mu}ꗨ}\hspace{-2.5mu}}
\def\LargeRRzigzag{\hspace{-2.5mu}\style{display:inline-block;transform:scale(-.62,1.24)translateY(.1em);font-family:sans-serif}{ꗨ\hspace{-4.5mu}ꗨ}\hspace{-2.5mu}}
}[/math]

Back in the fundamentals article, we briefly demonstrated a special way to visualize a 3-dimensional tuning damage graph 2-dimensionally: in a topographic view, where the [math]\displaystyle{ z }[/math]-axis points straight out of the page and is represented by contour lines tracing out the shapes of points which share the same [math]\displaystyle{ z }[/math]-value.
In the case of a tuning damage graph, then, this will show concentric rings (not necessarily circles) around the lowest point of our damage bowl, representing how damage increases smoothly in any direction you take away from that minimum point. So far we haven't made much use of this visualization approach, but for tuning schemes with [math]\displaystyle{ p=2 }[/math] and at least one held-interval, it's the perfect tool for the job.

So now we draw our held-interval line across this topographic view.

Our first guess at the lowest point on this line might be the point on it closest to the actual minimum damage. Good guess, but not necessarily correct. It would be correct if the rings were exact circles. But they're not necessarily; they might be oblong, and they may be skewed at an angle that has no obvious relationship to the held-interval line. So for a general means of finding the lowest point on the held-interval line, we need to think a bit more deeply about the problem.

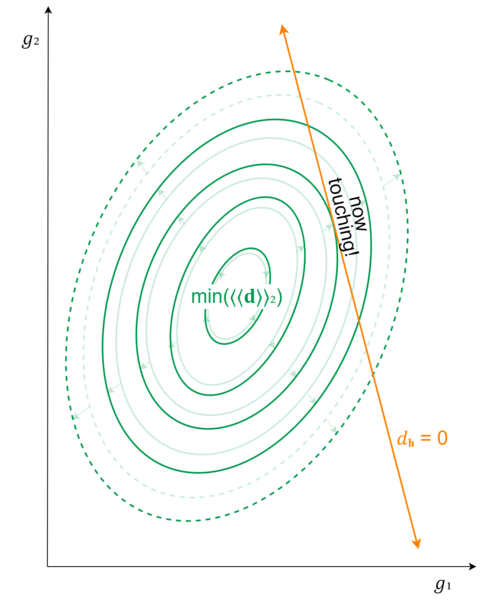

The first step to understanding better is to adjust our contour lines. The obvious place to start was at increments of 1 damage. But we're going to want to rescale so that one of our contour lines exactly touches the held-interval line. To be clear, we're not changing the damage graph at all; we're simply changing how we visualize it on this topographic view.

The point where this contour line touches the held-interval line, then, is the lowest point on the held-interval line; that is, of all the points where the held-interval is unchanged, it is the one where the overall damage to the target-intervals is least. This should be easy enough to see: if you step even an infinitesimally small amount in either direction along the held-interval line, you will no longer be touching the contour line, but will instead be just outside of it, which means you have slightly higher damage than the constant damage amount that contour traces.

Next, we need to figure out how to identify this point. It may seem frustrating, because we're looking right at it! But we don't already have formulas for these contour lines.

Matching slopes

In order to identify this point, it's going to be more helpful to look at the entire graph of our held-interval's error. That is, rather than only drawing the line where it's zero:

[math]\displaystyle{

𝒕\mathrm{H} - 𝒋\mathrm{H} = 0

}[/math]

We'll draw the whole thing:

[math]\displaystyle{

𝒕\mathrm{H} - 𝒋\mathrm{H}

}[/math]

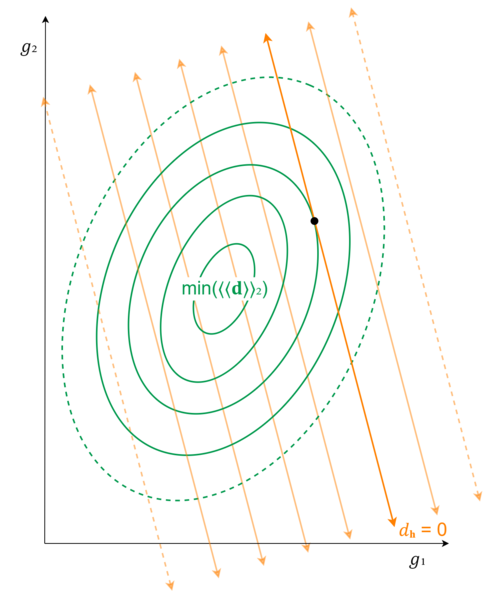

If the original graph was like a line drawn diagonally across the floor, the full graph is a tilted plane running through that line: on one side it ascends up and out of the floor, and on the other side it descends down into the floor. In the topographic view, then, this graph appears as equally spaced lines parallel to the original line, emanating outward from it in both directions.
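Because [math]\displaystyle{ 𝒕\mathrm{H} - 𝒋\mathrm{H} = 𝒈M\mathrm{H} - 𝒋\mathrm{H} }[/math] is linear in [math]\displaystyle{ 𝒈 }[/math], its slope is the same vector [math]\displaystyle{ M\mathrm{H} }[/math] at every point, which is why its contours are parallel and equally spaced. This minimal Python sketch checks that with finite differences and computes the distance between neighboring 1-cent contours, [math]\displaystyle{ 1/\|M\mathrm{H}\| }[/math]. It assumes a hypothetical meantone mapping [⟨1 1 0] ⟨0 1 4]} and held-interval 5/4, and the helper names are our own.

```python
import math

# Hypothetical example: meantone mapping, held-interval 5/4.
M = [[1, 1, 0], [0, 1, 4]]
H = [-2, 0, 1]
j = [1200 * math.log2(p) for p in (2, 3, 5)]

MH = [sum(m * h for m, h in zip(row, H)) for row in M]  # generator counts of the held-interval
jH = sum(x * h for x, h in zip(j, H))                    # its just size, in cents


def held_error(g):
    """𝒈MH − 𝒋H: tempered minus just size of the held-interval, in cents."""
    return sum(x * y for x, y in zip(g, MH)) - jH


def numeric_gradient(func, g, h=1e-3):
    """Central-difference estimate of the slope of func at g."""
    grad = []
    for k in range(len(g)):
        up, down = list(g), list(g)
        up[k] += h
        down[k] -= h
        grad.append((func(up) - func(down)) / (2 * h))
    return grad


for g in ([1200.0, 700.0], [1190.0, 690.0], [1210.0, 720.0]):
    print(g, round(held_error(g), 3), numeric_gradient(held_error, g))

# Neighboring contours (error 0, 1, 2, ... cents) are this far apart in generator space.
spacing = 1 / math.hypot(*MH)
print(spacing)
```

The printed slope is the same at every sampled point, unlike the slope of the damage bowl, which changes from point to point.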

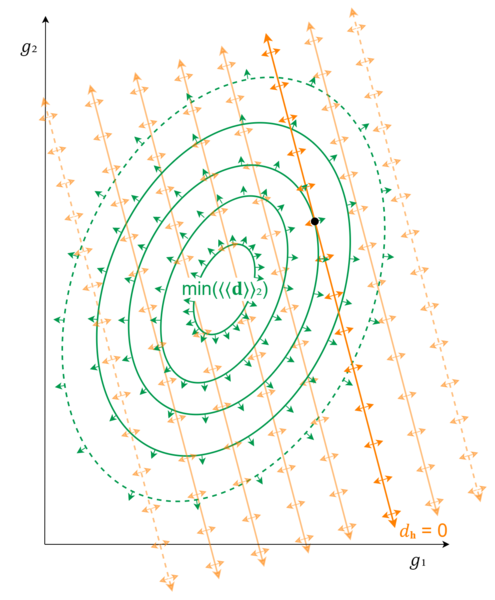

The next thing we want to see are some little arrows along all of these contour lines, both for the damage graph and for the held-interval graph, which point perpendicularly to them.

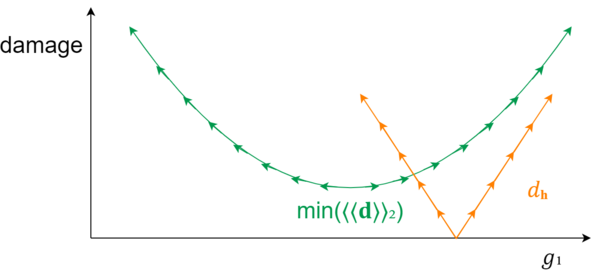

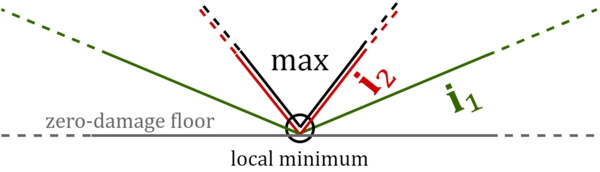

What these little arrows represent are the derivatives of these graphs at those points, or in other words, their slopes. If this isn't clear, it might help to step back for a moment to 2D and draw little arrows in a similar fashion.

In higher dimensions, the generalized way to think about slope is as the gradient: the vector pointing in the direction of steepest ascent from the given point.

Now, we're not attempting to distinguish the sizes of these slopes here. We could do that, perhaps by changing the relative scale of the arrows. But that's not particularly important for our purposes. We only need to notice the different directions these slopes point.

You may recall that in the simpler case (with no held-intervals) we identified the point at the bottom of the bowl using derivatives; this point is where the derivative (slope) is equal to zero. Well, what can we notice about the point we're seeking to identify? It's where the slopes of the RMS damage graph for the target-intervals and of the held-interval's error graph match up, pointing along the same line!
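The matching-slopes observation can be checked numerically. This minimal Python sketch assumes a hypothetical setup: 5-limit meantone [⟨1 1 0] ⟨0 1 4]}, target-intervals 2/1, 3/2 and 5/4, unity weights, and held-interval 5/4. It measures the slope of the sum of squared damages rather than of the RMS itself. The RMS is an increasing function of that sum, so where the sum is nonzero the RMS slope is a positive multiple of this one and points the same way. The sketch finds the lowest point on the held-interval line by minimizing along the line directly. It then uses a 2D cross product to confirm that the damage slope there lies along [math]\displaystyle{ M\mathrm{H} }[/math], the slope of the held-interval error, while at another point on the line it does not. In this example the two slopes point in opposite directions, so the check is for lining up rather than strict equality. The helper names are our own.

```python
import math


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


# Hypothetical setup: meantone, targets 2/1, 3/2, 5/4, unity weights, held 5/4.
M = [[1, 1, 0], [0, 1, 4]]
T = [[1, -1, -2], [0, 1, 0], [0, 0, 1]]
H = [[-2], [0], [1]]
j = [[1200 * math.log2(p) for p in (2, 3, 5)]]
frak_M = matmul(M, T)  # 𝔐 = MTW with W = identity
frak_j = matmul(j, T)  # 𝖏 = 𝒋TW with W = identity


def errors(g):
    """Weighted target errors 𝒈𝔐 − 𝖏 for a generator tuning map g (plain list)."""
    return [t - jt for t, jt in zip(matmul([g], frak_M)[0], frak_j[0])]


def damage_slope(g):
    """Slope of the sum of squared damages, 2(𝒈𝔐 − 𝖏)𝔐ᵀ, one entry per generator."""
    e = errors(g)
    return [2 * dot(e, row) for row in frak_M]


# Lowest point on the held-interval line g·a = c, found by minimizing a parabola along it.
a = [row[0] for row in matmul(M, H)]  # MH: slope of the held-interval error, everywhere
c = matmul(j, H)[0][0]
d = [-a[1], a[0]]                     # direction along the line
g0 = [c * x / dot(a, a) for x in a]   # one point on the line
u, v = errors(g0), matmul([d], frak_M)[0]
g_best = [x - dot(u, v) / dot(v, v) * y for x, y in zip(g0, d)]


def cross(p, q):
    """2D cross product: zero exactly when p and q lie along the same line."""
    return p[0] * q[1] - p[1] * q[0]


print(cross(damage_slope(g_best), a))                 # close to 0: the slopes line up
g_elsewhere = [x + 3 * y for x, y in zip(g_best, d)]  # another point on the held line
print(cross(damage_slope(g_elsewhere), a))            # about -120: they do not
```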

So, our first draft of our goal might look something like this:

[math]\displaystyle{

\dfrac{\partial}{\partial{𝒈}}( \llzigzag \textbf{d} \rrzigzag _2) = \dfrac{\partial}{\partial{𝒈}}(𝒓\mathrm{H})

}[/math]

But that's not quite specific enough. To ensure we grab a point that satisfies that condition and is also on our held-interval line, we can simply add another equation:

[math]\displaystyle{

\begin{align}

\dfrac{\partial}{\partial{𝒈}}( \llzigzag \textbf{d} \rrzigzag _2) &= \dfrac{\partial}{\partial{𝒈}}(𝒓\mathrm{H}) \\[12pt]

𝒓\mathrm{H} &= 0

\end{align}

}[/math]