Dave Keenan & Douglas Blumeyer's guide to RTT/Tuning fundamentals

This is article 3 of 9 in Dave Keenan & Douglas Blumeyer's guide to RTT, or "D&D's guide" for short. In this article, we'll be explaining fundamental concepts behind the various schemes that have been proposed for tuning temperaments. Our explanations here will not assume much prior mathematical knowledge.

A tuning, in general xenharmonics, is simply a set of pitches for making music. This is similar to a scale, but without necessarily being a melodic sequence; a tuning may contain several scales. In regular temperament theory (RTT), however, tuning has a specialized meaning as the exact number of cents for each generator of a regular temperament, such as the octave and fifth of the typical form of the quarter-comma tuning of meantone temperament (it can also refer to a single such generator, as in "what is the tuning of quarter-comma meantone's fifth?"). This RTT meaning of "tuning" is slightly different from the general xenharmonic meaning of "tuning", because generator sizes alone do not completely specify a pitch set; we still have to decide which pitches to generate with these generators.

If you already know which tuning schemes you prefer and why, our RTT library in Wolfram Language supports computing them (as well as many other tuning schemes whose traits are logical extensions or recombinations of the previously identified schemes' traits), and it can be run for free in your web browser.

Douglas's introduction to regular temperament tuning

If you've been around RTT much, you've probably encountered some of its many named tuning schemes. Perhaps you've read Paul Erlich's seminal paper A Middle Path, which—among other major contributions to the field—introduced an important tuning scheme he called "TOP". Or perhaps you've used Graham Breed's groundbreaking web app [1], which lets one explore the wide world of regular temperaments, providing their "TE" and "POTE" tunings along the way. Or perhaps you've browsed the Xenharmonic wiki, and encountered temperaments whose info boxes include their "CTE" tuning or still others.

When I (Douglas) initially set out to teach myself about RTT tuning, it seemed like the best place to start was to figure out the motivations for these tuning schemes, as well as how to compute them. Dave later told me that I had gone about the whole endeavor backwards. I had jumped into the deep end before learning to swim (in this metaphor, I suppose, Dave would be the lifeguard who saved me from drowning).

At that time, not much had been written about the fundamentals of tuning methodology (at least not much had been written in a centralized place; there's certainly tons of information littered about on the Yahoo! Groups tuning lists and Facebook). In lieu of such materials, what I probably should have done is just sit down with a temperament or three and get my feet wet by trying to tune it myself, discovering the most basic problems of tuning directly, at my own pace and in my own way.

This is what folks like Paul, Graham, and Dave had to do in the earlier days of RTT. No one had been there yet. No papers or wiki pages had been written about the sorts of advanced solutions they would eventually develop, building up to them gradually, tinkering with tuning ideas over decades. I should have started at the beginning like they did (with simple and obvious ideas like damage, target-intervals, and optimization) and worked my way up to intermediate concepts like the "TOP" and "POTE" tuning schemes. But I didn't. I got distracted by the tricky stuff too early on, and got myself badly lost in the process.

And so Dave and I have written this article to help spare other beginners from a fate similar to the one that befell me. This article is meant to fill that aforementioned void of materials about the fundamentals of tuning, the stuff that was perhaps taken for granted by the RTT tuning pioneers once they'd reached the point of publishing their big theoretical achievements. If you consider yourself more of a practical musician than a theoretical one, these fundamentals are the most important things to learn. Those generalizable tuning schemes that theorists have given names have great value, such as for being relatively easy for computers to calculate, and for consistently and reasonably documenting temperaments' tunings, but they are not necessarily the tunings that you'll actually most want to use in your next piece of music. By the end of this article, you'll know about the different tuning effects you might care about, enough to know what to ask a computer for to get the generator tunings you want.

And if you're anything like I was when I was beginning to learn about regular temperament generators, while you are keen to learn how to find the optimum tunings for generators (which is what this article and the rest of the series are primarily concerned with), you may also be keen to learn how to find good choices of which JI interval(s) to think of each generator as representing. In that case, what you are looking for is called a generator detempering, so please check that out. I would also like to impress upon you that generator detemperings are very different from optimum generator tunings and should not be confused with them; it took Dave quite some time and effort to disabuse me of this conflation.

Initial definitions

Before digging into anything in detail, let's first define seven core concepts: tuning itself, damage, error, weight, target-intervals, held-intervals, and optimization.

Tuning

A common point of confusion among newcomers to RTT is the difference between tuning and temperament. So, let's clear that distinction up right away.

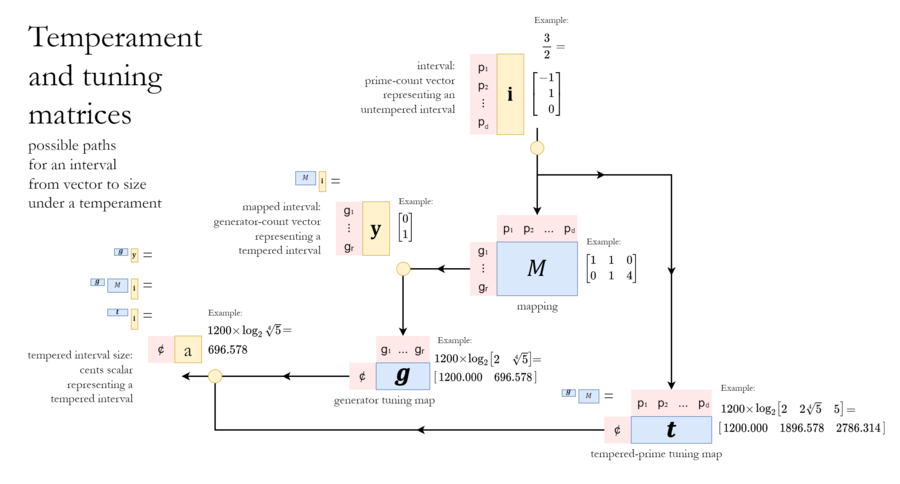

A regular temperament (henceforth simply "temperament") is not a finalized pitch system. It is only an abstract set of rules that a pitch system needs to follow. A temperament merely describes one way to approximate prime harmonics by using a smaller set of generating intervals, and it can be encapsulated in its entirety by a matrix of integers such as the following:

[math]\displaystyle{ \left[ \begin{matrix} 1 & 1 & 0 \\ 0 & 1 & 4 \\ \end{matrix} \right] }[/math]

In this case, the temperament tells us—one column at a time—that we reach prime 2 using 1 of the first generator, prime 3 using 1 each of the two generators, and prime 5 using 4 of the second generator. You may recognize this as the mapping for meantone temperament, which is an important historical temperament.

As we can see from the above example, temperaments do not make any specifications about the sizes of their generators. With a bit of training, one can deduce from the six numbers of this matrix that the first generator should be around an octave in size, and that the second generator should be around a perfect fifth in size—that is, assuming that we want this temperament to closely approximate these three prime harmonics 2, 3, and 5. But the exact sizes of these generators are left as an open question; no cents values are in sight here.

For one classic example of a tuning of meantone, consider quarter-comma tuning. Here, the octave is left pure, and the ~3/2 is set to [math]\displaystyle{ \small{696.578} \, \mathsf{¢} }[/math].[note 1] Another classic example of a meantone tuning would be fifth-comma, where the octave is also left pure, but the ~3/2 is instead set to [math]\displaystyle{ \small{697.654} \, \mathsf{¢} }[/math]. And if you read Paul Erlich's early RTT paper A Middle Path, you'll find him suggesting that you temper your octaves (please!)[note 2]; for meantone, that means tuning your octave to [math]\displaystyle{ \small{1201.699} \, \mathsf{¢} }[/math] and your fifth to [math]\displaystyle{ \small{697.564} \, \mathsf{¢} }[/math]. So any piece of music that wishes to specify both its temperament and its tuning could provide the temperament information in the form of a matrix of integers such as the one featured above (or perhaps the temperament's name, if it is well-known enough), followed by its tuning information in the form of a list of generator tunings (in units of cents per generator) such as those noted here, which we'd call a generator tuning map. Here's how the three tunings we mentioned in this paragraph look set next to the temperament:

[math]\displaystyle{ \begin{array} {c} \text{tuning:} \\ \text{quarter-comma} \\ \left[ \begin{matrix} 1200.000 & 696.578 \end{matrix} \right] \end{array} \begin{array} {c} \text{temperament:} \\ \text{meantone} \\ \left[ \begin{matrix} 1 & 1 & 0 \\ 0 & 1 & 4 \\ \end{matrix} \right] \end{array} }[/math]

[math]\displaystyle{ \begin{array} {c} \text{tuning:} \\ \text{fifth-comma} \\ \left[ \begin{matrix} 1200.000 & 697.654 \end{matrix} \right] \end{array} \begin{array} {c} \text{temperament:} \\ \text{meantone} \\ \left[ \begin{matrix} 1 & 1 & 0 \\ 0 & 1 & 4 \\ \end{matrix} \right] \end{array} }[/math]

[math]\displaystyle{ \begin{array} {c} \text{tuning:} \\ \text{Middle Path} \\ \left[ \begin{matrix} 1201.699 & 697.564 \end{matrix} \right] \end{array} \begin{array} {c} \text{temperament:} \\ \text{meantone} \\ \left[ \begin{matrix} 1 & 1 & 0 \\ 0 & 1 & 4 \\ \end{matrix} \right] \end{array} }[/math]
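To make the relationship between a generator tuning map and a mapping concrete, here is a minimal Python sketch that multiplies each of the three generator tuning maps above by the meantone mapping, giving the tempered size of each prime and its error against just intonation. The function and variable names (`tempered_primes`, `TUNINGS`, and so on) are our own illustrative choices, not part of any existing library.

```python
import math

# Meantone mapping from the text: one row per generator, one column per prime (2, 3, 5).
MAPPING = [
    [1, 1, 0],
    [0, 1, 4],
]
PRIMES = [2, 3, 5]

# Generator tuning maps (cents per generator) quoted in the text.
TUNINGS = {
    "quarter-comma": [1200.000, 696.578],
    "fifth-comma": [1200.000, 697.654],
    "Middle Path": [1201.699, 697.564],
}


def tempered_primes(generator_tuning_map, mapping):
    """Multiply the generator tuning map by the mapping, one column (prime) at a time."""
    return [
        sum(g * row[col] for g, row in zip(generator_tuning_map, mapping))
        for col in range(len(mapping[0]))
    ]


def just_primes(primes):
    """Sizes of the primes in cents under just intonation."""
    return [1200 * math.log2(p) for p in primes]


for name, generators in TUNINGS.items():
    tempered = tempered_primes(generators, MAPPING)
    errors = [t - j for t, j in zip(tempered, just_primes(PRIMES))]
    print(name, [round(t, 3) for t in tempered], [round(e, 3) for e in errors])
```

Reading one column at a time, as the text does: prime 3 comes out as one octave plus one fifth, and prime 5 as four fifths.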

This open-endedness of tuning is not a bug in the design of temperaments, by the way. It's definitely a feature. Being able to speak about harmonic structure at the level of abstraction that temperaments provide for us is certainly valuable. (And we should note here that even after choosing a temperament's tuning, you're still in the realm of abstraction; you won't have a fully-formed pitch system ready to make music with until you choose how many pitches and which pitches exactly to generate with your generators!)

But why should we care about the tuning of a regular temperament, or—said another way—what makes some generator tunings better than others? The plain answer to this question is: some sound better. And while there may be no universal best tuning for each temperament, we could at least make cases for certain types of music—or specific pieces of music, even—sounding better in one tuning over another.

Now, an answer like that suggests that RTT tuning could be done by ear. Well, it certainly can. And for some people, that might be totally sufficient. But other people will seek a more objective answer to the question of how to tune their temperament. For people of the latter type: we hope you will get a lot out of this article.

And perhaps this goes without saying, but another important reason to develop objective and generalized schemes for tuning temperaments is to be able to empower synths and other microtonal software with intelligent default behavior. Computers aren't so good with the whole tuning-by-ear thing. (Though to help this article age a bit more gracefully, we should say they are getting better at it every day!)

So to tie up this temperament vs. tuning issue[note 3], maybe we could get away with this analogy: if temperament is like a color scheme, then tuning is like the exact hex values of your colors. Or maybe we could think of tuning as the fine tuning of a temperament. Either way, tuning temperaments is a good mix of science and art—like any other component of music—and we hope you'll find it as interesting to tinker with as we have.

Damage, error, and weight

When seeking an objectively good tuning of a chosen temperament, what we can do to begin with is find a way to quantify how good a tuning sounds. But it turns out to be easier to quantify how bad it sounds. We accomplish this using a quantity called damage.

We remind you that the purpose of temperament is to give us the consonances we want, without needing as many pitches as we would need with just intonation, and allowing more modulation due to more regular step sizes than just intonation. The price we pay for this is some audible damage to the quality of the intervals due to their cent errors.

The simplest form of damage is how many cents[note 4] off an interval is from its just or pure tuning; in this case, damage is equivalent to the absolute value of the error, where the error means simply the difference in cents between the interval under this tuning of this temperament and the interval in just intonation.[note 5]

For example, in 12-ET, the approximation of the perfect fifth ~3/2 is 700 ¢, which is tuned narrow compared with the just [math]\displaystyle{ \frac{3}{2} }[/math] interval which is 701.955 ¢, so the error here is −1.955 ¢ (negative), while the damage is 1.955 ¢ (positive, as always).
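The arithmetic of this example can be sketched in a few lines of Python; the helper name `cents` is our own.

```python
import math


def cents(ratio):
    """Size of a frequency ratio in cents."""
    return 1200 * math.log2(ratio)


just_fifth = cents(3 / 2)        # about 701.955 cents
tempered_fifth = 700.0           # the 12-ET approximation of ~3/2
error = tempered_fifth - just_fifth  # signed: negative means tuned narrow
damage = abs(error)                  # unweighted damage is never negative

print(round(just_fifth, 3), round(error, 3), round(damage, 3))
# prints: 701.955 -1.955 1.955
```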

Theoreticians and practitioners frequently choose to weight these absolute errors, in order to capture how some intervals are more important to tune accurately than others. A variety of damage weight approaches are available.

We'll discuss the basics of damage and weighting in the damage section, but we consider the various types of weighting to be advanced concepts and so do not discuss those here (you can read about them in our dedicated article for them).

Target-intervals

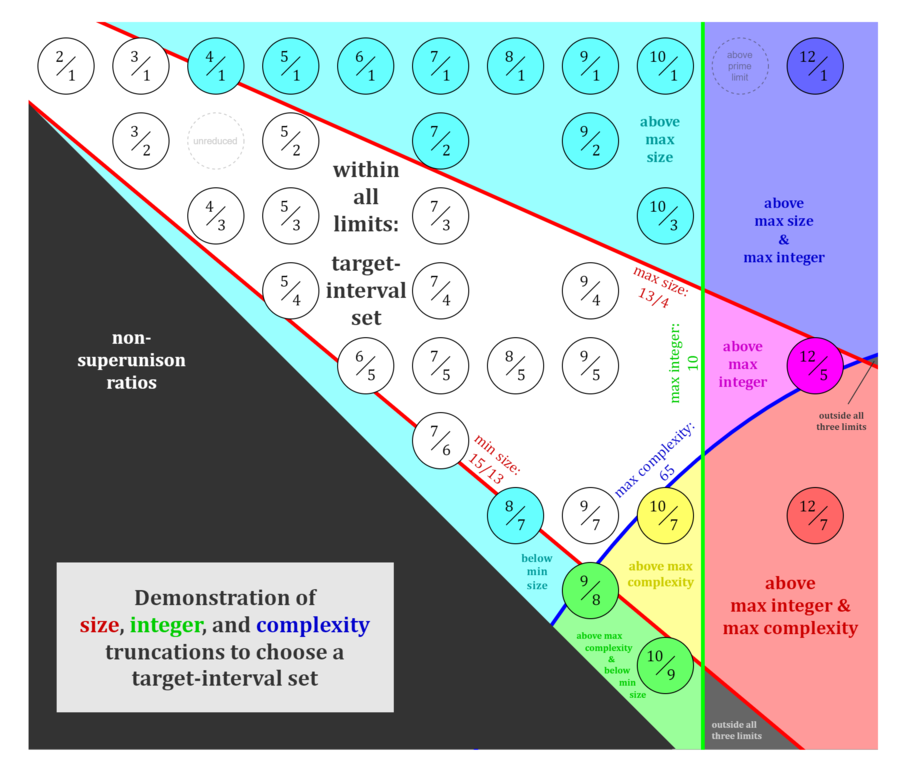

In order to quantify damage, we need to know what we're quantifying damage to. These are a set of intervals of our choosing, whose damage we seek to minimize, and we can call them our target-intervals. Typically these are consonant intervals, and in particular they are the consonances that are most likely to be used in the music which the given temperament is being tuned in order to perform; hence our interest in minimizing the damage to them.

If you don't have a specific set of target-intervals in mind already, this article will give some good recommendations for default interval sets to target. To give you a basic idea of what one might look like, for a 5-limit temperament, you might want to use [math]\displaystyle{ \left\{\frac{2}{1}, \frac{3}{1}, \frac{3}{2}, \frac{4}{3}, \frac{5}{2}, \frac{5}{3}, \frac{5}{4}, \frac{6}{5} \right\} }[/math]. But we'll say more about choosing such sets later, in the target-intervals section.

There are even ways to avoid choosing a target-interval set at all, but those are an intermediate-level concept and we won't be getting to those until toward the end of this article.

Held-intervals

Sometimes targeting an interval to minimize its damage is not enough; we insist that absolutely zero damage be dealt to this interval. Most commonly when people want this, it's for the octave. Because we hold this interval to be unchanged, we call it a held-interval of the tuning. You can think of "held" in this context something like "the octave held true", "we held the octave fixed", or "we held onto the octave."
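As an illustration that goes a little beyond the paragraph above: for a temperament with two generators, holding two independent intervals pure leaves no freedom at all, so the tuning is completely determined. The sketch below assumes the meantone mapping shown earlier and holds both the octave and 5/4, which recovers the quarter-comma tuning. The function names are hypothetical, and the 2×2 system is solved with Cramer's rule purely for brevity.

```python
import math

MAPPING = [[1, 1, 0], [0, 1, 4]]  # meantone mapping from the text
PRIMES = (2, 3, 5)


def generator_counts(vector, mapping):
    """How many of each generator the temperament uses to reach an interval."""
    return [sum(m * v for m, v in zip(row, vector)) for row in mapping]


def just_cents(vector):
    """Just size in cents of an interval given as prime counts."""
    return sum(v * 1200 * math.log2(p) for v, p in zip(vector, PRIMES))


def tuning_holding(held_a, held_b, mapping):
    """Solve for the two generator sizes that tune both held-intervals pure."""
    a1, a2 = generator_counts(held_a, mapping)
    b1, b2 = generator_counts(held_b, mapping)
    just_a, just_b = just_cents(held_a), just_cents(held_b)
    det = a1 * b2 - a2 * b1
    if det == 0:
        raise ValueError("these held-intervals do not pin down both generators")
    g1 = (just_a * b2 - a2 * just_b) / det
    g2 = (a1 * just_b - just_a * b1) / det
    return g1, g2


octave = [1, 0, 0]        # 2/1
major_third = [-2, 0, 1]  # 5/4
g1, g2 = tuning_holding(octave, major_third, MAPPING)
print(round(g1, 3), round(g2, 3))
```

With only one held-interval (say, the octave alone), one degree of freedom remains, and that remaining freedom is what gets optimized.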

Optimization

With damage being the quantification of how off each of these target-intervals sounds under a given tuning of a given temperament, then optimization means to find the tuning that causes the least overall damage—for some definition of "least overall". Defining exactly what is meant by "least overall", then, is the first issue we will be tackling in detail in this article, coming up next.

Optimization

Just about any reasonable tuning scheme will optimize generator tunings in order to cause a minimal amount of damage to consonant intervals.[note 6] And we can see that tuning schemes may differ by how damage is weighted (if it is weighted at all), and also by which intervals are targeted for damage minimization. But before we unpack those two types of differences, let's first answer this question: given multiple target-intervals whose damages we are trying to minimize all at the same time, how exactly should we define "least overall" damage?

The problem

"Least overall damage"

Consider the following two tunings of the same temperament, and the damages they deal to the same set of target-intervals [math]\displaystyle{ \textbf{i}_1 }[/math], [math]\displaystyle{ \textbf{i}_2 }[/math], and [math]\displaystyle{ \textbf{i}_3 }[/math]:

| | [math]\displaystyle{ \textbf{i}_1 }[/math] | [math]\displaystyle{ \textbf{i}_2 }[/math] | [math]\displaystyle{ \textbf{i}_3 }[/math] |
|---|---|---|---|
| Tuning A | 0 | 0 | 4 |
| Tuning B | 2 | 2 | 1 |

(In case you're wondering about what units these damage values are in, for simplicity's sake, we could assume these absolute errors are not weighted, and therefore quantified as cents. But the units do not really matter for purposes of this discussion, so we don't need to worry about them.)

Which tuning should we choose—Tuning A, or Tuning B?

- Tuning A does an average of [math]\displaystyle{ \dfrac{0 + 0 + 4}{3} = 1.\overline{3} }[/math] damage, while Tuning B does an average of [math]\displaystyle{ \dfrac{2 + 2 + 1}{3} = 1.\overline{6} }[/math] damage, so by that interpretation, [math]\displaystyle{ 1.\overline{3} \lt 1.\overline{6} }[/math] and Tuning A does the least damage.

- However, Tuning B does no more than [math]\displaystyle{ 2 }[/math] damage to any one interval, while Tuning A does all four damage to a single interval, so by that interpretation [math]\displaystyle{ 4 \gt 2 }[/math] and it's Tuning B which does the least damage.

This very simple example illustrates a core problem of tuning: there is more than one reasonable interpretation of "least overall" across a set of multiple target-intervals' damages.
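The two competing interpretations can be checked directly. This is only a sketch of the comparison made above, using the damage values from the table; the names are our own.

```python
# Damages dealt to the three target-intervals, copied from the table above.
DAMAGES = {
    "Tuning A": [0, 0, 4],
    "Tuning B": [2, 2, 1],
}


def average_damage(damages):
    return sum(damages) / len(damages)


def max_damage(damages):
    return max(damages)


# Pick the tuning with the least damage under each interpretation.
best_by_average = min(DAMAGES, key=lambda name: average_damage(DAMAGES[name]))
best_by_max = min(DAMAGES, key=lambda name: max_damage(DAMAGES[name]))

print("least average damage:", best_by_average)
print("least max damage:", best_by_max)
```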

Common interpretations

The previous section has also demonstrated two of the most common interpretations of "least overall" damage that theoreticians use:

- The first interpretation—the one which averaged the individual target-intervals' damages, then chose the least among them—is known as the "minimized average", or miniaverage of the damages.

- The second interpretation—the one which took the greatest from among the individual damages, then chose the least among them—is known as the "minimized maximum", which we shorten to minimax.

There is also a third interpretation of "least overall" damage which is quite common. Under this interpretation, each damage gets squared before averaging, and then that average has its square root taken. Using the above example, then, Tuning A would cause

[math]\displaystyle{ \sqrt{\strut \dfrac{0^2 + 0^2 + 4^2}{3}}= 2.309 }[/math]

damage, while Tuning B would cause

[math]\displaystyle{ \sqrt{\strut \dfrac{2^2 + 2^2 + 1^2}{3}} = 1.732 }[/math]

damage; so Tuning B would be preferred by this statistic too. This interpretation is called the "minimized root mean square", or miniRMS of the damages.

In general, these three interpretations of "least overall" damage will not lead to the same choice of tuning.
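The RMS computation for the same two tunings can be sketched as follows (the function name `rms_damage` is our own):

```python
import math

DAMAGES = {
    "Tuning A": [0, 0, 4],
    "Tuning B": [2, 2, 1],
}


def rms_damage(damages):
    """Square each damage, average the squares, then take the square root."""
    return math.sqrt(sum(d * d for d in damages) / len(damages))


for name, damages in DAMAGES.items():
    print(name, round(rms_damage(damages), 3))

best_by_rms = min(DAMAGES, key=lambda name: rms_damage(DAMAGES[name]))
print("least RMS damage:", best_by_rms)
```

Because the damages are squared before averaging, Tuning A's single damage of 4 counts for 16, which outweighs everything Tuning B does combined (4 + 4 + 1 = 9).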

A terminological note before we proceed: the term "miniaverage" is not unheard of, though it is certainly less widespread than "minimax", and while "RMS" is well-established, its minimization is not well-known by "miniRMS" (more commonly you will find it referred to by other names, such as "least squares").[note 7] While we appreciate the pedagogical value of deferring to convention, we also appreciate the pedagogical value of consistency, and in this case we have chosen to prioritize consistency.

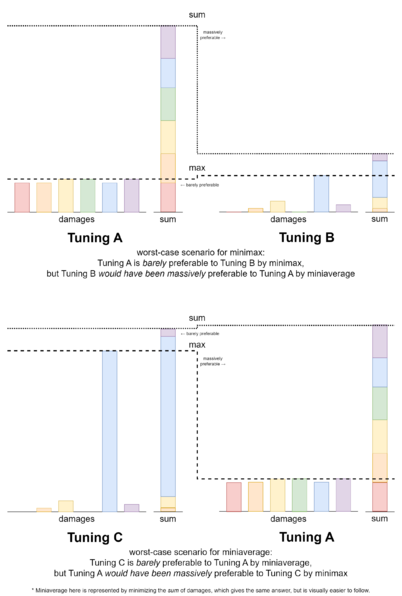

Rationales for choosing your interpretation

With three different common interpretations of "least overall" damage, you may already be starting to wonder: which interpretation is right for me, from one situation to another, and how would I decide that? To help you answer this for yourself, let's take a moment to talk through how each interpretation influences the end musical results.

We could think of the minimax interpretation as the "weakest link" approach, which is to say that it's based on the thinking that a tuning is only as strong as its weakest interval (the one which has incurred the most damage), so during the optimization procedure we'll be continuously working to improve whichever interval is the weakest at the time. To use another metaphor, we might say that the minimax interpretation is the "one bad apple spoils the bunch" interpretation. As Graham puts it, "A minimax isn't about measuring the total impact, but whether one interval has enough force to break the chord."

In the worst case scenario, a minimax tuning could lead to a relatively large amount of total damage, if it works out to be distributed very evenly across all the target-intervals. Sometimes that's what happens when we focus all of our efforts on minimizing the maximum damage amount.

At the other extreme, the miniaverage is based on the appreciably straightforward thinking that each and every target-interval's damage should be counted. Using this approach, it's sometimes possible to end up with a tuning where many intervals are tuned pure or near pure, while many other intervals are tuned pretty badly, if that's just how the miniaverage calculation works out. So while at a glance this might sound like a sort of communistic tuning where every interval gets its fair share of damage reduction, in fact this is more like a fundamentalist utilitarian society, where resources get distributed however is best for the collective.

Finally, miniRMS offers a middle-of-the-road solution, halfway between the effects of minimax and miniaverage. Later on, we'll learn about the mathematical reason why it does this.

If you're unfamiliar with the miniRMS interpretation, it's likely that it seems a bit more arbitrary than the miniaverage and minimax interpretations at first; however, by the end of this article, you will understand that miniRMS actually occupies a special position too. For now, it will suffice to note that miniRMS is related to the formula used for calculating distances in physical space, and so one way to think about it is as a way to compare tunings against each other as if they were points in a multidimensional damage space (where there is one spatial dimension for each target) where "least overall" damage is determined by how close the points are to the origin (the point where no target has any damage, which corresponds to JI).
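To spell out the connection with distance, write [math]\displaystyle{ d_1, \ldots, d_n }[/math] for the damages to the [math]\displaystyle{ n }[/math] target-intervals (these symbols are our own shorthand for this aside). Then the RMS is just the ordinary straight-line distance from the origin, scaled by a constant:

[math]\displaystyle{ \sqrt{\strut \dfrac{d_1^2 + d_2^2 + \cdots + d_n^2}{n}} = \dfrac{1}{\sqrt{n}} \sqrt{\strut d_1^2 + d_2^2 + \cdots + d_n^2} }[/math]

Since [math]\displaystyle{ \frac{1}{\sqrt{n}} }[/math] is the same for every tuning being compared, ranking tunings by RMS damage is the same as ranking them by distance from the origin. In the earlier example, Tuning A sits at the point [math]\displaystyle{ (0, 0, 4) }[/math], a distance of [math]\displaystyle{ 4 }[/math] from the origin, while Tuning B sits at [math]\displaystyle{ (2, 2, 1) }[/math], a distance of [math]\displaystyle{ \sqrt{9} = 3 }[/math]; dividing each by [math]\displaystyle{ \sqrt{3} }[/math] gives back the [math]\displaystyle{ 2.309 }[/math] and [math]\displaystyle{ 1.732 }[/math] found before.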

We note that all but one of the named tuning schemes described on the Xenharmonic wiki are minimax tuning schemes. But we also think it's important to caution the reader against interpreting this fact as evidence that minimax is clearly the proper interpretation of "least overall" damage. This predominance of named tuning schemes that are minimax tuning schemes may be due to a historical proliferation of named tuning schemes which cleverly get around the need to specify a target-interval set (these were mentioned earlier, and we're still not ready to discuss them in detail), and it turns out that being a minimax tuning scheme is one of the two necessary conditions to achieve that effect. And as alluded to earlier, while these schemes with no specific target have value for consistent, reasonable, and easy-to-compute documentation, they do not necessarily result in the most practical or nicest-sounding tunings that you would want to actually use for your music.

Tuning space

Our introductory example was simple to the point of being artificial. It presented a choice between just two tunings, Tuning A and Tuning B, and this choice was served to us on a silver platter. Once we had decided upon our preferred interpretation of "least overall" damage, the choice of tuning was straightforward: all that remained to do at that point was to compute the damage for both of the tunings, and choose whichever one of the two caused less damage.

In actuality, choosing a tuning is rarely this simple, even after deciding upon the interpretation of "least overall" damage.

Typically, we find ourselves confronting way more than two tunings to choose between. Instead, we face an infinite continuum of possible tunings to check! We can call this range of possibilities our tuning space. If a temperament has one generator, i.e. it is rank-1, then this tuning space is one-dimensional (a line), somewhere along which we'll find the tuning causing the minimum damage, and narrowing down the right place to look isn't too tricky. But if a temperament has two generators, the tuning space we need to search is two-dimensional (an entire plane, infinity squared): for each of the infinitude of possibilities for one generator, we find an entire other infinitude of possibilities for the other generator. And for rank-3 temperaments the space becomes 3D (a volume), so now we're working with infinity cubed. And so on. We can see how the challenge could quickly get out of hand, if we didn't have the right tools ready.

Tuning damage space

Each one of these three interpretations of "least overall" damage involves minimizing a different damage statistic.

- The miniaverage interpretation seeks out the minimum value of the average of the damages, within the limitations of the temperament.

- The minimax interpretation seeks out the minimum value of the max of the damages, within the limitations of the temperament.

- The miniRMS interpretation seeks out the minimum value of the RMS of the damages, within the limitations of the temperament.

So, for example, when we seek a miniaverage tuning of a temperament, we essentially visit every point—every tuning—in our tuning space, evaluating the average damage at that point (computing the damage to each of the target-intervals, for that tuning, then computing the average of those damages), and then we choose whichever of those tunings gave the minimum such average. The same idea goes for the minimax with the max damage, and the miniRMS with the RMS damage.
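Taken literally, "visit every point and keep the best" can be sketched as a brute-force grid search. The sketch below is only an illustration of that idea, not how a real tuning library would compute optimum tunings. It assumes a rank-1 temperament, 12-ET with the mapping (12, 19, 28), which is our choice for illustration, the example target-interval set listed earlier, unweighted damage, and an arbitrary search window of 99 to 101 cents in steps of 0.001 cents.

```python
import math

PRIMES = (2, 3, 5)
MAPPING_12ET = (12, 19, 28)  # assumption: steps of 12-ET that reach primes 2, 3, 5

# The example target-interval set from earlier in the text, as prime-count vectors.
TARGETS = [
    (1, 0, 0),    # 2/1
    (0, 1, 0),    # 3/1
    (-1, 1, 0),   # 3/2
    (2, -1, 0),   # 4/3
    (-1, 0, 1),   # 5/2
    (0, -1, 1),   # 5/3
    (-2, 0, 1),   # 5/4
    (1, 1, -1),   # 6/5
]


def steps(vector):
    """Number of generator steps the temperament uses for this interval."""
    return sum(m * c for m, c in zip(MAPPING_12ET, vector))


def just_cents(vector):
    return sum(c * 1200 * math.log2(p) for c, p in zip(vector, PRIMES))


def damages(generator):
    """Unweighted damage to every target-interval for one generator size (cents)."""
    return [abs(steps(v) * generator - just_cents(v)) for v in TARGETS]


def average(ds):
    return sum(ds) / len(ds)


def rms(ds):
    return math.sqrt(sum(d * d for d in ds) / len(ds))


def grid_search(statistic, low=99.0, high=101.0, step=0.001):
    """Visit evenly spaced generator sizes; keep the one with the least statistic."""
    best_generator, best_value = None, math.inf
    for k in range(round((high - low) / step) + 1):
        generator = low + k * step
        value = statistic(damages(generator))
        if value < best_value:
            best_generator, best_value = generator, value
    return best_generator, best_value


for name, statistic in [("miniaverage", average), ("minimax", max), ("miniRMS", rms)]:
    generator, value = grid_search(statistic)
    print(f"{name}: generator near {generator:.3f} cents, damage statistic {value:.3f}")
```

A grid like this only finds the optimum to within the grid spacing, and the number of points to visit grows very quickly as generators are added, which is one reason smarter methods are needed in practice.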

We can imagine taking our tuning space and adding one extra dimension on which to graph the damage each tuning causes, whether that be to each target-interval individually, or a statistic across all target-intervals' damages like their average, max, or RMS, or several of these at once. This type of space we could call "tuning damage space".

So the tuning damage space for a rank-1 temperament is 2D: the generator tunings along the [math]\displaystyle{ x }[/math]-axis, say, and the damage along the [math]\displaystyle{ y }[/math]-axis.

And the tuning damage space for a rank-2 temperament is 3D: the generator tunings along the [math]\displaystyle{ x }[/math]- and [math]\displaystyle{ y }[/math]- axes, and the damage along the [math]\displaystyle{ z }[/math]-axis.

By the way, the damage space we mentioned earlier when explaining the RMS is a different type of space than tuning damage space. For damage space, every dimension is a damage dimension, and no dimensions are tuning dimensions; there, tunings are merely labels on points in space. So tuning damage space is much more closely related to tuning space than it is to damage space, because it's basically tuning space but with one single extra dimension added for the tunings' damages.

2D tuning damage graphs

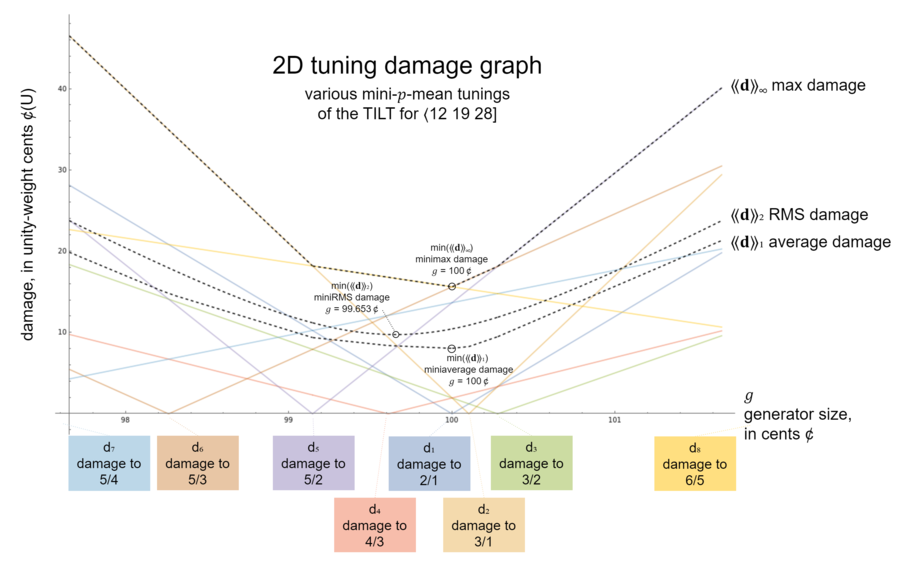

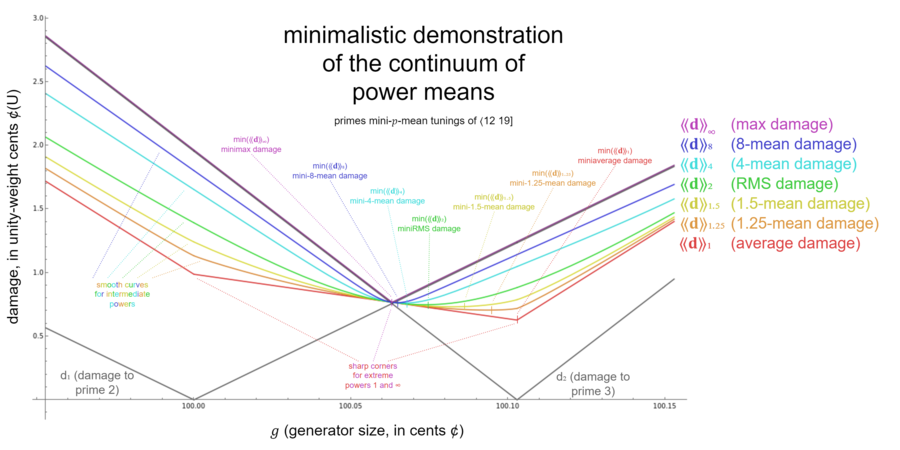

It is instructive to visualize the relationship between individual target-interval damages and these damage statistics that we're seeking to minimize. (Don't worry about the angle bracket notation we're using for the means here; we'll explain it a little later.)

This graph visualizes a simple introductory example of a rank-1 (equal) temperament, that is, a temperament which has only a single generator. So as we discussed previously, because we have only one generator that we need to tune, the entire problem—the tuning damage space—can be visualized on a 2D graph. The horizontal axis of this graph corresponds with a continuum of size for the generator: narrow on the left, wide on the right. The vertical axis of this graph shows how much damage is dealt to each interval given that generator tuning. The overall impression of the graph is that there's a generator tuning somewhere in the middle here which is best, and generator tunings off the left and right edges of the graph are of no interest to us, because the further you go in either direction the more damage gets piled on.

Firstly, note that each target-interval's damage makes a V-shaped graph. If you're previously familiar with the shape of the absolute value graph, this should come as no surprise. For each target-interval, we can find a generator tuning that divides into the target-interval's size exactly, with no remainder, and thus the target-interval is tuned pure there (the error is zero, and therefore it incurs zero damage). This is the tip of the V-shape, where it touches the horizontal axis. To the right of this point, the generator is slightly too wide, and so the interval is tuned wide; the error here is positive and thus it incurs some damage. And to the left of this point, the generator is slightly too narrow, and so the interval is tuned narrow; the error here is negative, and due to damage being the absolute value of the error, the damage is also positive.

For this example we have chosen to target eight intervals' damages to optimize for. These are [math]\displaystyle{ \frac{2}{1} }[/math], [math]\displaystyle{ \frac{3}{1} }[/math], [math]\displaystyle{ \frac{3}{2} }[/math], [math]\displaystyle{ \frac{4}{3} }[/math], [math]\displaystyle{ \frac{5}{2} }[/math], [math]\displaystyle{ \frac{5}{3} }[/math], [math]\displaystyle{ \frac{5}{4} }[/math], and [math]\displaystyle{ \frac{6}{5} }[/math]. (We call this set the "6-TILT" which is short for "truncated 6-integer-limit triangle".[note 8] TILTs, our preferred kind of target-interval set, will be explained later.)

So each of these eight intervals makes its own V-shaped graph. The V-shapes do not line up exactly, because the intervals are not all tuned pure at the same generator tuning. So when we put them all together, we get a unique landscape of tuning damage, something like a valley among mountains.

Note that part of what makes this landscape unique is that the steepness differs from one V-shape to the next. This has to do with how many generators it takes to approximate each target-interval. If one target-interval is approximated by 2 steps of the generator and another target-interval is approximated by 6 steps of the generator, then the latter target-interval will be affected by damage to this generator 3 times as much as the former target-interval, and so its damage graph will be 3 times as steep. (If we were using a simplicity- or complexity- weight damage, the complexity of the interval would affect the slope, too.)
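Both the position of each V's tip and its steepness follow from how many generator steps the interval maps to. The sketch below assumes, for illustration only, that the rank-1 temperament is 12-ET with the mapping (12, 19, 28), and uses unweighted damage; the names are our own.

```python
import math

PRIMES = (2, 3, 5)
MAPPING_12ET = (12, 19, 28)  # assumption: steps of 12-ET that reach primes 2, 3, 5

# The eight target-intervals as prime-count vectors.
TARGETS = {
    "2/1": (1, 0, 0),
    "3/1": (0, 1, 0),
    "3/2": (-1, 1, 0),
    "4/3": (2, -1, 0),
    "5/2": (-1, 0, 1),
    "5/3": (0, -1, 1),
    "5/4": (-2, 0, 1),
    "6/5": (1, 1, -1),
}


def steps(vector):
    """Number of generator steps the temperament uses for this interval."""
    return sum(m * c for m, c in zip(MAPPING_12ET, vector))


def just_cents(vector):
    return sum(c * 1200 * math.log2(p) for c, p in zip(vector, PRIMES))


# For each target: slope of its V (damage per cent of generator change)
# and the generator size at the tip of the V (where the interval is pure).
summary = {}
for name, vector in TARGETS.items():
    n = steps(vector)
    summary[name] = (abs(n), just_cents(vector) / n)

for name, (slope, pure_generator) in summary.items():
    print(f"{name}: slope {slope}, pure when generator = {pure_generator:.3f} cents")
```

Under this assumption, 2/1 takes 12 steps and 5/4 takes 4, so the V for 2/1 is three times as steep as the V for 5/4.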

Also note that the minimax damage, miniaverage damage, and miniRMS damage are each represented by a single point on this graph, not a line. Each one is situated at the point of the minimum value along the graph of a different damage statistic—the max damage, average damage, and RMS damage, respectively.

We can see that the max damage sits just on top of this landscape, as if it were a layer of snow on the ground. It is a graph consisting of straight line segments and sharp corners, like the underlying V's themselves. The average damage also consists of straight line segments and sharp corners, but travels through the mountains. We may notice that the max damage line has a sharp corner wherever target-interval damage graphs cross up top above all others, while the average damage line has a sharp corner wherever target-interval damage graphs bounce off the bottom.

The RMS damage is special because it consists of a single long smooth curve, with no straight segments or corners to it at all. This should be unsurprising, though, if one is familiar with the parabolic shape of the graph [math]\displaystyle{ y = x^2 }[/math], and recognizes RMS as the sum of eight different offset copies of this graph (divided by a constant, and square rooted, but those parts don't matter so much). We also see that the RMS graph is found vertically in between the average and max graphs.
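That vertical ordering is not a coincidence of this example. Writing [math]\displaystyle{ d_1, \ldots, d_n }[/math] for the (non-negative) damages at any one generator tuning, with the symbols again being our own shorthand, it always holds that:

[math]\displaystyle{ \dfrac{d_1 + \cdots + d_n}{n} \leq \sqrt{\strut \dfrac{d_1^2 + \cdots + d_n^2}{n}} \leq \max(d_1, \ldots, d_n) }[/math]

The right-hand inequality holds because every [math]\displaystyle{ d_i^2 }[/math] is at most the square of the largest damage, so the average of the squares is too. The left-hand inequality holds because the average of the squares minus the square of the average is the variance of the damages, which is never negative. All three are equal only when every target-interval receives the same damage.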

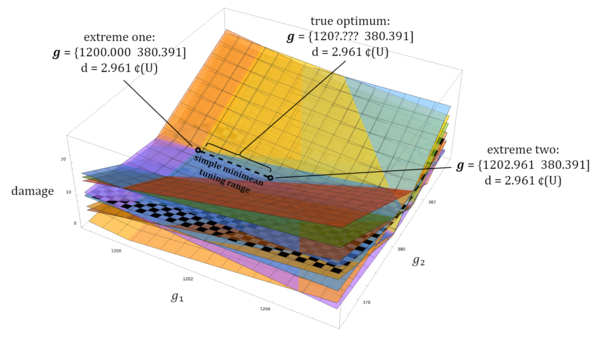

3D tuning damage graphs

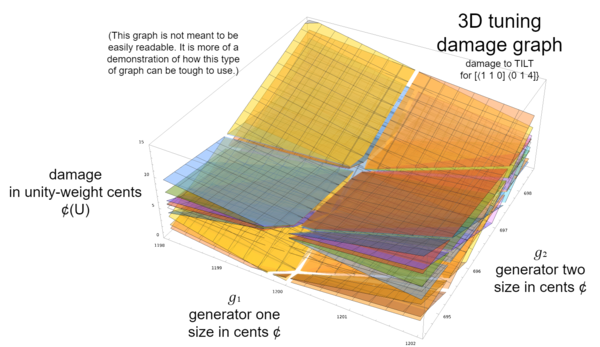

Before we move on, we should also at least glance at what tuning graphs look like for rank-2 temperaments. As we mentioned a bit earlier, when you have one dimension for each of two generators, then you need a third dimension to graph damage into. So a rank-2 temperament's tuning damage graph is 3D. Here's an example:

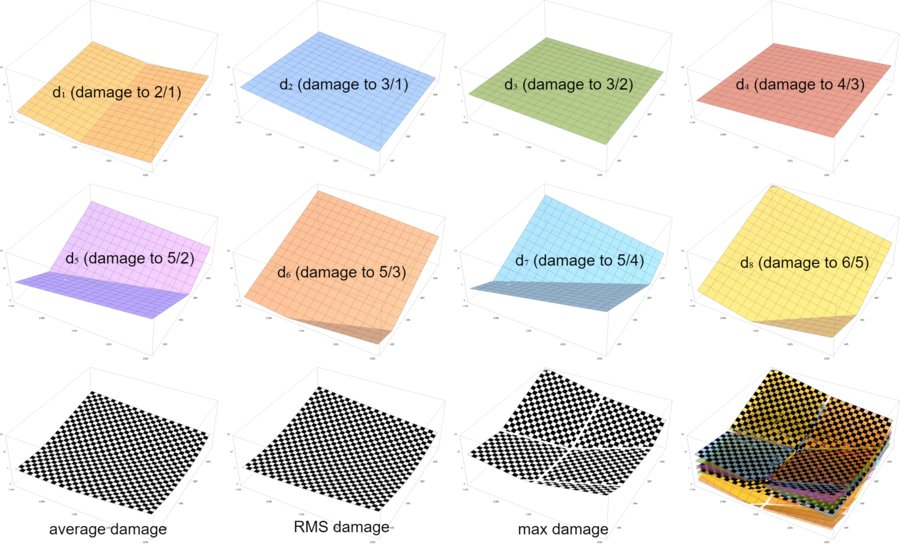

Even with the relatively small count of eight target-intervals, this graph appears as a tangled mess of bent-up-looking intersecting planes and curves. In order to see better what's going on, let's break it down into all of its individual graphs (and including some key average damage graphs as well):

Now we can actually see the shape of each of the target-intervals' graphs. Notice that it's still sort of a V, but a three-dimensional one. In general we could say that the shape of a target-interval's tuning damage graph is a "hyper-V". In 2D tuning damage space, that means just a V-shape, but in 3D tuning damage space like this, they look like V's that have been extruded infinitely in both directions along the axis which you would look at them straight on. In other words, it's just two planes coming down and toward each other to meet at a line angled across the ([math]\displaystyle{ x }[/math], [math]\displaystyle{ y }[/math])-floor (that line being the line along which the proportions of the two generators are constant, and tune the given target-interval purely). You could think of a hyper-V as a hyperplane with a single infinitely long crease in it, wherever it bounces off the pure-tuning floor, due to that taking of the absolute value involved in the damage calculation. In 4D and beyond it's difficult to visualize directly, but you can try to extrapolate this idea.

But what about the max, average, and RMS graphs? In 2D, they looked like V's too, just with rounded-off or crinkled-up tips. Do they look like hyper-V's in 3D too, just with rounded-off or crinkled-up tips? Well, no, actually! They do keep their rounded-off or crinkled-up tip aspect, but when they take to higher dimensions, they do so in the form not of a hyper-V, but in the form of a hyper-cone.

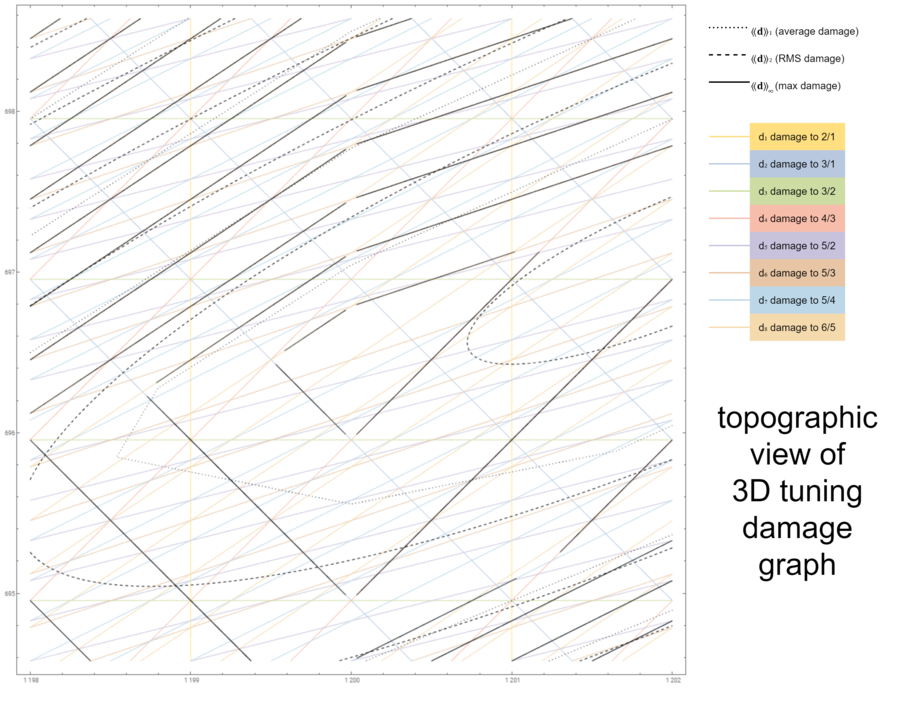

Topographic tuning damage graphs

If 3D graphs aren't your thing, there's another helpful way to visualize tuning damage for rank-2 temperaments: with a topographic graph. These are basically top-down views of the same information, with lines tracing along places where the damage is the same. If you've ever looked at a geographic map which showed elevation information with contours, then you'll immediately understand how these graphs work.

Here's an example, showing the same information as the chart in the previous section. Note that with this view, it's easier to see that the minima for the average and RMS damage may not even be in this sector! Whereas we could barely tell that when we were looking at the perspective view; we could only really discern the max damage minimum (the minimax) since it's on top, so much easier to see.

Power means

Each of these damage statistics we've been looking at—the average, the max, and the RMS—can be understood as a power mean, or [math]\displaystyle{ p }[/math]-mean. Let's take a look at these in more detail now.

Steps

All of these power means can be defined with a single unifying formula, which situates them along a continuum of possibilities, according to that power [math]\displaystyle{ p }[/math].

Here are the steps of a [math]\displaystyle{ p }[/math]-mean:

- We begin by raising each item to the [math]\displaystyle{ p }[/math]th power.

- We then sum up the items.

- Next, we divide the sum by [math]\displaystyle{ k }[/math], the count of items.

- We finish up the [math]\displaystyle{ p }[/math]-mean by taking the matching root at the end, that is, the root matching the power that each item was individually raised to in the first step; so that's the [math]\displaystyle{ p }[/math]th root. If, for example, the items were squared at the start—that is, we took their 2nd power—then at the end we take the square root—the 2nd root.

Formula

So here is the overall formula:

[math]\displaystyle{

\llangle\textbf{d}\rrangle_p = \sqrt[p]{\strut \dfrac{\sum\limits_{n=1}^k d_n^p}{k}}

}[/math]

We can expand this out like so:

[math]\displaystyle{

\llangle\textbf{d}\rrangle_p = \sqrt[p]{\strut \dfrac{d_1^p + d_2^p + ... + d_k^p}{k}}

}[/math]

If the formula looks intimidating, the straightforward translation of it is that for some target-interval damage list [math]\displaystyle{ \textbf{d} }[/math], which has a total of [math]\displaystyle{ k }[/math] items (one for each target-interval), raise each item to the [math]\displaystyle{ p }[/math]th power and then sum them up. Then divide by [math]\displaystyle{ k }[/math], and take the [math]\displaystyle{ p }[/math]th root. What we have here is nothing but the mathematical form of the steps given in the previous section.
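As a cross-check on the formula, here is a minimal Python sketch of the four steps for any finite power. The function name `p_mean` and the damage list are made up for illustration.

```python
import math

def p_mean(damages, p):
    """Power mean of a list of non-negative damages, for a finite power p."""
    k = len(damages)                      # the count of items
    powered = [d ** p for d in damages]   # step 1: raise each item to the p-th power
    total = sum(powered)                  # step 2: sum the items
    mean_of_powers = total / k            # step 3: divide by the count
    return mean_of_powers ** (1 / p)      # step 4: take the matching p-th root

damages = [2.0, 0.0, 4.0, 6.0]  # made-up damage list, in cents

average = p_mean(damages, 1)
rms = p_mean(damages, 2)
print(average, rms)
```

With [math]\displaystyle{ p = 1 }[/math] the first and last steps do nothing and the result is the ordinary average; with [math]\displaystyle{ p = 2 }[/math] the result is the RMS, which here lands between the average and the largest item.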

The double angle bracket notation for the [math]\displaystyle{ p }[/math]-mean shown above, [math]\displaystyle{ \llangle\textbf{d}\rrangle_p }[/math], is not a conventional notation. This is a notation we (Dave and Douglas) came up with, as a variation on the double vertical-bar brackets used to represent the related mathematical operation called the power norm (which you can learn more about in our later article on all-interval tunings), and was inspired by the use of single angle brackets for the average or expected value in physics. You may see the notation [math]\displaystyle{ M_p(\textbf{d}) }[/math] used elsewhere, where the "M" stands for "mean". We do not recommend or use this notation as it looks too much like a mapping matrix [math]\displaystyle{ M }[/math].

So how exactly do the three power means we've looked at so far—average, RMS, and max—fit into this formula? What power makes the formula work for each one of them?

Average

Well, let's look at the average first. You may be previously familiar with the concept of an average from primary education, sometimes also called the "mean" and taught along with the concepts of the median and the mode. The power mean is a generalization of this standard concept of a mean (and for that reason it is also sometimes referred to as the "generalized mean"). It is a generalization in the sense that the standard mean is equivalent to one particular power of [math]\displaystyle{ p }[/math]: 1. The power-of-[math]\displaystyle{ 1 }[/math] and the root-of-[math]\displaystyle{ 1 }[/math] steps have no effect, so really all the [math]\displaystyle{ 1 }[/math]-mean does is sum the items and divide by their count, which is probably exactly how you were taught the standard mean back in school. So the power mean is a powerful (haha) concept that allows us to generalize this idea to other combinations of powers and roots besides boring old [math]\displaystyle{ 1 }[/math].

So to be clear, the power [math]\displaystyle{ p }[/math] for the average is [math]\displaystyle{ 1 }[/math]; the average is AKA the [math]\displaystyle{ 1 }[/math]-mean or "mean" for short (though we avoid referring to it as such in our articles, to avoid confusion with the generalized mean).

RMS

As for the RMS, that's just the [math]\displaystyle{ 2 }[/math]-mean.

Max

But what about the max? Can you guess what value of [math]\displaystyle{ p }[/math], that is, which power would cause a power mean to somehow pluck the maximum value from a list? If you haven't come across this neck of the mathematical woods before, this may come across as a bit of a trick question. That's because the answer is: infinity! The max function is equivalent to the [math]\displaystyle{ ∞ }[/math]-mean: the infinitieth root of the sum of infinitieth powers.

It turns out that when we take the [math]\displaystyle{ ∞ }[/math]th power of each damage, add them up, divide by the count, and then take the [math]\displaystyle{ ∞ }[/math]th root, the mathemagical result is that we get whichever damage had been the maximum. One way to think about this effect is that when all the damages are propelled into infinity, whichever one had been the biggest to begin with dominates all the others, so that when we (divide by the count and then) take the infinitieth root to get back to the size we started with, it's all that remains.[note 9] It's fascinating stuff. So while the average human would probably not naturally think of the problem "find the maximum" in this way, this is apparently just one more mathematically feasible way to think about it. And we'll come to see later—when learning about non-unique tunings—why this interpretation of the max is important to tuning.[note 10]
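The way the largest item comes to dominate can be watched numerically. This is a small Python sketch with a made-up damage list; the function name `p_mean_stable` is ours. It divides every item by the largest one before raising to the power, an implementation detail we have added so that very large powers do not overflow floating-point arithmetic. The result is mathematically the same [math]\displaystyle{ p }[/math]-mean.

```python
def p_mean_stable(damages, p):
    """p-mean computed by factoring out the largest damage first, so that
    large powers do not overflow floating-point arithmetic."""
    biggest = max(damages)
    if biggest == 0:
        return 0.0
    scaled = [(d / biggest) ** p for d in damages]  # every item now lies in [0, 1]
    return biggest * (sum(scaled) / len(damages)) ** (1 / p)

damages = [1.0, 3.0, 4.0, 6.0]  # made-up damage list, in cents

powers = [1, 2, 8, 64, 1024]
means = [p_mean_stable(damages, p) for p in powers]
for p, m in zip(powers, means):
    print(p, round(m, 3))
print("max", max(damages))
```

As the power grows, the printed means climb steadily toward the maximum of the list without ever exceeding it.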

The average reader[note 11] of this article will probably feel surprised to learn that the max is a type of mean, since typically these statistics are taught as mutually exclusive fundamentals with very different goals. But so it is.

Examples

For examples of mean damages in existing RTT literature, we can point to Paul's "max damage" in his tables in A Middle Path, or Graham's "adjusted error" and "TE error" in his temperament finder's results.

Minimized power means

The category of procedures that return the minimization of a given [math]\displaystyle{ p }[/math]-mean may be called "minimized power means", or "mini-[math]\displaystyle{ p }[/math]-means" for short. The minimax, miniRMS, and miniaverage are all examples of this: the mini-[math]\displaystyle{ ∞ }[/math]-mean, mini-[math]\displaystyle{ 2 }[/math]-mean, and mini-[math]\displaystyle{ 1 }[/math]-mean, respectively.

We can think of the term "minimax" as a cosmetic simplification of "mini-[math]\displaystyle{ ∞ }[/math]-mean", where since "max" is interchangeable with "[math]\displaystyle{ ∞ }[/math]-mean", we can swap "max" in for that, and collapse the remaining hyphen. Similarly, we can swap "RMS" in for "[math]\displaystyle{ 2 }[/math]-mean", and collapse the remaining hyphen, finding "miniRMS", and we can swap "average" in for "[math]\displaystyle{ 1 }[/math]-mean", and collapse the remaining hyphen, finding "miniaverage".

Function vs procedure

A [math]\displaystyle{ p }[/math]-mean and a mini-[math]\displaystyle{ p }[/math]-mean are very different from each other.

- A [math]\displaystyle{ p }[/math]-mean is a simple mathematical function. Its input is a list of damages, and its output is a single mean damage. It can be calculated in a tiny fraction of a second.

- A mini-[math]\displaystyle{ p }[/math]-mean, on the other hand, is a much more complicated kind of thing called an optimization procedure. Configured with the information about the current temperament and tuning scheme, and using various fancy algorithms developed over the centuries by specialized mathematicians, it intelligently tests anywhere from a few candidate tunings to hundreds of thousands of candidate tunings, finding the overall damage for each of them, where “overall” is defined by the given [math]\displaystyle{ p }[/math]-mean, searching for the candidate tuning which does the least overall damage. A mini-[math]\displaystyle{ p }[/math]-mean may take many seconds or sometimes even minutes to compute.

So when we refer to a "mini-[math]\displaystyle{ p }[/math]-mean" on its own, we're referring to this sort of optimization procedure. And when we say the "mini-[math]\displaystyle{ p }[/math]-mean tuning", we're referring to its output—a list of generator tunings in cents per generator (a generator tuning map). Finally, when we say the "mini-[math]\displaystyle{ p }[/math]-mean damage", we're referring to the minimized mean damage found at that optimum tuning.
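To make the function-versus-procedure distinction concrete, here is a rough Python sketch. It is not how the RTT library or any real optimizer works: it uses a deliberately naive grid search as a stand-in for the sophisticated algorithms mentioned above. The temperament (5-limit 12-ET with mapping ⟨12 19 28]), the search window of 99 ¢ to 101 ¢, the step size, and all function names are our own assumptions for illustration.

```python
import math

# Assumed setup for illustration: 5-limit 12-ET, mapping <12 19 28], with the
# eight target-intervals 2/1, 3/1, 3/2, 4/3, 5/2, 5/3, 5/4, 6/5.
# Each entry: (generator steps the interval maps to, just ratio).
TARGETS = [(12, 2 / 1), (19, 3 / 1), (7, 3 / 2), (5, 4 / 3),
           (16, 5 / 2), (9, 5 / 3), (4, 5 / 4), (3, 6 / 5)]

def damages(generator):
    """Unweighted damage to each target-interval at this generator tuning."""
    return [abs(steps * generator - 1200 * math.log2(ratio))
            for steps, ratio in TARGETS]

def p_mean(items, p):
    """The function: one list of damages in, one mean damage out."""
    if math.isinf(p):
        return max(items)
    return (sum(d ** p for d in items) / len(items)) ** (1 / p)

def mini_p_mean(p, low=99.0, high=101.0, step=0.001):
    """The procedure: try many candidate tunings, keep the least-damaging one."""
    count = round((high - low) / step)
    candidates = [low + i * step for i in range(count + 1)]
    best = min(candidates, key=lambda g: p_mean(damages(g), p))
    return best, p_mean(damages(best), p)

for p in (1, 2, math.inf):
    tuning, damage = mini_p_mean(p)
    print(f"p = {p}: generator {tuning:.3f} cents, mean damage {damage:.3f}")
```

Here `p_mean` is evaluated once per candidate, while `mini_p_mean` calls it thousands of times. The first value returned by `mini_p_mean` plays the role of the mini-[math]\displaystyle{ p }[/math]-mean tuning and the second that of the mini-[math]\displaystyle{ p }[/math]-mean damage.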

Optimization power

Because optimum tunings are found as power minima of damage, we can refer to the chosen [math]\displaystyle{ p }[/math] for a tuning scheme as its optimization power. So the optimization power [math]\displaystyle{ p }[/math] of a minimax tuning scheme is [math]\displaystyle{ ∞ }[/math], that of a miniaverage tuning scheme is [math]\displaystyle{ 1 }[/math], and that of a miniRMS tuning scheme is [math]\displaystyle{ 2 }[/math].

Other powers

So far we've only given special attention to powers [math]\displaystyle{ 1 }[/math], [math]\displaystyle{ 2 }[/math], and [math]\displaystyle{ ∞ }[/math]. Other powers of [math]\displaystyle{ p }[/math]-means are certainly possible, but much less common. Nothing is stopping you from using [math]\displaystyle{ p = 3 }[/math] or [math]\displaystyle{ p = 1.5 }[/math] if you find that one of these powers regularly returns the tuning that is most pleasant to your ears.

Computing tunings by optimization power

The short and practical answer to the question: "How should I compute a particular optimization of damages?" is: "Just give it to a computer." Again, the RTT Library in Wolfram Language is ready to go; its README explains how to request the exact optimization power you want to use (along with your preferred intervals whose damages to target, and how to weight damage if at all, though we haven't discussed these choices in detail yet). Even for a specialized RTT tuning library, the general solution remains essentially to hand a mathematical expression off to Wolfram's specialized mathematical functionality and ask it politely to give you back the values that minimize this expression's value, using whichever algorithms it automatically determines are best to solve that sort of problem (based on the centuries of mathematical innovations that have been baked into this programming language designed specifically for math problems).

That said, there are special computational tricks for the three special optimization powers we've looked at so far—[math]\displaystyle{ 1 }[/math], [math]\displaystyle{ 2 }[/math], and [math]\displaystyle{ ∞ }[/math]—and familiarizing yourself with them may deepen your understanding of the results they return. To be clear, powers like [math]\displaystyle{ 1.5 }[/math] and [math]\displaystyle{ 3 }[/math] have no special computation tricks; those you really do just have to hand off to a computer algorithm to figure out for you. The trick for [math]\displaystyle{ p=2 }[/math] will be given some treatment later on in this series, in our article about tuning computation; a deeper dive on tricks for all three powers may be found in the article Generator embedding optimization.

Non-unique tunings

Before concluding the section on optimization powers, we should make sure to warn you about a situation that comes up only with optimization powers [math]\displaystyle{ 1 }[/math] and [math]\displaystyle{ ∞ }[/math]. Mercifully, you don't have to worry about this for any other power [math]\displaystyle{ 1 \lt p \lt ∞ }[/math]. The situation is this: you've asked for a miniaverage ([math]\displaystyle{ p = 1 }[/math]) or minimax ([math]\displaystyle{ p = ∞ }[/math]) tuning, but rather than being taken straight to a single correct answer, you find that there at first glance seems to be more than one correct answer. In other words, more than one tuning can be found that gives the same miniaveraged or minimaxed amount of damage across your target-intervals. In fact, there is an entire continuous range of tunings which satisfy the condition of miniaveraging or minimaxing damage.

Not to worry, though—there is always a good way to define a single tuning from within that range as the true optimum tuning. We shouldn't say that there is more than one minimax tuning or more than one miniaverage tuning in these cases, only that there's nonunique minimax damage or nonunique miniaverage damage. You'll see why soon enough.

Example

For example, suppose we ask for the tuning that gives the miniaverage of damage (without weighting, i.e. absolute value of error) to the truncated integer limit triangle under magic temperament. Tuning our generators to Tuning A, 1200.000 ¢ and 380.391 ¢, would cause an average of 2.961 ¢(U) damage across our eight target-intervals, like this:

[math]\displaystyle{ \begin{array} {lccccccccccccccc} & & & \frac{2}{1} & \frac{3}{1} & \frac{3}{2} & \frac{4}{3} & \frac{5}{2} & \frac{5}{3} & \frac{5}{4} & \frac{6}{5} \\ \textbf{d}_ᴀ & = & [ & 0.000 & 0.000 & 0.000 & 0.000 & 5.923 & 5.923 & 5.923 & 5.923 & ] \\ \end{array} }[/math]

[math]\displaystyle{ \begin{align} \llangle\textbf{d}_ᴀ\rrangle_1 &= \sqrt[\Large{1}]{\rule[15pt]{0pt}{0pt} \dfrac{0.000^1 + 0.000^1 + 0.000^1 + 0.000^1 + 5.923^1 + 5.923^1 + 5.923^1 + 5.923^1}{8}} \\ &= \sqrt[\Large{1}]{\rule[15pt]{0pt}{0pt} \dfrac{0.000 + 0.000 + 0.000 + 0.000 + 5.923 + 5.923 + 5.923 + 5.923}{8}} \\ &= \sqrt[\Large{1}]{\rule[15pt]{0pt}{0pt} \dfrac{23.692}{8}} \\ &= \sqrt[1]{\strut 2.961} \\ &= 2.961 \\ \end{align} }[/math]

But we have another option that ties this 2.961 ¢(U) value! If we tune our generators to Tuning B, 1202.961 ¢ and 380.391 ¢ (same major third generator, but an impure octave this time), we cause that same total damage amount, just in a completely different way:

[math]\displaystyle{ \begin{array} {lccccccccccccccc} & & & \frac{2}{1} & \frac{3}{1} & \frac{3}{2} & \frac{4}{3} & \frac{5}{2} & \frac{5}{3} & \frac{5}{4} & \frac{6}{5} \\ \textbf{d}_ʙ & = & [ & 2.961 & 0.000 & 2.961 & 5.923 & 2.961 & 0.000 & 5.923 & 2.961 & ] \\ \end{array} }[/math]

[math]\displaystyle{ \begin{align} \llangle\textbf{d}_ʙ\rrangle_1 &= \sqrt[\Large{1}]{\rule[15pt]{0pt}{0pt} \dfrac{2.961^1 + 0.000^1 + 2.961^1 + 5.923^1 + 2.961^1 + 0.000^1 + 5.923^1 + 2.961^1}{8}} \\ &= \sqrt[\Large{1}]{\rule[15pt]{0pt}{0pt} \dfrac{2.961 + 0.000 + 2.961 + 5.923 + 2.961 + 0.000 + 5.923 + 2.961}{8}} \\ &= \sqrt[\Large{1}]{\rule[15pt]{0pt}{0pt} \dfrac{23.692}{8}} \\ &= \sqrt[1]{\strut 2.961} \\ &= 2.961 \\ \end{align} }[/math]

And just to prove the point about this damage being tied along a range of possibilities, let's take a tuning halfway in between these two, Tuning C, with an octave of 1201.481 ¢:

[math]\displaystyle{ \begin{array} {lccccccccccccccc} & & & \frac{2}{1} & \frac{3}{1} & \frac{3}{2} & \frac{4}{3} & \frac{5}{2} & \frac{5}{3} & \frac{5}{4} & \frac{6}{5} \\ \textbf{d}_ᴄ & = & [ & 1.481 & 0.000 & 1.481 & 2.961 & 4.442 & 2.961 & 5.923 & 4.442 & ] \\ \end{array} }[/math]

[math]\displaystyle{ \begin{align} \llangle\textbf{d}_ᴄ\rrangle_1 &= \sqrt[\Large{1}]{\rule[15pt]{0pt}{0pt} \dfrac{1.481^1 + 0.000^1 + 1.481^1 + 2.961^1 + 4.442^1 + 2.961^1 + 5.923^1 + 4.442^1}{8}} \\ &= \sqrt[\Large{1}]{\rule[15pt]{0pt}{0pt} \dfrac{1.481 + 0.000 + 1.481 + 2.961 + 4.442 + 2.961 + 5.923 + 4.442}{8}} \\ &= \sqrt[\Large{1}]{\rule[15pt]{0pt}{0pt} \dfrac{23.692}{8}} \\ &= \sqrt[1]{\strut 2.961} \\ &= 2.961 \\ \end{align} }[/math]

(Don't worry too much about rounding errors throughout these examples.)
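The three tied averages can be reproduced with a short Python sketch. It relies on an assumption not stated above: that magic temperament's mapping, with an octave and a major third as generators, sends prime 2 to (1, 0), prime 3 to (0, 5), and prime 5 to (2, 1). The names `MAPPING`, `TARGETS`, `damages`, and `average` are ours.

```python
import math

# Assumed mapping for magic temperament (not stated above): generators are an
# octave and a major third, with prime 2 -> (1, 0), prime 3 -> (0, 5),
# prime 5 -> (2, 1).
MAPPING = {2: (1, 0), 3: (0, 5), 5: (2, 1)}

# Target-intervals as prime-exponent dictionaries.
TARGETS = {
    "2/1": {2: 1}, "3/1": {3: 1}, "3/2": {3: 1, 2: -1}, "4/3": {2: 2, 3: -1},
    "5/2": {5: 1, 2: -1}, "5/3": {5: 1, 3: -1}, "5/4": {5: 1, 2: -2},
    "6/5": {2: 1, 3: 1, 5: -1},
}

def damages(octave, third):
    """Unweighted damage (absolute error in cents) to each target-interval."""
    result = []
    for exponents in TARGETS.values():
        just = sum(e * 1200 * math.log2(prime) for prime, e in exponents.items())
        tempered = sum(e * (MAPPING[prime][0] * octave + MAPPING[prime][1] * third)
                       for prime, e in exponents.items())
        result.append(abs(tempered - just))
    return result

def average(items):
    return sum(items) / len(items)

tunings = {"A": 1200.000, "B": 1202.961, "C": 1201.481}
averages = {name: average(damages(octave, 380.391))
            for name, octave in tunings.items()}
for name, value in averages.items():
    print(f"Tuning {name}: average damage {value:.3f}")
```

Trying an octave outside the range between Tuning A and Tuning B, such as 1204 ¢, gives a larger average, which is consistent with the tie holding only along that range.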

We can visualize this range of tied miniaverage damage tunings on a tuning damage graph:

Damages are plotted on the [math]\displaystyle{ z }[/math]-axis, i.e. coming up from the floor, so the range where tunings are tied for the same miniaverage damage is the straight line segment that is exactly parallel with the floor.

True optimum

So... what to do now? How should we decide which tuning from this range to use? Is there a best one, or is there even a reasonable objective answer to that question?

Fortunately, there is a reasonable objective best tuning here, or as we might call it, a true optimum. To understand how to choose it, though, we first need to understand a bit more about why we wind up with ranges of tunings that are all tied for the same damage.

Power continuum

To illustrate the case of the miniaverage, we can use a toy example. Suppose we have the rank-1 temperament in the 3-limit ⟨12 19], our target-intervals are just the two primes [math]\displaystyle{ \frac{2}{1} }[/math] and [math]\displaystyle{ \frac{3}{1} }[/math], and we do not weight absolute error to obtain damage. Our tuning damage graph looks like this:

We have also plotted several power means here: [math]\displaystyle{ 1 }[/math], [math]\displaystyle{ 1\frac{1}{4} }[/math], [math]\displaystyle{ 1\frac{1}{2} }[/math], [math]\displaystyle{ 2 }[/math], [math]\displaystyle{ 4 }[/math], [math]\displaystyle{ 8 }[/math], and [math]\displaystyle{ ∞ }[/math]. Here we see for the first time a vivid visualization of what we were referring to earlier when we spoke of a continuum of powers. And we can also see why it was important to understand how the maximum of a list of values can be interpreted as the [math]\displaystyle{ ∞ }[/math]-mean, i.e. the [math]\displaystyle{ ∞ }[/math]th root of summed values raised to the [math]\displaystyle{ ∞ }[/math]th power. We can see that as the power increases from [math]\displaystyle{ 1 }[/math] to [math]\displaystyle{ 2 }[/math], the blocky shape smooths out into a curve, and then as the power increases from [math]\displaystyle{ 2 }[/math] to [math]\displaystyle{ ∞ }[/math] it begins reverting back from a smooth shape into a blocky shape again, but this time hugged right onto the individual target-intervals' graphs.

The first key thing to notice here is that every mean except the [math]\displaystyle{ 1 }[/math]-mean and [math]\displaystyle{ ∞ }[/math]-mean has a curved graph; it is only these two extremes of [math]\displaystyle{ 1 }[/math] and [math]\displaystyle{ ∞ }[/math] which sharpen up into corners. Previously we had said that the [math]\displaystyle{ 2 }[/math]-mean was the special one for being curved, because at that time we were only considering powers [math]\displaystyle{ 1 }[/math], [math]\displaystyle{ 2 }[/math], and [math]\displaystyle{ ∞ }[/math]; when all possible powers are considered, though, it is actually [math]\displaystyle{ 1 }[/math] and [math]\displaystyle{ ∞ }[/math] that are the exceptions with respect to this characteristic of means.

The second key thing to notice is that due to the [math]\displaystyle{ 1 }[/math]-mean's straight-lined characteristic, it is capable of achieving the situation where one of its straight line segments is exactly parallel with the bottom of the graph. We can see that all along this segment, the damage to prime 2 is decreasing while the damage to prime 3 is increasing, or vice versa, depending on which way you're going along it; in either case, you end up with a perfect tradeoff so that the total damage remains the same in that sector. This is the bare minimum illustration of a tied miniaverage damage tuning range.

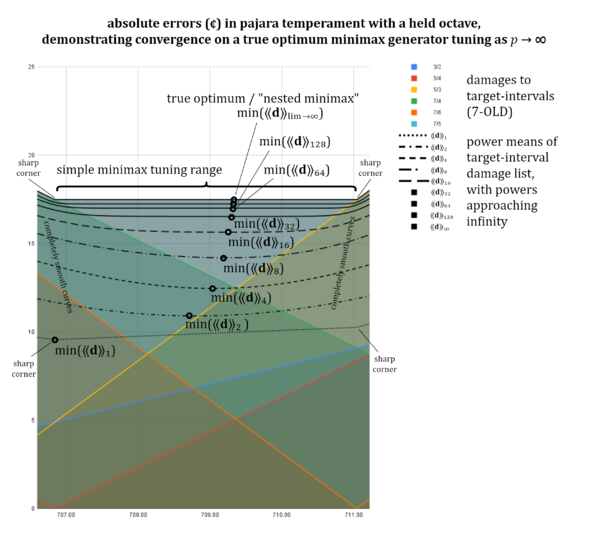

A tied minimax range is demonstrated in the pajara graph below.

How to choose the true optimum

We're now ready to explain how miniaverage and minimax tuning schemes can find a true optimum tuning, even if their miniaverage or minimax result is not unique. The key thing to realize is that any of these mean graphs that make a smooth curve—whether it's the one with power [math]\displaystyle{ 2 }[/math], [math]\displaystyle{ 4 }[/math], [math]\displaystyle{ 8 }[/math], or [math]\displaystyle{ 1\frac{1}{2} }[/math] or [math]\displaystyle{ 1\frac{1}{4} }[/math]—they will have a definite singular minimum wherever the bottom of their curve is. We can choose a smaller and smaller power—[math]\displaystyle{ 1\frac{1}{8} }[/math], [math]\displaystyle{ 1\frac{1}{16} }[/math], [math]\displaystyle{ 1\frac{1}{32} }[/math], [math]\displaystyle{ 1\frac{1}{64} }[/math] on and on—and as long as we don't actually hit [math]\displaystyle{ 1 }[/math], the graph will still have a single minimum. It will get closer and closer to the shape of the 1-mean graph, but if you zoom in very closely, you will find that it still doesn't quite have sharp corners yet, and critically, that it doesn't quite have a flat bottom yet. (The same idea goes for larger and larger powers approaching [math]\displaystyle{ ∞ }[/math], and minimax.)

As the power decreases or increases like this, the value of that single minimum changes. It changes a lot, relatively speaking, in the beginning. That is, the change from [math]\displaystyle{ p=2 }[/math] to [math]\displaystyle{ p=4 }[/math] is significant, but then the change from [math]\displaystyle{ p=4 }[/math] to [math]\displaystyle{ p=8 }[/math] is less so. And the change from [math]\displaystyle{ 8 }[/math] to [math]\displaystyle{ 16 }[/math] is even less so. By the time you're changing from [math]\displaystyle{ 64 }[/math] to [math]\displaystyle{ 128 }[/math], the value is barely changing at all. At a certain point, anyway, the amount of change is beyond musical relevance for any human listener.

Mathematically we describe this situation as a limit: we have some function whose output value cannot be directly evaluated for some input value, but we can check the output values as we get closer and closer to the true input value we care about (infinitely/infinitesimally close, in fact), and that will give us a "good enough" type of answer. So in this case, we'd like to know the minimum value of the mean graph when [math]\displaystyle{ p=∞ }[/math], but that's undefined, so instead we check it for something like [math]\displaystyle{ p=128 }[/math], some very large value. What exact value we use will depend on the particulars of the software doing the job, its strengths and limitations. But the general principle is to methodically check closer and closer powers to the limit power, and stop whenever the output value is no longer changing appreciably, or when you run into the limitations of your software's numeric precision.
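The limiting process can be sketched in Python for the miniaverage end of the continuum. Everything in this example is hypothetical: the three toy damage functions are chosen by us only so that their [math]\displaystyle{ 1 }[/math]-mean is exactly flat for [math]\displaystyle{ x }[/math] between 0 and 3, and the golden-section search is just one simple way to locate the bottom of a single-valleyed curve, not necessarily what any tuning software uses.

```python
def p_mean(items, p):
    return (sum(d ** p for d in items) / len(items)) ** (1 / p)

def damages(x):
    """Hypothetical toy damages chosen so that the 1-mean is flat for 0 <= x <= 3."""
    return [abs(2 * x), abs(x - 3), abs(x - 5)]

def minimize(f, low, high, tolerance=1e-7):
    """Golden-section search for the minimum of a function with a single valley."""
    ratio = (5 ** 0.5 - 1) / 2
    a, b = low, high
    while b - a > tolerance:
        c = b - ratio * (b - a)
        d = a + ratio * (b - a)
        if f(c) < f(d):
            b = d   # the minimum lies to the left of d
        else:
            a = c   # the minimum lies to the right of c
    return (a + b) / 2

minimizers = {}
for p in [2, 1.5, 1.25, 1.125, 1.0625, 1.03125, 1.015625]:
    minimizers[p] = minimize(lambda x: p_mean(damages(x), p), -1.0, 6.0)
    print(f"p = {p}: minimum at x = {minimizers[p]:.4f}")
```

Each halving of the distance to [math]\displaystyle{ p = 1 }[/math] moves the minimum less than the previous one did, and the values settle toward roughly 1.27: a single point inside the tied range, which is the kind of answer the true optimum provides.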

So we could say that the complete flattening of the miniaverage and minimax graphs is generally beneficial, in that the methods for finding exact solutions for optimum tunings rely on it, but on occasion it does cause the loss of an important bit of information—the true optimum—which can be retrieved by using a power very close to either [math]\displaystyle{ 1 }[/math] or [math]\displaystyle{ ∞ }[/math].

(Note: the example given in the diagram here is a bit silly. The easiest way to break the tie in this case would be to remove the offending target-interval from the set, since with constant damage, it will not aid in preferring one tuning to another. However, more natural examples of tied tunings—that cannot be resolved so easily—require 3D tuning damage space, and we sought to demonstrate the basic principle of true optimum tunings as simply as possible, so we stuck with 2D here.)

A final note on the true optimum tuning for a minimax or miniaverage tuning scheme

The true optimum tuning for a minimax tuning scheme, in addition to thinking of it as the limit of the [math]\displaystyle{ p }[/math]-mean as [math]\displaystyle{ p }[/math] approaches infinity, can also be thought of as the tuning which not only achieves the minimax damage, but furthermore, if the target-intervals tied for being dealt this maximum damage were removed from the equation, the damage to all the remaining intervals would still be minimaxed within this outer minimax. And if a tie were found at this tier as well, the procedure would continue to further nested minimaxes.

More information on this situation can be found where the computation approach is explained for tie-breaking with this type of tuning scheme, here: Generator embedding optimization#Coinciding-damage method.

Damage

In the previous section, we looked at how the choice of optimization power—in other words, how we define the "least overall" part of "least overall damage"—affects tuning. In this section, then, we will look at how the way we define "damage" affects tuning.

Definitions of damage differ by how the absolute error is weighted. As mentioned earlier, the simplest definition of damage does not use weights, in which case it is equivalent to the absolute value of the error.

Complexity

Back in the initial definitions section, we also mentioned how theorists have proposed a wide variety of ways to weight damage, when it is weighted at all. These ways may vary quite a bit between each other, but there is one thing that every damage weight described on the Xenharmonic wiki (thus far) has in common: it is defined in terms of a complexity function.[note 12]

A complexity function, plainly put, is used to objectively rank musical interval ratios according to how complex they are. A larger complexity value means a ratio is more complex. For example, [math]\displaystyle{ \frac{32}{21} }[/math] is clearly a more complex ratio than [math]\displaystyle{ \frac{3}{2} }[/math], so if [math]\displaystyle{ \frac{32}{21} }[/math]'s complexity was 10 then maybe [math]\displaystyle{ \frac{3}{2} }[/math]'s complexity would be something like 5.

For a more interesting example, [math]\displaystyle{ \frac{10}{9} }[/math] may seem like it should be a more complex ratio than [math]\displaystyle{ \frac{9}{8} }[/math], judging by its slightly bigger numbers and higher prime limit. And indeed it is ranked that way by the complexity function that we consider to be a good default, and which we'll be focusing on in this article. But some complexity functions do not agree with this, ranking [math]\displaystyle{ \frac{9}{8} }[/math] as more complex than [math]\displaystyle{ \frac{10}{9} }[/math], and yet theoreticians still commonly use these complexities.[note 13]

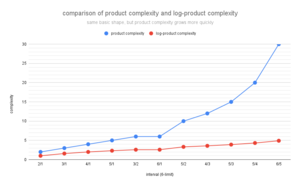

Product complexity and log-product complexity

Probably the most obvious complexity function for JI interval ratios is product complexity, which is the product of the numerator and denominator. So [math]\displaystyle{ \frac{3}{2} }[/math]'s product complexity is [math]\displaystyle{ 3 × 2 = 6 }[/math], and [math]\displaystyle{ \frac{32}{21} }[/math]'s product complexity is [math]\displaystyle{ 32 × 21 = 672 }[/math]. For reasons that don't need to be delved into until a later article, we usually take the base 2 logarithm of this value, though, and call it the log-product complexity.

As we saw with power means earlier, taking the logarithm doesn't change how complexities compare relative to each other. If an interval was more complex than another before taking the log, it will still be more complex than it after taking the log. All this taking of the logarithm does is reduce the differences between values as they get larger, causing the larger values to bunch up more closely together.

Let's take a look at how this affects the two product complexity values we've already looked at. So [math]\displaystyle{ \frac{3}{2} }[/math]'s log-product complexity is [math]\displaystyle{ \log_2{6} \approx 2.585 }[/math] and [math]\displaystyle{ \frac{32}{21} }[/math]'s log-product complexity is [math]\displaystyle{ \log_2{672} \approx 9.392 }[/math]. We can see that not only are both the values smaller than they were before, the latter value is much smaller than it used to be.
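These two complexity functions are simple enough to write out directly. Here is a minimal Python sketch using the standard `fractions` module; the function names are ours.

```python
import math
from fractions import Fraction

def product_complexity(ratio):
    """Numerator times denominator of a ratio in lowest terms."""
    return ratio.numerator * ratio.denominator

def log_product_complexity(ratio):
    """Base-2 logarithm of the product complexity."""
    return math.log2(product_complexity(ratio))

for ratio in [Fraction(3, 2), Fraction(32, 21), Fraction(9, 8), Fraction(10, 9)]:
    print(ratio, product_complexity(ratio), round(log_product_complexity(ratio), 3))
```

Both functions rank [math]\displaystyle{ \frac{10}{9} }[/math] as more complex than [math]\displaystyle{ \frac{9}{8} }[/math], since the logarithm preserves the ordering.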

But enough about logarithms in complexity formulas for now. We'll wait until later to look into other such complexity formulas (in the alternative complexities article).

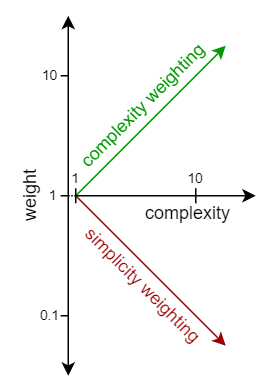

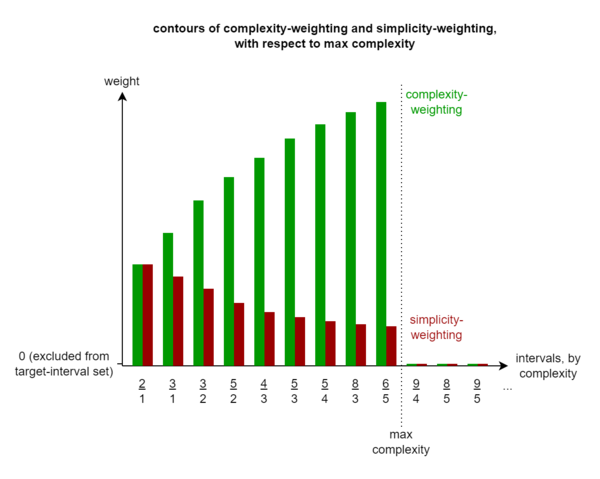

Weight slope

What's more important to cover right away is that—regardless of the actual choice of complexity formula—a major disagreement among tuning theorists is whether, when choosing to weight absolute errors by complexity, to weight them so that more attention is given to the more complex intervals, or so that more attention is given to the less complex intervals.

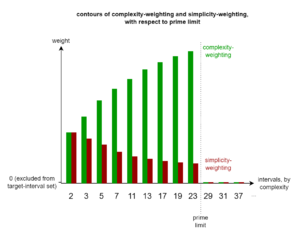

We call this trait of tuning schemes damage weight slope, because on a graph with interval complexity increasing from left to right along the [math]\displaystyle{ x }[/math]-axis and the weight (importance) of the error in each interval increasing from bottom to top along the [math]\displaystyle{ y }[/math]-axis, this trait determines how the graph slopes, either upwards or downwards as it goes to the right.

When it's the more complex intervals whose errors matter to us more, we can say the tuning scheme uses complexity-weight. When, on the other hand, it's the less complex intervals, or simpler intervals, whose errors matter to us more, we can say that the tuning scheme uses simplicity-weight.[note 14] To “weight” something here means to give it importance, as in "these are weighty matters".[note 15] So a complexity-weight scheme concerns itself more with getting the complex intervals right, while a simplicity-weight scheme concerns itself more with getting the simple intervals right.

And a unity-weight scheme defines damage as absolute error (unity-weighting has no effect; it is the same as not-weighting, so we can refer to its units as "unweighted cents"). This way, it doesn't matter whether an interval is simple or complex; its error counts the same amount regardless of this.
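The three weight slopes can be compared side by side in a small Python sketch. It makes two assumptions that the text above does not spell out: that the complexity function is log-product complexity, and that a simplicity weight is the reciprocal of the complexity. The 3 ¢ error and the function names are hypothetical.

```python
import math
from fractions import Fraction

def log_product_complexity(ratio):
    return math.log2(ratio.numerator * ratio.denominator)

def damage(ratio, error, slope):
    """Weight an absolute error (in cents) according to the damage weight slope.
    Assumption: simplicity is taken to be the reciprocal of complexity."""
    complexity = log_product_complexity(ratio)
    weight = {
        "unity": 1.0,
        "complexity": complexity,
        "simplicity": 1.0 / complexity,
    }[slope]
    return abs(error) * weight

# The same hypothetical 3-cent error applied to a simple and a complex interval.
for ratio in [Fraction(3, 2), Fraction(32, 21)]:
    row = {slope: round(damage(ratio, -3.0, slope), 3)
           for slope in ("unity", "complexity", "simplicity")}
    print(ratio, row)
```

Under unity-weight both intervals receive the same damage; under complexity-weight the error in [math]\displaystyle{ \frac{32}{21} }[/math] counts for more, and under simplicity-weight the error in [math]\displaystyle{ \frac{3}{2} }[/math] counts for more.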

Rationale for choosing your slope

Because these are diametrically opposed approaches, one might think that one must be correct and the other incorrect, but it's not actually that cut-and-dried. There are arguments for psychoacoustic plausibility either way, and some defenders of one way may tell you that the other way is straight up wrong. We (Dave and Douglas) are not here to arbitrate this theoretical disagreement; we're only here to demystify the problem and help you get the tools you need to explore it for yourself and make up your own mind.[note 16]

If we were asked which way we prefer, though, we'd suggest letting the two effects cancel each other out, and going with the thing that's easier to compute anyway: unity-weight damage.

That said, all but two of the named tuning schemes on the Xenharmonic wiki at the time of writing use simplicity-weight, and the other two use unity-weight. But just as we saw earlier with the predominance of minimax schemes, this predominance of simplicity-weight schemes is probably mostly due to the historical proliferation of named schemes which cleverly get around the need to specify a target-interval set, because being a simplicity-weight scheme is the other of the two necessary conditions to achieve that effect. And again, while these sorts of schemes have good value for consistent, reasonable, and easy-to-compute documentation of temperaments' tunings, they do not necessarily find the most practical or nicest-sounding tunings that you would want to actually use for your music. So we encourage readers to experiment and keep theorizing.

The rationale for choosing a simplicity-weight tuning scheme is perhaps more straightforward to explain than the rationale for choosing a complexity-weight tuning scheme. The idea here is that simple intervals are more important: they occur more often in music[note 17], and form the foundation of harmony that more complex chords and progressions build upon. They're more sensitive to increase in discordance with retuning. And once an interval is complex enough, it tends to sound like a mistuned version of a nearby simpler ratio, even when perfectly tuned. As Paul writes in Middle Path, "...more complex ratios are essentially insensitive to mistuning (as they are not local minima of discordance in the first place)." So, when some intervals have to be damaged, we set the maths up so that it's less okay to have errors in the simpler intervals than it is to have errors in the more complex ones. Recalling that the target-interval set is typically chosen to be a representative set of consonances (read: simple intervals), you could look at simplicity-weight tuning as a gradation of targeting; that is, you target the absolute simplest intervals like [math]\displaystyle{ \frac{3}{2} }[/math] strongly, then the almost-simplest intervals like [math]\displaystyle{ \frac{8}{5} }[/math] weakly, and past that, you just don't target at all.

The rationale for choosing a complexity-weight tuning scheme shares more in common with simplicity-weighting than you might think. Advocates of complexity-weight do not disagree that simple intervals are the most important and the most common, or that some intervals are so complex that their tuning is almost irrelevant. What is disputed is how to handle this situation. There's another couple of important facts to consider about more complex intervals:

- They are more sensitive to loss of identity by retuning, psychoacoustically: you can tune [math]\displaystyle{ \frac{2}{1} }[/math] off by maybe 20 cents and still recognize it as an octave[note 18], but if you tune [math]\displaystyle{ \frac{11}{8} }[/math] off by 20 cents the JI effect will be severely impacted; it will merely sound like a bad [math]\displaystyle{ \frac{4}{3} }[/math] or [math]\displaystyle{ \frac{7}{5} }[/math]. Complexity-weight advocates may take inspiration from the words of Harry Partch, who wrote in Genesis of a Music: "Since the importance of an identity in tonality decreases as its number increases … 11 is the weakest of the six identities of Monophony; hence the necessity for exact intonation. The bruited argument that the larger the prime number involved in the ratio of an interval the greater our license in playing the interval out of tune can lead only to music-theory idiocy. If it is not the final straw—to break the camel's tympanum—it is at least turning him into an amusical ninny."[note 19]

- They are also more sensitive to increase in beat-rate with retuning. For example, for the interval [math]\displaystyle{ \frac{2}{1} }[/math] it is the 2nd harmonic of the low note and the 1st harmonic of the high note that beat against one another. For the interval [math]\displaystyle{ \frac{8}{5} }[/math] it is the 8th harmonic of the low note and the 5th of the high note. Assuming the low note was 100 Hz in both cases, the beating frequencies are around 200 Hz in the first case and 800 Hz in the second case. A 9 ¢ error at 200 Hz is a 1 Hz beat. A 9 ¢ error at 800 Hz is a 4 Hz beat.
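The beat-rate arithmetic in the second point can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the function name `beat_rate_hz` is hypothetical, the low note is held fixed while the high note carries the whole error, and only the lowest pair of nearly coinciding harmonics is considered.

```python
def beat_rate_hz(low_note_hz: float, numerator: int, error_cents: float) -> float:
    """Approximate beat rate for an interval numerator/denominator above low_note_hz.

    The numerator-th harmonic of the low note sits at numerator * low_note_hz.
    The matching harmonic of the mistuned high note is shifted from that
    frequency by error_cents, and the two beat at their frequency difference.
    """
    coinciding_hz = numerator * low_note_hz
    return coinciding_hz * abs(2 ** (error_cents / 1200) - 1)


octave_beats = beat_rate_hz(100, 2, 9)       # 2/1: harmonics meet near 200 Hz
minor_sixth_beats = beat_rate_hz(100, 8, 9)  # 8/5: harmonics meet near 800 Hz
print(round(octave_beats, 2), round(minor_sixth_beats, 2))
```

With a 100 Hz low note and a 9 ¢ error, this gives roughly 1 Hz of beating for [math]\displaystyle{ \frac{2}{1} }[/math] and roughly 4 Hz for [math]\displaystyle{ \frac{8}{5} }[/math], in line with the figures above.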

So a complexity-weight advocate will argue that membership in the target-interval set is how one should set the upper bound on the complexity of the intervals one tunes for, while the weighting should increasingly emphasize accuracy as intervals approach that bound. Anything past the bound is not considered at all.

So to a simplicity-weight advocate, this sudden change in how much you care about errors, between the most complex interval in your target-interval set and anything beyond it, may seem unnatural. To a complexity-weight advocate, though, the opposite approach might seem like a strange partial merging of two concepts, where instead one should handle each concept with its own lever (lever one: target-interval set membership; lever two: damage weight).

Terminological notes

While it may be clear from context what one means if one says one weights a target-interval, in general it is better to be clear about the fact that what one really weights is a target-interval's error, not the interval itself. This is especially important when dealing with complexity-weight damage, since the error we care most about is for the least important (weakest) intervals.

Also, note that we do not speak of "complexity-weighted damage", because it is not the damage that gets multiplied by the weight, it is the absolute error. Instead the quantity is called "complexity-weight damage" (no "-ed"), and similarly for "simplicity-weight damage" and "unity-weight damage".

Simplicity

For simplicity's sake (ha ha), we suggest that absolute error weighting should always be thought of as a multiplication, never as a division. Complexity-weighting versus simplicity-weighting is confusing enough without causing some people to second-guess themselves by worrying about whether one should multiply or divide by a quantity. Let's just keep things straightforward and always multiply.

We have found that the least confusing way to conceptualize the difference between complexity-weighting and simplicity-weighting is to always treat weighting as multiplication like this, and then to define simplicity as the reciprocal of complexity. So if [math]\displaystyle{ \frac{3}{2} }[/math]'s log-product complexity is [math]\displaystyle{ 2.585 }[/math], then its log-product simplicity is [math]\displaystyle{ \frac{1}{2.585} \approx 0.387 }[/math]. And if [math]\displaystyle{ \frac{32}{21} }[/math]'s log-product complexity is [math]\displaystyle{ 9.392 }[/math], then its log-product simplicity is [math]\displaystyle{ \frac{1}{9.392} \approx 0.106 }[/math].

So, when complexity-weighting, if [math]\displaystyle{ \frac{3}{2} }[/math] and [math]\displaystyle{ \frac{32}{21} }[/math] are both in your target-interval set, and if at some point in the optimization process they both have an absolute error of 2 ¢, and log-product complexity is your complexity function, then [math]\displaystyle{ \frac{3}{2} }[/math] incurs 2 ¢ × 2.585 (C) = 5.170 ¢(C) of damage, and [math]\displaystyle{ \frac{32}{21} }[/math] incurs 2 ¢ × 9.392 (C) ≈ 18.785 ¢(C) of damage. Note the units of ¢(C), which can be read as "complexity-weighted cents" (we can use this name because log-product complexity is the standard complexity function; otherwise we should qualify it as "[complexity-type]-complexity-weighted cents"). You can see, then, that if we were computing a minimax tuning, [math]\displaystyle{ \frac{3}{2} }[/math]'s damage of 5.170 ¢(C) wouldn't affect anything, while [math]\displaystyle{ \frac{32}{21} }[/math]'s damage of 18.785 ¢(C) may well make it the target-interval causing the most damage, and so the optimization procedure will seek to reduce it. And if the optimization procedure does reduce the damage to [math]\displaystyle{ \frac{32}{21} }[/math], then it will end up with less error than [math]\displaystyle{ \frac{3}{2} }[/math], since they both started out with 2 ¢ of it.[note 20]

If, on the other hand, we were simplicity-weighting, the damage to [math]\displaystyle{ \frac{3}{2} }[/math] would be 2 ¢ × 0.387 = 0.774 ¢(S) and to [math]\displaystyle{ \frac{32}{21} }[/math] would be 2 ¢ × 0.106 = 0.213 ¢(S). These units of ¢(S) can be read "simplicity-weighted cents" (again, owing to the fact that we used the standard complexity function of log-product complexity, or rather here, its reciprocal, log-product simplicity). So here we have the opposite effect. Clearly the damage to [math]\displaystyle{ \frac{3}{2} }[/math] is more likely to be caught by an optimization procedure and sought to be minimized, while the [math]\displaystyle{ \frac{32}{21} }[/math] interval's damage is not going to affect the choice of tuning nearly as much, if at all.
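The weighting arithmetic for all three slopes can be sketched as follows. This is a minimal Python illustration, not the authors' implementation: the function names and the `slope` parameter with its string values are hypothetical, and the sketch always multiplies the absolute error by a weight, with simplicity taken as the reciprocal of log-product complexity.

```python
from fractions import Fraction
from math import log2


def log_product_complexity(ratio: Fraction) -> float:
    """Base-2 log of numerator times denominator."""
    return log2(ratio.numerator * ratio.denominator)


def damage(abs_error_cents: float, ratio: Fraction, slope: str) -> float:
    """Weight an absolute error (in cents) by multiplication only."""
    if slope == "complexity":
        weight = log_product_complexity(ratio)
    elif slope == "simplicity":
        weight = 1 / log_product_complexity(ratio)  # simplicity = 1 / complexity
    elif slope == "unity":
        weight = 1.0
    else:
        raise ValueError(f"unknown slope: {slope}")
    return abs_error_cents * weight


for ratio in (Fraction(3, 2), Fraction(32, 21)):
    for slope in ("complexity", "simplicity", "unity"):
        print(ratio, slope, round(damage(2.0, ratio, slope), 3))
# prints:
# 3/2 complexity 5.17
# 3/2 simplicity 0.774
# 3/2 unity 2.0
# 32/21 complexity 18.785
# 32/21 simplicity 0.213
# 32/21 unity 2.0
```

The same 2 ¢ of error puts [math]\displaystyle{ \frac{32}{21} }[/math] on top under complexity-weight and [math]\displaystyle{ \frac{3}{2} }[/math] on top under simplicity-weight.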

Weights are always non-negative.[note 21] If damage is always non-negative, and damage is possibly-weighted absolute error, and absolute error by definition is non-negative, then weight too must be non-negative.

Here's a summary table of the relationship between damage, error, weight, complexity, and simplicity:

| Value | Variable | Possible equivalences |
|---|---|---|
| Damage | [math]\displaystyle{ \mathrm{d} }[/math][note 22] | [math]\displaystyle{ \lvert\mathrm{e}\rvert }[/math] or [math]\displaystyle{ \lvert\mathrm{e}\rvert \times w }[/math] |
| Error | [math]\displaystyle{ \mathrm{e} }[/math] | |
| Weight | [math]\displaystyle{ w }[/math] | [math]\displaystyle{ c }[/math] or [math]\displaystyle{ s }[/math] |
| Complexity | [math]\displaystyle{ c }[/math] | [math]\displaystyle{ \frac{1}{s} }[/math] |
| Simplicity | [math]\displaystyle{ s }[/math] | [math]\displaystyle{ \frac{1}{c} }[/math] |

Example

In this section, we will introduce several new vocabulary terms and notations by way of an example. Our goal here is to find the average, RMS, and max damage of one arbitrarily chosen tuning of meantone temperament, given a particular target-interval set. Note that we're not finding the miniaverage, miniRMS, or minimax given other traits of some tuning scheme; to find any of those, we'd essentially need to repeat the following example on every possible tuning until we found the one with the smallest result for each minimization. (For detailed instructions on how to do that, see our tuning computation article.)

This is akin to drawing a vertical line through tuning damage space, and finding the value of each graph—each target-interval damage graph, and each damage statistic graph (average, RMS, and max)—at the point it intersects that line.
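For orientation, the three damage statistics themselves are simple to compute once a list of target-interval damages is in hand. The Python sketch below uses a hypothetical helper name, `damage_statistics`, and purely illustrative placeholder damages; they are not the damages of the meantone tuning in this example.

```python
from math import sqrt


def damage_statistics(damages: list[float]) -> tuple[float, float, float]:
    """Return the (average, RMS, max) of a list of non-negative damages."""
    count = len(damages)
    average = sum(damages) / count
    rms = sqrt(sum(d * d for d in damages) / count)
    return average, rms, max(damages)


# Placeholder damages for illustration only, one per target-interval.
placeholder_damages = [0.0, 3.0, 4.0]
average, rms, maximum = damage_statistics(placeholder_damages)
print(round(average, 3), round(rms, 3), maximum)
# prints: 2.333 2.887 4.0
```

Finding a miniaverage, miniRMS, or minimax tuning would mean searching over tunings for the smallest value of the corresponding statistic, which this sketch does not attempt.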

Target-intervals

Suppose our target-interval set is the 6-TILT: [math]\displaystyle{ \left\{ \frac{2}{1}, \frac{3}{1}, \frac{3}{2}, \frac{4}{3}, \frac{5}{2}, \frac{5}{3}, \frac{5}{4}, \frac{6}{5} \right\} }[/math].

We can then set our target-interval list to be [math]\displaystyle{ \left[\frac{2}{1}, \frac{3}{1}, \frac{3}{2}, \frac{4}{3}, \frac{5}{2}, \frac{5}{3}, \frac{5}{4}, \frac{6}{5}\right] }[/math]. We use the word "list" here—as opposed to "set"—in order to suggest that the elements now have an order, because it will be important to preserve this order moving forward, so that values match up properly when we zip them up together. That's why we've used square brackets [·] to denote it rather than curly brackets {·}, since the former are conventionally used for ordered lists, while the latter are conventionally used for unordered sets.

Our target-interval list, notated as [math]\displaystyle{ \mathrm{T} }[/math], may be seen as a matrix, like so:

[math]\displaystyle{
\mathrm{T} =
\left[ \begin{array} {r|r|r|r|r|r|r|r}
\;\;1 & \;\;\;0 & {-1} & 2 & {-1} & 0 & {-2} & 1 \\
0 & 1 & 1 & {-1} & 0 & {-1} & 0 & 1 \\
0 & 0 & 0 & 0 & 1 & 1 & 1 & {-1} \\
\end{array} \right]
}[/math]

This is a [math]\displaystyle{ (d, k) }[/math]-shaped matrix, which is to say:

- There are [math]\displaystyle{ d }[/math] rows where [math]\displaystyle{ d }[/math] is the dimensionality of the temperament, or in other words, the count of primes, or more generally, the count of basis elements. In this case [math]\displaystyle{ d=3 }[/math] because we're in a standard basis of a prime limit, specifically the 5-limit, where each next basis element is the next prime, and 5 is the 3rd prime.

- There are [math]\displaystyle{ k }[/math] columns where [math]\displaystyle{ k }[/math] is the target-interval count. You can think of [math]\displaystyle{ k }[/math] as standing for "kardinality" or "kount" of the target-interval list, if that helps.

We read this matrix column by column, from left to right; each column is a (prime-count) vector for the next target-interval in our list, and each of these vectors has one entry for each prime. The first column [1 0 0⟩ is the vector for [math]\displaystyle{ \frac{2}{1} }[/math], the second column [0 1 0⟩ is the vector for [math]\displaystyle{ \frac{3}{1} }[/math], the third column [-1 1 0⟩ is the vector for [math]\displaystyle{ \frac{3}{2} }[/math], and so on (if you skipped the previous article in this series and are wondering about the [...⟩ notation, that's Extended bra-ket notation).
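To make the column-per-interval layout concrete, here is a small Python sketch that builds the matrix from the ordered target-interval list. The names `PRIMES`, `prime_count_vector`, and `target_interval_matrix` are hypothetical, and the sketch assumes the 5-limit basis of primes 2, 3, and 5 used in this example.

```python
from fractions import Fraction

PRIMES = (2, 3, 5)  # the 5-limit basis, so d = 3


def prime_count_vector(ratio: Fraction, primes=PRIMES) -> list[int]:
    """Count each prime in the ratio: positive in the numerator, negative in the denominator."""
    numerator, denominator = ratio.numerator, ratio.denominator
    vector = []
    for prime in primes:
        count = 0
        while numerator % prime == 0:
            numerator //= prime
            count += 1
        while denominator % prime == 0:
            denominator //= prime
            count -= 1
        vector.append(count)
    if numerator != 1 or denominator != 1:
        raise ValueError(f"{ratio} is outside the given prime basis")
    return vector


def target_interval_matrix(ratios: list[Fraction]) -> list[list[int]]:
    """Place each interval's vector in its own column, giving d rows and k columns."""
    vectors = [prime_count_vector(ratio) for ratio in ratios]
    return [list(row) for row in zip(*vectors)]


target_interval_list = [
    Fraction(2, 1), Fraction(3, 1), Fraction(3, 2), Fraction(4, 3),
    Fraction(5, 2), Fraction(5, 3), Fraction(5, 4), Fraction(6, 5),
]
T = target_interval_matrix(target_interval_list)
for row in T:
    print(row)
# prints:
# [1, 0, -1, 2, -1, 0, -2, 1]
# [0, 1, 1, -1, 0, -1, 0, 1]
# [0, 0, 0, 0, 1, 1, 1, -1]
```

Because the input is an ordered list, column order in the result follows the list order, which is what later lets per-interval values line up with the right intervals.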

(For purposes of tuning, there is no difference between the superunison and subunison versions of an interval, e.g. [math]\displaystyle{ \frac{3}{2} }[/math] and [math]\displaystyle{ \frac{2}{3} }[/math], respectively. So we'll be using superunisons throughout this series. Technically we could use undirected ratios, written with a colon, like 2:3; however, for practical purposes—in order to put interval vectors into our matrices and such—we need to just pick one direction or the other.)

Generators

In order to know what errors we're working with, we first need to state that arbitrary tuning we said we were going to be working with. We also said we were going to work with meantone temperament here. Meantone is a rank-2 temperament, and so we need to tune two generators.