Linear dependence

Bases, such as comma bases, are considered linearly dependent when they share a common basis vector. In other words, that they can form an identical vector through linear combinations of their member vectors.

When basis vector sets do not share a common basis vector like this, they are linearly independent. Linearly dependent basis vector sets are in a sense more closely related to each other than linearly independent basis vector sets.

Linear dependence is involved in certain operations used in regular temperament theory, such as the wedge product or temperament addition, which are defined for objects that can be interpreted as basis vector sets, such as matrices or multivectors, and that also represent regular temperaments.

Linear dependence as defined for various types of vector sets

Linear dependence is defined for several objects relevant to RTT that can be defined as basis vector sets. These objects will each be discussed in detail below.

Linear dependence between basis matrices

Linear dependence is defined on sets of basis matrices (matrices acting as bases), such as two temperaments' mappings[1], or two temperaments' comma bases.

A set of basis matrices are linear dependent upon each other when some vector can be found where each basis matrix can produce this vector through a linear combination of its own constituent basis vectors. For a very simple example, the mappings [⟨5 8 12] ⟨7 11 16]} and [⟨7 11 16] ⟨15 24 35]} are linearly dependent because both mappings contain the vector ⟨7 11 16]. For a less obvious example, the mappings [⟨1 0 -4] ⟨0 1 4]} and [⟨1 2 3] ⟨0 3 5]} are also linearly dependent, because the vector ⟨7 11 16] can be found through linear combinations of each of their rows; in the first mapping's case, ⟨7 11 16] = 7⟨1 0 -4] + 11⟨0 1 4], and in the second mapping's case, ⟨7 11 16] = 7⟨1 2 3] + -1⟨0 3 5].

Sometimes basis matrices can share not just one basis vector, but multiple basis vectors. For example, the comma basis [[-30 19 0 0⟩ [-26 15 1 0⟩ [-17 9 0 1⟩] and the comma basis [[-19 12 0 0⟩ [-15 8 1 0⟩ [-6 2 0 1⟩] share both the vector [4 -4 1 0⟩ as well as the vector [13 -10 0 1⟩:

- [4 -4 1 0⟩ = [-26 15 1 0⟩ - [-30 19 0 0⟩

- [4 -4 1 0⟩ = [-15 8 1 0⟩ - [-19 12 0 0⟩

- [13 -10 0 1⟩ = [-17 9 0 1⟩ - [-30 19 0 0⟩

- [13 -10 0 1⟩ = [-6 2 0 1⟩ - [-19 12 0 0⟩

These two basis matrices are the comma bases dual to the 7-limit uniform maps for 12-ET and 19-ET, respectively. [4 -4 1 0⟩ is the meantone comma and [13 -10 0 1⟩ is Harrison's comma, so we can say that both of these temperaments make both of these commas vanish.

For a given set of basis matrices, how to compute a basis for their linearly dependent vectors

A basis for the linearly dependent vectors of a set of basis matrices, or in other words, a linear-dependence basis [math]\displaystyle{ L_{\text{dep}} }[/math] can be computed using temperament merging.

- To check if two mappings are linearly dependent, we use a comma-merge. That is, we take the dual of each mapping to find its corresponding comma basis. Then we concatenate these two comma bases into one bigger comma basis. Finally, we take the dual of this comma basis to get back into mapping form. If this result is an empty matrix, then the mappings are linearly independent, and otherwise the mappings are linearly dependent and the result gives their linear-dependence basis.

- To check if two comma bases are linearly dependent, we use a map-merge. This process exactly parallels the process for checking two mappings for linear dependence. Take the duals of the comma bases to get two mappings, concatenate them into a single mapping, and take the dual again to get back to comma basis form. If the result is an empty matrix, the comma bases are linearly independent, and otherwise they are linearly dependent and the result gives a their linear-dependence basis.

Certainly there are other ways to determine linear dependency, but this method is handy because if the basis matrices are linearly dependent, then it also gives you [math]\displaystyle{ L_{\text{dep}} }[/math].

Linear dependence between multivectors

Linear dependence is defined for sets of multivectors, such as two temperaments' multimaps, or two temperaments' multicommas. For more information, see Douglas Blumeyer and Dave Keenan's Intro to exterior algebra for RTT#Linear dependence between multivectors.

Linear dependence within a single basis matrix

Linear dependence is defined among the basis vectors of a single basis matrix. For more information, see #rank-deficiency and full-rank.

Linear dependence between individual vectors

Linear dependence is defined between sets where each contains only a single basis vector. The sense in which individual basis vectors like this can be linearly dependent is the simplest of all: it is only if they are multiples of each other. For example, ⟨12 19 28] and ⟨24 38 56] are linearly dependent, because ⟨24 38 56] = 2⟨12 19 28]. But ⟨12 19 28] and ⟨12 19 27] are not.

Linear dependence between temperaments

The conditions of temperament addition motivate a special definition of linear dependence for temperaments. For more information, see: Temperament addition#2. Linear dependence between temperaments.

RTT applications involving linear dependence

Wedge product

Linear dependence has an interesting effect on the wedge product. Ordinarily, the wedge product can be used with multivectors to find new temperaments in an equivalent way to how new temperaments are found using concatenation with matrices. However, if the wedged multivectors are linearly dependent, then the multivector resulting from their wedge product will have all zeros for entries, and therefore it will not represent any temperament. Linear dependence does not impose this limitation on the concatenation of matrices approach, however; if equivalent linearly dependent matrices are concatenated, then a new matrix representing a new temperament will be produced. For more information, see Douglas Blumeyer and Dave Keenan's Intro to exterior algebra for RTT#The linearly dependent exception to the wedge product.

Temperament addition

Temperament addition only results in a usable temperament when the input temperaments are addable, an advanced property that builds upon linear dependence. For more information, see Temperament addition#Addability.

Variance

Linear dependence is defined both for basis vector sets whether they are covariant ("covectors", such as maps) or contravariant (plain "vectors", such as prime-count vectors). For simplicity, this article will use the word "vector" in its general sense, which includes either plain/contravariant vectors or (covariant) covectors.[2]

Plain vectors and covectors cannot be compared with each other, however. Linear dependence is only defined for a set of basis vector sets, or a set of basis covector sets. Linear dependence is not defined for a set including both basis vector sets and basis covector sets. For example, a set including one mapping (a basis covector set) and one comma basis (a basis "plain-vector" set) has no directly meaningful notion of linear dependence[3]. So, while it is convenient to use "vector" for either type, it is important to be careful to use only on type at a time, never mixing the two types.

Versus collinearity

Basis vector sets would be considered collinear if, not only were they linearly dependent, every vector able to be formed from any of their basis vectors can all be reduced to single basis vector. This would mean that literally every formable vector in any of the basis vector sets would fall along the same geometric line. So this is the same as the notion of collinearity in geometry, where three or more points found on the same line are said to be collinear, which also works for a set of lines or line segments being along the same line. And in geometrical terms, a vector could be considered to be a directed line segment.

Rank-deficiency and full-rank

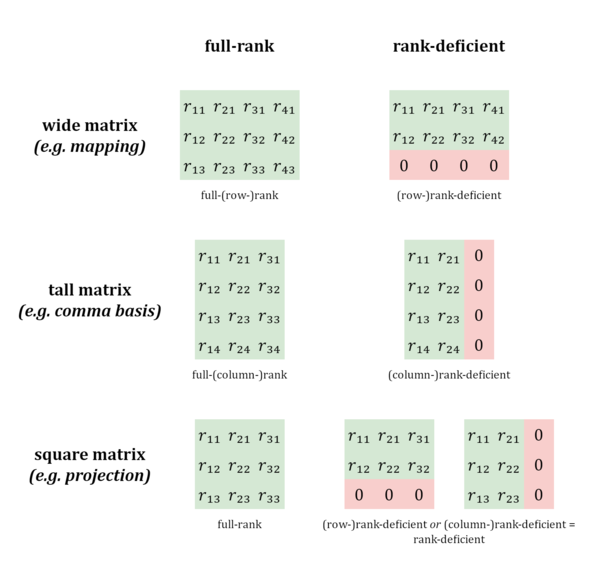

A matrix is full-rank when its rank equals whichever is smaller between its width (column count) and height (row count):

- For a wide matrix (height is smaller), it is full-rank when its rank equals its height.

- For a tall matrix (width is smaller), it is full-rank when its rank equals its width.

- For a square matrix (height = width), it is full-rank when its rank equals its height and width.

If a matrix is not full-rank, then it is considered rank-deficient.

"Rank" here is being used in the linear algebra sense, where it refers to the shape of a matrix after it has been put into a particular reduced form. For details on the additional meaning that "rank" takes on for regular temperaments in RTT, see the RTT rank section below. For more details on the reduced form used to check rank, see the Checking rank section below.

Checking rank

- For a wide matrix, put it into (row-style) Hermite normal form (HNF for short) and remove its all-zero rows; the height of this reduced matrix is its rank.

- For a tall matrix, put it into column-style HNF (CHNF for short) and remove its all-zero columns; the width of this matrix is its rank.

- For a square matrix, either of these two approaches can be used; the result will be the same.

Using mathematical software, checking whether a matrix is full-rank can be done quickly and easily; for example, in Wolfram Language, we can run MatrixRank[A] == Min[Dimensions[A]].

Row-rank and column-rank

For a wide matrix, such as a mapping matrix in RTT, column-style HNF would inevitably reveal at least one all-zero column. And so, all-zero columns are not of primary interest here. Instead, we are only interested in whether or not the reduced matrix contains any all-zero rows. Consequently, it may be clearer to be more specific, and speak of its "row-rank", rather than simply its "rank". When a wide matrix is full-row-rank, this means that all of its rows are linearly independent. And if a wide matrix is not full-row-rank, then some of its rows are linearly dependent, and it is row-rank-deficient.

Analogously, for a tall matrix (such as a comma basis in RTT), we're only interested in all-zero columns, and may speak more specifically of its column-rank. When a tall matrix is full-column-rank, this means that all of its columns are linearly independent, and otherwise, some of its columns are linearly dependent and therefore it is column-rank-deficient.

However, regardless whether a matrix is wide, tall, or square, its row-rank will always equal its column-rank. In other words, if we both HNF and CHNF a matrix, we will find a square reduced matrix, whose width and height are both equal to the rank. For intuition on why this happens, see the #Why row-rank always equals column-rank section below.

RTT rank

In RTT, the term "rank" is most often used to refer to the rank of a regular temperament. A regular temperament is a musical abstraction, not a matrix, and therefore the linear algebra sense of "rank" does not directly apply to it. However, the historically established convention has been to define a temperament's rank as the rank of its mapping matrix, which is thereby taken to be the primary representation of the temperament in this context.

This notion of rank of a temperament poses some risk for confusion, however, because mappings are not the only matrices which can be used to represent temperaments; their duals, comma bases, may also be used for this purpose. And since the comma basis is dual to its mapping, then by the rank-nullity theorem, the rank of the comma basis is not necessarily equal to the rank of the temperament. Rather, the rank of the comma basis is equal to the nullity of the temperament (and the mapping). In order to avoid confusion on this matter, then, whenever we wish to refer to the rank of a comma basis, we can be more specific and refer to its column-rank.

Canonical form guarantees full-rank

A mapping that is in canonical form is guaranteed to be full-(row-)rank. A comma basis that is in canonical form is guaranteed to be full-column-rank.

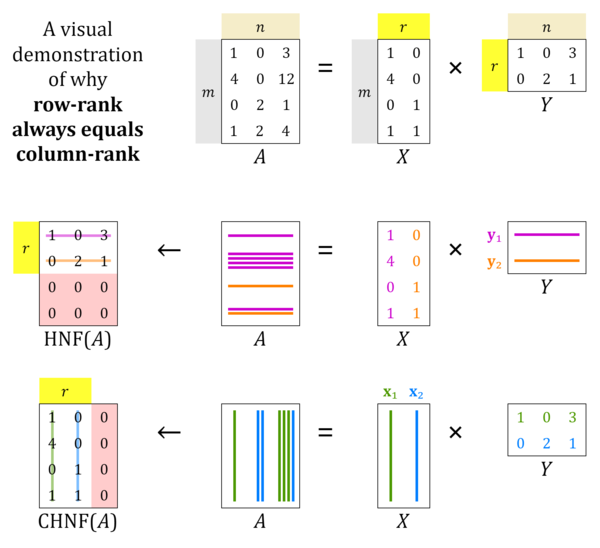

Why row-rank always equals column-rank

Any [math]\displaystyle{ (m,n) }[/math]-shaped matrix [math]\displaystyle{ A }[/math] can be expressed as the product of a [math]\displaystyle{ (m,r) }[/math]-shaped matrix [math]\displaystyle{ X }[/math] and a [math]\displaystyle{ (r,n) }[/math]-shaped matrix [math]\displaystyle{ Y }[/math], such that the [math]\displaystyle{ r }[/math]-dimensions cancel out in the middle and we're left with an [math]\displaystyle{ (m,n) }[/math]-shaped matrix.

By the definition of matrix multiplication, we can interpret [math]\displaystyle{ X }[/math] as the matrix whose rows instruct us how to express the rows of [math]\displaystyle{ A }[/math] as linear combinations of the rows of [math]\displaystyle{ Y }[/math], and vice versa, [math]\displaystyle{ Y }[/math]'s columns instruct us how to express the columns of [math]\displaystyle{ A }[/math] as linear combinations of the columns of [math]\displaystyle{ X }[/math]. For example, if [math]\displaystyle{ X }[/math] and [math]\displaystyle{ Y }[/math] are both [math]\displaystyle{ (3,3) }[/math]-shaped matrices and the third column of [math]\displaystyle{ Y }[/math] is [3 0 2⟩, that tells us that the third column of [math]\displaystyle{ A }[/math] will equal 3 of the first column of [math]\displaystyle{ X }[/math] plus 2 of the third column of [math]\displaystyle{ X }[/math].

So suppose we ask: what's the smallest we could make [math]\displaystyle{ r }[/math] for a given [math]\displaystyle{ A }[/math]? If we can find an [math]\displaystyle{ X }[/math] and a [math]\displaystyle{ Y }[/math] such that [math]\displaystyle{ r = 2 }[/math], this tells us both that every row of [math]\displaystyle{ A }[/math] is a linear combination of only two different rows, specifically the two rows of [math]\displaystyle{ Y }[/math], and also that every column of [math]\displaystyle{ A }[/math] is a linear combination of only two different columns, specifically the two columns of [math]\displaystyle{ X }[/math]. Which means that if we ask for either the row-rank or the column-rank of [math]\displaystyle{ A }[/math], we'll get [math]\displaystyle{ 2 = r }[/math].

In general, we can see that this minimized [math]\displaystyle{ r }[/math] will give us both [math]\displaystyle{ A }[/math]'s row- and column- rank, and thus that these two ranks are always equal.

And now for an RTT example with demonstrations in Wolfram Language code. For an example with a square matrix, we can look to projection. A projection matrix [math]\displaystyle{ P }[/math] is a generator embedding matrix [math]\displaystyle{ G }[/math] times a mapping [math]\displaystyle{ M }[/math], or [math]\displaystyle{ P = GM }[/math]. We know that [math]\displaystyle{ P }[/math] is [math]\displaystyle{ (d,d) }[/math]-shaped, [math]\displaystyle{ G }[/math] is [math]\displaystyle{ (d,r) }[/math]-shaped, and [math]\displaystyle{ M }[/math] is [math]\displaystyle{ (r,d) }[/math]-shaped.

Consider third-comma meantone, a tuning of a rank-2 temperament of 5-limit JI, where we have [math]\displaystyle{ d = 3 }[/math] and [math]\displaystyle{ r = 2 }[/math].

P = {

{ 1, 4/3, 4/3 },

{ 0, -4/3, -1/3 },

{ 0, 4/3, 1/3 }

};

Last[HermiteDecomposition[P]]

Last[HermiteDecomposition[Transpose[P]]

→ {

{ 1, 0, 1 },

{ 0, 4/3, 1/3 },

{ 0, 0, 0 }

}

→ {

{ 1/3, 2/3, -2/3 },

{ 0, 1, -1 },

{ 0, 0, 0 }

}

On the other hand, we have the minimax-E-copfr-S (or primes miniRMS-U) tuning of 12-ET, where [math]\displaystyle{ d }[/math] still equals [math]\displaystyle{ 3 }[/math] but now [math]\displaystyle{ r = 1 }[/math]:

M = {{ 12, 19, 28 }};

G = PseudoInverse[M]

→ {{ 12, 19, 28 }} / 1289

P = G.M

→ {

{ 12² , 12·19, 12·28 },

{ 19·12, 19² , 19·28 },

{ 28·12, 28·19, 28² }

} / 1289

Last[HermiteDecomposition[P]]

antitranspose[Last[HermiteDecomposition[antitranspose[P]]]]

→ {

{ 12, 19, 28 },

{ 0, 0, 0 },

{ 0, 0, 0 }

}/1289

→ {

{ 12, 19, 28 },

{ 0, 0, 0 },

{ 0, 0, 0 }

}/1289

So we can see that that in either case, the HNF and CHNF give the same count of all-zero vectors, and that the count of remaining vectors is equal to [math]\displaystyle{ r }[/math].

Footnotes

- ↑ Mappings are not typically thought of as bases, but their row vectors can be considered to span rowspaces in an analogous way that comma bases span spaces.

- ↑ This article will also use "multivector" to refer to either plain/contravariant multivectors or (covariant) multicovectors (elsewhere on the wiki you will find "varianced multivector" to refer unambiguously to either type in the general sense).

- ↑ though the two temperaments here — the one defined by this mapping, and the other defined by this comma basis — can have a notion of linear dependence, as can be understood by finding the comma basis that is the dual of the mapping and checking the two comma bases for linear dependence, or vice versa, finding the mapping that is the dual of the comma basis and checking the two mappings for linear dependence. This notion of linear dependence is discussed in more detail here.