Categorical Entropy, Mutual Information, and Channel Capacity

The Categorical Entropy (CE), Categorical Mutual Information (CMI), and Categorical Channel Capacity (CCC) are simple additive white Gaussian noise (AWGN) models quantifying how distinct the notes and intervals in a scale are from one another. They were developed by Mike Battaglia and Keenan Pepper.

Introduction

One goal of xenharmonic music is to create music in new tuning systems that sounds "intuitive", "familiar", "intelligible", "hospitable", etc. To achieve this, some xenharmonic music theorists look for qualities exhibited by current or historical musical traditions (e.g. European/American, Middle Eastern, Indian, Indonesian musics), and attempt to generalize them. Such generalized properties may be used to determine families of tuning systems which share common features.

So far, much of this generalization has been focused on the search for tunings with chords that approximate simple fragments of the harmonic series, which produce psychoacoustic effects considered desirable in Western polyphonic music. To that extent, models have been developed such as Paul Erlich's "Harmonic Entropy," which attempts to quantify directly the "approximate harmonicity" of a chord, as well as the theory of regular temperament, which provides a method to find tuning systems that can approximate simple harmonic chords to within a desired accuracy level.

It has become clear, however, that there exist other phenomena than the harmonic ones described above that are similarly worth generalizing. In this article, we present a basic analysis of such phenomena that one might term "scalar" or "categorical."

Perception of Scale and Mode

Within a broad variety of musical traditions, many listeners exhibit some sense that there exists a background "scale" or "mode" that a piece of music can be "in" at some point in time, to which notes can either "belong" or not "belong." Listeners of these musical traditions typically learn to distinguish these scales from one another, either consciously or unconsciously, to the extent that they are usually able to determine which scale is the correct one to sing a melody in for some piece of music.

Virtually all of the world's musical traditions make use of scales in this way, to which they often ascribe different emotional qualities. Distinguishing between these scales, and more generally distinguishing between which notes are "appropriate" or "inappropriate" to play at a particular time in a piece of music, is usually considered important to most musical traditions. The general ability of most listeners to do this has been established in a large body of research by Carol Krumhansl and others.[1]

What is a "Note?"

The notion that scales are made up of discrete "notes" is less trivial than it would appear at first glance. For example, the tuning of each "note," in practice, can often vary considerably, even within a short time duration in a performance. Notably, techniques such as heavy vibrato are used deliberately in a wide assortment of musical traditions. Notes can also be adjusted intonationally to create various melodic or harmonic effects, or can be "bent" in a continuous glide.

We typically take for granted our ability to recognize, usually without much thought, such detunings as being "different tunings of the same note" rather than "two different notes." These phenomena routinely appear in music without disturbing the listener's sense of which note is being played, or where that note is located in the scale. And indeed, music would sound very different to us if our perception of notes, and all the melodic, harmonic, and "tonal" qualities they entail, were rendered totally unrecognizable every time a singer were 15 cents out of tune or used vibrato!

We consider the above an interesting feature of many musical traditions which we would like to model. However, some scales may lend themselves to being categorized into "background notes" more easily than others. We would like to understand how this works, quantify it, and find scales for which the perception of each note is as robust to mistuning as possible.

Categorical Perception and Ear Training

There is evidence in the literature that musical ear training enhances the ability of listeners to quantize the pitch spectrum into notes in the manner described above. This phenomenon has been called "categorical perception" in the literature.[2][3] Listeners develop a heightened ability to perceive pitches as intonational variants of the underlying set of "categories" of notes. Each note develops a kind of "channel," "bandwidth," etc., surrounding the reference pitch, and pitches within the channel tend to be perceived as representing an "intonational variation" of the reference category.

It has even been shown that trained listeners have an easier time distinguishing between equidistant intervals if they correspond to different categories.[4] For example, trained Western musicians typically have an easier time distinguishing between 325 and 375 cents than between 375 and 425 cents, since the former pair corresponds to a "minor third" vs a "major third", whereas the latter pair corresponds to two "major thirds." Listeners with absolute pitch (AP) typically have an additional level of categorical perception for absolute pitches, rather than pitches relative to some tonic.[5]

One may be tempted, from the above literature, to conclude that years of ear training are necessary to be able to understand a new musical tuning. However, there is also research showing that listeners are good "musical tourists": they are generally able to identify, given a piece of music in a foreign musical tradition with which they have no experience, which notes are considered "appropriate" or "inappropriate" to play at a particular time in that piece.[6] This was studied explicitly in the context of North Indian and Gamelan music being played to Western listeners, and compared with expert "native" listeners: Western listeners were generally able to produce "rankings" of notes by "appropriateness" that were relatively consistent with those of the native listeners, although it is noteworthy that in expert listeners, the rankings were influenced somewhat by the note's perceived "membership" among a set of traditional scales.[7][8]

The above is evidence that, even absent any particular ear training regimen, the music has an intrinsic ability to "train" the listener's ear to develop some sense of the basic discrete "notes" currently being used, as well as their musical relevance through the course of a composition. Furthermore, given that many musical traditions can also exhibit vibrato, a range of intonational deviations, or in the case of Gamelan music, deliberate mistuning to create a chorus-like effect, it is reasonable to suggest that the listener's ability to do this exhibits some degree of robustness to intonational or tuning variations.

We consider that many of the world's musical traditions may have developed a structure that makes the music particularly "intelligible" on this level, both in the choice of scales that they use and in the way they choose to play them.

Categorical Perception vs JI "Interpretations"

Although this has not been studied explicitly in the literature, we consider there to be substantial evidence that this perception of different note "categories," or more generally interval or chord categories, does not necessarily correspond to the perception of different underlying JI "interpretations," or at least not in a simple one-to-one way.

A good example is traditional barbershop music. Barbershop quartets will switch between intonational variants of intervals to obtain the simplest JI representation possible - for example, a barbershop quartet may tune a "minor third" as 6/5 to bring it closer to 4:5:6, or as 7/6 if it is the top dyad of a dominant 7 chord, so as to tune the entire thing to 4:5:6:7. However, the intonational shift from 6/5 to 7/6 does not render it unrecognizable as an intonational variant of the original note, or disrupt the sense of scale position, in the same way it would if the intonation were shifted from 6/5 to 5/4. Were this not the case, barbershop music would sound very different.

That this is even possible is non-trivial, and tells us that these are two distinct phenomena. Indeed barbershop quartets get very good at this, to the extent that the entire barbershop tradition can be thought of as being a method of adjusting the JI interpretation of chords while preserving the scalar, melodic, and tonal structure of the music.

An Example: Categorical Experiments

A good set of listening examples to demonstrate this are the "Categorical Experiments" by Mike Battaglia retuning the Bach Fugue in C major, where the fifth varies from 686 cents (7-EDO) to 720 cents (5-EDO).

When the fifth is tuned to a meantone tuning, such as 31-EDO, the thirds are decent approximations to 5/4 and 6/5. When the fifth is tuned to a superpyth tuning, such as 22-EDO, the thirds are now decent approximations to 9/7 and 7/6. However, many Western listeners seem to agree that there exists some level of the music that remains intelligible through all of these retunings - the sense of scale, melody, and chord qualities such as "major and minor" - despite the differing JI interpretations used.

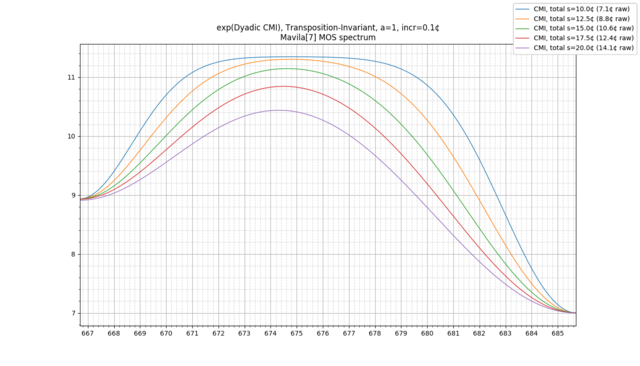

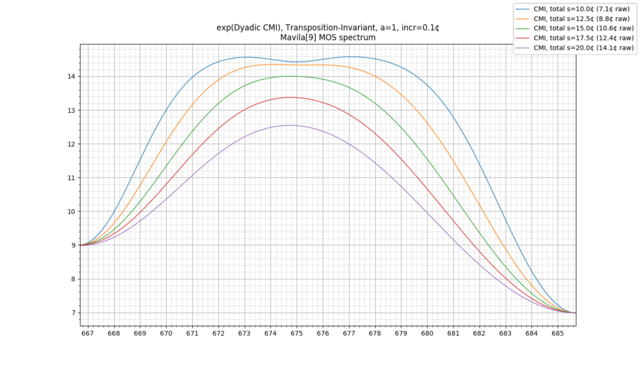

An easy way to see this is to compare the above to what happens if the fifth were tuned flat of 7-EDO, such as in mavila temperament. This doesn't just change the JI intonation of major and minor thirds, but actually causes them to switch places in the scale, so that the formerly "major" third is now ~300 cents, and the formerly "minor" third is now ~375 cents (as in 16-EDO). Examples of such retunings can be found in Mike Battaglia's Mavila Experiments for comparison. It is pretty easy to hear that formerly "major" pieces now sound "minor" and vice versa. Such a dramatic shift doesn't quite seem to happen when the 10:12:15's become 6:7:9's in the first set of examples, as happens when you move from 19-EDO to 22-EDO: while the intonational change is certainly noticeable, there is also some sense in which they retain the "minor" tonal identity, rather than changing to something entirely different.
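The interval sizes quoted above follow from simple arithmetic on the size of the fifth. The sketch below is only an illustration: the function names are hypothetical, and it assumes a pure 1200-cent octave with the "major third" defined as four fifths up (less two octaves) and the "minor third" as three fifths down (plus two octaves).

```python
OCTAVE = 1200.0  # cents; a pure octave is assumed


def edo_fifth(steps, edo):
    """Size in cents of a fifth spanning `steps` steps of `edo` equal divisions."""
    return steps * OCTAVE / edo


def diatonic_thirds(fifth_cents):
    """Return (major_third, minor_third) in cents for a chain of the given fifth."""
    major_third = 4 * fifth_cents - 2 * OCTAVE  # four fifths up, two octaves down
    minor_third = 2 * OCTAVE - 3 * fifth_cents  # three fifths down, two octaves up
    return major_third, minor_third


# Fifths chosen as the usual best fifth of each EDO mentioned in the text.
for name, steps, edo in [("16-EDO (mavila)", 9, 16),
                         ("19-EDO", 11, 19),
                         ("31-EDO", 18, 31),
                         ("22-EDO", 13, 22)]:
    major, minor = diatonic_thirds(edo_fifth(steps, edo))
    print(f"{name}: 'major' third {major:.1f} cents, 'minor' third {minor:.1f} cents")
```

In the mavila case the "major" third comes out smaller than the "minor" third, which is the swap described above; in the other tunings the order is preserved and only the sizes change.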

Of course, further research is certainly needed, and the above should be considered as only representing anecdotal evidence from within the xenharmonic community. Furthermore, even within the community, there may be considerable variance in perception that should be studied and understood. However, there does seem to be some reasonable agreement that it is possible to retune the diatonic scale in a way that the JI interpretations change, but tonal identities such as "major" and "minor" do not, indicating that there is indeed another layer of information. For now, we consider this anecdotal evidence to be sufficient to proceed in exploring this principle further, which we will attempt to do with our model.

Developing New Categorical Perceptions: "Detwelvulating"

The above has led some listeners in the xenharmonic community to express the feeling that they may be unconsciously "categorizing" new tunings in the context of 12-EDO without realizing it. This sentiment goes all the way back to Ivor Darreg's message to "Detwelvulate!" One way to interpret this maxim is to get away from unconsciously interpreting the notes of new tunings as altered versions of 12-EDO categories, and rather develop a new categorical perception for the tuning at hand. This has led to questions about how this can be accomplished.

It is noteworthy that this is a relatively new way of thinking about this topic, and perhaps not the only way to accomplish this goal. For example, some composers have instead attempted to unlearn their 12-EDO based category system and not replace it with anything, freely exploring the use of the entire pitch continuum without any sense of discrete note or scale at all. Others have attempted to build a very large set of "universal categories," often JI-centric ones, in an attempt to cognize any scale by relating the notes in it to their categorized JI interpretations. These are certainly valid artistic approaches, and we would not wish to rule them out; however we will suggest a different path.

Our paradigm is to develop a different categorical perception for each individual tuning system, or at least for a sufficiently large chromatic scale from within that tuning system. These categorization schemes need not have anything to do with JI: for instance, 7/6 and 6/5 might be categorized as the same thing in one tuning (such as a "minor third"), whereas in another tuning, 8/7 and 7/6 might be categorized as the same thing, with 6/5 being mapped as an intonation of a different entity. The goal is to be able to wear different hats, so to speak, which are developed so as to be appropriate to each tuning, and switch between them when switching tunings.

We consider that many of the world's musical traditions may have developed a structure that makes the music particularly "easy to categorize" in this way. We will attempt to explore how this works in an information-theoretic model which is in some sense a "categorical" or "scalar" variation of Paul Erlich's Harmonic Entropy.

The Model: Scales as Musical "Information" Channels

We will use an information-theoretic model of a scale as a channel through which musical "information" of some type is represented and transmitted. The different scale notes and intervals are taken to "mean" different things with respect to the context of the music, which we will view as being representations of distinct messages of musical information.

Another way to phrase this is that this musical information is being encoded into the notes of the scale, transmitted when the scale is played, subjected to noise in the form of pitch or intonational deviations, and decoded when the listener deciphers the notes heard to obtain a mental construct of the original information transmitted.

The success of our model will hinge on our ability to quantify exactly how suitable a scale is for this purpose -- without making any assumptions at all about the nature of this "musical information." We will see that this is possible to do: we will simply assume information of the type we are describing exists, in some form, and that the perception of discrete scale steps or intervals serves as a signaling mechanism to transmit it on some meaningful level.

We will then ask: how easily can the scale notes and intervals be differentiated from one another, both for a "naive" and an "expert" listener? Whatever information these notes or intervals represent, we will not be able to correctly identify it if the intervals are so close in size that the listener cannot tell them apart.

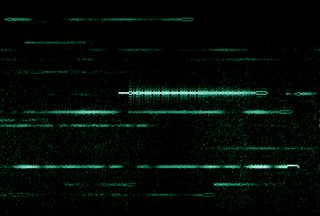

A good analogy is the picture to the right, representing multiple simultaneous radio transmissions taking place on multiple carrier frequencies. We know that information of some type is encoded into each carrier frequency, which "means" something to the listener when it is received and decoded. We don't know what the nature of this "meaning" is or what type of information is being transmitted. However, we do know that, if we would like any information to be transmitted at all, each carrier frequency must be given its own bandwidth, where it is adequately separated from other frequencies, in order to avoid interference.

Our model of a scale works in basically the same way: we do not know exactly what mysterious musical information is conveyed by the notes, or how the listener will interpret it, but we do know that we want the notes to be spread out enough to avoid interference from one another so that listeners can decipher them correctly.

Model Assumptions

In our model, we make two basic assumptions:

- Information of some nature is transmitted via the notes (or intervals) of a musical scale, such that some significant part of a listener's perception of music is determined by their perception of which note or scale position is being played.

- Different scale notes (or intervals) can generally be distinguished more easily from one another if they are further apart.

For the latter assumption, we assume that as a general rule, for example, listeners will be more easily able to distinguish between 300 and 400 cents than 330 and 360 cents, which are more likely to be confused with one another. As mentioned, this need not be 100% true in all circumstances, given the way that categorical perception skews subjective judgments about interval sizes, but we regardless consider this to be an unobjectionable general principle that is mostly true on average.
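As a rough illustration of the second assumption, consider two equally likely intervals heard through additive Gaussian pitch noise. The sketch below is a textbook two-point calculation rather than the model itself: the function name is hypothetical, and the noise level of 17 cents is an arbitrary illustrative value, not one taken from this article.

```python
import math


def confusion_probability(cents_a, cents_b, s):
    """Chance that an ideal listener mistakes one interval for the other.

    Assumes the two intervals are equally likely and that the heard pitch is
    the intended one plus Gaussian noise with standard deviation `s` cents.
    The listener picks the nearer of the two targets, so an error occurs when
    the noise exceeds half the distance between them: Q(d / (2 s)).
    """
    d = abs(cents_a - cents_b)
    return 0.5 * math.erfc(d / (2.0 * s * math.sqrt(2.0)))


s = 17.0  # hypothetical noise level in cents
print("300 vs 400 cents:", confusion_probability(300, 400, s))
print("330 vs 360 cents:", confusion_probability(330, 360, s))
```

Under these assumptions the closer pair is confused far more often than the wider pair, and two identically tuned intervals are confused half the time, which is chance level.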

Using this principle, our aim is to quantify how "different" the intervals in a scale are from one another, which we view as an important perceptual quality of a musical scale. Our main thesis is that those scales for which each note is given as wide a "bandwidth" as possible will be easiest to categorize. This gives each note an allowance for real-world tuning variation, deliberate mistuning effects such as vibrato, or general "blurriness" in the auditory system of the listener, while still making it as clear as possible what notes are being played. Maximizing this "bandwidth" increases our chance that the note can be unambiguously interpreted without "interference" from competing ambiguous interpretations from nearby notes.

It is important to note that we don't necessarily place a musical value judgment on this property. Scales which have ambiguous intervals can also be musically useful, as the ambiguities between intervals that are close but different can be used for musically expressive purposes. We simply would like to quantify, as a starting point, which scales have these kinds of ambiguous intervals to begin with, and which don't.

The Nature of Musical "Information"

To gain a better understanding of what we are talking about, it is clear that there are many natural examples of the type of "musical information" described above, which are routinely transmitted and pertain to the perception of scale notes.

For example, different notes can transmit "modal" information relative to the listener's perception of which mode is currently being played. Notes can evoke a sensation of "fitting in" or "not fitting in" to the perceived modal context, either sounding modally "appropriate" or "chromatic." A sequence of notes that does not "fit in" could also be perceived as signaling a change to the listener's perception of which mode is currently being played, and hence constitute a different type of informational message: a "modulation."

On a different level, "melodic" information could additionally be transmitted that is purely cultural, in that a set of notes can resemble a musical phrase or "lick" commonly heard in a musical tradition. A sequence of notes could also convey some sense of melodic "contour," and cause the listener to form predictions about whether the next note will be higher or lower than the current one.

On yet another level, the information could be "tonal," in that intervals can represent Western tonal "functions" such as "minor third" or "perfect fifth," which we already know can be represented via a wide assortment of tunings. A note may evoke a sensation of being part of a particular chord from the Western tonal tradition. It may evoke the sensation of changing the sense of the "current chord," while preserving the general sense of scale and mode. Notes could be heard as arpeggiations of commonly played chords.

However, despite the seemingly endless possibilities for scales to convey information on different musical layers, we reiterate that it is not necessary to model any of this. Instead, our approach will enable us to simply model the capacity of the scale to serve as a discrete information channel for any type of information, assuming only that different scale notes communicate different informational messages. We will then model how distinguishable the individual scale notes and intervals are from one another at the receiver, even in the presence of tuning "noise."

Shannon's Theory of Information

Claude Shannon's theory of information was originally developed for use in telecommunications to serve this exact purpose: it enables us to speak meaningfully of the "information capacity" of a noisy channel without requiring us to know anything about what messages will be sent on it, or how they will be encoded.

An auditory example that makes the musical analogy particularly prominent is that of radio transmissions. In Ham Radio, the PSK31 format is a way to encode a message on a single carrier frequency. In a real-world radio spectrum, multiple PSK31 transmissions are always continuously beginning and ending at different times. If a sample of spectrum activity is simply played as an audio file, we hear different pitches slowly going in and out of existence, each representing a different message of some unknown nature. You can click on the right to hear an example of this; it sounds like various microtonal chords and scales slowly forming, modulating, and drifting. While we don't know what information the notes carry, we do know the carrier frequencies must be spaced far enough apart from one another to avoid interference. The PSK31 engineers needed to design their audio frequency-based transmission system to make the signal robust to noise without knowing literally anything at all about the nature of the "meaning" of the messages being encoded into the carrier frequencies. This is basically the situation that we face when designing a scale for "categorical clarity."

Importantly, in both situations, we have the same limitation of not knowing anything about what particular information is being encoded. Indeed, it would be crazy if we were required to make guesses about the encoded semantic "meaning" of a particular radio transmission just to model how noisy the channel is! Likewise, we need not worry about this in our musical situation, only that whatever semantic "meaning" exists, it is somehow being represented by the pitches of the scale. The scale pitches are just another set of auditory frequencies that simply need enough breathing room to be robust against reception errors in the presence of noise.

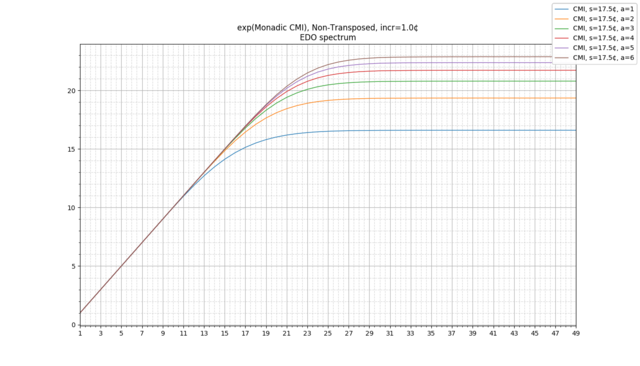

Shannon developed a set of techniques that enable us to clearly do everything listed above. We can measure how intelligible a signal is expected to be when sent on a noisy channel (the "Mutual Information"), and even maximize the amount of information that can be clearly sent across a noisy channel by changing the probability distribution of symbols being sent, so that "unambiguous symbols" are sent most frequently and "possibly ambiguous ones" less frequently (the "Channel Capacity"). We will use Shannon's theory to develop our metrics for a scale, which we call Categorical Entropy, Categorical Mutual Information, and Categorical Channel Capacity.
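For reference, the two quantities named above have standard textbook definitions. Writing [math]X[/math] for the intended note (a discrete symbol with distribution [math]p(x)[/math]) and [math]Y[/math] for the pitch actually heard, and assuming, as a reading of the AWGN setup rather than a formula stated in this section, that [math]p(y \mid x)[/math] is a Gaussian centered on the pitch of note [math]x[/math], the mutual information is

[math]I(X;Y) = \sum_{x} p(x) \int p(y \mid x) \, \log_2 \frac{p(y \mid x)}{p(y)} \, dy, \qquad p(y) = \sum_{x} p(x) \, p(y \mid x)[/math]

and the channel capacity is the largest mutual information obtainable by varying how often each symbol is sent:

[math]C = \max_{p(x)} I(X;Y)[/math]

Both are measured in bits when the logarithm is taken to base 2.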

As we will see, we will obtain some noteworthy and basic results: for example, that mistuning a scale slightly can lead to an increase in clarity, by moving notes away from competing interpretations.

A Motivating Example: Diatonic Scale Perception Breakdown near 7-EDO and 5-EDO

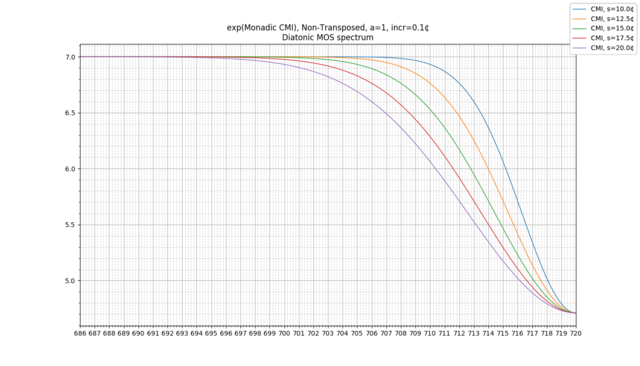

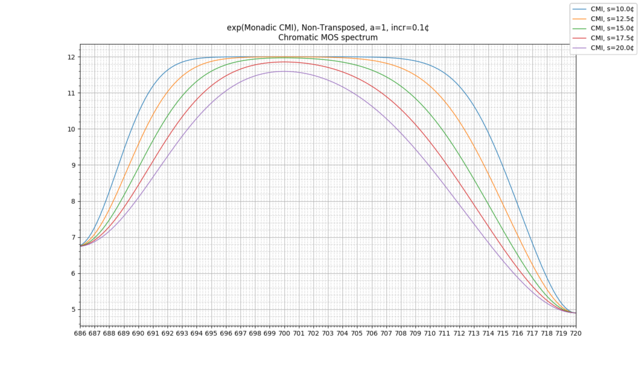

To illustrate what we are talking about, consider the "Categorical Experiments", which demonstrate this effect for Western music and the diatonic scale.

While it is clear that some aspect of the general perception of note and scale is preserved even under a wide variety of deformations, it can be seen that this perception begins to break down near the extremes of 7-EDO (with L/s = 1/1) and 5-EDO (with L/s = 1/0).

When the fifth approaches that of 7-EDO, the chromatic semitone approaches 0 cents, so the "minor," "major," "augmented," and "diminished" versions of each interval begin to converge in size and become ambiguous with one another, and it becomes more likely that the listener confuses major and minor thirds and so on. As we get to 7-EDO, these intervals become identical, and at that point, the scale may start to shift into a totally different perception entirely.

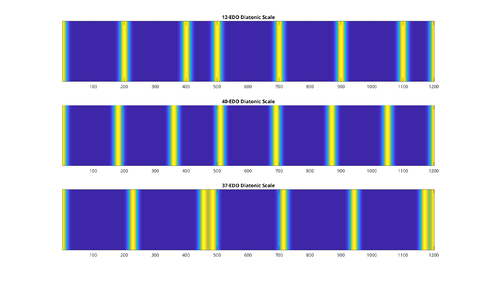

Likewise, when the fifth approaches that of 5-EDO, the diatonic semitone approaches 0 cents, so that the "major second" and "minor third" converge in size and become ambiguous, as do the "major third" and "perfect fourth," the "perfect fourth" and "diminished fifth," and so on. For instance, if the fifth is tuned to 714 cents as in 37-EDO, within the context of the diatonic scale, a Western listener may perceive the 454 cent interval as representing a common practice "major third," and the 486 cent interval as representing a common practice "perfect fourth." However, these intervals are close enough that the listener may occasionally think that the 454 cent interval is the 486 cent interval and vice versa, and hence interpret the current interval as a representation of the "perfect fourth" rather than the intended "major third."

It is noteworthy that the same "breakdown" occurs to a much smaller extent as the tuning converges on 12-EDO, the tuning where the diatonic scale's L/s = 2/1. In this situation, the diminished fifth and augmented fourth converge in size and become ambiguous. Likewise, in the context of the harmonic minor scale, the augmented second and minor third become ambiguous, as do the major third and diminished fourth. However, in each of these cases, the "total level of ambiguity" is in some sense much less than in 5-EDO or 7-EDO, where every single interval in the diatonic scale has become ambiguous. We will want a model that scores this appropriately. (As we will see, other MOS's sometimes fare much worse than the diatonic scale when tuned to the L/s = 2/1 point.)
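The three special points discussed in this section (L/s = 1/1, 2/1, and 1/0) can be checked directly from the size of the fifth. This is a minimal sketch assuming a pure 1200-cent octave; the function name is hypothetical.

```python
OCTAVE = 1200.0  # cents; a pure octave is assumed


def diatonic_steps(fifth_cents):
    """Return (L, s, chromatic_semitone) in cents for the diatonic scale."""
    large = 2 * fifth_cents - OCTAVE      # whole tone: two fifths up, one octave down
    small = 3 * OCTAVE - 5 * fifth_cents  # diatonic semitone: five fifths down, three octaves up
    chromatic = large - small             # chromatic semitone: L - s, i.e. seven fifths up, four octaves down
    return large, small, chromatic


for name, fifth in [("7-EDO", 4 * OCTAVE / 7),
                    ("12-EDO", 7 * OCTAVE / 12),
                    ("5-EDO", 3 * OCTAVE / 5)]:
    large, small, chromatic = diatonic_steps(fifth)
    print(f"{name}: L = {large:.1f}, s = {small:.1f}, chromatic semitone = {chromatic:.1f}")
```

At 7-EDO the chromatic semitone vanishes, so major and minor versions of each interval coincide; at 5-EDO the diatonic semitone vanishes, so intervals one scale step apart coincide; at 12-EDO the two semitones are equal, which is why only enharmonic pairs such as the augmented fourth and diminished fifth collide.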

Confounding Factors: Variance in Hearing, Musical Training, Joint Distributions

We note that whether 37-EDO is ambiguous enough to produce the effect described above is likely variable from person to person. Some listeners may still be capable of distinguishing between the major third and perfect fourth in this situation without much trouble. For those listeners, moving to the generator of 43\72, or 717 cents, may cause more trouble, as the "major third" is now represented by 467 cents and the "perfect fourth" is now represented by 483 cents. For other listeners, 37-EDO may already be at the point where the diatonic semitones are too small to distinguish, so that their ears "give up" and they hear the entire thing as a pentatonic scale with some intonational variations. Those listeners may not have the same perception in 32-EDO or 27-EDO, where the diatonic semitones are larger.

We also note that musical training may alter the perception of the above significantly, and may serve as a confounding effect. For example, expert listeners may be able to use musical context to gain additional "clues" as to which scale interval is being played, even in the case of extreme tuning ambiguity. This may hold to such a degree that, even in 5-EDO or 7-EDO, people can still sometimes perceive instances of "major" and "minor thirds" even though they are tuned identically, based on learned patterns from musical context. This is similar to how listeners can perceive a difference between "augmented seconds" and "minor thirds" in Western music, even though these intervals are tuned identically in 12-EDO (and most listeners have had little to no prior exposure to different tunings of the diatonic scale).

In these situations, for such listeners, the "breakdown point" does not lead to a total breakdown in scale perception. However, we still consider that as a general rule, it is certainly easier to distinguish different intervals on average when they're tuned further apart from one another. Hence, we consider this distinctness criterion to be worth maximizing, particularly since in xenharmonic music we are often concerned with exploring tunings for which we have no prior musical setting to draw from.

Regardless of where the "breakdown point" may be for a particular listener, it is clear that at some point on the way to 5-EDO, ambiguities of the type mentioned will begin to arise and become frequent. Our model will represent this variability with a single free parameter called [math]s[/math] that represents the "fineness" of hearing, similarly to Paul Erlich's Harmonic Entropy model. The listener will be able to adjust [math]s[/math] to see how robust a scale is to these types of mis-decodings.

Lastly, we also note that we are only modeling the individual notes of a scale, whereas in real musical situations, the surrounding notes (in a melody or chord) further give the listener information about what notes are being played. That is, the set of sequences of notes are nontrivially jointly distributed, so that the listener is more easily able to determine what notes are being played given a fragment of the surrounding context than if they are played in isolation. An interval which is "ambiguous" when played in isolation may quickly become unambiguous once the listener hears the following notes and is able to identify the scale being played; this ability will clearly vary with musical training. It would be interesting to extend the model to handle these kinds of situations, but we will leave that for future research.

Within the Model

In our model, what happens in these situations is that the listener can simply become confused about which interval or scale step they are hearing, because the intervals involved do not have enough "bandwidth" to be free from interference from other intervals. This can lead to an incorrect interpretation of the musical information the notes are supposed to represent.

There is a very important caveat here. When we say that the listener may start to confuse major and minor thirds, whole and half steps, etc, as things get closer to 7-EDO, we have made a very important assumption: that the *goal* of the music is to have these things be different to begin with in such a way that is musically meaningful (as in Western music). Or, to be precise, we are assuming that some reference scale - the diatonic, for instance - has within it a set of intervals which are important to be able to tell apart from one another, and that we would like to measure how well some tuning of this scale lets listeners do that. But we note that there is clearly some point at which two closely-tuned intervals switch from sounding like two different "types" of interval, albeit ambiguously tuned, to sounding like they are simply intended to be one "type" of interval to begin with.

This phenomenon is most familiar in Western music with 12-tone circulating temperaments such as Werckmeister III: there are many different sizes of fifth, for instance, but they all sound like slight variations on the same archetypical interval of the perfect fifth. This ought to be thought of as a feature, not a bug: if one's goal is to design a 12-tone well temperament, it would make no sense to evaluate the slightly different fifths negatively as being "ambiguous and difficult to tell apart." Rather, one would certainly *want* the fifths to be close enough in size that they all sound like different variations of the same thing. That's the entire point!

Of course, our goal here is not just to measure how good different tunings of a scale are at representing Western tonal functions. The real question is: as the diatonic scale is tuned closer and closer to 7-EDO, at what point does it stop sounding like an ambiguous diatonic scale, and start sounding like something else - perhaps a slightly unequal "well temperament" of 7-EDO?

We will not attempt to answer these questions in any absolute sense, but rather present our analysis as follows: given some scale, we will present metrics that simply quantify how clearly the intervals in the scale can be differentiated from one another, by measuring how many "effective" types of easily differentiable interval are present in the scale (the "alphabet size" of the scale). The higher this quantity is, the more "information" the model claims the scale is capable of transmitting unambiguously. If two intervals are very unambiguous and different in size, the metric will count them as two "effective" types of interval. As they get closer and closer, the metric smoothly starts to count them instead as one effective type of interval. (Again, of course, it is up to one's musical judgment if this is a good thing or not.)
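One plausible way to make the "effective alphabet size" concrete is to take two raised to the mutual information between the intended interval and the heard pitch. The sketch below is an illustration under stated assumptions, not the authors' exact formulation, which is not given in this section: intervals are taken to be equally likely, the noise is Gaussian with standard deviation `s` cents on a linear pitch axis (no octave equivalence), and the integral is approximated by a simple Riemann sum. The function name and the value of `s` are hypothetical.

```python
import math


def effective_alphabet_size(intervals_cents, s, step=0.5):
    """Return 2**I(X;Y) for equally likely intervals heard through Gaussian noise.

    I(X;Y) = h(Y) - h(Y|X), where h(Y|X) is the entropy of the noise alone and
    h(Y) is the entropy of the resulting mixture of Gaussians, integrated
    numerically on a grid of `step` cents.
    """
    n = len(intervals_cents)
    lo = min(intervals_cents) - 6.0 * s
    hi = max(intervals_cents) + 6.0 * s
    norm = 1.0 / (s * math.sqrt(2.0 * math.pi))
    h_y = 0.0
    for k in range(int((hi - lo) / step) + 1):
        y = lo + k * step
        p_y = sum(norm * math.exp(-0.5 * ((y - x) / s) ** 2)
                  for x in intervals_cents) / n
        if p_y > 0.0:
            h_y -= p_y * math.log2(p_y) * step
    h_noise = 0.5 * math.log2(2.0 * math.pi * math.e * s * s)
    return 2.0 ** (h_y - h_noise)


s = 17.0  # hypothetical "fineness of hearing" in cents
for pair in [(300, 400), (330, 360), (345, 345)]:
    print(pair, effective_alphabet_size(list(pair), s))
```

With these assumptions, two well-separated intervals count as nearly two effective types, two identically tuned intervals count as one, and intermediate spacings fall smoothly in between, matching the behavior described above.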

The main idea is that using these metrics, we can ask questions like:

- Given some archetypical reference scale, how categorically "stable" is the perception of that scale relative to itself?

As we will see, the model predicts that there are many scales with an interesting property: even if they are treated as some kind of idealized reference scale, they are not their own "optimal" tuning with respect to this quantity. That is, the model will suggest adjustments in tuning which make different intervals easier to differentiate from one another.

Some Last Caveats

Of course, this is not the only quantity that matters, so it should be used alongside other useful metrics. For instance, naive application of this model will naturally suggest that the "ideal" scale is something like a Golomb ruler, where every single pair of notes forms a unique interval size. While this technically satisfies the criterion of maximizing this one particular quantity, this is perhaps not ideal for other musical reasons, where we may be most interested in scales with a max-variety of 2 or 3. So, of course, we expect that composers will tend to incorporate these additional criteria as they see fit, perhaps using the model only on MOS scales to see which ones maximize this quantity.

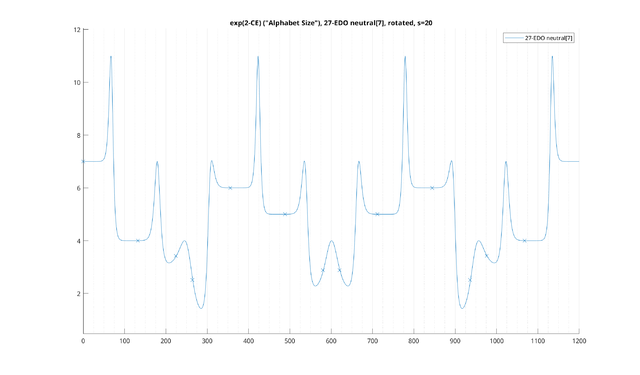

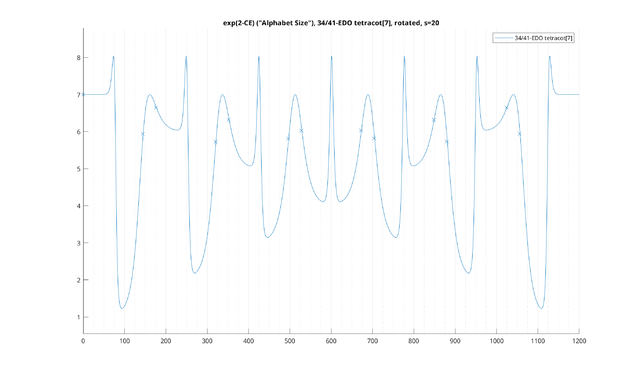

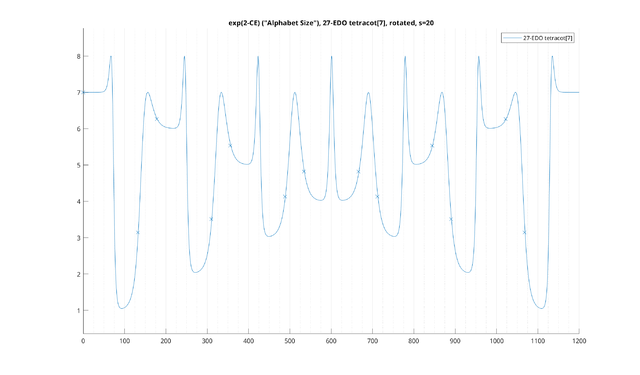

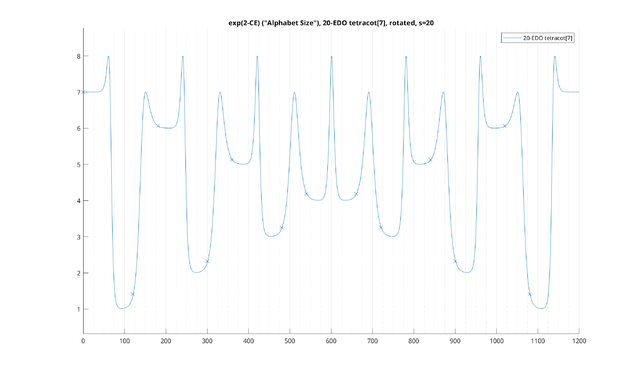

Another caveat is that many MOS's from "good" regular temperaments tend to form rather ambiguous, quasi-equal MOS's that don't fare well on this sort of metric (such as tetracot[7]). Given such a scale, this model, one way or another, will basically suggest making it less quasi-equal - somewhat closer to the tuning of "tetracot[7]" in 13-EDO. And indeed, if categorical clarity is all that we care about, it is certainly much easier to tell the intervals of the 6L1s MOS apart in 13-EDO than in 34-EDO - but this improvement has come at the cost of the approximation to just intonation. So we leave it up to the composer how they'd want to make that tradeoff.

In short, we really wanted to just focus on the categorical analysis of scales with this particular model, leaving to future research the best way to combine it with other models focusing on other things (such as Harmonic Entropy).

Formal Model Definition: Probabilistic Scales

NOTE: this part gets fairly technical, so you may want to just skip below to the example pictures. And then look at the examples for CMI and examples for CCC!

We will begin our model by extending the usual definition of a scale to include probabilistic pitch deviations.

That is, rather than each scale degree having a single, unambiguous, deterministic tuning, the tuning will instead be given by a random variable, representing a probability distribution of possible realizations. The mean of this random variable will be given by the scale degree's reference pitch.

We will then place a probability distribution on the scale degrees themselves. That is, we will consider certain scale degrees to have a larger probability of being played than others. Intuitively, we would like the most probable note to be considered as the "tonic," the second-most probable to be considered something like a "reciting tone," etc. If we would like a scale which does not have any such prioritization of intervals, we will represent it by a scale which has an equal probability for all scale degrees (i.e. a uniform distribution).

These two probability distributions will enable us to use the techniques of information theory to evaluate various "categorical" properties of the scale. In particular, it will enable us to view the scale as a probabilistic information channel with an associated mutual information representing how "noisy" it is.

Before we define this, however, we will need to clarify which version of the word "scale" we are talking about.

Preliminaries: What is a Scale?

Although the term "scale" has been used in different ways, we will use a notion of scale that is

- invariant to the choice of any particular tonic or "mode"

- invariant to the choice of any particular transposition or "key"

For example, in 12-EDO, we want to consider C major, D dorian, E phrygian, etc, as well as C# major, D major, etc, all as being different modes and transpositions of the same basic scale: the diatonic scale.

In this text, to keep things simple, we will generally assume that we are working with octave-periodic scales. However, it is fairly trivial to change everything to have a different interval of equivalence, such as in the Bohlen-Pierce scale, or even to use non-periodic scales such as Maqam Saba, if so desired.

When we work with octave-periodic scales, we will generally represent the scale as a single octave, for which each note is assumed to represent all octave-transposed versions.

Lastly, we will define the notes of our scale by specifying them in cents, relative to some arbitrary pitch in the scale we choose as having the special value of "0 cents." Note that this choice of pitch does not signify a choice of tonic, or "most important" note - rather, that type of information is more adequately represented by the probability distribution on the notes, so that the most probable note can be considered the tonic. However, we generally need to pick some note to be the 0 cent reference, so that there is more than one way to represent the same scale: for example, the sets {0, 200, 400, 500, 700, 900, 1100} (12-EDO Ionian) and {0, 100, 300, 500, 600, 800, 1000} (12-EDO Locrian) are considered to both be equally valid representations of the same diatonic scale.
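One way to make this mode- and key-invariance concrete is to compare scales by their cyclic step pattern rather than by their cents values. The following is only a minimal sketch; the helper names `step_pattern` and `canonical_form` are illustrative and not part of the model itself.

```python
def step_pattern(cents, period=1200):
    """Cyclic list of step sizes of a periodic scale, starting from its lowest pitch class."""
    pitches = sorted(c % period for c in cents)
    return [(pitches[(i + 1) % len(pitches)] - pitches[i]) % period
            for i in range(len(pitches))]


def canonical_form(cents, period=1200):
    """Smallest rotation of the step pattern; identical for all modes and keys of a scale."""
    steps = step_pattern(cents, period)
    rotations = [tuple(steps[i:] + steps[:i]) for i in range(len(steps))]
    return min(rotations)


ionian = [0, 200, 400, 500, 700, 900, 1100]
locrian = [0, 100, 300, 500, 600, 800, 1000]
d_major = [200, 400, 600, 700, 900, 1100, 1300]  # D major, written relative to C = 0

print(canonical_form(ionian))
print(canonical_form(ionian) == canonical_form(locrian) == canonical_form(d_major))
```

Taking the minimum rotation is just one convenient choice of representative; any fixed rule for picking a rotation would work equally well.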

Making Scales Probabilistic

We will first begin by placing the exact tuning of the notes in a scale into a probabilistic superposition. That is, the exact tuning of each note will be given by a probabilistic point-spread function.

As an example, let's look at the 12-EDO octave-periodic diatonic scale, where the major mode is chosen as a reference point. We will start with a usual scale as a set of exact musical pitches:

$$ S = \left\{ 0, 200, 400, 500, 700, 900, 1100 \right\} $$

We will first replace the above tunings with a probabilistic tuning, where the mean is centered on each scale degree. Let us notate that as follows:

$$ S = \left\{ \overline{\mathbf{0}}, \overline{\mathbf{200}}, \overline{\mathbf{400}}, \overline{\mathbf{500}}, \overline{\mathbf{700}}, \overline{\mathbf{900}}, \overline{\mathbf{1100}} \right\} $$

where the bold and overline mean that we are defining each entry to be a random variable that is "approximately" the value given (e.g. takes it as a mean), but with some nonzero variance in the tuning (and possibly other nonzero moments as well).

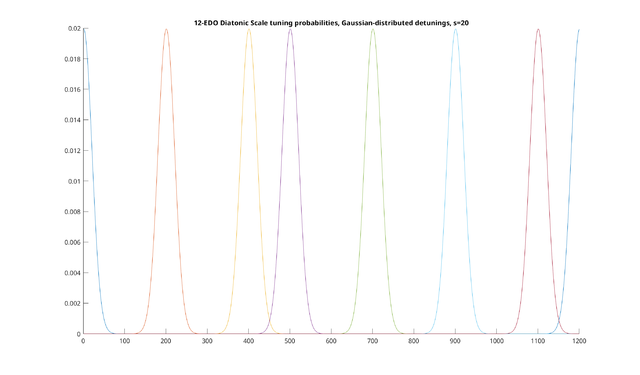

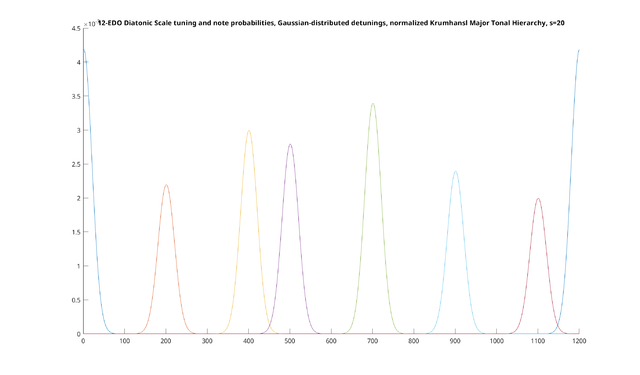

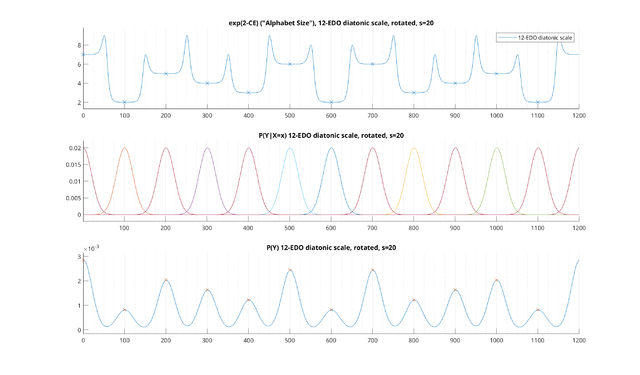

A typical choice for the random variable would be to make each note Gaussian-distributed, wrapping at the octave, with some standard deviation s. If we do so, and we set s=20 cents, we get the following probability distributions for each note:

This means that for each reference note, we should expect the tuning of the note to be distributed according to the curve in question. Increasing the value of s widens the curves, so that detunings are more common, whereas decreasing narrows them. (Note the value of 20 cents here may be slightly too wide for the diatonic scale, but it's a decent starting point, and is useful just to show the basic principle.)
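As a rough illustration of these curves, the sketch below evaluates an octave-wrapped Gaussian for each note of the 12-EDO major scale with s = 20 cents. The function name `wrapped_gaussian_pdf` and the truncation to a few wraps on either side are assumptions made for this example only.

```python
import math


def wrapped_gaussian_pdf(y, mean, s, period=1200.0, wraps=2):
    """Density (per cent) of a Gaussian with std dev s centred on mean, wrapped at the period.

    The infinite sum over wraps is truncated to 2*wraps + 1 terms, which is
    ample whenever s is much smaller than the period.
    """
    norm = 1.0 / (s * math.sqrt(2.0 * math.pi))
    return sum(
        norm * math.exp(-0.5 * ((y - mean + k * period) / s) ** 2)
        for k in range(-wraps, wraps + 1)
    )


scale = [0, 200, 400, 500, 700, 900, 1100]
s = 20.0
for note in scale:
    at_note = wrapped_gaussian_pdf(note, note, s)
    off_by_50 = wrapped_gaussian_pdf(note + 50, note, s)
    print(f"{note:5d}: density at note = {at_note:.5f}, 50 cents sharp = {off_by_50:.5f}")
```

Raising `s` flattens and widens every curve; lowering it concentrates the density around each reference pitch.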

Note that this probabilistic spreading of pitch can be viewed as representing the sum total of a wide range of sources of tuning deviations: deliberate performance bends, unintentional tuning deviations, vibrato, or even perceptual pitch "blurring" effects introduced by the auditory system. It is not necessary that each reference note have the exact same probability distribution of possible tunings, or even that the notes be Gaussian distributed, but for now we will assume identical Gaussian distributions on each note. It is then assumed that the ability to adjust the "s" parameter will give sufficient flexibility to represent the total degree of tuning deviation, on average.

Lastly, we will not only use scales where the notes have probabilistic tunings, but where the notes have probabilities of being played to begin with. For example, if we want to represent the major mode, we might use something like the following probability distribution:

$$ S = \left\{ \begin{aligned} \overline{\mathbf{0}}:& 21\%, \\ \overline{\mathbf{200}}:& 11\%, \\ \overline{\mathbf{400}}:& 15\%, \\ \overline{\mathbf{500}}:& 14\%, \\ \overline{\mathbf{700}}:& 17\%, \\ \overline{\mathbf{900}}:& 12\%, \\ \overline{\mathbf{1100}}:& 10\% \end{aligned} \right\} $$

These probabilities were created from Krumhansl and Kessler's major tonal hierarchy, by taking the relative ratings of each note in the major scale and normalizing the sum to 100%. Note that the original hierarchy does not give explicit probabilities or "strengths" for each note, just simply a relative ranking on notes, so this is intended to simply be an informal analogue just to illustrate the basic idea. If we use the above probability distribution on notes being played, and combine it with the probability distribution on tunings from above, we get the following set of probability distributions:

The important thing to note is that, as can be seen in the above picture, these probability distributions can sometimes overlap. That is, given the tuning "450 cents," there is some probability that it is a detuned version of the "400 cent" reference note, played sharp, or a detuned version of the "500" cent reference note, played flat. Indeed, there is technically a nonzero probability that it is even an extremely detuned version of the 1100 cent note, played 650 cents flat, although the probability is so small that it is basically zero.

As we will see below, this is the basic feature on which the entire model rests: that multiple notes can be detuned to generate the same realization. We can then ask where and how often these "clashes" occur, and obtain a bunch of information-theoretic metrics modeling various aspects of our scale.

To delve further, we will need to simplify our notation somewhat.

A Better Notation

Above, we formalized our scale [math]S[/math] as a "random variable of random variables". That is, first there is a random variable determining which note is played, and then for each note, a second random variable determining what the tuning of that note is. It so happens that it is much easier to represent this mathematically if, rather than thinking of this as a single random variable [math]S[/math], we think of it as two jointly-distributed random variables:

- [math]X[/math], a discrete random variable representing "reference notes" in a "reference scale," with an associated probability of each reference note being played

- [math]Y[/math], a continuous random variable representing the "output" tuning that ends up being played, one way or another, superimposed for all possible choices of [math]X[/math]

In other words, we want [math]X[/math] to represent the discrete steps of our scale. We can name the outcomes of [math]X[/math] anything we want: relative names like "P1", "M2", "M3", etc, or absolute names like "C", "D", "E", etc or anything else. [math]X[/math] is a probabilistic superposition of these reference notes, weighted by the probability of each one being played. In the absence of any particular probability distribution for [math]X[/math], the uniform distribution with all notes having equal probability can be thought of as the best "default" distribution.

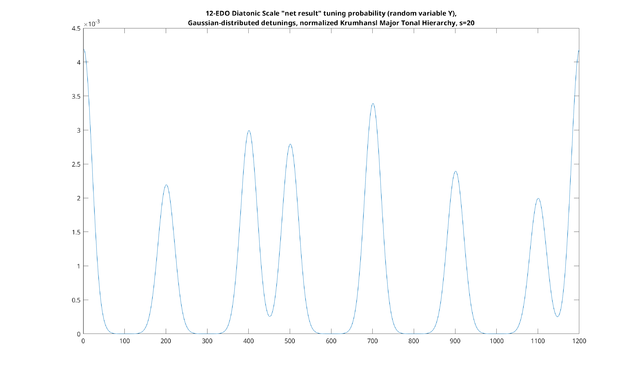

[math]Y[/math], on the other hand, represents the probability of each exact tuning in cents somehow being generated, one way or another, from the different values of [math]X[/math]. This can be thought of as the sum of the probabilistic tuning curves for each note in [math]X[/math], weighted by the probability of that note. To more easily visualize [math]Y[/math], here is a plot of the probability of [math]Y[/math] of the major scale from above, weighted by the normalized Krumhansl's major tonal hierarchy:

This is just the sum of all the Gaussian curves from the last picture. As you might expect, there are local maxima of probability for each note in [math]X[/math]. Also, as you can see, in this scale, there is a slightly higher probability of 450 cents being generated than 300 cents: 300 cents is not in the scale at all, and would need to be bent ~100 cents from either of its nearest neighbors, whereas 450 cents can be generated by a ~50 cent bend from either of its nearest neighbors. (Of course, this is a simplification due to the identical Gaussian mistunings and the lack of any "chromatic" notes in our scale; adding chromatic notes to the scale with a small probability would likely change this result.)
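The weighted sum described here can be sketched in a few lines. The code below assumes identical wrapped Gaussians with s = 20 cents and uses the percentages quoted earlier as [math]P(X=x)[/math]; the names `wrapped_gaussian_pdf` and `p_y` are hypothetical helpers, not part of the original model description.

```python
import math


def wrapped_gaussian_pdf(y, mean, s, period=1200.0, wraps=2):
    """Gaussian density with std dev s centred on mean, wrapped at the period."""
    norm = 1.0 / (s * math.sqrt(2.0 * math.pi))
    return sum(
        norm * math.exp(-0.5 * ((y - mean + k * period) / s) ** 2)
        for k in range(-wraps, wraps + 1)
    )


# P(X = x), keyed by reference pitch in cents (percentages from the text).
prior = {0: 0.21, 200: 0.11, 400: 0.15, 500: 0.14, 700: 0.17, 900: 0.12, 1100: 0.10}


def p_y(y, prior, s=20.0):
    """P(Y = y): the per-note tuning curves, weighted by P(X = x) and summed."""
    return sum(p * wrapped_gaussian_pdf(y, x, s) for x, p in prior.items())


print("P(Y = 450):", p_y(450.0, prior))
print("P(Y = 300):", p_y(300.0, prior))
```

Because each tuning curve integrates to 1 and the note probabilities sum to 1, the resulting mixture is itself a probability density over the octave.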

Given the above formalism, we can then talk in the usual manner of the following probabilities:

- [math]P(X=x)[/math] - the probability of the reference note [math]x[/math] being played from [math]X[/math] to begin with, apart from tuning

- [math]P(Y=y)[/math] - the probability of the output tuning [math]y[/math] in cents being generated somehow from all possible [math]X[/math]'s, one way or another

- [math]P(Y=y|X=x)[/math] - the conditional probability, given that the reference note played is [math]x[/math], that the output tuning is [math]y[/math] cents

- [math]P(X=x|Y=y)[/math] - the conditional probability, given that output tuning was [math]y[/math] cents, that [math]x[/math] was the reference note that generated it

So in our current example:

- [math]P(X=x)[/math] is the probability distribution on reference notes, which is the "major tonal hierarchy" probability distribution we derived above by normalizing Krumhansl and Kessler's result

- [math]P(Y=y)[/math] is the last curve shown above, the probability distribution on output tunings being generated "at the end of the day"

- [math]P(Y=y|X=x)[/math], given some chosen reference note [math]x[/math], is simply the aforementioned Gaussian curve representing the probability distribution of tunings for [math]x[/math].

- [math]P(X=x|Y=y)[/math] has not been previously talked about, but is very important: given a particular realized tuning such as "450 cents", this is the probability, for each [math]x[/math], that it was generated as a "detuned version of" that [math]x[/math].

The third one is particularly important. For example, if we give our "400" cent reference interval the name "M3", then [math]P(Y=y|\text{M3})[/math] is the Gaussian probability distribution corresponding to the thing we previously called [math]\overline{\mathbf{400}}[/math].

The fourth one will be seen, later on, to form the basis of our notion of Categorical Entropy.

Example Outcomes

Given that we now have our two-variable formalism, we can talk about particular outcomes of our scale. An outcome, in this case, will be a particular note and a particular tuning, or formally, a particular outcome from [math]X[/math] and a particular outcome from [math]Y[/math], which we will write using the notation Interval name: tuning. If we name the notes of our scale "P1", "M2", "M3", etc, then here are some example outcomes:

$$ \text{M2}: 200¢ \\ \text{M2}: 190¢ \\ \text{M2}: 210¢ \\ \text{M3}: 400¢ \\ \text{M3}: 386¢ \\ \text{P4}: 504¢ \\ \text{M3}: 450¢ \\ \text{P4}: 450¢ \\ \text{P4}: 1199¢ $$

The first three are outcomes where the major second was chosen to be played, and tuned three different ways. Next is a major third tuned exactly to the reference at 400 cents, and a major third detuned slightly to 386 cents (close to 5/4). Next is a perfect fourth, tuned 4 cents sharp of the reference. These are all fairly straightforward.

Next we have our two most important examples: a major third tuned very sharp, to 450 cents (relatively uncommon, but possible), and a perfect fourth tuned very flat, also to 450 cents (likewise uncommon, but possible). The main thing to note here is that for our probabilistic scale, these are two distinct outcomes. That is, the outcome here isn't just the realized tuning of "450 cents": it is the combination of the 450 cent tuning, and the intended reference note which has been tuned that way.

In other words, an outcome of our probabilistic scale should be thought of as representing "the whole truth": the intended reference note in the mind of the performer, and the realized tuning that is then played. Both pieces of state are represented.

Lastly, we have a perfect fourth tuned to 1199 cents - a deliberately bizarre example, just to show you that this is technically a possible outcome, even though the chances of it happening are so low that you may as well think of it as zero!
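To make the idea of an "outcome" concrete, the following sketch draws a few (reference note, tuning) pairs from the example scale. It assumes Gaussian mistuning with s = 20 cents wrapped at the octave, uses the percentages quoted earlier, and extends the interval names to "P5", "M6" and "M7" for illustration; `sample_outcome` is a hypothetical helper.

```python
import random

names = ["P1", "M2", "M3", "P4", "P5", "M6", "M7"]
cents = [0, 200, 400, 500, 700, 900, 1100]
probs = [0.21, 0.11, 0.15, 0.14, 0.17, 0.12, 0.10]


def sample_outcome(rng, s=20.0, period=1200.0):
    """Draw one outcome: first the reference note from X, then its realized tuning."""
    i = rng.choices(range(len(names)), weights=probs)[0]
    tuning = rng.gauss(cents[i], s) % period  # wrap the mistuned pitch into one octave
    return names[i], tuning


rng = random.Random(0)
for _ in range(5):
    name, tuning = sample_outcome(rng)
    print(f"{name}: {tuning:.0f}¢")
```

Each printed line records both pieces of state, so "M3: 450¢" and "P4: 450¢" would appear as different outcomes even though a listener would hear the same pitch.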

Probabilistic Scales as Information Channels

A very useful way to look at the above is to treat [math]X[/math] as an "input," perhaps a note on a piece of sheet music, that is sent into some abstract system (the performer's instrument, interpretive choices, and the auditory system of the listener), which ultimately outputs [math]Y[/math]. The pair [math](X, Y)[/math] can thus be thought of as representing a channel, which was one of our previously-described goals.

In a typical musical situation, a listener does not have direct access to the original input [math]X[/math], the original reference note the performer was intending to play. Rather, the listener only has access to their perception of the realized tuning [math]Y[/math] - the received "output" of the channel - and must infer the value of [math]X[/math] based on that. Note that this need not be a conscious process, as in naming the interval in an ear training test, but for most listeners may be a largely subconscious one simply involving a general perception of whether the heard note fits into the current scale or not, or an understanding of how to correctly sing along with a melody.

For many possible received values of [math]Y[/math], the tuning will be close enough to some reference in [math]X[/math] that it can be unambiguously decoded. A "good" scale will be one which is relatively easy to decode in this way, where the most commonly heard "outputs" yield only one realistic guess of what the "input" most likely was.

The basic challenge with such inference is when a value of [math]Y[/math] is received that could have been generated with roughly equal probability by multiple values of [math]X[/math], such as our 450 cent example. In that situation, we now have multiple competing interpretations for our interval, for which the listener shall have to struggle to use additional sources of information to guess what [math]X[/math] is. We would like our scales to have these situations happen relatively infrequently. In our example, the ambiguity of 450 cents doesn't pose much of a problem because we don't expect it to be played very often, since it's a rather extreme deviation from the nearest reference. However, as we will see later, it is possible for scales to yield ambiguous tunings much more frequently, even scales which are considered "good" by other criteria.

Limitations and Future Extensions of this Model

In real music, there are many factors that can influence the musical interpretation of an "ambiguous" note or interval - the [math]X[/math] that is inferred from some given [math]Y[/math] - such as: the musical context and surrounding notes, the harmonic context of additional notes being played, fragments of melodies that listeners recognize, and so on.

In order to get to "the next level" of musical realism in our model, we have to extend our probabilistic scale - we can look at sets of notes played simultaneously (e.g. chords), or sequences of notes (e.g. melodies), or sequences of sets of notes (e.g. chord progressions) - which will have a highly interesting and non-trivial joint distribution, which may differ from musical style to musical style, something which we will do in future research. Then, when an ambiguous note is played, we can also include a fragment of musical context from the surrounding notes/chords/etc, which may alter the relative probabilities of the note being inferred (rather than the note being played in isolation).

In some sense, we would like to model how good a scale *could be*, given an entire culture that uses it, with expert musicians and listeners for that scale, and an entire body of music that people know, etc. Our model is clearly just a starting point that falls short of a goal as extreme as *that* - but we will see later that, even within just the simple model, there are some basic metrics we can look at to begin to head into this direction. One example is the Categorical Channel Capacity, in which we look at the *best* possible distribution on the notes of a scale, in which ambiguous notes are played "just enough" to add some spice, and unambiguous notes are played more frequently. An extension of these things to an expanded probability distribution of multiple note sequences, chords, etc would be quite radical and enlightening in revealing the "best way" to use a scale; it would be quite interesting to see if real-world musical traditions tend to follow any of the results such metrics would give.

For now, our model of a probabilistic scale is as basic as possible - it simply looks at a scale and a probability distribution on that scale. This can be thought of as a very basic "average" on all possible melodies, chords etc, of how much each note or pitch class "matters" and how "probable," "salient," "relevant," or "generally likely to be played" it is. Our goal is simply to get as much mileage from this simple starting point as possible, with any results being understood to be kind of a basic guideline or starting point from which things may somewhat vary in different real-world musical situations.

We also want to emphasize that, although we are quantifying some kind of "ambiguity" that a musical scale can have, we do not necessarily place any musical value judgment on scales either having or not having this property. Rather, our view is that this property simply "exists," and we want to quantify it. Intervallic or pitch ambiguity can be very musically useful, particularly in the hands of an expert musician who is able to use it expressively to play with the audience's expectations. Clearly, the "threshold of ambiguity" will be much higher if the scale is being played by an expert musician, and using real melodic phrases that are recognized by expert listeners - and we hope that the [math]s[/math] parameter in the model provides some way to adequately model some of this variance. And lastly, we note that, even for "expert" listeners, there will always be some scales, such as those having many steps that are only 1 cent wide, for which it will be very difficult for the notes in the scale to sound different from one another at all. But of course, there are many musically interesting things you can do with scales that have such extreme "microtones," even if the intervals [math]400¢[/math] and [math]401¢[/math] are not quite as inherently "categorizably different" as [math]400¢[/math] and [math]300¢[/math] are.

Now that we have formalized all this, we have everything we need to create our model of Categorical Entropy.

Categorical Entropy

Given the above, the most basic quantity relevant in inferring a reference note from a realization is the conditional probability

$$ P(X=x|Y=y) $$

or the probability, given some received output [math]y[/math], of each [math]x[/math] being the thing that generated it.

This is automatically determined as a derived quantity once the probabilities [math]P(X=x)[/math] and the spreading functions [math]P(Y=y|X=x)[/math] are set. Given those two things, we can then use Bayes' Theorem to get:

$$ P(X=x|Y=y) = P(Y=y|X=x)\frac{P(X=x)}{P(Y=y)} $$

Our goal, then, is to determine how much, for each tuning realization [math]y[/math], the above probability distribution on values of [math]X[/math] tends to focus on only one choice. If the above distribution yields a 99% chance of being one particular value of [math]X[/math] and 1% on the rest combined, it is relatively unambiguous. If it yields a 49% chance on one, a 49% on another, and a 2% chance on the rest, it is more ambiguous.
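The Bayes step can be sketched directly for the running example. The code assumes identical wrapped Gaussians with s = 20 cents, uses the percentages quoted earlier as [math]P(X=x)[/math], and extends the interval names to "P5", "M6" and "M7" for illustration; `posterior` is a hypothetical helper name.

```python
import math


def wrapped_gaussian_pdf(y, mean, s, period=1200.0, wraps=2):
    """Gaussian density with std dev s centred on mean, wrapped at the period."""
    norm = 1.0 / (s * math.sqrt(2.0 * math.pi))
    return sum(
        norm * math.exp(-0.5 * ((y - mean + k * period) / s) ** 2)
        for k in range(-wraps, wraps + 1)
    )


# name -> (reference pitch in cents, P(X = x))
scale = {
    "P1": (0, 0.21), "M2": (200, 0.11), "M3": (400, 0.15), "P4": (500, 0.14),
    "P5": (700, 0.17), "M6": (900, 0.12), "M7": (1100, 0.10),
}


def posterior(y, scale, s=20.0):
    """P(X = x | Y = y) for every reference note x, via Bayes' theorem."""
    joint = {name: p * wrapped_gaussian_pdf(y, x, s) for name, (x, p) in scale.items()}
    total = sum(joint.values())  # this is P(Y = y)
    return {name: j / total for name, j in joint.items()}


for y in (400.0, 450.0):
    post = posterior(y, scale)
    top = sorted(post.items(), key=lambda kv: kv[1], reverse=True)[:2]
    print(y, [(name, round(p, 4)) for name, p in top])
```

At 450 cents the two likelihoods are equal, so the posterior split between "M3" and "P4" is decided entirely by their prior probabilities.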

The traditional way to measure this is Claude Shannon's definition of the entropy, which for this particular random variable is defined as follows:

$$ H(X|Y=y) = -\sum_{x\in X} P(X=x|Y=y) \log P(X=x|Y=y) $$

This is the Raw Monadic Categorical Entropy (1-CE) of the note [math]y[/math] with respect to the scale [math]X[/math]. Typically, the base of the logarithm is chosen to be either [math]2[/math] or [math]e[/math], representing either "bits" or "nats"; we will leave it open here.

Higher values denote that the interval is more ambiguous, whereas lower values denote that it is less ambiguous.
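As a small numerical check of this reading, the sketch below applies Shannon's formula to the two example posteriors mentioned above. Lumping "the rest combined" into a single alternative is a simplifying assumption of this example, and `entropy` is a hypothetical helper name.

```python
import math


def entropy(probs, base=math.e):
    """Shannon entropy of a discrete distribution (zero-probability terms contribute 0)."""
    return -sum(p * math.log(p, base) for p in probs if p > 0.0)


clear = [0.99, 0.01]             # 99% on one note, 1% on everything else (lumped together)
ambiguous = [0.49, 0.49, 0.02]   # two competing notes, 2% on everything else (lumped together)

for label, dist in (("clear", clear), ("ambiguous", ambiguous)):
    print(f"{label}: {entropy(dist):.3f} nats = {entropy(dist, base=2):.3f} bits")
```

Changing the base only rescales the values, so the ordering of more and less ambiguous tunings is the same in bits and in nats.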

The terms "raw" and "monadic" will be made clear shortly. As we will see, a "transpositionally-invariant" notion of CE is much more useful, but defining the raw entropy in this way is a good starting point.

Raw Monadic Categorical Entropy (1-CE)

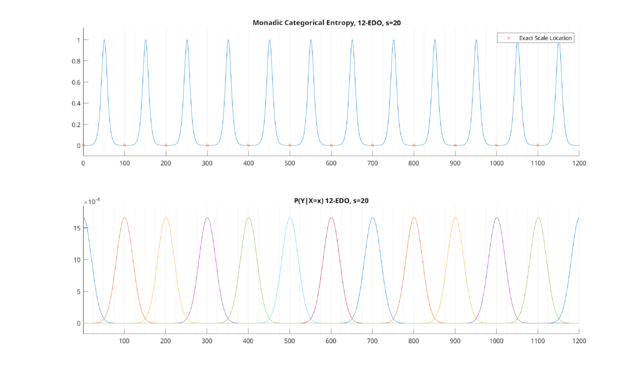

Let's look at the 1-CE for the 12-EDO scale, with all probabilities equal at 1/12, setting [math]s[/math] to 20 cents. If we do so, we get:

The curve above is the entropy; the red "X"'s are the exact scale degrees (meaning the reference notes of 12-EDO). We can see that, as expected, intervals that are close to the reference are low in entropy, whereas intervals that are far are high in entropy.

The lower curve shows the point spread function for each interval in the 12-EDO reference. We note that these curves bear some resemblance to the "identification functions" in Figure 1 of Siegel 1977, as well as Figure 1 of Burns 1978. (We will not present a plot of the point spread functions again, since they're fairly simple to understand.)

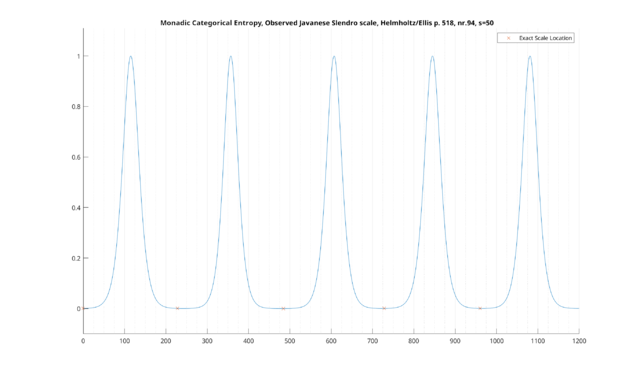

We can do the same for the notes of Slendro - we will use Helmholtz/Ellis's reference (p. 518, nr. 94, "slendro.scl"), set to 0, 228, 484, 728, and 960 cents, wrapping at the octave. As our intervals are much further apart, we may as well increase the value of [math]s[/math] to 50 cents, to demonstrate the difference. Doing so, we obtain

We can see we have gotten a similar result. Interestingly, you will note that the exact scale degrees (given by the red "X"'s in the above plot) are not exactly located at the minima of entropy - something that will become very important later.
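The offset between scale degrees and entropy minima can be checked numerically. This is a sketch under the same assumptions as the plot (uniform note probabilities, wrapped Gaussians, s = 50 cents), using a coarse 1-cent grid search around the 484-cent degree; the function names are illustrative only.

```python
import math


def likelihood(y, x, s, period=1200.0, wraps=2):
    """Unnormalized wrapped-Gaussian likelihood of tuning y given reference note x."""
    return sum(
        math.exp(-0.5 * ((y - x + k * period) / s) ** 2)
        for k in range(-wraps, wraps + 1)
    )


def categorical_entropy(y, scale, s):
    """Raw 1-CE in nats at tuning y, assuming a uniform distribution on the scale."""
    weights = [likelihood(y, x, s) for x in scale]
    total = sum(weights)
    return -sum((w / total) * math.log(w / total) for w in weights if w > 0.0)


slendro = [0, 228, 484, 728, 960]
s = 50.0

# Search within 50 cents of the 484-cent degree for the lowest-entropy tuning.
candidates = range(434, 535)
best = min(candidates, key=lambda y: categorical_entropy(float(y), slendro, s))
print("scale degree: 484, entropy minimum near:", best)
```

Under these assumptions the minimum lands a few cents below 484: the neighbor at 728 is closer (244 cents) than the one at 228 (256 cents), so the least ambiguous tuning shifts slightly away from the closer neighbor.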

Raw Dyadic Categorical Entropy (2-CE)

We can also increase the number of notes in our model. Rather than simply comparing a note to the reference, we can compare pairs of notes to pairs of notes in the reference to obtain the Dyadic Categorical Entropy (2-CE).

To do so, we will assume each note in the dyad can be independently mistuned from its reference, with the same standard deviation, given again by the parameter s.

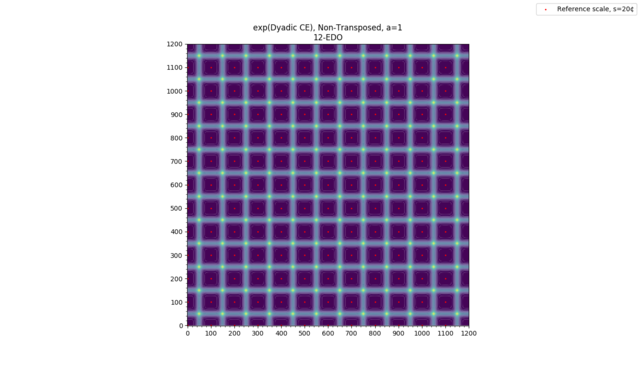

Here is an example of our dyadic 12-EDO from before, with all notes at equal probability:

So far, this is fairly simple: for 12-EDO as a reference, the dyads with the lowest 2-CE are those tuned exactly to 12-EDO, and 2-CE increases as they are detuned. We will see that things will get more interesting for different scales.
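A minimal sketch of the raw 2-CE for 12-EDO follows, assuming a uniform distribution over all 144 ordered reference dyads and independent wrapped-Gaussian mistuning of each note with s = 20 cents. The function names are illustrative, and the dyad is given as a pair of absolute tunings in cents.

```python
import math
from itertools import product


def likelihood(y, x, s, period=1200.0, wraps=2):
    """Unnormalized wrapped-Gaussian likelihood of tuning y given reference note x."""
    return sum(
        math.exp(-0.5 * ((y - x + k * period) / s) ** 2)
        for k in range(-wraps, wraps + 1)
    )


def dyadic_entropy(y_pair, scale, s):
    """Raw 2-CE in nats of the tuning pair y_pair, with a uniform prior on reference dyads."""
    weights = []
    for x_pair in product(scale, repeat=2):
        w = 1.0
        for y, x in zip(y_pair, x_pair):
            w *= likelihood(y, x, s)  # independent mistuning: likelihoods multiply
        weights.append(w)
    total = sum(weights)
    return -sum((w / total) * math.log(w / total) for w in weights if w > 0.0)


edo12 = [100 * k for k in range(12)]
print("on the grid  (400, 700):", dyadic_entropy((400.0, 700.0), edo12, 20.0))
print("off the grid (450, 750):", dyadic_entropy((450.0, 750.0), edo12, 20.0))
```

When both notes sit halfway between 12-EDO pitches, four reference dyads are essentially equally likely, so the entropy approaches log 4.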

Raw n-adic Categorical Entropy (n-CE)

Before we can move to non-equal temperaments, we want to note that we can extend the above principle to get a notion of 3-CE, 4-CE, etc, although we will not be able to plot this here.

In general, we can do so by changing our random variables [math]X[/math] and [math]Y[/math] as follows:

- [math]X_n[/math], a discrete random variable of "reference chords", and an associated probability of each reference chord being played

- [math]Y_n[/math], a continuous random variable representing the possible "output" chord tunings that can be generated somehow from all possible reference chords in [math]X_n[/math], with an associated probability of each tuning being generated one way or another. This can be viewed as a tuple of cents, representing the tuning of each note relative to the "0 cent" reference.

Given that, we can again look at our basic probabilistic quantities as follows:

- [math]P(X_n=x_n)[/math] - the probability of the n-note reference chord [math]x_n[/math] being played to begin with

- [math]P(Y_n=y_n)[/math] - the probability of the tuning of the n-note output chord [math]y_n[/math] being generated somehow from all possible reference chords

- [math]P(Y_n=y_n|X_n=x_n)[/math] - the conditional probability, given that the reference chord being intended is [math]x_n[/math], that the output tuning is [math]y_n[/math]

- [math]P(X_n=x_n|Y_n=y_n)[/math] - the conditional probability, given that the output chord was [math]y_n[/math], that [math]x_n[/math] was the reference chord that generated it

The quantity [math]P(Y_n=y_n|X_n=x_n)[/math] represents the tuning probability distribution for each reference chord [math]x_n[/math]. Generally, we will want each note in the n-chord to be able to be independently mistuned by the same distribution we used in the monadic case. So for example, if our original tuning distribution was a Gaussian distribution, this would be a "white" multivariate Gaussian distribution with the same value of [math]s[/math] on each note (so the covariance matrix would be a diagonal matrix of all [math]s^2[/math] entries).
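Written out, and ignoring the wrap at the octave for simplicity, this "white" Gaussian case factors into a product of the monadic tuning curves. The component notation [math]x_{n,i}[/math] and [math]y_{n,i}[/math] for the i-th note of the reference chord and of the output chord is introduced here only for illustration:

$$ P(Y_n=y_n|X_n=x_n) = \prod_{i=1}^{n} P(Y=y_{n,i}|X=x_{n,i}) = \frac{1}{(2\pi s^2)^{n/2}} \exp\left( -\frac{1}{2s^2} \sum_{i=1}^{n} (y_{n,i} - x_{n,i})^2 \right) $$

The exponent depends only on the squared Euclidean distance between the output chord and the reference chord, which is what a diagonal covariance matrix with all entries equal to [math]s^2[/math] amounts to.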

Given the above, the n-adic Categorical Entropy is defined as:

$$ H(X_n|Y_n=y_n) = -\sum_{x_n\in X_n} P(X_n=x_n|Y_n=y_n) \log P(X_n=x_n|Y_n=y_n) $$

Generally, we will want our list of "reference chords" to be those taken from some scale, i.e. for n-adic CE, we use the set of all n-ads contained in a scale. In set-theoretic terms, this is called the n'th Cartesian power of the scale, containing all n-tuples of notes in the scale (including tuples with repeated notes, and with different orderings counting as different n-ads).

For example, for the 12-EDO pentatonic scale (notated as C-D-E-G-A), to get 2-CE, the 2nd Cartesian power would be the following:

(C, C) (C, D) (C, E) (C, G) (C, A)

(D, C) (D, D) (D, E) (D, G) (D, A)

(E, C) (E, D) (E, E) (E, G) (E, A)

(G, C) (G, D) (G, E) (G, G) (G, A)

(A, C) (A, D) (A, E) (A, G) (A, A)

This would be the sample space for [math]X_2[/math], assuming that [math]X[/math] is the pentatonic scale. You can see that both (C, G) and (G, C) are included, despite having the same notes. Also, note that (D, A) and (C, G) are both included as distinct entities, despite both being a "perfect fifth" and having the same tuning. Lastly, note that unisons are also included as (C, C), (D, D), etc.
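The same sample space can be generated mechanically. The sketch below uses `itertools.product` from the Python standard library; `cartesian_power` is a hypothetical helper name.

```python
from itertools import product


def cartesian_power(scale, n):
    """All ordered n-tuples of notes from the scale, repeats included."""
    return list(product(scale, repeat=n))


pentatonic = ["C", "D", "E", "G", "A"]
dyads = cartesian_power(pentatonic, 2)

# Print the dyads as a 5 x 5 grid, one row per first note.
for i in range(0, len(dyads), len(pentatonic)):
    row = dyads[i:i + len(pentatonic)]
    print(" ".join(f"({a}, {b})" for a, b in row))

print(len(dyads), "dyads;", len(cartesian_power(pentatonic, 3)), "triads")
```

The sample space grows as the number of notes raised to the power n, which is part of why higher n-CE quickly becomes expensive to compute and hard to plot.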

It may seem redundant to contain some of these different orderings of the same notes, particularly for triads where (C,E,G), (C,G,E), (E,C,G), etc are all different chords. However, this will end up being important later, when we define transpositionally-invariant CE.

(Of course, we note that while it is certainly possible to extend this to look at arbitrary sets of reference chords, rather than those taken from the n'th Cartesian power of the scale, we will not do so here.)

Given that, then, the important question is how to give probabilities to each reference chord. The important thing to note is that the probabilities from the original scale do not uniquely specify a probability distribution on n-ads, because we do not necessarily assume that the individual notes in the n-ad are distributed independently of one another, but rather can be jointly distributed. So we can give an arbitrary probability distribution on the set of n-ads.

We note that the dimension of the plot seems to be shifted by one versus HE: whereas 2-HE is a 1D plot and 3-HE is a 2D plot, here 1-CE is a 1D plot, 2-CE is a 2D plot, etc. This will change when we add transpositional invariance below.

The Problem with "Raw" CE

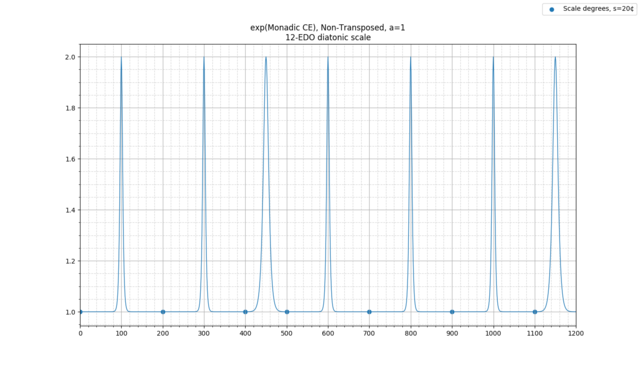

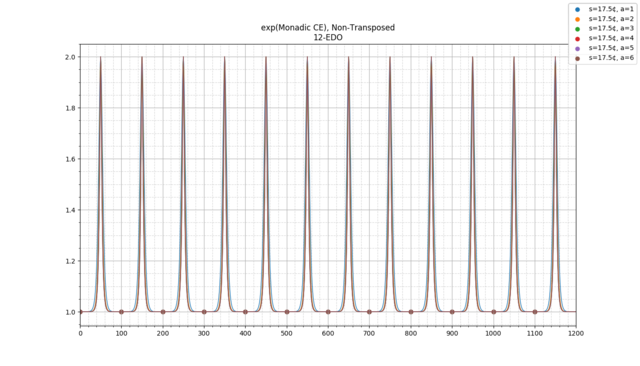

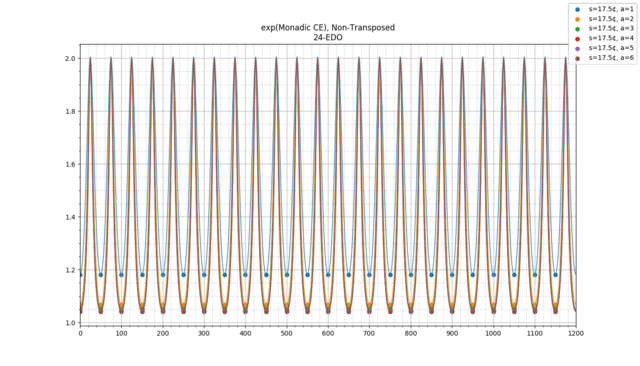

We first see the issue with our "raw" version of CE when we look at the 12-EDO diatonic scale, this time using our spiffy new plotting software:

(This is now the exp of entropy but is otherwise the same as before. Exact scale degrees are given by circles rather than x's. Looking directly at the exp of entropy is a useful measure for reasons we will go into later.)

This plot of the 1-CE of the 12-EDO diatonic scale uses all notes with equal probability. (If we instead use the Krumhansl-derived probabilities from before, we get a plot that is negligibly different.)

As we can see, there are 7 distinct minima, one for each scale degree of the diatonic scale. Maxima occur in between scale degrees; as a result, we can see that 300 cents is a maximum, being between 200 and 400 cents.
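The behavior described above can be reproduced with a small sketch of the raw 1-CE. The value `s=20.0`, the octave wraparound, and the function name `raw_1ce` are assumptions made for illustration; they are not taken from the plot itself:

```python
import numpy as np

# 12-EDO diatonic scale in cents (major mode).
DIATONIC = np.array([0, 200, 400, 500, 700, 900, 1100], dtype=float)

def raw_1ce(y, scale=DIATONIC, s=20.0, period=1200.0, probs=None):
    """Raw 1-CE, H(X | Y=y, T=0), in nats, for an incoming pitch y in cents."""
    if probs is None:
        probs = np.full(len(scale), 1.0 / len(scale))  # equal note probabilities
    # Signed distance from y to each scale degree, wrapped to one period
    # (octave equivalence is an assumption of this sketch).
    d = (y - scale + period / 2) % period - period / 2
    log_w = np.log(probs) - d**2 / (2 * s**2)  # Gaussian spreading function
    log_w -= log_w.max()                       # stabilise before exponentiating
    p = np.exp(log_w)
    p /= p.sum()                               # P(X=x | Y=y)
    p = p[p > 0]
    return float(-(p * np.log(p)).sum())

for cents in (200, 300, 400):
    print(cents, np.exp(raw_1ce(cents)))  # exp of entropy, as in the plot
```

At 300 cents the two neighboring scale degrees are equally likely, so the entropy is close to log 2, while at 200 and 400 cents it is close to zero.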

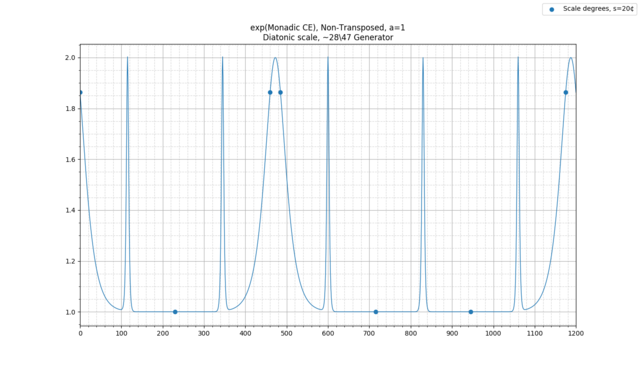

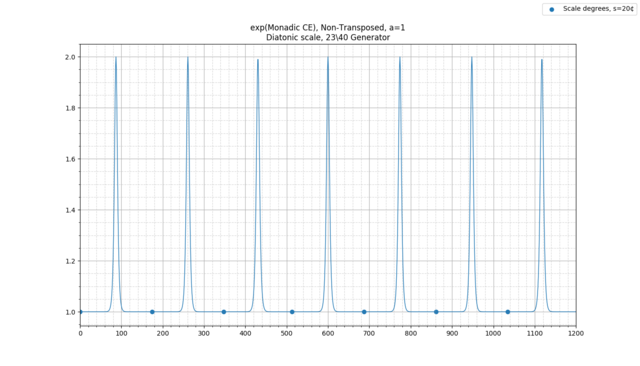

To see how this doesn't model Western music perception, look at how the diatonic scale changes as it is detuned to the extremes of 5-EDO or 7-EDO, where we previously noted the perception of the scale begins to break down. Here is a plot of the raw 1-CE of the diatonic scale generated by 715 cents (approximately 28\47):

You can see that in some meaningful sense, the behavior is as expected - the major third and perfect fourth have become ambiguous, as have the major seventh and the tonic. However, things are different at the 7-EDO extreme. Consider the diatonic scale generated by 690 cents (23\40):

Previously, we noted that near 7-EDO, it becomes difficult to distinguish major and minor thirds, seconds, sixths, and sevenths, as well as perfect and augmented fourths, and perfect and diminished fifths. However, this is not shown in the above graph!

The basic issue with the above model is that we are treating the diatonic scale the same way we would treat a Gamelan slendro or pelog: as an unchanging scale, with no modulation or transposition. We just have seven pitches to which incoming notes are matched. This works well for Gamelan music, for instance, where you only use immovable-pitch scales and will never hear a note between two notes of the current pitch set.

In Western music, however, changes in the pitch set are quite frequent, resulting from techniques such as modulation, chromaticism, retonicizations, secondary dominants, borrowed chords, etc. As a result, the pitch set is constantly changing in subtle ways, so that there is always a chance that the transposition of the scale has changed.

The behavior we really want is to compare the incoming note to all different transpositions of the scale, not just the original chosen one. To do so, we will define the transpositionally-invariant categorical entropy.

Transpositionally-Invariant Categorical Entropy

For our raw n-adic CE, we defined the variables [math]X_n[/math] and [math]Y_n[/math]. Our mistuning/spreading function is then represented as the probability of each output tuning, given a particular choice of reference chord:

$$ P(Y_n=y_n|X_n=x_n) $$

for which the basic example is a multivariate Gaussian with standard deviation s, centered at the notes in the reference chord [math]x_n[/math].

Suppose that we add to this another random variable [math]T[/math], a continuous variable representing the number of cents by which the current scale is transposed. If [math]T=0[/math], the output tuning is unchanged. But if [math]T=\mu[/math] for some nonzero [math]\mu[/math], then the output tuning of each note is shifted by [math]\mu[/math] cents, so that the mean of the output Gaussian is shifted by [math](\mu, \mu, ... ,\mu)[/math] cents.

Then our mistuning function becomes the probability of each output tuning, given a particular choice of reference chord and transposition:

$$ P(Y_n=y_n|X_n=x_n, T=t) $$

We can also then ask, for a particular output chord tuning and transposition, the probability that each reference chord [math]x_n[/math] generated it:

$$ P(X_n=x_n|Y_n=y_n, T=t) $$

Now, to represent the situation where we aren't sure what the transposition is, we will let [math]T[/math] be uniformly distributed on the possible transpositions, e.g. on cents from 0 to the equivalence interval. (This definition can be finessed for aperiodic scales, although we will not do this here.) Then we can ask about the joint distribution on chords and transpositions, given a particular output tuning:

$$ P(X_n=x_n, T=t|Y_n=y_n) $$

And then, lastly, we marginalize over transpositions to ask about the marginal probability of each chord given an output tuning, where all possible transpositions are taken into account:

$$ P_T(X_n=x_n|Y_n=y_n) = \int_{0}^{q} P(X_n=x_n, T=t|Y_n=y_n) dt $$

where [math]q[/math] is the equivalence interval, typically 1200 cents, and the subscript in [math]P_T[/math] indicates that we have marginalized over [math]T[/math].

Once we have defined the above, we can define our transpositionally-invariant CE as:

$$ H_T(X_n|Y_n=y_n) = -\sum_{x_n\in X_n} P_T(X_n=x_n|Y_n=y_n) \log P_T(X_n=x_n|Y_n=y_n) $$

Given this, we can also define the "raw" CE as the quantity [math]H(X_n|Y_n=y_n, T=0)[/math], indicating that it is only relative to the one possible transposition of 0 cents.
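The definitions above can be sketched numerically by replacing the integral over [math]T[/math] with a sum on a uniform grid. The names `wrap` and `ti_ce`, the 1-cent grid, the default raw s, and the per-note octave wraparound are all assumptions of this sketch rather than part of the definition:

```python
import itertools
import numpy as np

DIATONIC = [0, 200, 400, 500, 700, 900, 1100]  # 12-EDO diatonic scale, cents

def wrap(d, period=1200.0):
    """Wrap a difference in cents into [-period/2, period/2)."""
    return (d + period / 2) % period - period / 2

def ti_ce(y, scale=DIATONIC, s=20.0 / np.sqrt(2), period=1200.0, steps=1200):
    """Transpositionally-invariant n-CE, H_T(X_n | Y_n = y), in nats.

    Uses a uniform prior on reference chords and on T; s is the raw s.
    """
    y = np.asarray(y, dtype=float)
    chords = np.array(list(itertools.product(scale, repeat=len(y))), dtype=float)
    t = np.arange(steps) * (period / steps)        # uniform grid on [0, q)
    # Deviation of each heard note from each transposed reference note,
    # with shape (number of chords, steps, n).
    dev = wrap(y[None, None, :] - chords[:, None, :] - t[None, :, None], period)
    log_like = -(dev**2).sum(axis=2) / (2 * s**2)  # log P(y | x, t) up to a constant
    joint = np.exp(log_like - log_like.max())
    joint /= joint.sum()                           # P(x, t | y) on the grid
    p = joint.sum(axis=1)                          # marginalize over T
    p = p[p > 0]
    return float(-(p * np.log(p)).sum())

print(ti_ce((0, 400, 700)), ti_ce((100, 500, 800)))
```

Because the grid covers the whole equivalence interval, shifting every note of the input by the same amount only permutes the grid points, so the two printed values agree.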

Transposition-Invariance, Coordinate Change, and Dimensionality

After doing the above, all n-ads that are simply transposed versions of one another will have the same categorical entropy. For example, the chords (0, 400, 700) and (100, 500, 800) will have the same CE, as they only differ by the vector (100, 100, 100), or a constant transposition of each note by 100 cents. In general, integration on the [math]T[/math] variable above ends up "smearing" the plot along the all-ones direction: the (1, 1) vector for the 2D plot of raw 2-CE, the (1, 1, 1) vector for raw 3-CE, and similarly for higher-dimensional CE.

Since all transposed versions of the same chord have the same CE, we can simply take the version of each chord with the first note at "0" cents, such as (0, 400, 700) above, and then drop the first coordinate entirely, yielding (400, 700). This represents the tuning of each note relative to the first note in the chord.

As a result, transposition invariance reduces the dimension of the space by one, making the plots similar to the way that HE works: the transpositionally-invariant 2-CE is a 1D plot, the transpositionally-invariant 3-CE is a 2D plot, and so on. (The transpositionally-invariant 1-CE is just a single 0-dimensional scalar and hence uninteresting.)

An Important Technical Note About Scaling [math]s[/math] For Transpositionally-Invariant CE

If we are using the usual choice of Gaussian spreading function, then the above integral is simply an integral on a multivariate normal distribution. This integral yields a new multivariate Gaussian.

While we will not prove this here, it can be seen that the new Gaussian will have covariance matrix equal to [math]C=s^2(I_{n-1} + O_{n-1})[/math], where [math]s[/math] is the standard deviation parameter, [math]I_{n-1}[/math] is the [math](n-1)\times(n-1)[/math] identity matrix, and [math]O_{n-1}[/math] is the [math](n-1)\times(n-1)[/math] all-ones matrix. This skews the Gaussian along a particular axis; changing the coordinate system to whiten the Gaussian is equivalent to choosing triangular note axes for transposed 3-CE, tetrahedral axes for transposed 4-CE, etc. For those familiar with n-HE, this is exactly how the multivariate Gaussian works in that system.

The important thing about the above is the covariance matrix has determinant equal to [math]ns^{2(n-1)}[/math], which can be thought of as the "total effective variance" of the Gaussian. If we were to change the coordinate system to whiten this Gaussian (e.g. put it in triangular/tetrahedral/etc axes), the "s" value that would generate this Gaussian is scaled relative to the non-transposed version by a factor of [math]n^{\frac{1}{2(n-1)}}[/math].

In other words, if we have [math]n=2[/math], so we are looking at transposed dyadic CE, our effective value of [math]s[/math] becomes [math]s\sqrt{2}[/math]. If we're looking at transposed triadic CE, our effective value of [math]s[/math] becomes [math]s\cdot 3^{\frac{1}{4}}[/math]. For 4-CE, the effective value is [math]s\cdot 4^{\frac{1}{6}}[/math] and so on.
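As a quick numerical check of the scaling factors above, the following sketch builds the covariance matrix [math]C=s^2(I_{n-1} + O_{n-1})[/math] directly and takes the appropriate root of its determinant. The function name `effective_s` is our own:

```python
import numpy as np

def effective_s(n, s):
    """Effective ("total") s for transpositionally-invariant n-CE, given raw s."""
    k = n - 1
    cov = s**2 * (np.eye(k) + np.ones((k, k)))  # C = s^2 (I + O), size (n-1) x (n-1)
    det = np.linalg.det(cov)                    # expected to equal n * s^(2(n-1))
    return det ** (1.0 / (2 * k))               # per-axis s of the whitened Gaussian

for n in (2, 3, 4):
    # Second column: numerical result; third column: closed form n^(1/(2(n-1))).
    print(n, effective_s(n, 1.0), n ** (1.0 / (2 * (n - 1))))
```

For example, `effective_s(2, 14.14)` is approximately 20, which is the relationship between the "raw" and "total" s values quoted in the examples.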

To be unambiguous, for transpositionally-invariant CE, we will generally refer to this as the "effective" or "total" value of s, with the original s value pre-scaling being referred to as the "raw s."

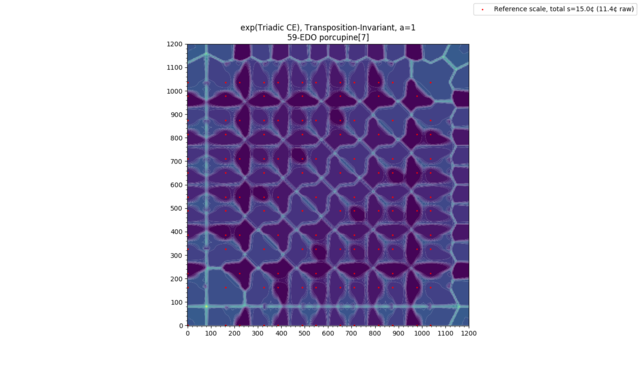

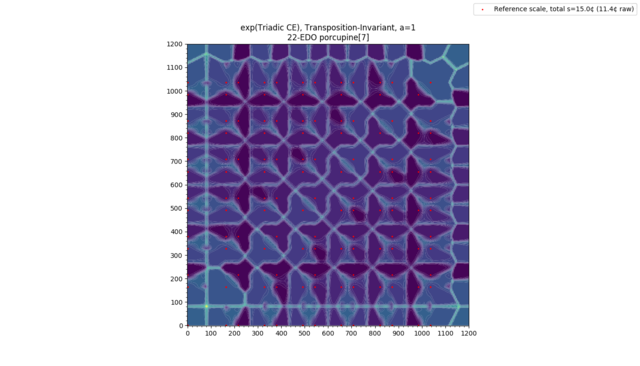

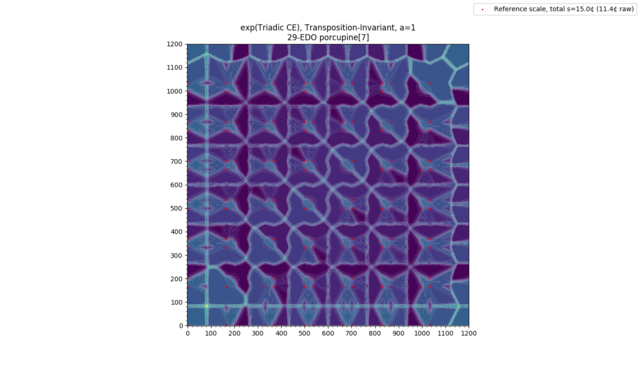

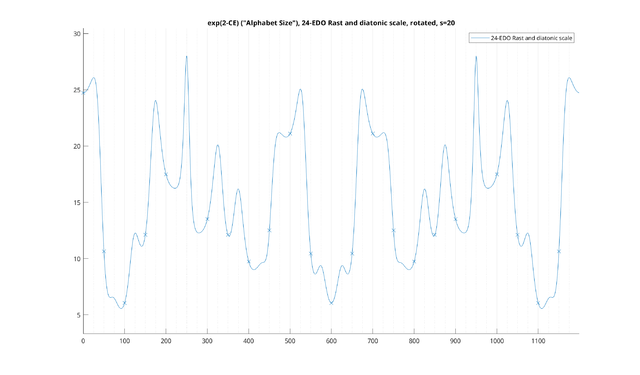

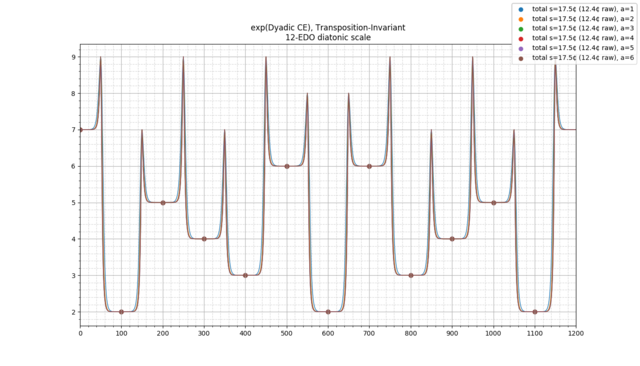

2-CE Examples, Transpositionally-Invariant

Below, all examples use the uniform distribution on the dyads of the scale.

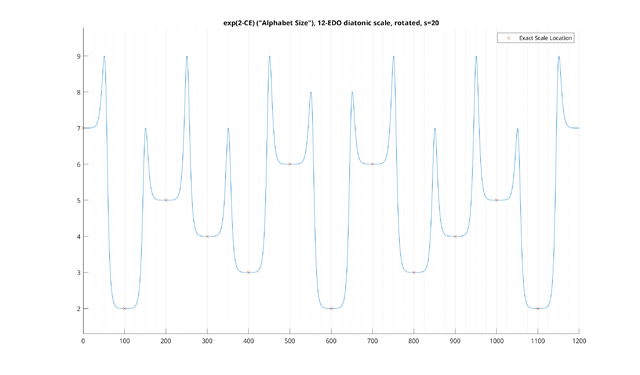

Example: 12-EDO diatonic scale, transpositionally-invariant

Let's look at a particular example: the transposed 2-CE for the 12-EDO diatonic scale, with uniform probabilities on each note, with a "total" s of 20 cents (or ~14.1 cents per note). We then obtain the following:

And now, we see a very different graph, representing all of the intervals appearing anywhere in the scale.

Note that above, we have not directly plotted the entropy, but rather the exponential of entropy, a quantity sometimes called "alphabet size." This is a useful quantity to look at: it quantifies how useful each interval is in telling you where you are within the scale. For uniformly distributed scales, it can be interpreted as the remaining number of possible scale transpositions consistent with your receiving that dyad as input. However, the alphabet size is much more general, and can even handle ambiguous dyads and nontrivial scale distributions, for which the same basic interpretation holds.

For example, you can see that 200 cents has an alphabet size of 5. This can be interpreted as telling you that there are five places in the diatonic scale where it can appear: for example, in the major mode, it appears between the tonic and M2, the M2 and M3, the P4 and P5, the P5 and M6, or the M6 and M7. In short, in this particular situation where we are trying to match things to the diatonic scale, once we have heard a major second, we have narrowed it down to only five possible transpositions of the diatonic scale which agree with this "measurement."

On the other hand, consider the 100 cent interval, which has an alphabet size of two. The 100 cent interval "narrows things down" much more than the 200 cent interval. Once we've heard it, we know there are only two transpositions remaining.

Then, as a trivial example, you can see that for the 12-EDO diatonic scale, the 0 cent interval has an alphabet size of 7: simply playing a unison does not help you figure out at all where you are within the scale.

These examples all involve looking at notes which are within the scale, but we can also ask this question of notes that are not. For example, you can see that in this scale the 50, 250 and 450 cent intervals have an alphabet size of 9, which is even more ambiguous than one single note. This is because there is now ambiguity as to which diatonic intervals these are to begin with. For instance, it is possible that this 250 cent interval is a sharp major second, in which case there are five transpositions remaining. But it could also be a flat minor third, which would leave four possibilities.[9] Since there's an equal chance of both at 250 cents, we have nine possibilities total. We also get a smooth curve for categorically ambiguous intervals at any point in the spectrum, so that the alphabet size clearly generalizes the number of occurrences of the interval in the scale. This will become particularly important as we change the tuning of the generator, which we will do in our next examples: as things get close to the extremes of 5-EDO and 7-EDO, even the intervals of the scale itself will start to become increasingly ambiguous due to smearing effects in this way.
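The alphabet sizes quoted above can be checked with a short sketch. It assumes that, after marginalizing on [math]T[/math], the dyadic case reduces to a one-dimensional Gaussian on the interval with the "total" s of 20 cents, that intervals are reduced modulo the octave, and that all ordered dyads are equally likely; the function name `alphabet_size` is our own:

```python
import itertools
import numpy as np

DIATONIC = [0, 200, 400, 500, 700, 900, 1100]  # 12-EDO diatonic scale, cents

def alphabet_size(interval, scale=DIATONIC, total_s=20.0, period=1200.0):
    """exp of the transpositionally-invariant 2-CE at a heard interval in cents."""
    # Interval spanned by every ordered dyad of the scale, reduced mod the period.
    dyad_intervals = np.array(
        [(b - a) % period for a, b in itertools.product(scale, repeat=2)]
    )
    # Signed distance from the heard interval to each dyad's interval.
    d = (interval - dyad_intervals + period / 2) % period - period / 2
    w = np.exp(-d**2 / (2 * total_s**2))  # uniform prior, Gaussian spreading
    p = w / w.sum()
    p = p[p > 0]
    return float(np.exp(-(p * np.log(p)).sum()))

for cents in (0, 50, 100, 200, 250, 450):
    print(cents, round(alphabet_size(cents), 2))
```

For intervals that are categorically unambiguous, the result is close to the number of times the interval occurs in the scale; at 50, 250 and 450 cents it is close to the sum of the counts of the two neighboring intervals.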

We also note that this basic metric can be interpreted several ways. For instance, if we know that we are playing some kind of "major" modality, hearing a major second narrows the possibilities down to five choices of tonic. On the other hand, we can also interpret it as though we know what the tonic is, but don't know what the mode is. Then, hearing a major second on the tonic means there are five possible modes that agree with this - Lydian, Ionian, Mixolydian, Dorian, and Aeolian - with Phrygian and Locrian eliminated. The same mathematical metric models both of these situations equally. Thus, regardless of which of these interpretations is musically relevant, in both situations we can clearly see that the 100 cent interval is much more useful than the 200 cent interval in "orienting" yourself within the diatonic scale.
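The "fixed tonic, unknown mode" reading can be verified by listing the modes of the 12-EDO diatonic scale and checking which ones contain a given interval above the tonic. The helper names below are hypothetical and used only for this illustration:

```python
# Step pattern of the Ionian (major) mode in cents; the other modes are rotations.
IONIAN_STEPS = [200, 200, 100, 200, 200, 200, 100]
MODE_NAMES = ["Ionian", "Dorian", "Phrygian", "Lydian",
              "Mixolydian", "Aeolian", "Locrian"]

def mode_pitches(rotation):
    """Cents above the tonic for the mode starting on the given Ionian degree."""
    steps = IONIAN_STEPS[rotation:] + IONIAN_STEPS[:rotation]
    pitches, total = [0], 0
    for step in steps[:-1]:  # the last step only closes the octave
        total += step
        pitches.append(total)
    return pitches

def modes_containing(interval):
    """Names of the modes that have the given interval above the tonic."""
    return [name for i, name in enumerate(MODE_NAMES)
            if interval in mode_pitches(i)]

print(modes_containing(200))  # modes with a major second above the tonic
print(modes_containing(100))  # modes with a minor second above the tonic
```

The first list has five entries and the second has two, matching the alphabet sizes of the 200 cent and 100 cent intervals.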

This is, in general, how to interpret the CE for each interval in a scale: it tells you how much uncertainty is remaining in establishing the overall picture of the scale in your head once you've heard just that one interval. The lowest-CE intervals are related to what Paul Erlich calls "signposts" in his paper Tuning, Tonality, and Twenty-Two-Tone Temperament. However, note that the absolute number of the alphabet size only corresponds literally to the "number of transpositions" or "number of modes" if the intervals are given a uniform distribution, and if you are looking at an interval which is relatively categorically unambiguous. Otherwise, it is simply a scalar value which is to be interpreted similarly, as a kind of "probability-weighted number of transpositions."

We also note that the same metric can be used for scales which are non-uniform in distribution to begin with. For instance, if for whatever reason the minor third is extremely unlikely to be played in some piece of music, then the alphabet size of 250 cents would decrease, since the M2 is so much likelier than the m3 to begin with. Thus, this interval would become more effective in orienting ourselves in the scale, since we have removed an ambiguous possibility from the scale. We will deal with this more when we talk about the "channel capacity" of the scale later.

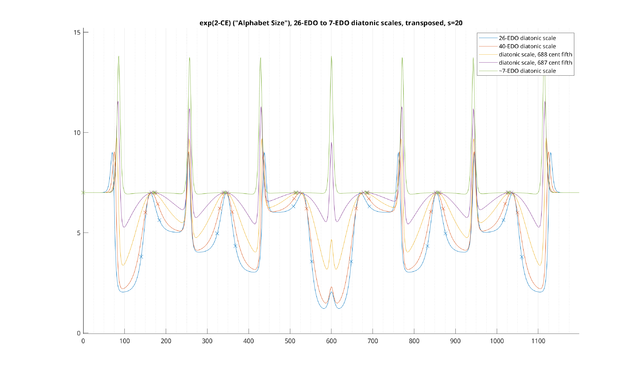

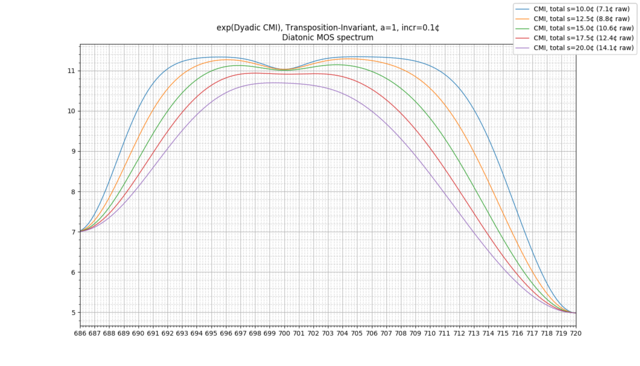

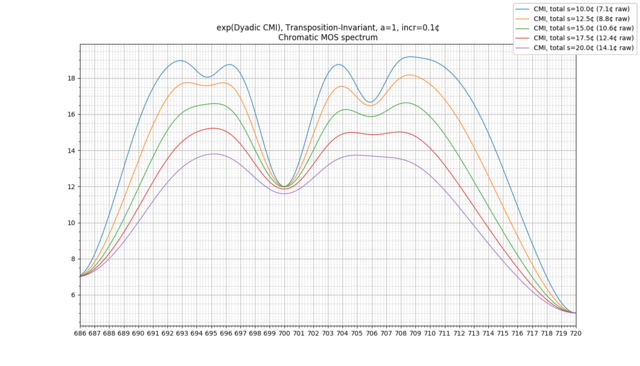

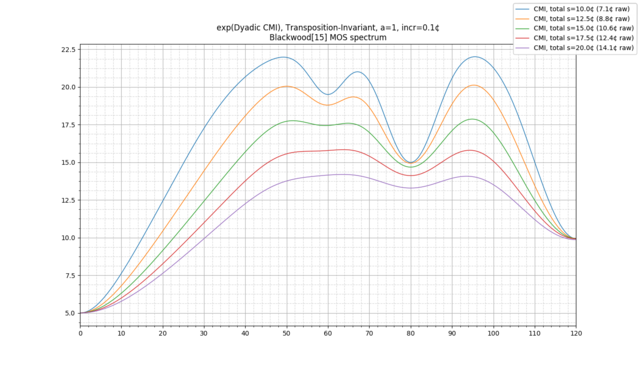

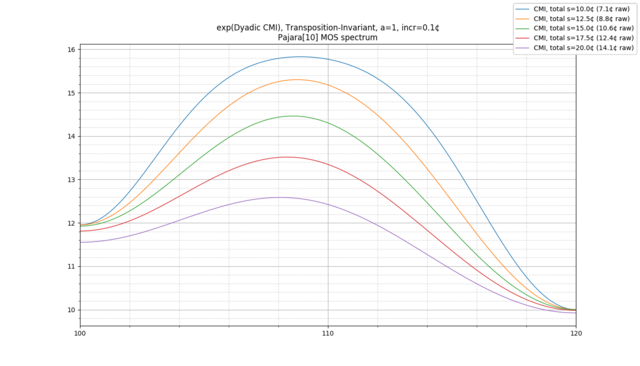

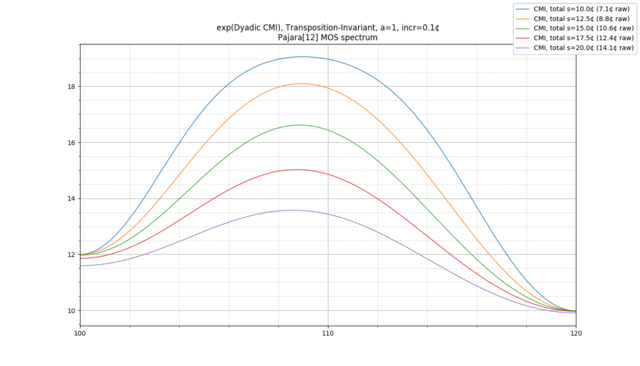

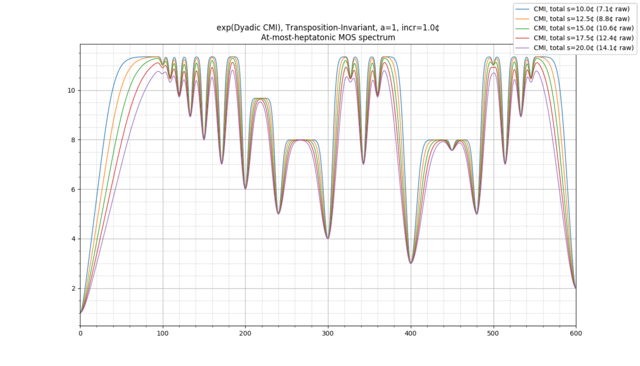

Next, we will look at what happens to this plot as we change the tuning of the diatonic scale.

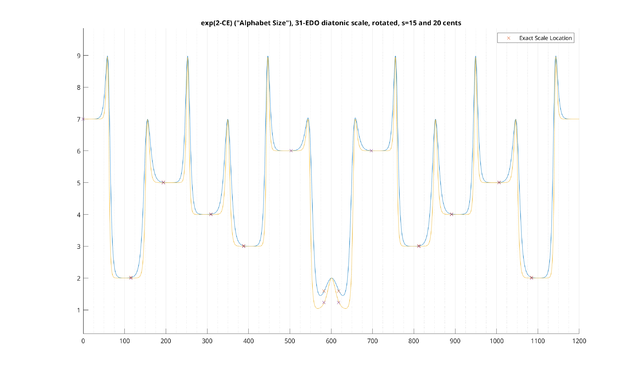

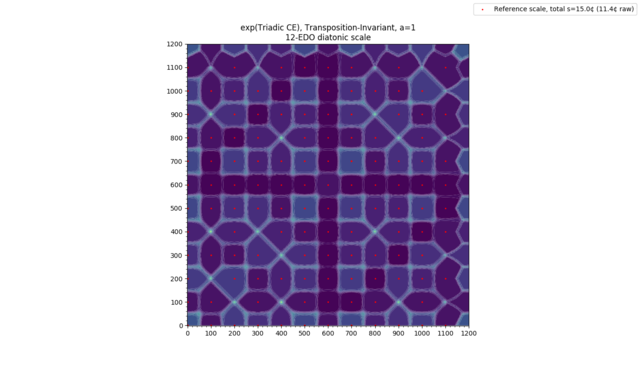

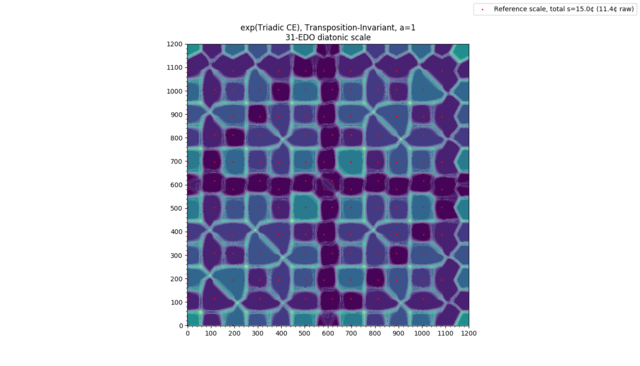

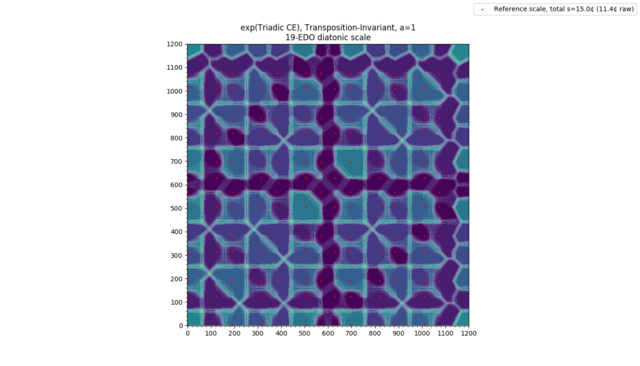

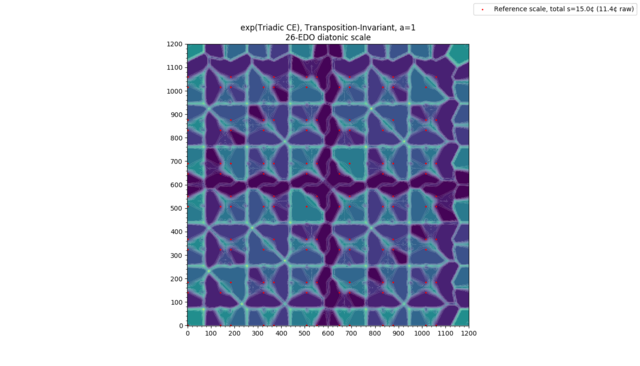

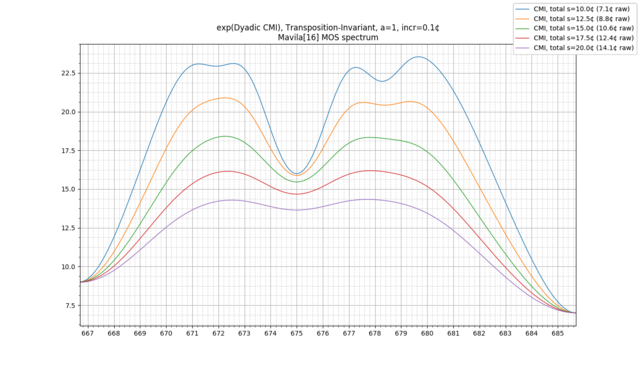

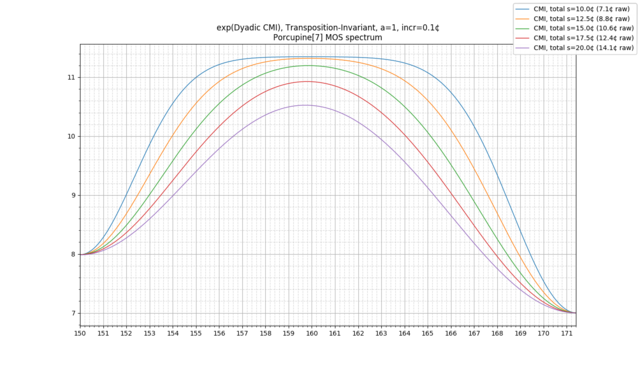

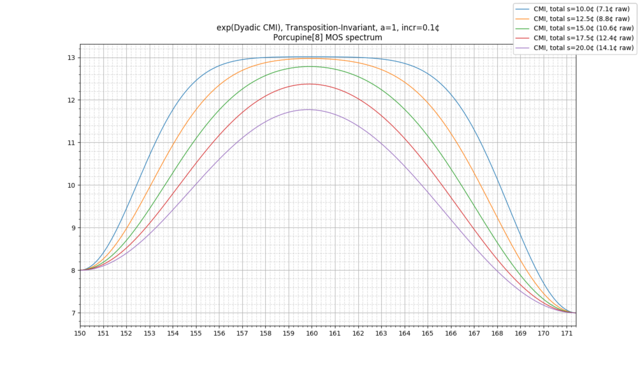

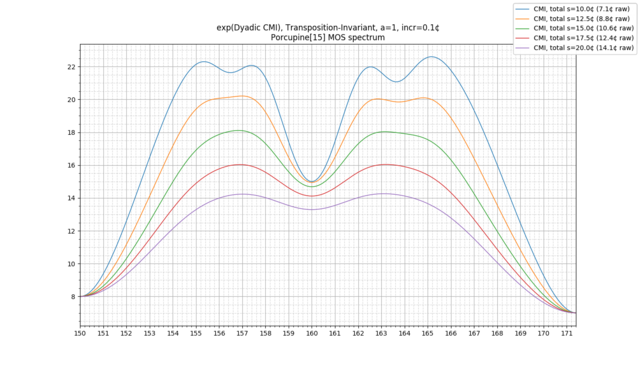

Example: 31-EDO diatonic scale, transpositionally-invariant

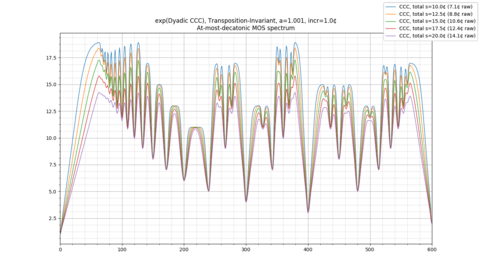

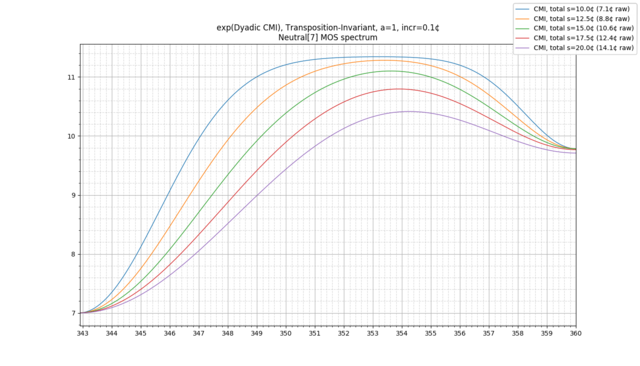

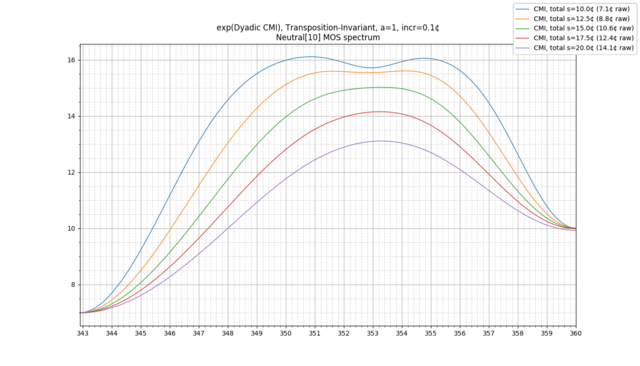

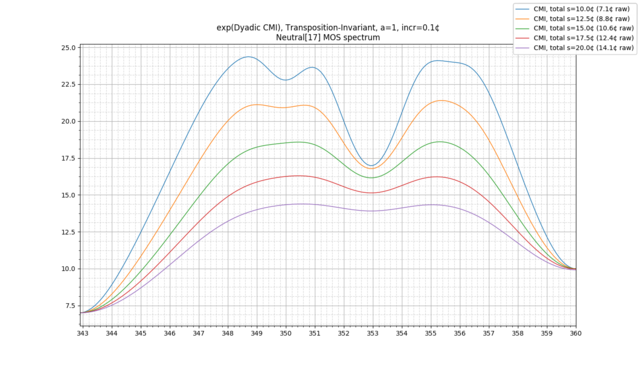

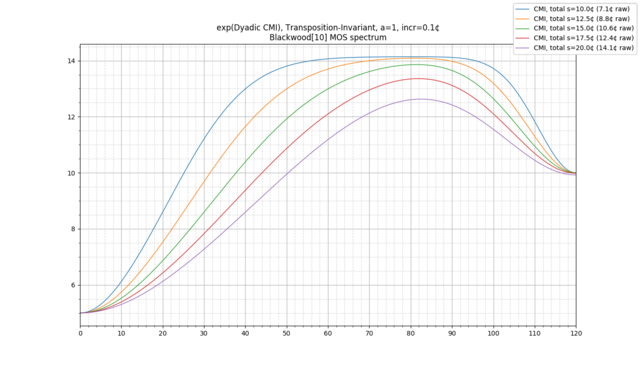

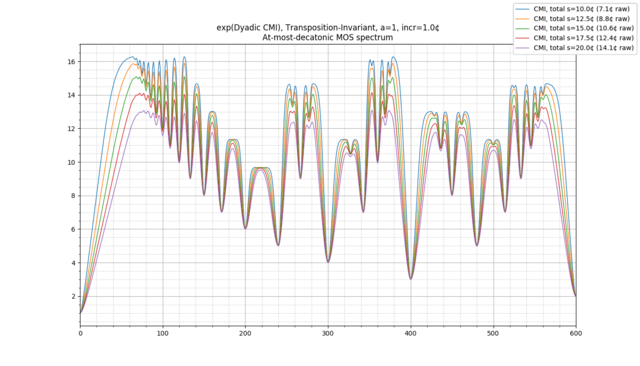

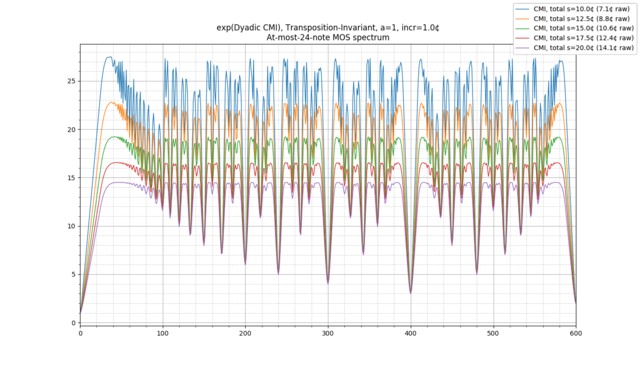

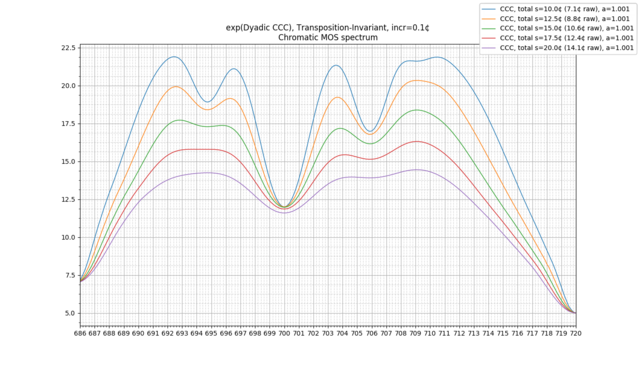

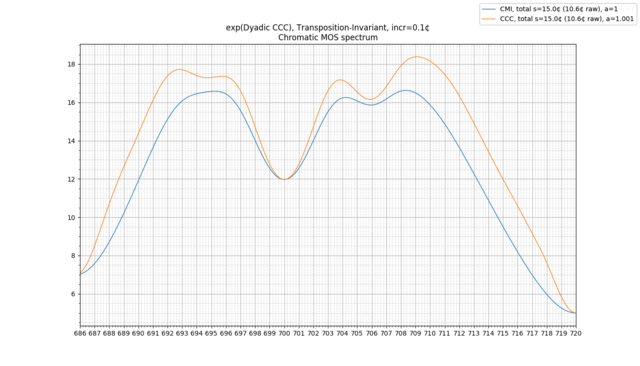

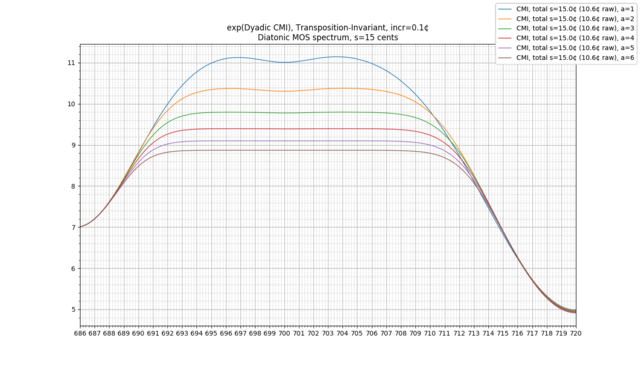

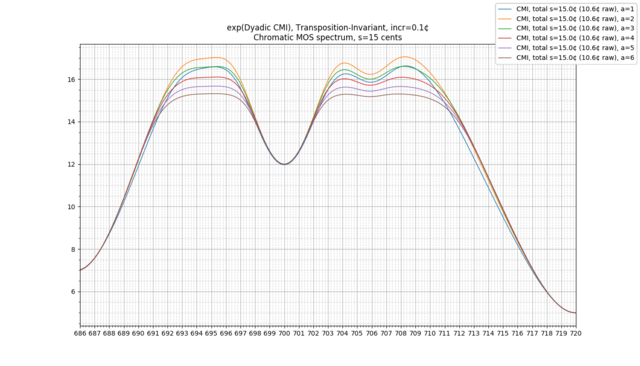

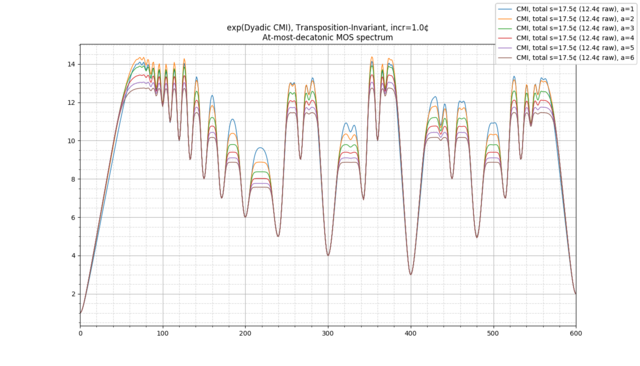

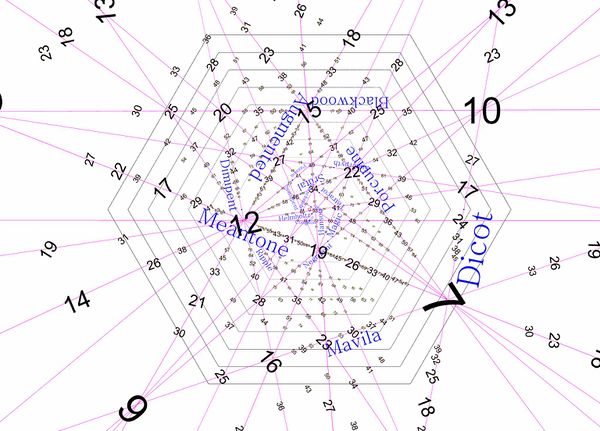

Now, let's consider a different example: the 31-EDO diatonic scale, made transpositionally-invariant. This time, we will plot the scale for "total" [math]s[/math] both at 15 cents (in blue) and 20 cents (in orange), or equivalently [math]s[/math] values of 10.6 cents per note and 14.1 cents per note, respectively: